Hello everybody,

I am trying to deploy a real-time endpoint from a registered MLflow model produced by a TensorFlow training job.

In this repository, you will find the training scripts:

https://github.com/antigones/py-hands-ml-tf/tree/main/azure_ml/job_script

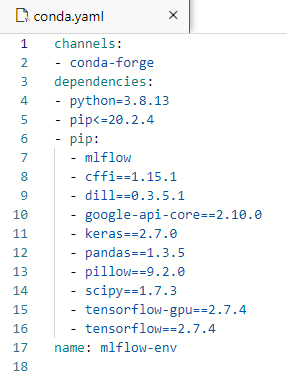

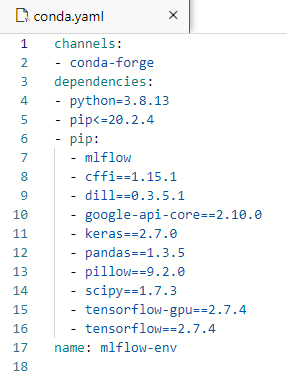

The job outputs an MLflow model together with its conda environment YAML file.

When I try to deploy the model to a real-time endpoint, I get the following error (full log attached):

257528-azure-ml-deploy-error.txt

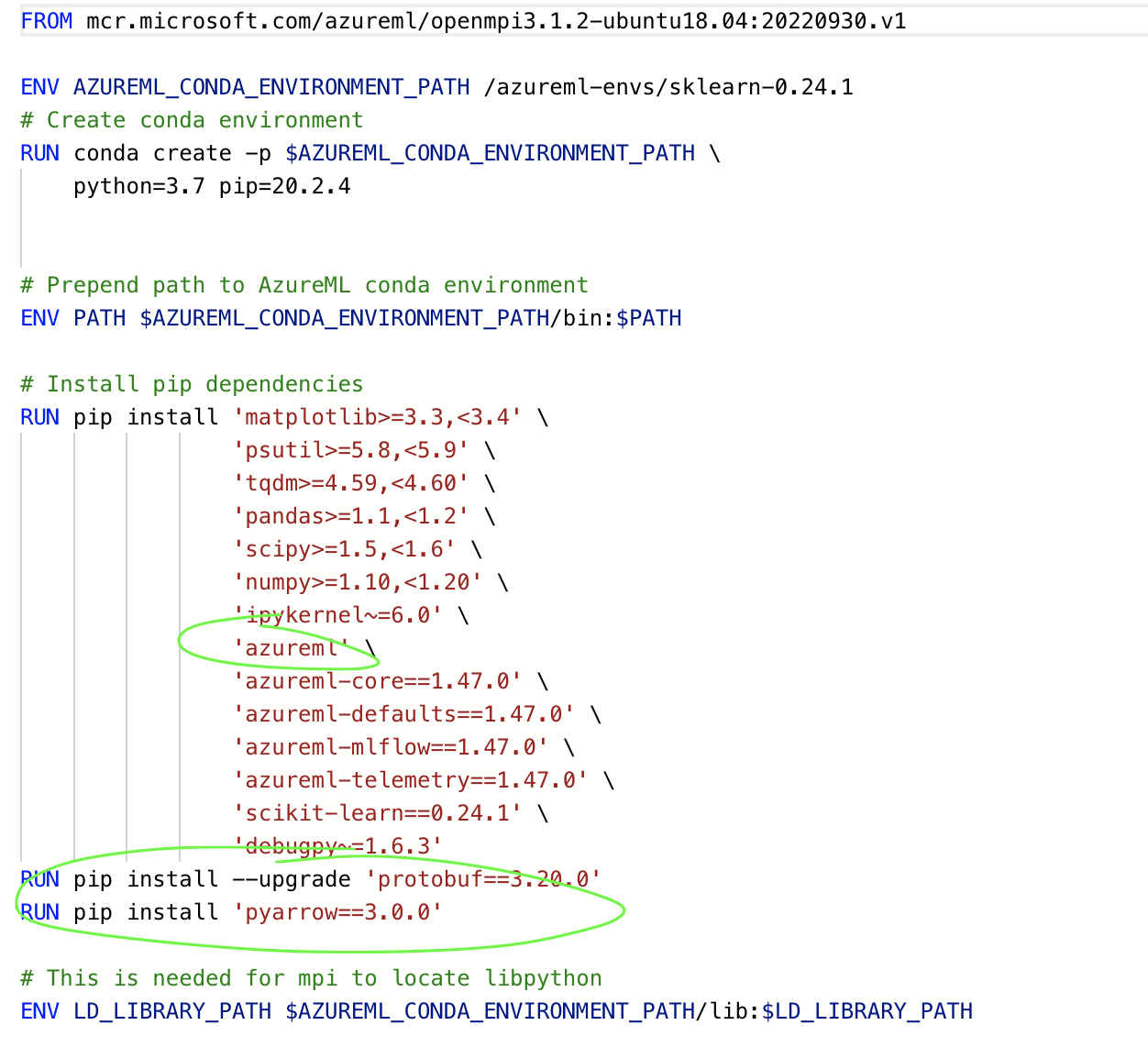

It seems to be a protobuf-related error raised while the model is being loaded:

    File "/opt/miniconda/envs/userenv/lib/python3.8/site-packages/google/protobuf/descriptor.py", line 560, in __new__
    _message.Message._CheckCalledFromGeneratedFile()
    TypeError: Descriptors cannot not be created directly.
    If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.
    If you cannot immediately regenerate your protos, some other possible workarounds are:
    1. Downgrade the protobuf package to 3.20.x or lower.
    2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

    More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates
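
To make the two workarounds from the message concrete, here is a minimal sketch of how I understand them. The helper names (`is_affected_protobuf`, `installed_protobuf_version`, `force_pure_python_protobuf`) are my own and purely illustrative. I am also assuming that "affected" means the protobuf 4.x series, since the message recommends 3.20.x or lower.

```python
import os
from importlib import metadata  # standard library since Python 3.8


def is_affected_protobuf(version: str) -> bool:
    """Return True for protobuf 4.x or newer (assumption: these are the
    releases that reject generated code older than protoc 3.19.0)."""
    major = int(version.split(".")[0])
    return major >= 4


def installed_protobuf_version():
    """Return the installed protobuf version string, or None if absent."""
    try:
        return metadata.version("protobuf")
    except metadata.PackageNotFoundError:
        return None


def force_pure_python_protobuf() -> None:
    """Workaround 2 from the error message. This only has an effect if it
    runs before google.protobuf is imported for the first time."""
    os.environ["PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION"] = "python"


if __name__ == "__main__":
    version = installed_protobuf_version()
    if version is None:
        print("protobuf is not installed in this environment")
    elif is_affected_protobuf(version):
        print(f"protobuf {version} is newer than 3.20.x; workaround 1 would apply")
    else:
        print(f"protobuf {version} is 3.20.x or lower")
```

Running this inside the image used by the endpoint would at least confirm which protobuf version ends up installed there.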

The environment is deployed automatically (the scoring script is also generated).
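
Since the environment is built for me, my assumption is that the only place to apply the "downgrade protobuf" workaround is the conda environment file stored with the model. Below is a minimal sketch of that idea, working on the environment as a plain Python dict. The function name `pin_protobuf`, the pin `protobuf<3.21`, and the example environment are hypothetical and are not the actual file produced by my job.

```python
import copy

# "3.20.x or lower", as recommended by the error message
PROTOBUF_PIN = "protobuf<3.21"


def pin_protobuf(conda_env: dict, pin: str = PROTOBUF_PIN) -> dict:
    """Return a copy of a conda environment dict with protobuf pinned
    in its pip section. The input dict is left unmodified."""
    env = copy.deepcopy(conda_env)
    deps = env.setdefault("dependencies", [])

    # Locate the {"pip": [...]} entry, creating it if it is missing.
    pip_section = next(
        (d for d in deps if isinstance(d, dict) and "pip" in d), None
    )
    if pip_section is None:
        pip_section = {"pip": []}
        deps.append(pip_section)

    # Drop any existing protobuf requirement, then append the pin.
    pip_section["pip"] = [
        req for req in pip_section["pip"]
        if not req.lower().startswith("protobuf")
    ]
    pip_section["pip"].append(pin)
    return env


# Hypothetical environment, shaped like the conda file saved with an MLflow model
example_env = {
    "name": "mlflow-env",
    "channels": ["conda-forge"],
    "dependencies": ["python=3.8", "pip", {"pip": ["mlflow", "tensorflow"]}],
}

pinned_env = pin_protobuf(example_env)
print(pinned_env["dependencies"][-1]["pip"])
```

The resulting dict would still have to be written back to the YAML file and the model registered again, and I have not verified that the endpoint picks up the change.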

I have also tried different images, with a different Python version (3.7) and a different TensorFlow version (2.4), with no luck.

How can I solve this issue?

Thank you in advance for your support.