Hello @Ajay Prasadh Viswanathan ,

Welcome to the Microsoft Q&A platform.

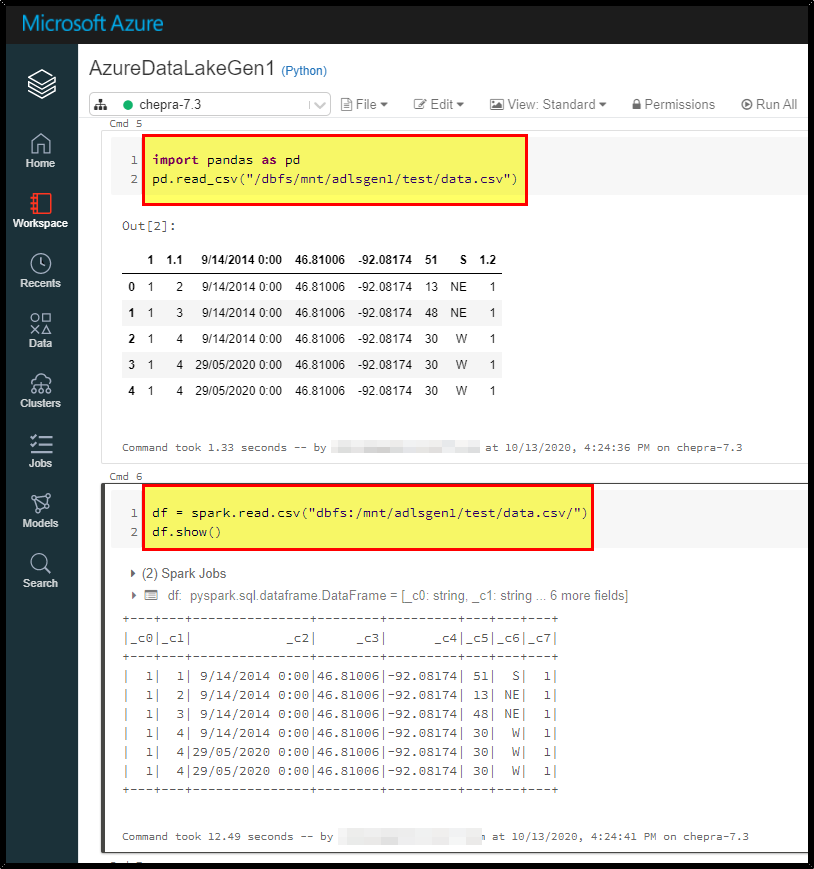

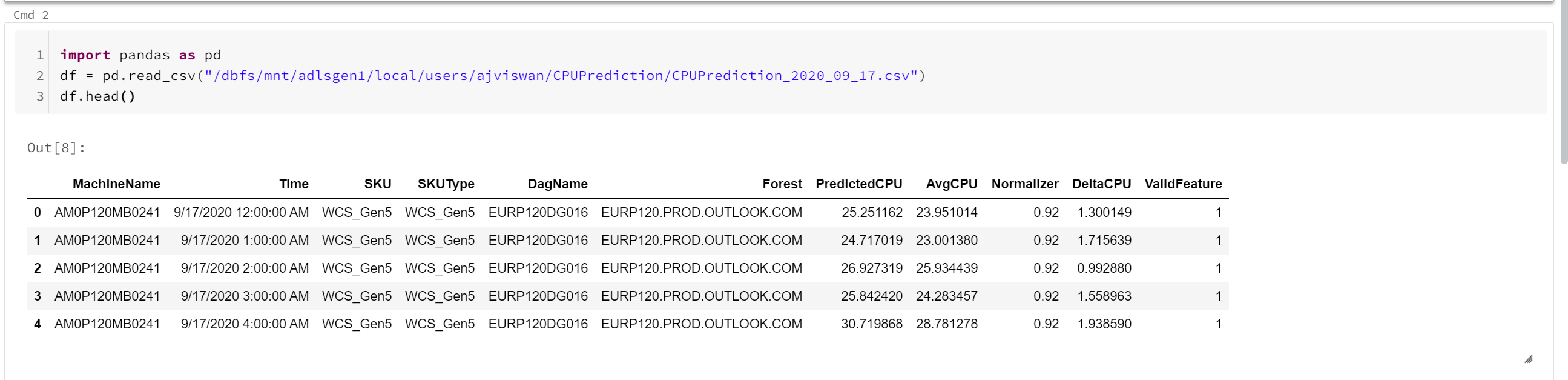

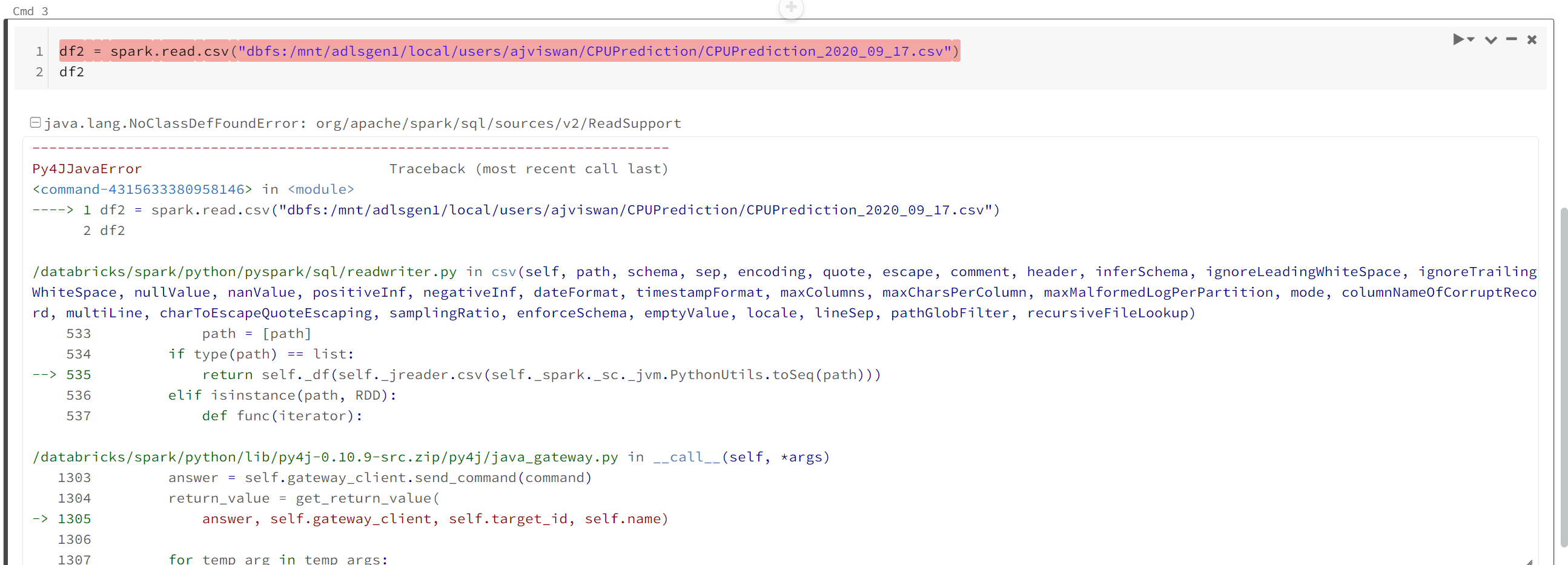

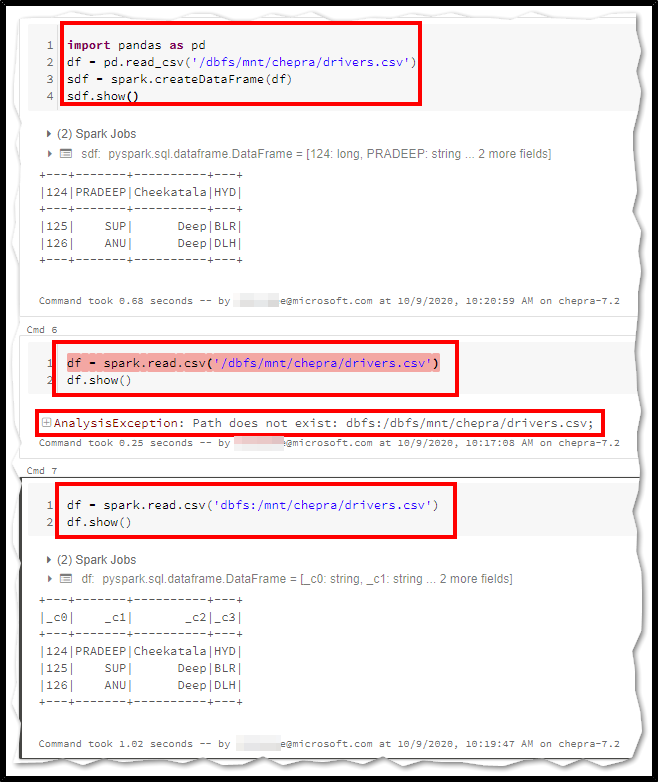

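To read from a mount point with Spark methods, don't use the `/dbfs/mnt` prefix, as in `/dbfs/mnt/ajviswan/forest_efficiency/2020-04-26_2020-05-26.csv`. Use the `dbfs:/mnt` prefix instead: `dbfs:/mnt/ajviswan/forest_efficiency/2020-04-26_2020-05-26.csv`. The `/dbfs/...` form is the local file system view of DBFS on the driver and is meant for local file APIs, whereas Spark readers resolve paths against DBFS itself.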
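As a rough illustration of the path change, here is a minimal sketch. The helper `to_spark_path` is a hypothetical name of my own, not a Databricks API, and the commented-out read assumes a Databricks notebook where `spark` is already defined and the CSV has a header row.

```python
def to_spark_path(path: str) -> str:
    """Rewrite a local-style /dbfs/... path to the dbfs:/... form.

    Hypothetical helper for illustration only; paths that do not start
    with /dbfs/ are returned unchanged.
    """
    prefix = "/dbfs/"
    if path.startswith(prefix):
        return "dbfs:/" + path[len(prefix):]
    return path


local_path = "/dbfs/mnt/ajviswan/forest_efficiency/2020-04-26_2020-05-26.csv"
spark_path = to_spark_path(local_path)
print(spark_path)

# In a Databricks notebook (where `spark` already exists) you could then try:
# df = spark.read.csv(spark_path, header=True)  # header=True is an assumption about the file
# display(df)
```

You can also simply type the `dbfs:/mnt/...` path by hand; the helper is only handy if the same path is also used with local file APIs elsewhere in the notebook.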

Hope this helps. Do let us know if you have any further queries.
