Logic Apps unfortunately doesn't have a setting for splitting a line-separated array currently, so there is a bit of parsing that needs to happen to support converting them. It may be simpler to grab the data from the API, but either is fine.

The preview version of Logic Apps will let you do the split in JS, but there isn't yet a consumption billing option for that, so probably not going that route for something small like this. The current version allows you to run code within an integration account, which has the same issue.

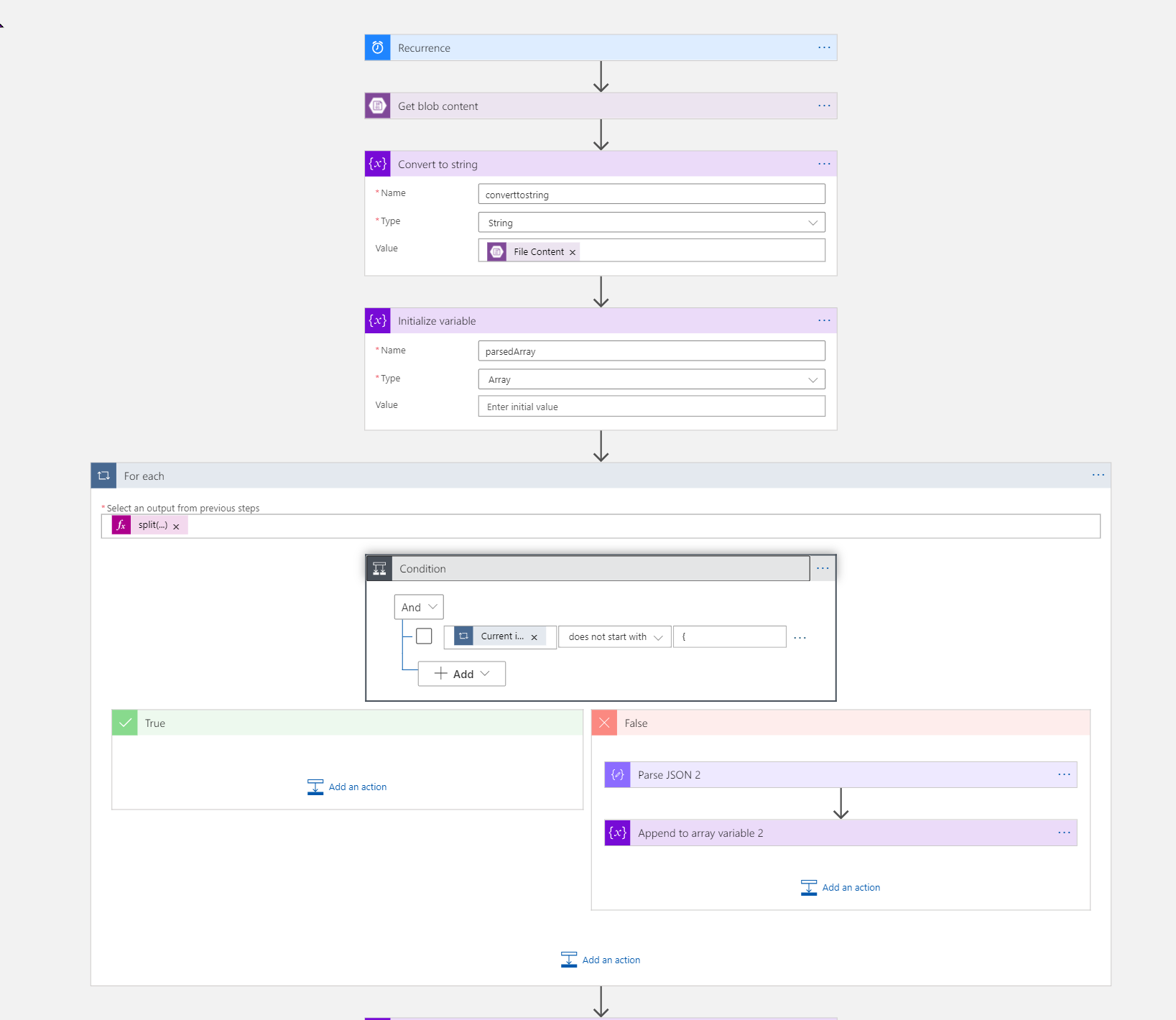

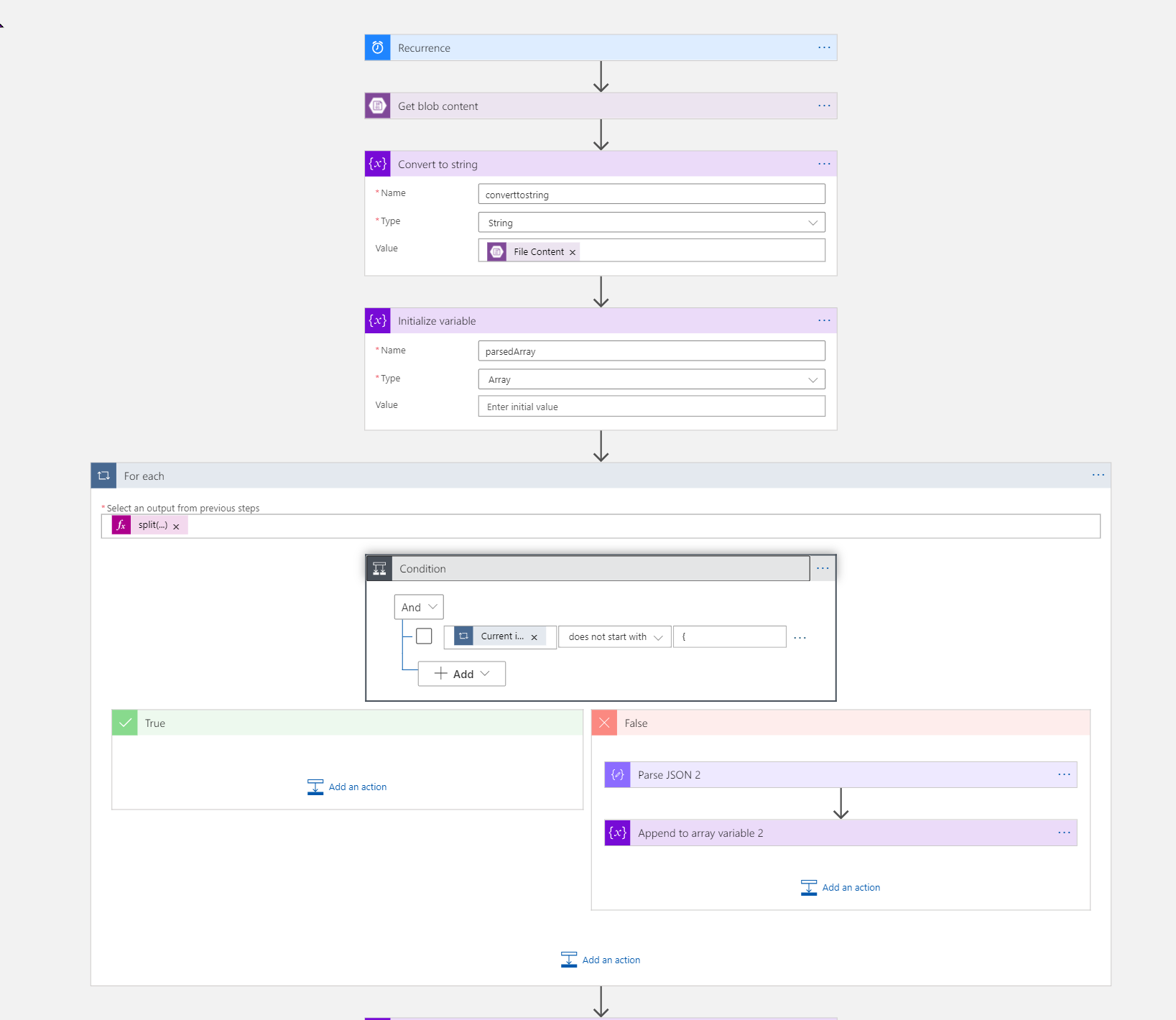

Here is the option I put together- there are usually a few different ways that will work.

- Parse the contents into a string to convert from the raw base-64 encode to something that we can work with later.

- Set up an array variable to hold values as they are parsed.

- Run a loop splitting the string on the newline character. You must edit the expression in code view. The UI isn't able to handle the newline character properly. The correct expression is

@split(variables('converttostring'), '\r\n')

- The last line is blank. so adding a check to make sure that the first character is '{' like we expect

- Parse the JSON based on whatever properties you need to pull. When generating from sample I had Logic Apps set a couple properties to integer instead of number, so just be mindful of that.

- Add the parsed data to the array

- Whatever else you want to do to the data and load it into SQL. Depending on what transformations you want you may want to put some of them before adding them to the array

Here's the JSON schema with my storage connection parameters removed

{

"definition": {

"$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",

"actions": {

"Convert_to_string": {

"inputs": {

"variables": [

{

"name": "converttostring",

"type": "string",

"value": "@{body('Get_blob_content')}"

}

]

},

"runAfter": {

"Get_blob_content": [

"Succeeded"

]

},

"type": "InitializeVariable"

},

"For_each": {

"actions": {

"Condition": {

"actions": {},

"else": {

"actions": {

"Append_to_array_variable_2": {

"inputs": {

"name": "parsedArray",

"value": "@body('Parse_JSON_2')"

},

"runAfter": {

"Parse_JSON_2": [

"Succeeded"

]

},

"type": "AppendToArrayVariable"

},

"Parse_JSON_2": {

"inputs": {

"content": "@items('For_each')",

"schema": {

"properties": {

"average": {

"type": "number"

},

"count": {

"type": "number"

},

"metricName": {

"type": "string"

},

"resourceId": {

"type": "string"

},

"time": {

"type": "string"

},

"timeGrain": {

"type": "string"

},

"total": {

"type": "number"

}

},

"type": "object"

}

},

"runAfter": {},

"type": "ParseJson"

}

}

},

"expression": {

"and": [

{

"not": {

"startsWith": [

"@items('For_each')",

"{"

]

}

}

]

},

"runAfter": {},

"type": "If"

}

},

"foreach": "@split(variables('converttostring'), '\r\n')",

"runAfter": {

"Initialize_variable": [

"Succeeded"

]

},

"type": "Foreach"

},

"Get_blob_content": {

"inputs": {

"host": {

"connection": {

"name": "@parameters('$connections')['azureblob']['connectionId']"

}

},

"method": "get",

"path": "/datasets/default/files/@{encodeURIComponent(encodeURIComponent('XXXXXXXX'))}/content",

"queries": {

"inferContentType": true

}

},

"metadata": {

"JTJmdGVzdCUyZlBUMUguanNvbg==": "/test/PT1H.json"

},

"runAfter": {},

"type": "ApiConnection"

},

"Initialize_variable": {

"inputs": {

"variables": [

{

"name": "parsedArray",

"type": "array"

}

]

},

"runAfter": {

"Convert_to_string": [

"Succeeded"

]

},

"type": "InitializeVariable"

},

"Initialize_variable_2": {

"inputs": {

"variables": [

{

"name": "testArray",

"type": "array",

"value": "@variables('parsedArray')"

}

]

},

"runAfter": {

"For_each": [

"Succeeded"

]

},

"type": "InitializeVariable"

}

},

"contentVersion": "1.0.0.0",

"outputs": {},

"parameters": {

"$connections": {

"defaultValue": {},

"type": "Object"

}

},

"triggers": {

"Recurrence": {

"recurrence": {

"frequency": "Week",

"interval": 3

},

"type": "Recurrence"

}

}

},

"parameters": {

"$connections": {

"value": {

"azureblob": {

"connectionId": "XXXXXXXX",

"connectionName": "azureblob",

"id": "XXXXXXXX"

}

}

}

}

}