Hi everyone,

I am using the YouTube Analytics and Reporting API to get performance data from a YouTube channel and to store it via Azure Data Factory (ADF) programmatically. From the YouTube API, I get the following JSON output format:

    {
      "kind": "youtubeAnalytics#resultTable",
      "columnHeaders": [
        {
          "name": string,
          "dataType": string,
          "columnType": string
        },
      ],
      "rows": [
        [
          {value}, {value}, ...
        ]
      ]
    }

See link here: https://developers.google.com/youtube/analytics/reference/reports/query#response

As a first step, I used the copy activity to copy the JSON into the blob storage. Everything is fine so far. Next, I wanted to create a data flow that flattens the JSON and writes the values into derived columns before saving them in a data sink.

Issue #1

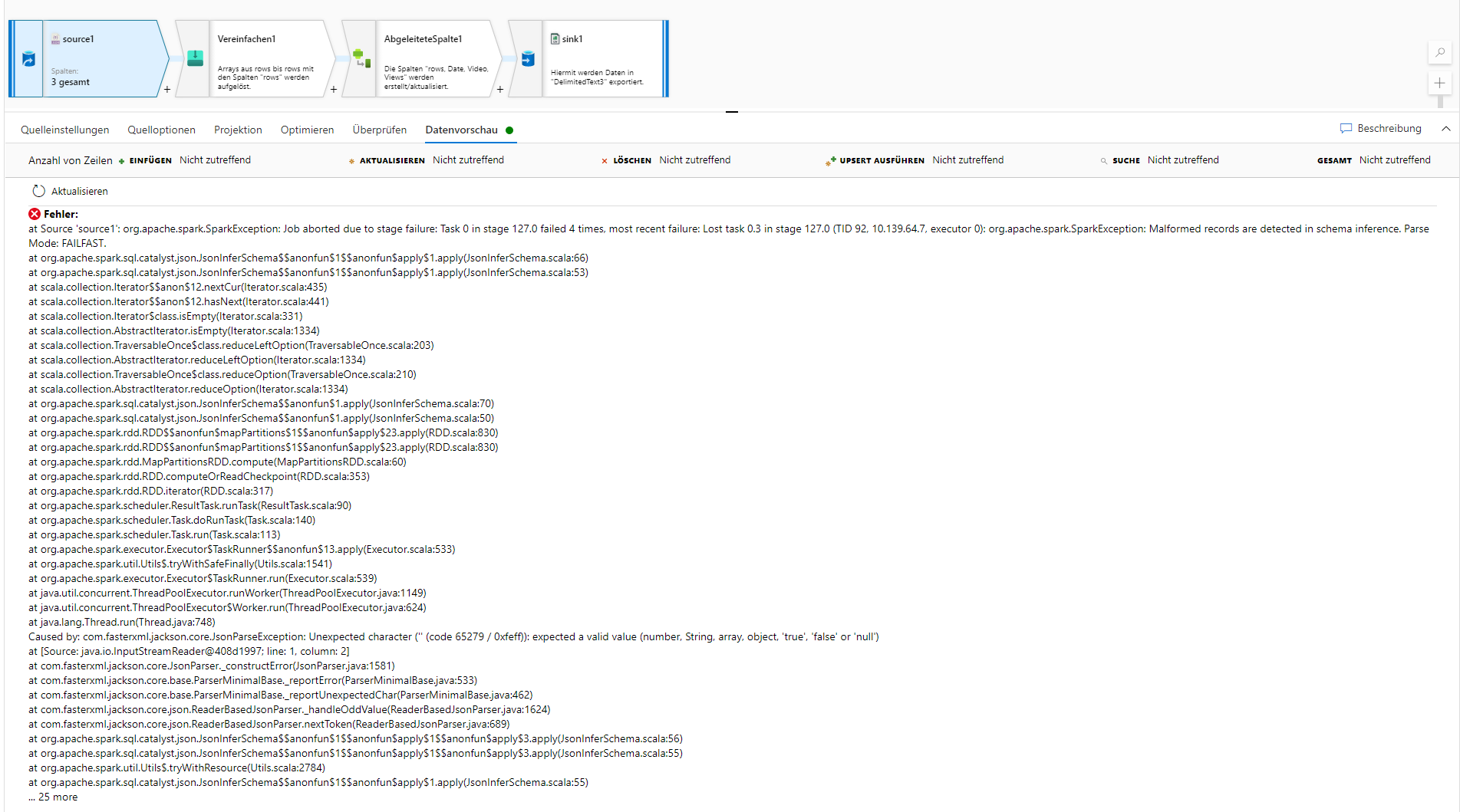

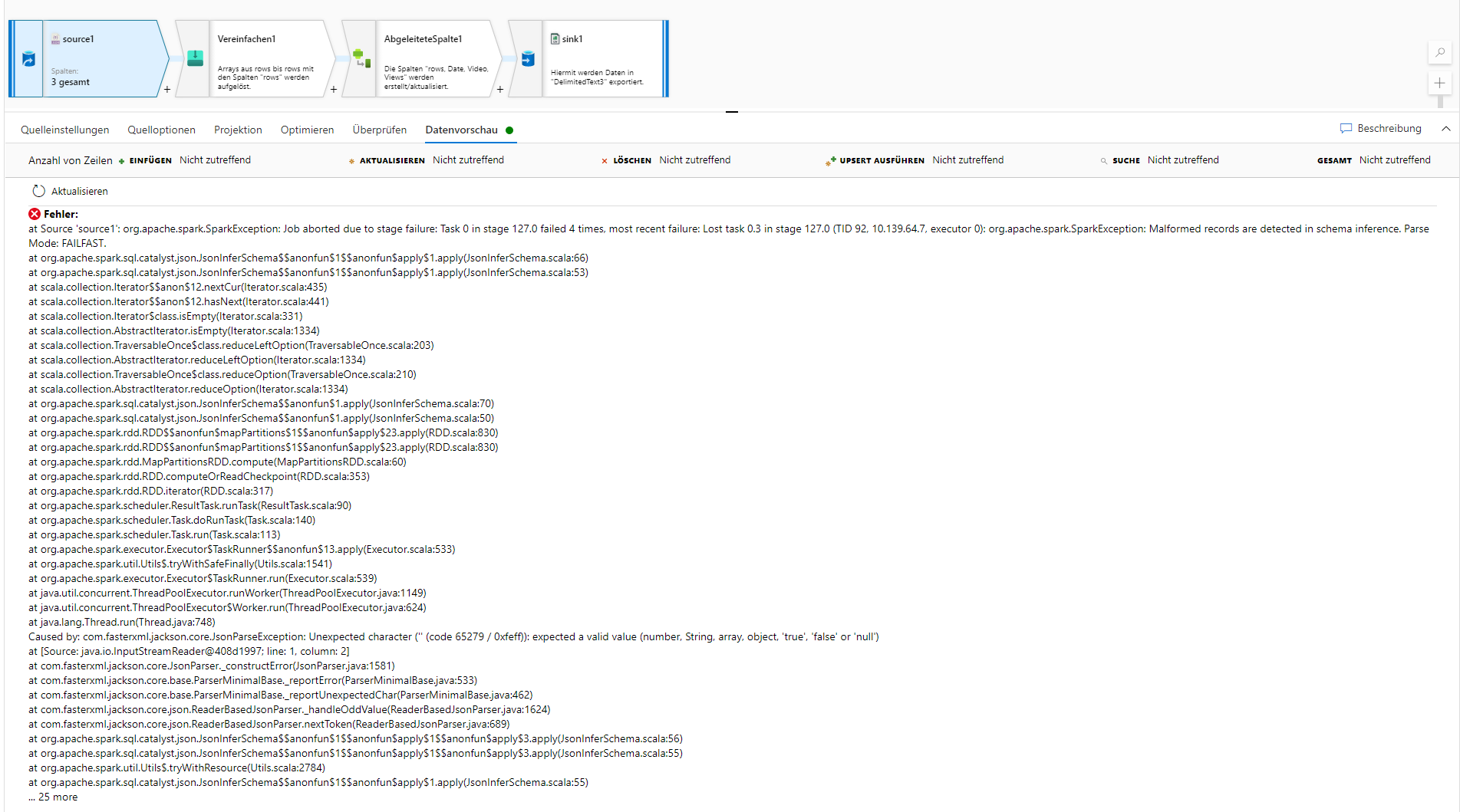

In the first step of my data flow (defining the source), I select the JSON from the blob storage. When I click on data preview, I get this error message (see the screenshots above): SparkException: Malformed records are detected in schema inference. Parse Mode: FAILFAST

I checked the JSON on https://jsonformatter.curiousconcept.com/ and it seems to be valid, so I don't understand why ADF cannot read it properly.

I realized that ADF is wrapping my whole JSON in [ ] brackets, which is possibly causing the Spark exception. When I delete the [ ] brackets manually by editing the JSON in the blob storage (to get the native JSON structure of the API back again), the data preview works as expected. In a nutshell: ADF is adding something which it later on does not like any longer, hmm. Any ideas what can be done here?
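To make the manual workaround concrete, here is a minimal Python sketch of what I am doing by hand. It runs outside ADF; the sample payload and the helper name `unwrap_single_document` are made up for illustration and are not part of ADF or the YouTube API.

```python
import json


def unwrap_single_document(text: str) -> str:
    """Strip an outer one-element array around a JSON object.

    If the text is a JSON array holding exactly one object, return that
    object serialized on its own; otherwise return the text unchanged.
    """
    parsed = json.loads(text)
    if isinstance(parsed, list) and len(parsed) == 1 and isinstance(parsed[0], dict):
        return json.dumps(parsed[0])
    return text


# Hypothetical sample of the file as it lands in blob storage (wrapped in [ ]).
wrapped = '[{"kind": "youtubeAnalytics#resultTable", "columnHeaders": [], "rows": []}]'
unwrapped = unwrap_single_document(wrapped)
```

After this step, the file content is the single JSON object that the API originally returned, which is the form the data preview accepts in my case.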

Issue #2

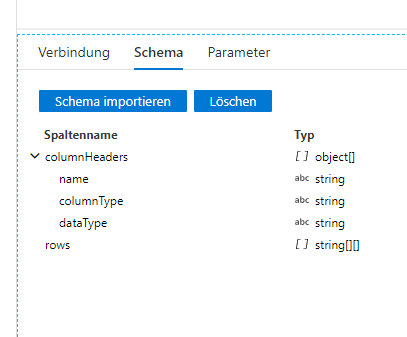

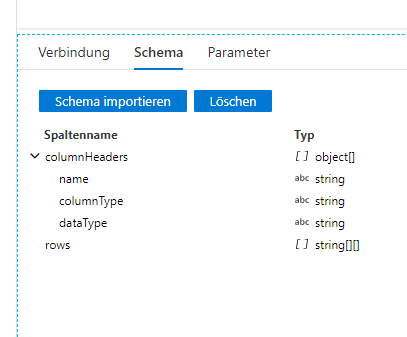

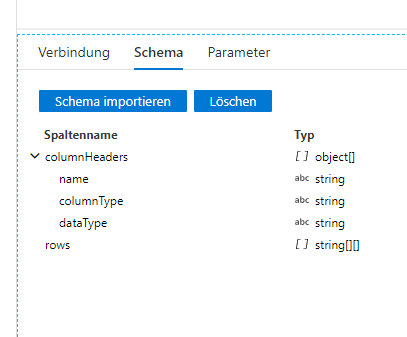

Once the JSON is parsed successfully, I noticed that "rows" is not defined as an object but as an array (in the data source linked service it is even defined as a string), see both screenshots. Somehow, it should be defined as objects so that I can access the values within the rows, as is possible for "columnHeaders". I hope you get my pain point. Can anyone please tell me what I need to do here?
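To show the shape I am after, here is a small Python sketch (again outside ADF, not a data flow solution). It assumes that each inner array in "rows" lists its values in the same order as "columnHeaders", as the response format above suggests. The sample values and the function name `rows_to_records` are invented for this example.

```python
from typing import Any


def rows_to_records(result: dict[str, Any]) -> list[dict[str, Any]]:
    """Pair each positional row value with the matching column header name."""
    names = [header["name"] for header in result.get("columnHeaders", [])]
    return [dict(zip(names, row)) for row in result.get("rows", [])]


# Hypothetical response with made-up values, following the format above.
sample = {
    "kind": "youtubeAnalytics#resultTable",
    "columnHeaders": [
        {"name": "day", "dataType": "STRING", "columnType": "DIMENSION"},
        {"name": "views", "dataType": "INTEGER", "columnType": "METRIC"},
    ],
    "rows": [["2021-01-01", 10], ["2021-01-02", 12]],
}

records = rows_to_records(sample)
```

Each entry in `records` is then an object keyed by column name, which is what I would like to end up with in the data flow before writing to the sink.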

Looking forward to your replies. Thanks in advance!