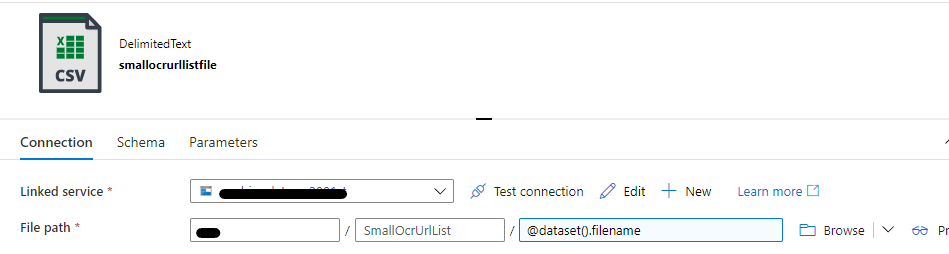

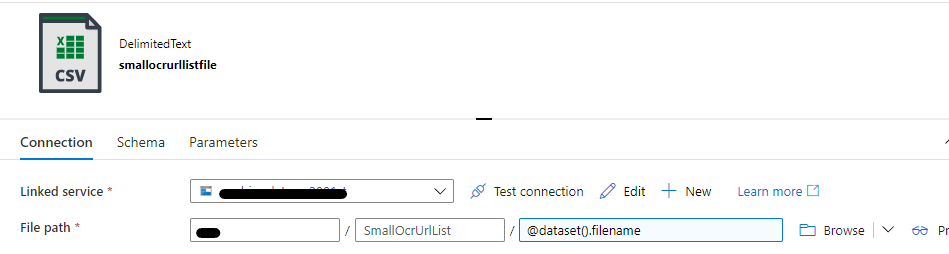

I am trying to set up a data flow in ADF. The source takes its input from a dataset, and the dataset has a parameter that defines the file name. When I test the connection without using the parameter, it succeeds. But when I set the parameter, the test fails and throws the exception below.

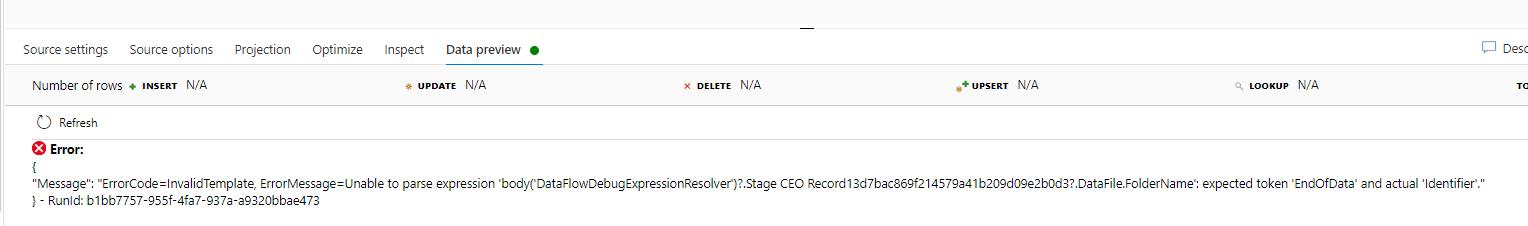

Connection failed

    {
        "Message": "ErrorCode=InvalidTemplate, ErrorMessage=Unable to parse expression 'body('DataFlowDebugExpressionResolver')?.Get links from SmallOcrUrlListTestConnection1463b7d26602d4a4d9087b926d630631e?.Output.outputfolder': expected token 'EndOfData' and actual 'Identifier'."
    } - RunId: 78273469-cfdc-4a1c-8d3c-dbe415945deb

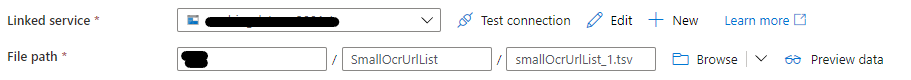

If I set a static file name, the connection test succeeds.

In both cases, I can preview the data in the dataset.

Dataset code:

    {
        "name": "smallocrurllistfile",
        "properties": {
            "linkedServiceName": {
                "referenceName": "REFERENCENAME",
                "type": "LinkedServiceReference"
            },
            "parameters": {
                "filename": {
                    "type": "string",
                    "defaultValue": "smallOcrUrlList_1.tsv"
                }
            },
            "annotations": [],
            "type": "DelimitedText",
            "typeProperties": {
                "location": {
                    "type": "AzureBlobStorageLocation",
                    "fileName": {
                        "value": "@dataset().filename",
                        "type": "Expression"
                    },
                    "folderPath": "SmallOcrUrlList",
                    "container": "CONTAINERNAME"
                },
                "columnDelimiter": "\t",
                "escapeChar": "\\",
                "quoteChar": ""
            },
            "schema": [
                {
                    "type": "String"
                },
                {
                    "type": "String"
                },
                {
                    "type": "String"
                }
            ]
        }
    }

Data flow code:

    {
        "name": "Get links from SmallOcrUrlList",
        "properties": {
            "type": "MappingDataFlow",
            "typeProperties": {
                "sources": [
                    {
                        "dataset": {
                            "referenceName": "smallocrurllistfile",
                            "type": "DatasetReference"
                        },
                        "name": "SmallOcrUrlList"
                    }
                ],
                "sinks": [
                    {
                        "dataset": {
                            "referenceName": "filteredSmallOcrUrlFile",
                            "type": "DatasetReference"
                        },
                        "name": "Output"
                    }
                ],
                "transformations": [
                    {
                        "name": "SearchKeyword"
                    }
                ],
                "script": "parameters{\n\tKeyword as string\n}\nsource(output(\n\t\timage_key as string,\n\t\timage_url as string,\n\t\tOCRText as string\n\t),\n\tallowSchemaDrift: true,\n\tvalidateSchema: false,\n\tignoreNoFilesFound: false) ~> SmallOcrUrlList\nSmallOcrUrlList filter(instr(lower(OCRText), $Keyword) > 0) ~> SearchKeyword\nSearchKeyword sink(allowSchemaDrift: true,\n\tvalidateSchema: false,\n\tfilePattern:'links[n].txt',\n\tmapColumn(\n\t\timage_url\n\t),\n\tpartitionBy('roundRobin', 2),\n\tskipDuplicateMapInputs: true,\n\tskipDuplicateMapOutputs: true,\n\theader: (array($Keyword))) ~> Output"
            }
        }
    }

By the way, I am doing this so that the pipeline can use the data flow to batch-process the files in a directory, so I want the data flow to get its input from the output of a 'GetMetadata' activity.
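To make the intended setup concrete, here is a rough sketch of the pipeline I have in mind. It is not my actual pipeline: the pipeline name, the activity names, and the folder dataset `SmallOcrUrlListFolder` are made up for illustration, and the value for the data flow's `Keyword` parameter is left out. The idea is that a 'GetMetadata' activity lists the files, a 'ForEach' activity loops over them, and each iteration passes the file name to the `filename` parameter of the dataset behind the `SmallOcrUrlList` source.

```json
{
    "name": "ProcessSmallOcrUrlLists",
    "properties": {
        "description": "Hypothetical sketch: pipeline, activity, and folder dataset names are assumptions.",
        "activities": [
            {
                "name": "GetFileList",
                "description": "Lists the files in the folder; SmallOcrUrlListFolder is an assumed dataset.",
                "type": "GetMetadata",
                "typeProperties": {
                    "dataset": {
                        "referenceName": "SmallOcrUrlListFolder",
                        "type": "DatasetReference"
                    },
                    "fieldList": ["childItems"]
                }
            },
            {
                "name": "ForEachFile",
                "description": "Runs the data flow once per file returned by GetFileList.",
                "type": "ForEach",
                "dependsOn": [
                    {
                        "activity": "GetFileList",
                        "dependencyConditions": ["Succeeded"]
                    }
                ],
                "typeProperties": {
                    "items": {
                        "value": "@activity('GetFileList').output.childItems",
                        "type": "Expression"
                    },
                    "activities": [
                        {
                            "name": "RunDataFlow",
                            "description": "Passes the current file name to the dataset parameter of the source.",
                            "type": "ExecuteDataFlow",
                            "typeProperties": {
                                "dataflow": {
                                    "referenceName": "Get links from SmallOcrUrlList",
                                    "type": "DataFlowReference",
                                    "datasetParameters": {
                                        "SmallOcrUrlList": {
                                            "filename": {
                                                "value": "@item().name",
                                                "type": "Expression"
                                            }
                                        }
                                    }
                                }
                            }
                        }
                    ]
                }
            }
        ]
    }
}
```

The connection test fails before I even get this far, so I have not been able to check whether this wiring works end to end.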

Please tell me how to solve this problem, thanks.