Hi @bk ,

Thanks for reaching out and sorry for your experience.

As per my analysis this looks like a known limitation when trying to create excel dataset, or have "import schema", "preview data" and "refresh" on dataset, if the excel file is big.

Root Cause:

Today ADF excel in copy does not support streaming read which is limited by third party library/SDK that was used in this connector, it must load whole excel file into memory, then to find customer specified worksheet to read data row by row, cell by cell.

For all the operation ("import schema", "preview data", "refresh") on excel dataset, it is interactive operation, the data must be returned immediately before http request is timeout (100s). For big excel file, these operation cannot be finished in 100s, so timeout issue is raised.

For copy big excel file, it has low performance, network or OOM, it is the same root cause because of streaming read is not supported.

Here are few workarounds suggested by product team

If you want to move/transfer big excel file (>100MB) into other data store using ADF, you can use one of following option to work around it:

- Option-1: Register and online self-hosted integration runtime (SHIR) with powerful machine, then use copy activity to move big excel file into other data store with that IR.

- Option-2: Split big excel file into several small ones (for example, split one 100MB excel file into ten 10MB ones), then use copy activity to move the excel folder.

- Option-3: Use dataflow activity to move big excel file into other data store. In dataflow, the excel has supported streaming read with few CPU/Memory consumption. Based on a perf test, it can move/transfer 1GB excel file in dataflow within 5 minutes while using Azure Integration runtime with 32 cores.

- Option-4: You can manually convert/save big excel file as csv format, then use copy activity to move it.

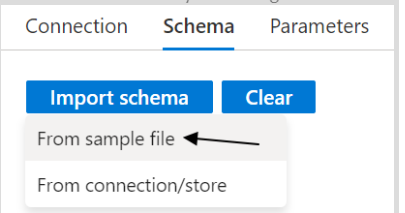

For interactive operation ("import schema", "preview data", "refresh") on excel dataset which has big file, the customer can create one sample excel file which is subset of original file, then use sample file to finish "import schema", "preview data" and "refresh". Later changing the file name back to be original file to deploy this dataset, then this excel dataset can be reused by different activity (copy, lookup, get-metadata…).

Note: If you already know from which worksheet to read data, you can directly check "Edit" of "Sheet name" to input related value.

If you just want to copy the file as-is from SFTP to your ADLS, you could try creating binary dataset for source and sink and that should help copy the same file to your ADLS.

In case if you want to do any transformation on the source file before copying to sink, and as Option-1, Option-2, Option-4 doesn't suit your requirement well, then you will have to first copy the file from your SFTP to staging location (for example lets say Azure Blob) as mentioned in my previous step (using binary dataset) and then use mapping data flow to import schema of that big Excel file (from staging location) and do the required transformation as need.

I have also escalated this issue to ADF product team to call this limitation in public documentation.

Hope the above info helps.

----------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you, this can be beneficial to other community members.