Hi @Ramanathan Dakshina Murthy ,

A possible solution is to create a staging folder inside your Azure Data Lake Storage Gen2 (ADLS Gen2) account and place the delimited files that need to be processed or transformed in it. Processing can be done with a Data Flow in Azure Synapse or Azure Data Factory. If the transformations are too complex for a Data Flow, you could use a notebook instead, either in Databricks or with the integrated Synapse notebooks. The source of the process should import all the delimited files at once, for example with a wildcard: staging_folder\*.csv.

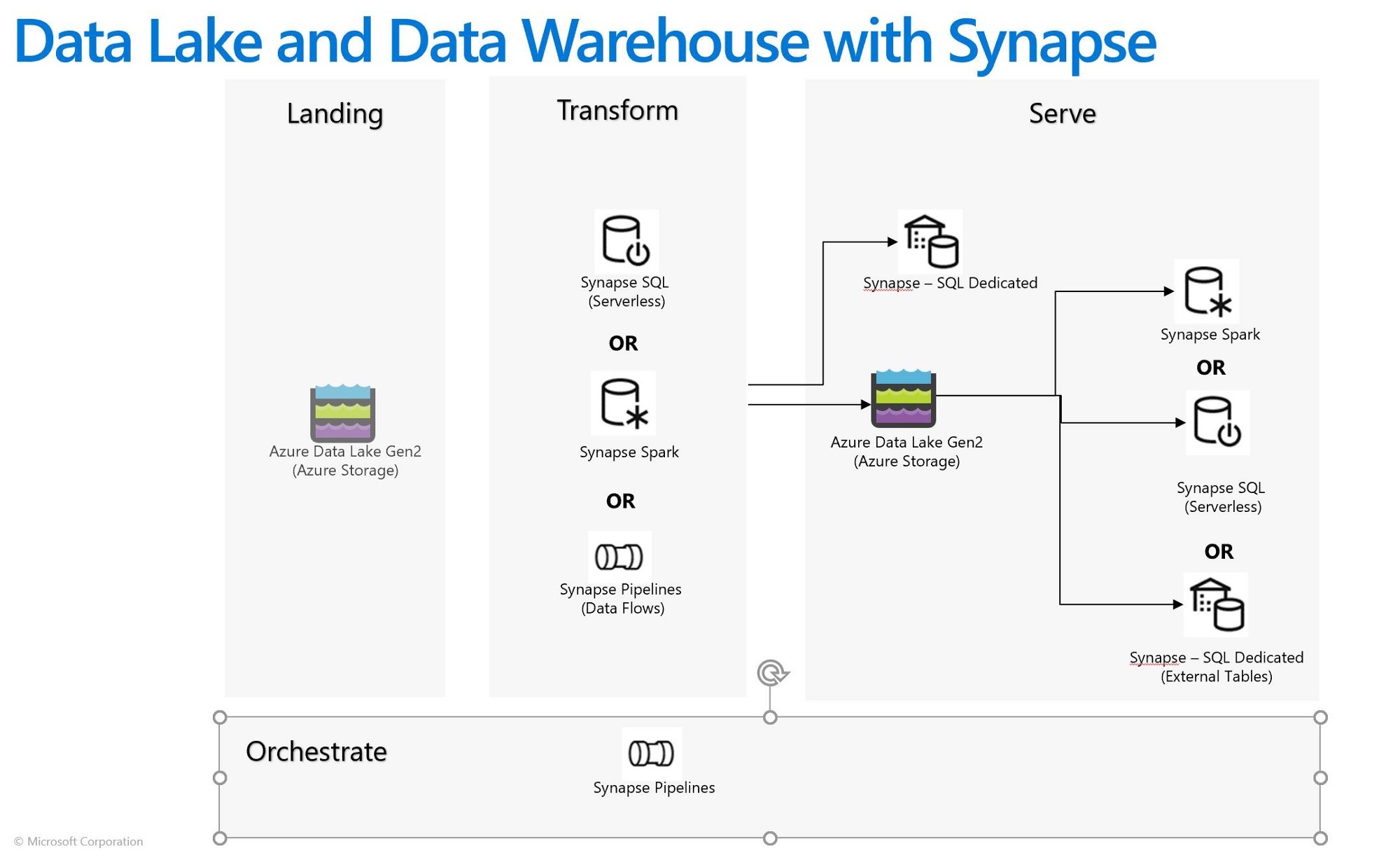

Once the data is loaded into the data flow or data frame, you can process it. After the processing is done, you can store the result as a Delta Lake table in your ADLS Gen2 account. The delimited files should be deleted from the staging folder at this point, so that they are not processed a second time. Finally, you can create a Synapse database view (with the Synapse serverless pool) on top of the Delta Lake table in your ADLS Gen2 account to retrieve the data. I added an image that shows the steps I described in an abstract way.
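
If you go the notebook route, the steps above could look roughly like the sketch below. It is only an illustration under a few assumptions: the function names, the `dropDuplicates()` placeholder transformation, and the header option are mine, not something your data requires. The `spark` session is passed in (it is predefined in Synapse and Databricks notebooks), and the delete function is passed in as well, so you can use whatever your platform offers.

```python
from typing import Callable


def abfss_path(container: str, account: str, folder: str) -> str:
    """Build an abfss:// URI for a folder in ADLS Gen2."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{folder.strip('/')}"


def process_staging(spark, staging_dir: str, delta_dir: str,
                    delete_file: Callable[[str], None]) -> int:
    """Load staged CSV files, append them to a Delta table, then clear staging.

    Returns the number of staging files that were loaded and deleted.
    """
    # Read every delimited file in the staging folder via a wildcard.
    df = spark.read.option("header", True).csv(f"{staging_dir}/*.csv")

    # Remember which files were picked up, so that files arriving
    # later are not deleted without having been processed.
    loaded_files = df.inputFiles()

    # Placeholder transformation (assumption): replace with your own logic.
    cleaned = df.dropDuplicates()

    # Store the result as a Delta Lake table in ADLS Gen2.
    cleaned.write.format("delta").mode("append").save(delta_dir)

    # Delete only after the write succeeded, so a failed run can be retried.
    for path in loaded_files:
        delete_file(path)
    return len(loaded_files)


def serverless_view_sql(view_name: str, delta_url: str) -> str:
    """Return T-SQL that exposes the Delta table as a serverless SQL pool view."""
    return (
        f"CREATE VIEW {view_name} AS\n"
        f"SELECT * FROM OPENROWSET(BULK '{delta_url}', FORMAT = 'DELTA') AS [result];"
    )
```

In a Synapse notebook you could pass something like `lambda p: mssparkutils.fs.rm(p, False)` as `delete_file`, and in Databricks `lambda p: dbutils.fs.rm(p)`. The statement returned by `serverless_view_sql` is meant to be run in a database on the serverless SQL pool, with `delta_url` pointing at the root folder of the Delta table. Note that the wildcard read fails when the staging folder contains no matching files, so you may want to check for that before calling `process_staging`.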

A benefit of Delta Lake is that you can partition your data, which makes queries faster and more cost-effective if you choose the partitions well. It also stores the data as Parquet files, which are more compressed than CSV. This works with hundreds of millions of rows, but if your dataset grows beyond a few billion rows I would recommend replacing the Delta Lake table with a Synapse dedicated SQL pool. A dedicated pool is very expensive compared with a serverless pool, but a lot faster.
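
To make the partitioning point a bit more concrete, here is a small sketch, again with hypothetical names. `write_partitioned` shows how a Spark data frame could be written to a partitioned Delta table, and `partition_folder` only illustrates the `column=value` folder layout that partitioned tables typically use. The `year` and `month` columns are assumptions; pick columns that your queries actually filter on.

```python
from typing import Mapping, Sequence


def write_partitioned(df, delta_dir: str, partition_cols: Sequence[str]) -> None:
    """Append a Spark DataFrame to a Delta table partitioned by the given columns."""
    (df.write.format("delta")
        .mode("append")
        .partitionBy(*partition_cols)
        .save(delta_dir))


def partition_folder(delta_dir: str, values: Mapping[str, object]) -> str:
    """Show the folder a row with these partition values would typically land in."""
    parts = "/".join(f"{col}={val}" for col, val in values.items())
    return f"{delta_dir.rstrip('/')}/{parts}"


print(partition_folder("delta/sales", {"year": 2024, "month": 3}))
# delta/sales/year=2024/month=3
```

A query that filters on `year` and `month` then only has to read the files in the matching folders, which is where the performance and cost benefit comes from. Too many small partitions can hurt instead of help, so low-cardinality columns such as a year or month are usually a safer choice than something like a customer ID.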

Hope this helps.