Hi @Shambhu Rai ,

Thank you for posting query in Microsoft Q&A Platform.

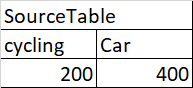

You need to add a dummyColumn to

SourceTableand then perform Unpivot of it and then perform Join withConversion Table

Kindly check below detailed implementation code & screenshots.

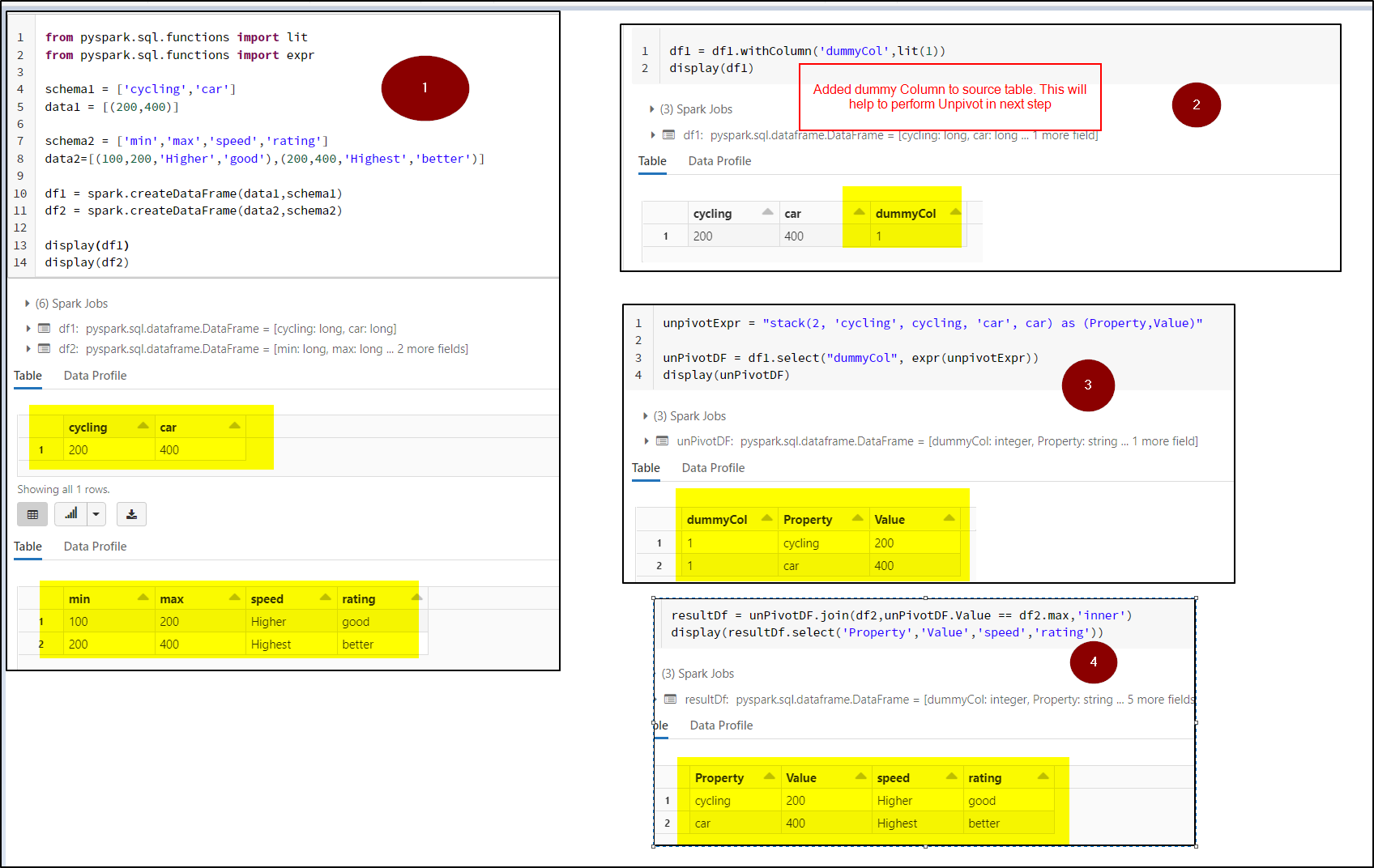

from pyspark.sql.functions import lit

from pyspark.sql.functions import expr

schema1 = ['cycling','car']

data1 = [(200,400)]

schema2 = ['min','max','speed','rating']

data2=[(100,200,'Higher','good'),(200,400,'Highest','better')]

df1 = spark.createDataFrame(data1,schema1)

df2 = spark.createDataFrame(data2,schema2)

display(df1)

display(df2)

df1 = df1.withColumn('dummyCol',lit(1))

display(df1)

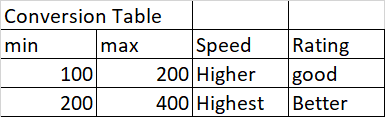

unpivotExpr = "stack(2, 'cycling', cycling, 'car', car) as (Property,Value)"

unPivotDF = df1.select("dummyCol", expr(unpivotExpr))

display(unPivotDF)

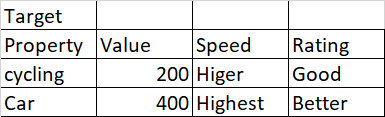

resultDf = unPivotDF.join(df2,unPivotDF.Value == df2.max,'inner')

display(resultDf.select('Property','Value','speed','rating'))

Hope this helps. Please let us know if any further queries.

-------------

Please consider hitting Accept Answer button. Accepted answers help community as well.

]

]

and upvote

and upvote  for the same. And, if you have any further query do let us know.

for the same. And, if you have any further query do let us know.