Quickstart: Create a Data workflow

Note

Data workflows is powered by Apache Airflow.

Apache Airflow is an open-source platform used to programmatically create, schedule, and monitor complex data workflows. You define tasks by using operators and combine those tasks into directed acyclic graphs (DAGs) that represent data pipelines.
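To make the DAG idea concrete, the following minimal sketch models a pipeline as a dependency graph and derives a valid run order from it. It uses only the Python standard library (`graphlib`), not Apache Airflow itself, and the task names (`extract`, `transform`, `validate`, `load`) and the `execution_order` helper are hypothetical, chosen purely for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key is a task, and each value is the set of
# tasks that must finish before that task can start.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(deps):
    """Return one valid run order for the tasks in deps.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    which is why the graph has to be acyclic.
    """
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)  # "extract" comes first and "load" comes last
```

Because `transform` and `validate` depend only on `extract`, either one can run before the other. A scheduler such as Airflow can use this kind of independence to run tasks in parallel.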

Data workflows provides a simple and efficient way to create and manage Apache Airflow environments, so that you can run your data workflows at scale. In this quickstart, you create your first Data workflow and run a DAG to familiarize yourself with the environment and functionality of Data workflows.

Prerequisites

- Enable Data workflows in your tenant.

Note

Because Data workflows is in preview, your tenant admin must enable it. If you already see Data workflows, your tenant admin might have already enabled it.

- In the Admin Portal, go to Tenant Settings. Under Microsoft Fabric, expand the 'Users can create and use Data workflows (preview)' section.

- Select Apply.

Create a Data workflow

You can use an existing workspace or create a new workspace.

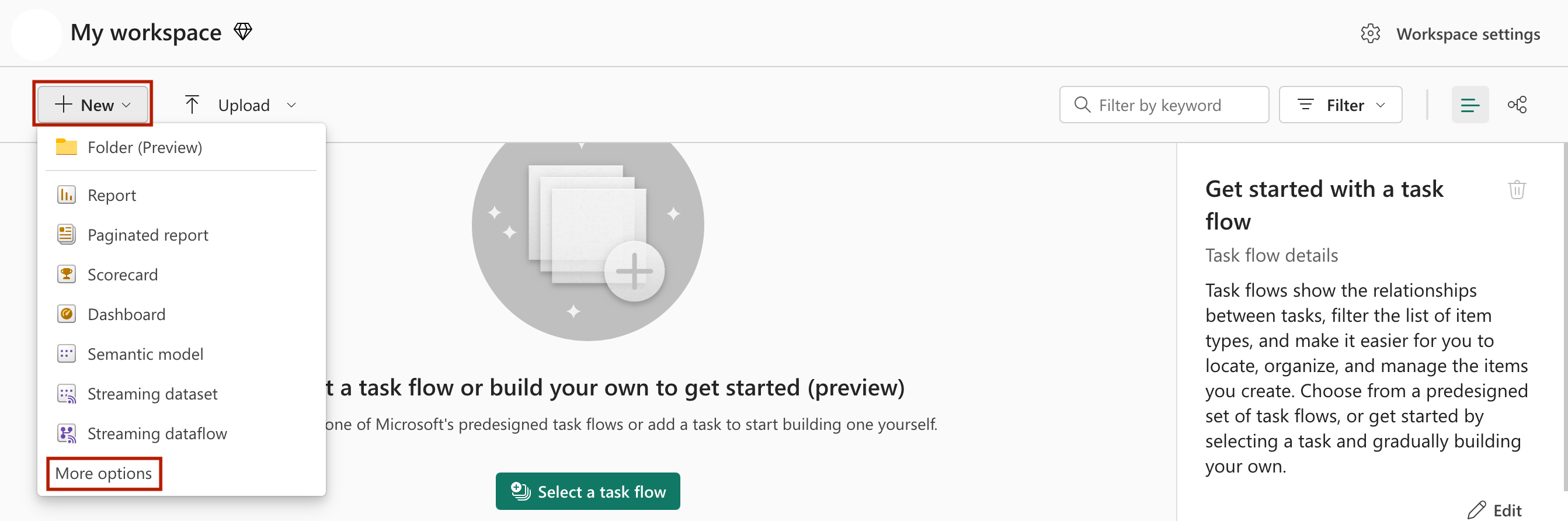

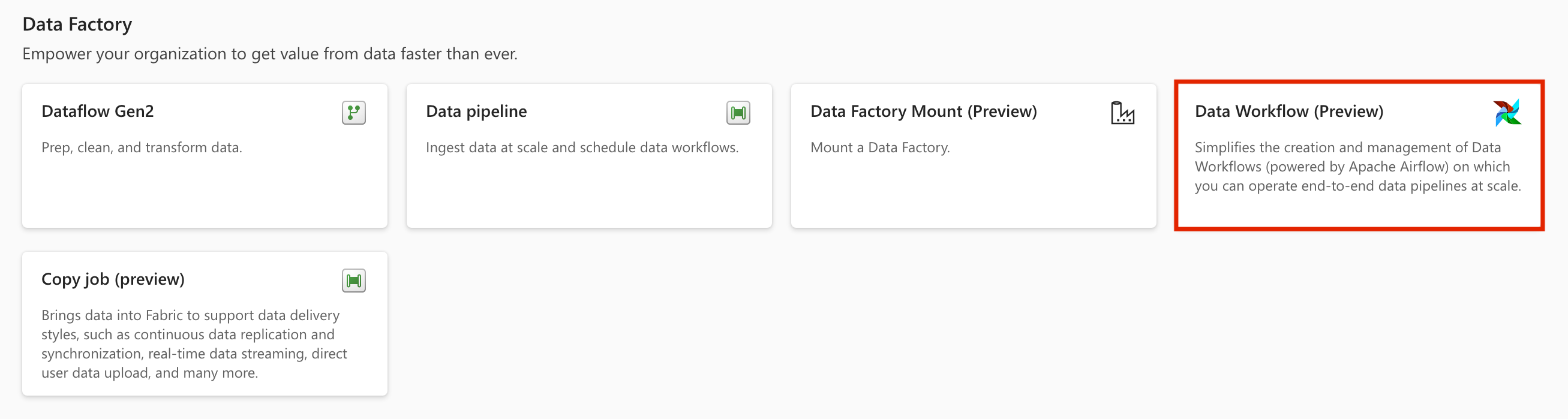

Select the "+ New" dropdown, click on More Options, and then, under the Data Factory section, select Data workflows (preview).

Give your project a suitable name and click on the "Create" button.

Create a DAG File

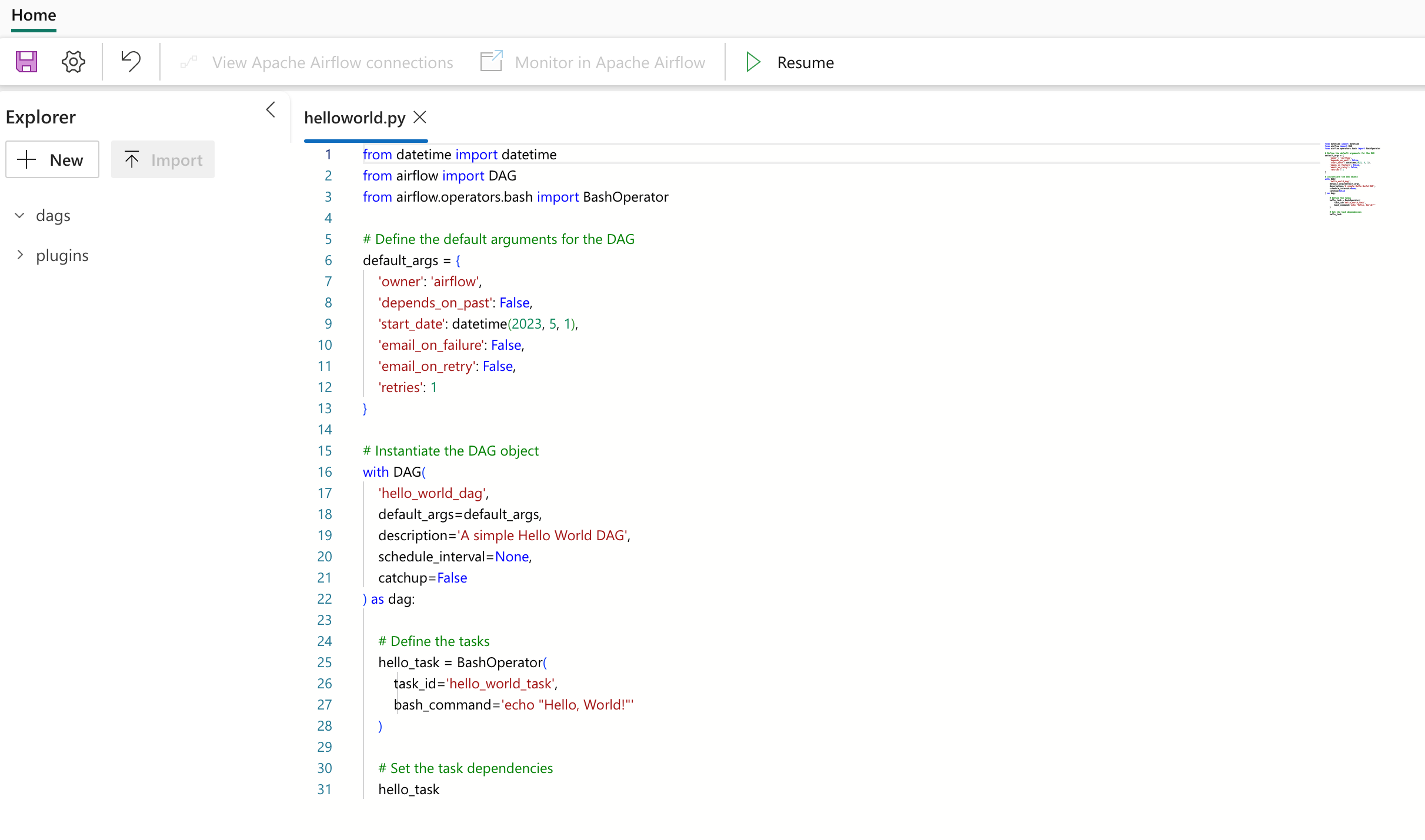

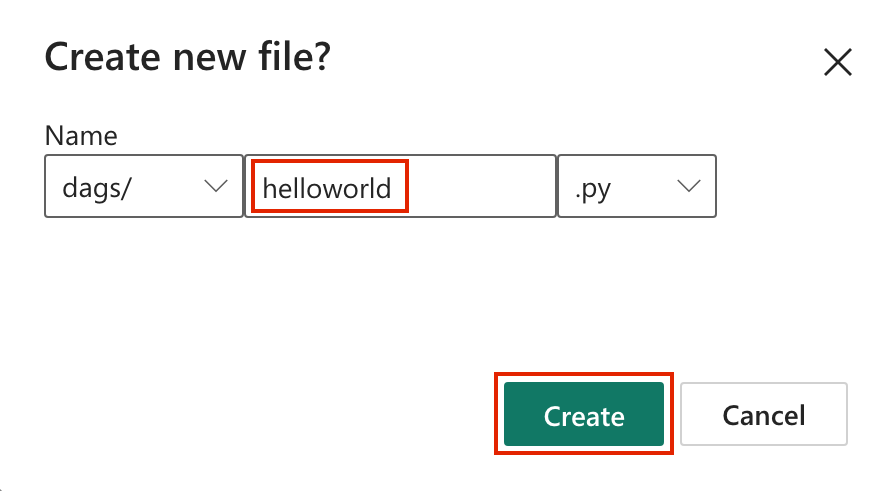

Click on the "New DAG file" card, give the file a name, and then click on the "Create" button.

Boilerplate DAG code is presented to you. You can edit the file to suit your requirements.

Click on the "Save" icon.

Run a DAG

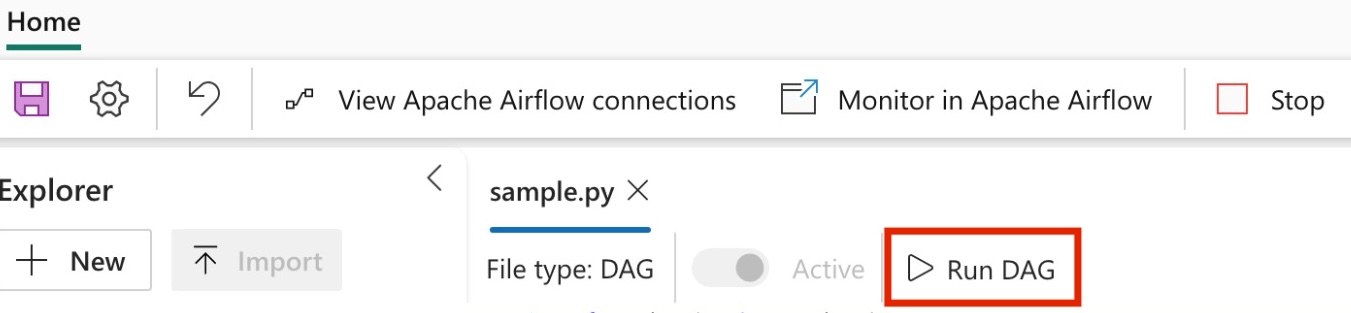

Begin by clicking on the "Run DAG" button.

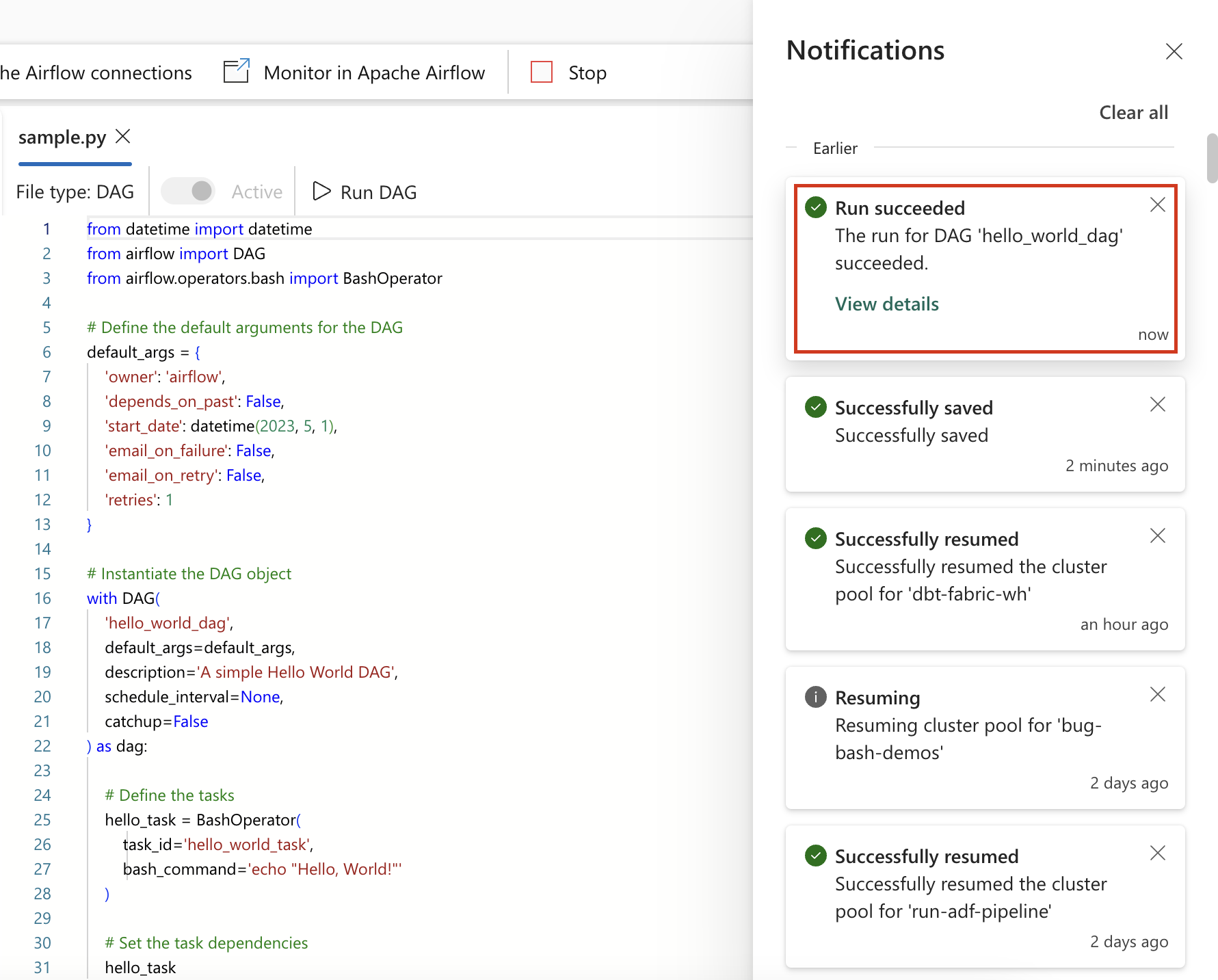

After the run starts, a notification appears indicating that the DAG is running.

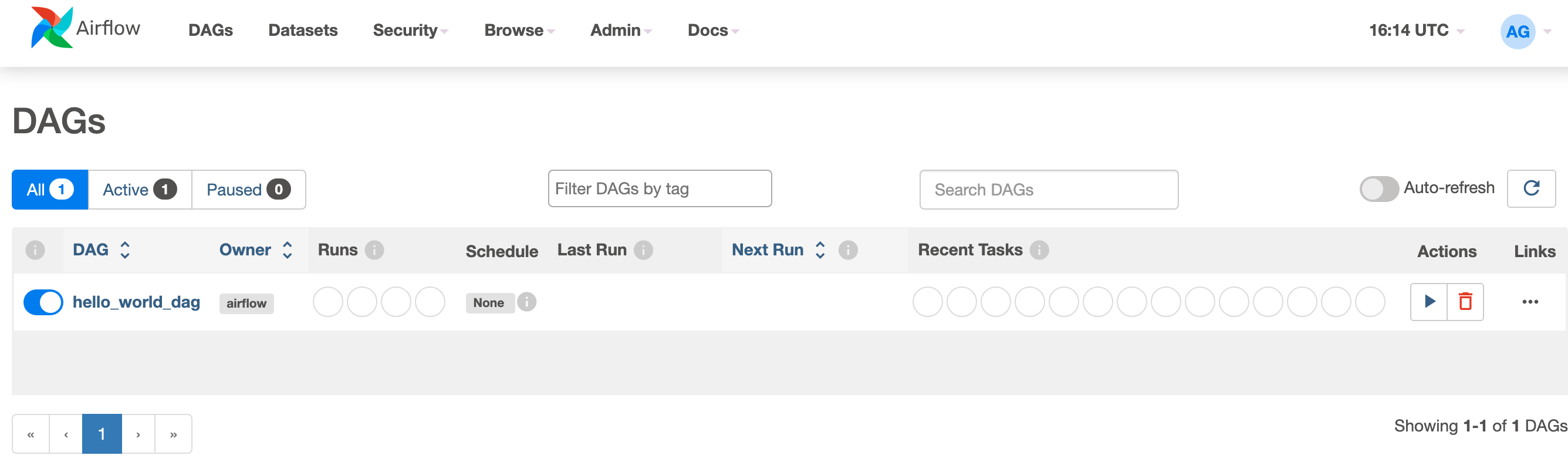

To monitor the progress of the DAG run, click on "View Details" in the notification center. This redirects you to the Apache Airflow UI, where you can track the status and details of the DAG run.

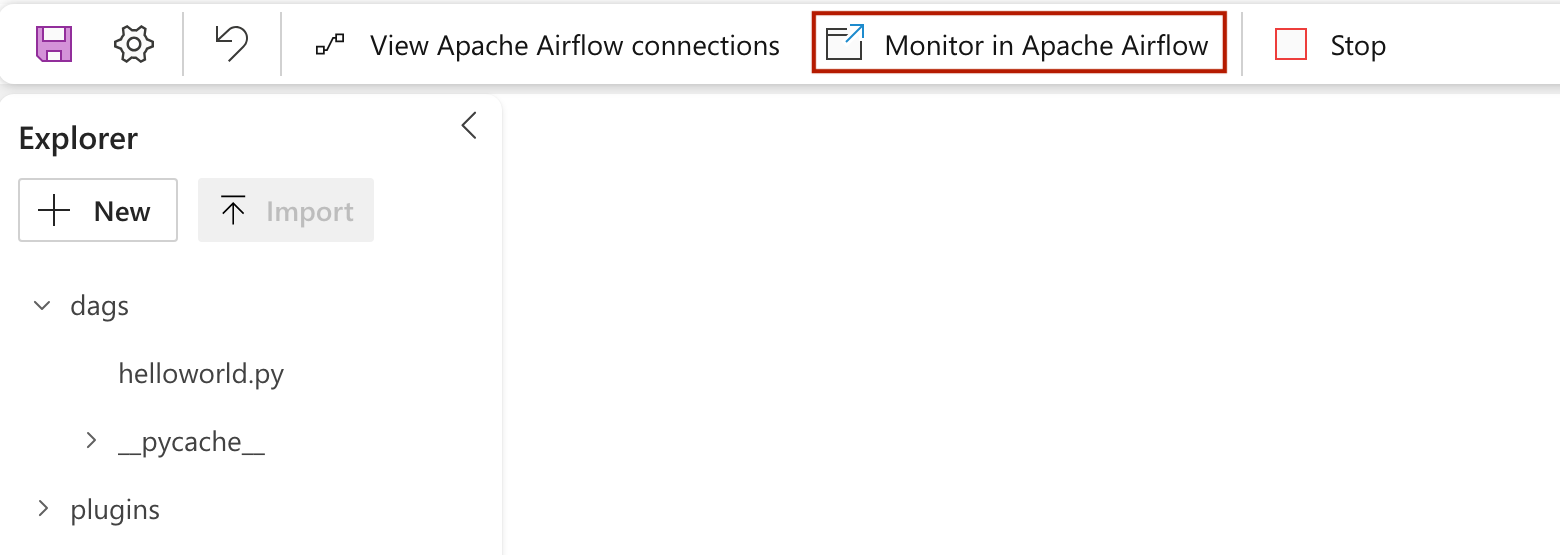

Monitor your Data workflow DAG in Apache Airflow UI

The saved DAG files are loaded in the Apache Airflow UI. You can monitor them by clicking on the "Monitor in Apache Airflow" button.
