Applies to: ✅ SQL analytics endpoint in Microsoft Fabric

The SQL analytics endpoint enables you to query data in the lakehouse using the T-SQL language and the TDS protocol.

Every lakehouse has one SQL analytics endpoint. The number of SQL analytics endpoints in a workspace matches the number of lakehouses and mirrored databases provisioned in that workspace.

A background process is responsible for scanning the lakehouse for changes and keeping the SQL analytics endpoint up to date with all changes committed to lakehouses in a workspace. The sync process is transparently managed by the Microsoft Fabric platform. When a change is detected in a lakehouse, the background process updates metadata, and the SQL analytics endpoint reflects the changes committed to lakehouse tables. Under normal operating conditions, the lag between a lakehouse and the SQL analytics endpoint is less than one minute. The actual length of time can vary from a few seconds to minutes depending on many factors that are discussed in this article. The background process runs only when the SQL analytics endpoint is active, and it's halted after 15 minutes of inactivity.

Automatically generated schema in the SQL analytics endpoint of the Lakehouse

The SQL analytics endpoint manages the automatically generated tables so the workspace users can't modify them. Users can enrich the database model by adding their own SQL schemas, views, procedures, and other database objects.

For every Delta table in your Lakehouse, the SQL analytics endpoint automatically generates a table in the appropriate schema. For autogenerated schema data types for the SQL analytics endpoint, see Data types in Microsoft Fabric.

Tables in the SQL analytics endpoint are created with a minor delay. Once you create or update a Delta Lake table in the lake, the SQL analytics endpoint table that references the Delta Lake table is created or refreshed automatically.

The amount of time it takes to refresh the table depends on how well optimized the Delta tables are. For key scenarios and an in-depth guide on how to efficiently maintain Delta tables for maximum performance, review Delta Lake table optimization and V-Order.

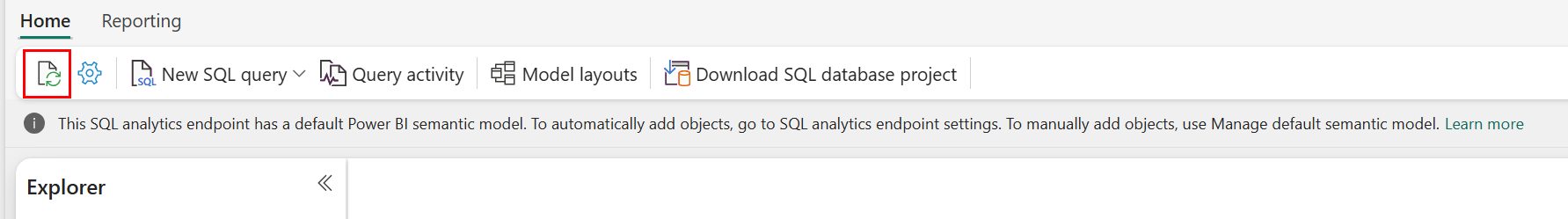

You can manually force a refresh of the automatic metadata scanning in the Fabric portal. On the page for the SQL analytics endpoint, select the Refresh button in the Explorer toolbar to refresh the schema. Go to Query your SQL analytics endpoint, and look for the refresh button, as shown in the following image.

You can also programmatically force a refresh of the automatic metadata scanning using the Refresh SQL endpoint metadata REST API.
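As a rough illustration of the programmatic option, the following sketch builds and sends such a request using only the Python standard library. The base URL, the `refreshMetadata` route, and the empty JSON body are assumptions made for this example and should be checked against the Refresh SQL endpoint metadata REST API reference. The function names are hypothetical, and acquiring the Microsoft Entra access token is out of scope here.

```python
import json
import urllib.request

# Assumption: base URL and route shape; confirm against the REST API reference.
FABRIC_API_BASE = "https://api.fabric.microsoft.com/v1"


def build_refresh_request(workspace_id, sql_endpoint_id, token):
    """Build (but do not send) a POST request asking for a metadata refresh."""
    url = (
        f"{FABRIC_API_BASE}/workspaces/{workspace_id}"
        f"/sqlEndpoints/{sql_endpoint_id}/refreshMetadata"
    )
    return urllib.request.Request(
        url,
        data=json.dumps({}).encode("utf-8"),  # assumption: an empty JSON body is accepted
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def refresh_sql_endpoint_metadata(workspace_id, sql_endpoint_id, token):
    """Send the refresh request and return the HTTP status code and raw response body."""
    request = build_refresh_request(workspace_id, sql_endpoint_id, token)
    with urllib.request.urlopen(request) as response:
        return response.status, response.read().decode("utf-8")
```

A call such as `refresh_sql_endpoint_metadata("<workspace id>", "<sql endpoint id>", token)` could run at the end of an ETL job so that downstream T-SQL queries see the newly committed data sooner.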

Guidance

- Automatic metadata discovery tracks changes committed to lakehouses, and runs as a single instance per Fabric workspace. If you're observing increased latency for changes to sync between lakehouses and the SQL analytics endpoint, it could be due to a large number of lakehouses in one workspace. In such a scenario, consider migrating each lakehouse to a separate workspace, as this allows automatic metadata discovery to scale.

- Parquet files are immutable by design. When there's an update or a delete operation, a Delta table adds new parquet files with the changeset, increasing the number of files over time, depending on the frequency of updates and deletes. If no maintenance is scheduled, this pattern eventually creates read overhead, which increases the time it takes to sync changes to the SQL analytics endpoint. To address this, schedule regular lakehouse table maintenance operations.

- In some scenarios, you might observe that changes committed to a lakehouse aren't visible in the associated SQL analytics endpoint. For example, you might have created a new table in lakehouse, but it's not yet listed in the SQL analytics endpoint. Or, you might have committed a large number of rows to a table in a lakehouse but this data isn't yet visible in the SQL analytics endpoint. We recommend initiating an on-demand metadata sync, triggered from the SQL query editor Refresh ribbon option or the Refresh SQL endpoint metadata REST API. This option forces an on-demand metadata sync, rather than waiting on the background metadata sync to finish.

- Not all Delta features are understood by the automatic sync process. For more information on the functionality supported by each engine in Fabric, see Delta Lake table format interoperability.

- If there's an extremely large volume of table changes during Extract, Transform, and Load (ETL) processing, expect a delay until all the changes are processed.

Optimizing lakehouse tables for querying the SQL analytics endpoint

When the SQL analytics endpoint reads tables stored in a lakehouse, query performance is heavily influenced by the physical layout of the underlying parquet files.

A large number of small parquet files creates overhead and negatively affects query performance. To ensure predictable and efficient performance, we recommend maintaining table storage so that each parquet file contains approximately two million rows. This row count provides a balanced level of parallelism without fragmenting the dataset into excessively small slices.

In addition to row count guidance, file size is equally important. The SQL analytics endpoint performs best when parquet files are large enough to minimize file handling overhead but not so large that they limit parallel scan efficiency. For most workloads, keeping individual parquet files close to 400 MB strikes the best balance. To achieve this balance, use the following steps:

- Set maxRecordsPerFile to 2,000,000 before data changes occur.

- Perform your data changes (data ingestion, updates, deletes).

- Set maxFileSize to 400 MB.

- Run OPTIMIZE. For details on using OPTIMIZE, refer to Table maintenance operations.

The following script provides a template for these steps, and should be executed on a lakehouse:

```python
from delta.tables import DeltaTable

# 1. CONFIGURE LIMITS
# Cap files to 2M rows during writes. This should be done before data ingestion occurs.
spark.conf.set("spark.sql.files.maxRecordsPerFile", 2000000)

# 2. INGEST DATA
# Here, you ingest data into your table

# 3. CAP FILE SIZE (~400 MB)
spark.conf.set("spark.databricks.delta.optimize.maxFileSize", 400 * 1024 * 1024)

# 4. RUN OPTIMIZE (bin-packing)
spark.sql("""
OPTIMIZE myTable
""")
```

To maintain healthy file sizes, users should periodically run Delta optimization operations such as OPTIMIZE, especially for tables that receive frequent incremental writes, updates and deletes. These maintenance operations compact small files into appropriately sized ones, helping ensure the SQL analytics endpoint can process queries efficiently.
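To get a feel for when compaction is worthwhile, the following sketch applies the two targets from this section (about two million rows and about 400 MB per file) to a table's total row count and size. The helper names and the "fragmentation ratio" are hypothetical and used only for illustration; they aren't a Fabric metric, and the sample numbers are made up.

```python
import math

TARGET_ROWS_PER_FILE = 2_000_000        # row guidance from this article
TARGET_FILE_BYTES = 400 * 1024 * 1024   # ~400 MB guidance from this article


def expected_file_count(total_rows, total_bytes):
    """Smallest file count that respects both the row cap and the size cap."""
    by_rows = math.ceil(total_rows / TARGET_ROWS_PER_FILE)
    by_size = math.ceil(total_bytes / TARGET_FILE_BYTES)
    return max(1, by_rows, by_size)


def fragmentation_ratio(actual_files, total_rows, total_bytes):
    """How many times more files the table has than the targets suggest."""
    return actual_files / expected_file_count(total_rows, total_bytes)


# Illustrative numbers: 50 million rows, 8 GiB on disk, currently 500 parquet files.
rows, size_bytes, files = 50_000_000, 8 * 1024**3, 500
print(expected_file_count(rows, size_bytes))         # 25 (the row cap dominates here)
print(fragmentation_ratio(files, rows, size_bytes))  # 20.0
```

A ratio well above 1 suggests the table is spread over many more files than the guidance implies, which makes it a reasonable candidate for OPTIMIZE.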

Note

For guidance on general maintenance of lakehouse tables, refer to Execute ad-hoc table maintenance on a Delta table using Lakehouse.

Partition size considerations

The choice of partition column for a delta table in a lakehouse also affects the time it takes to sync changes to the SQL analytics endpoint. Both the number and the size of the partitions produced by the partition column are important for performance:

- A column with high cardinality (mostly or entirely made of unique values) results in a large number of partitions. A large number of partitions negatively impacts performance of the metadata discovery scan for changes. If the cardinality of a column is high, choose another column for partitioning.

- The size of each partition can also affect performance. Our recommendation is to use a column that would result in partitions of at least (or close to) 1 GB each. We recommend following best practices for delta table maintenance and optimization. For a Python script to evaluate partitions, see Sample script for partition details.

A large volume of small-sized parquet files increases the time it takes to sync changes between a lakehouse and its associated SQL analytics endpoint. You might end up with a large number of parquet files in a delta table for one or more reasons:

- If you choose a partition column with a high number of unique values for a delta table, the table is partitioned by each unique value and might be over-partitioned. Choose a partition column that doesn't have high cardinality and that results in individual partitions of at least 1 GB each.
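The two considerations above can be turned into a quick back-of-the-envelope check. The sketch below assumes that rows are spread evenly across the distinct values of a candidate column, which real data rarely satisfies exactly. The function names, column names, and numbers are hypothetical, and 1 GB is treated as 1024³ bytes.

```python
GIB = 1024 ** 3


def average_partition_gib(table_bytes, distinct_values):
    """Average partition size in GiB, assuming an even spread across distinct values."""
    if distinct_values <= 0:
        raise ValueError("distinct_values must be positive")
    return table_bytes / distinct_values / GIB


def rank_partition_candidates(table_bytes, cardinalities, min_gib=1.0):
    """Return (column, average GiB) pairs that meet min_gib, largest partitions first."""
    sized = [
        (column, average_partition_gib(table_bytes, count))
        for column, count in cardinalities.items()
    ]
    suitable = [pair for pair in sized if pair[1] >= min_gib]
    return sorted(suitable, key=lambda pair: pair[1], reverse=True)


# Illustrative 500 GiB table with three candidate columns and their distinct counts.
candidates = {"order_id": 40_000_000, "order_date": 365, "region": 8}
for column, avg_gib in rank_partition_candidates(500 * GIB, candidates):
    print(f"{column}: about {avg_gib:.2f} GiB per partition")
```

In this example `order_id` is rejected because its high cardinality would produce millions of tiny partitions, while `order_date` and `region` both stay above the 1 GB guideline.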

- Batch and streaming data ingestion rates might also result in small files depending on frequency and size of changes being written to a lakehouse. For example, there might be a small volume of changes coming through to the lakehouse, resulting in small parquet files. To address this, we recommend implementing regular lakehouse table maintenance.

Sample script for partition details

Use the following notebook to print a report detailing size and details of partitions underpinning a delta table.

- First, you must provide the ABFSS path for your delta table in the variable delta_table_path.

  - You can get the ABFSS path of a delta table from the Fabric portal Explorer. Right-click the table name, then select COPY PATH from the list of options.

- The script outputs all partitions for the delta table.

- The script iterates through each partition to calculate the total size and number of files.

- The script outputs the details of partitions, files per partitions, and size per partition in GB.

The complete script can be copied from the following code block:

```python
# Purpose: Print out details of partitions, files per partition, and size per partition in GB.
from notebookutils import mssparkutils

# Define the ABFSS path for your delta table. To get it, right-click the table name
# and select COPY PATH from the list of options.
delta_table_path = "abfss://<workspace id>@<onelake>.dfs.fabric.microsoft.com/<lakehouse id>/Tables/<tablename>"

# List all top-level entries (partition folders) for the given delta table
partitions = mssparkutils.fs.ls(delta_table_path)

# Initialize a dictionary to store partition details
partition_details = {}

# Iterate through each partition folder, skipping the Delta transaction log folder
for partition in partitions:
    if partition.isDir and not partition.name.startswith("_delta_log"):
        partition_name = partition.name
        partition_path = partition.path
        files = mssparkutils.fs.ls(partition_path)

        # Calculate the total size of the partition (files directly inside the folder)
        total_size = sum(file.size for file in files if not file.isDir)

        # Count the number of files
        file_count = sum(1 for file in files if not file.isDir)

        # Write partition details
        partition_details[partition_name] = {
            "size_bytes": total_size,
            "file_count": file_count
        }

# Print the partition details, converting bytes to GB (1 GB = 1024^3 bytes here)
for partition_name, details in partition_details.items():
    size_gb = details["size_bytes"] / (1024 ** 3)
    print(f"{partition_name}, Size: {size_gb:.2f} GB, Number of files: {details['file_count']}")
```
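As a possible follow-up, the sketch below post-processes a `partition_details` dictionary of the same shape as the one built by the script and flags partitions below the 1 GB guideline. The `summarize_partitions` helper and the sample values are hypothetical. It runs without Spark, so it can be tried on made-up numbers first.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def summarize_partitions(partition_details, min_gib=1.0):
    """Return one summary row per partition, smallest partition first."""
    rows = []
    for name, details in partition_details.items():
        size_bytes = details["size_bytes"]
        file_count = details["file_count"]
        rows.append({
            "partition": name,
            "size_gib": size_bytes / GIB,
            "file_count": file_count,
            # Average file size hints at whether compaction would help.
            "avg_file_mib": (size_bytes / file_count / MIB) if file_count else 0.0,
            "undersized": size_bytes / GIB < min_gib,
        })
    return sorted(rows, key=lambda row: row["size_gib"])


# Made-up sample in the same shape as partition_details from the script above.
sample = {
    "year=2023": {"size_bytes": 2 * GIB, "file_count": 8},
    "year=2024": {"size_bytes": 256 * MIB, "file_count": 64},
}
for row in summarize_partitions(sample):
    flag = "below 1 GB" if row["undersized"] else "ok"
    print(f"{row['partition']}: {row['size_gib']:.2f} GiB, "
          f"{row['file_count']} files, avg {row['avg_file_mib']:.0f} MiB ({flag})")
```

Partitions that are both undersized and made up of many small files are the ones most likely to slow down metadata sync, so they are natural first candidates for repartitioning or compaction.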