ملاحظة

يتطلب الوصول إلى هذه الصفحة تخويلاً. يمكنك محاولة تسجيل الدخول أو تغيير الدلائل.

يتطلب الوصول إلى هذه الصفحة تخويلاً. يمكنك محاولة تغيير الدلائل.

In this tutorial, you'll learn how to use Microsoft Fabric to prepare, load, and model data for Power BI reporting. You'll use Dataflows Gen2 to ingest and transform data into a Lakehouse, orchestrate data refreshes with Pipelines, and build a dimensional model using Direct Lake mode. Finally, you'll automatically generate a report to visualize the latest sales data.

By the end of this tutorial, you'll be able to:

- Prepare and load data into a lakehouse

- Orchestrate a data pipeline to refresh data and send an email on failure

- Create a semantic model in the Lakehouse

- Automatically create a report with quick create

Prerequisites

Before you begin, ensure you have the following:

- Enable Fabric for your organization if you haven't already.

- Sign up for a free trial if you don't have access.

- Create a new workspace and assign a Fabric capacity. You can use an existing workspace, but a nonproduction workspace is recommended for this tutorial.

- Download the Power Query template file containing sample queries for Contoso data.

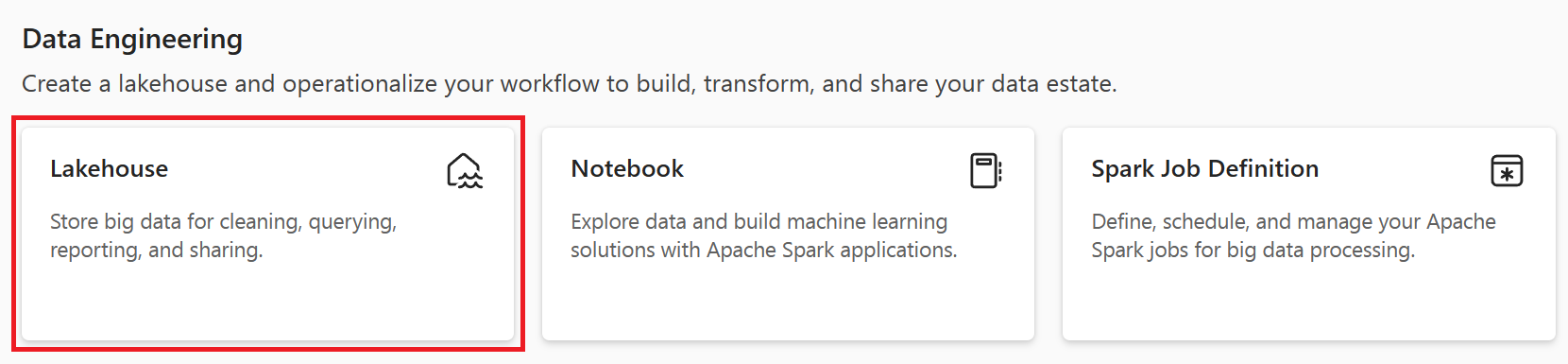

Create a lakehouse to store data

Start by creating a lakehouse to store your data. You'll use Dataflows Gen2 to prepare and transform it, and a pipeline to orchestrate scheduled refreshes and email notifications.

In your workspace, select New item at the top of the page.

On the New item creation screen, search for or select Lakehouse.

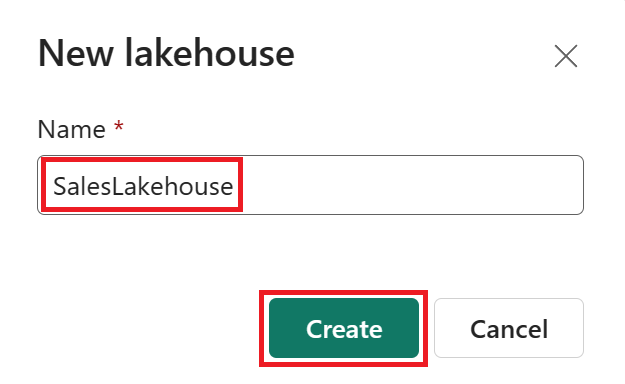

Enter SalesLakehouse as the name (names can include letters, numbers, and underscores, but not special characters or spaces), then select Create.

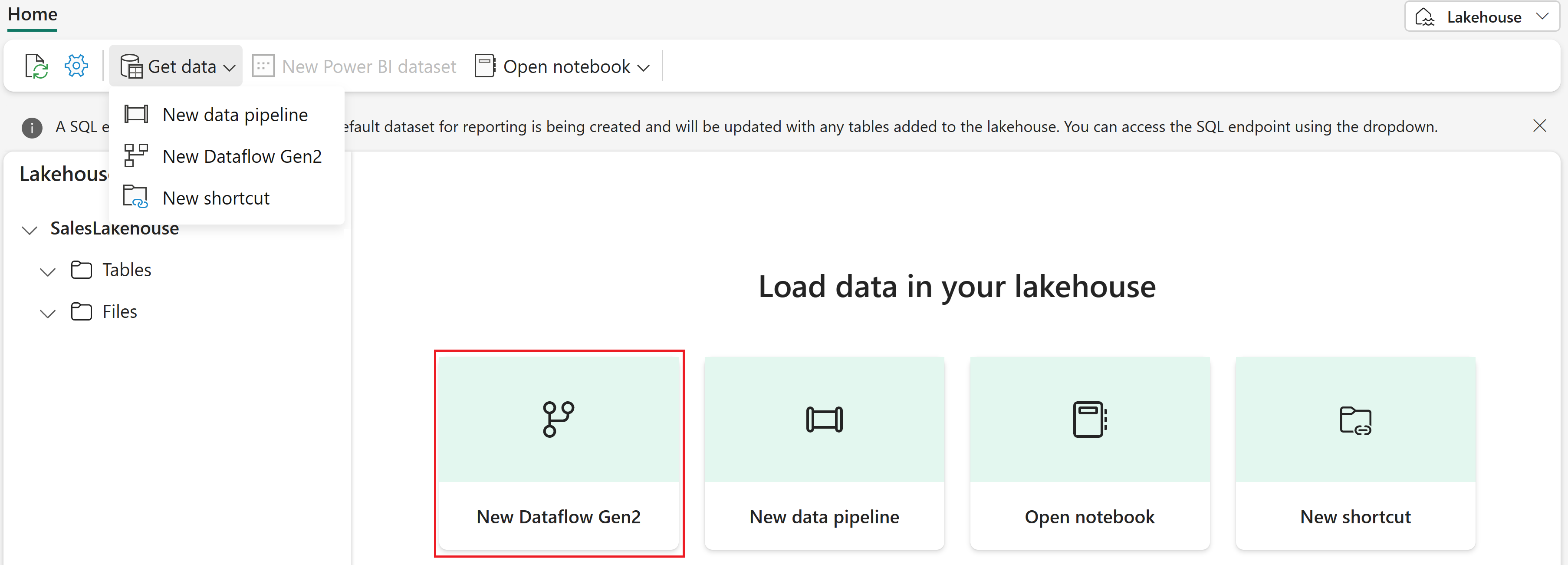

In the Lakehouse editor, select New Dataflow Gen2 from the ribbon.

Note

Alternatively, select Get data from the ribbon, then choose New Dataflow Gen2.

Name the dataflow OnlineSalesDataflow (use only letters, numbers, and underscores), then select Create.

Prepare and load data into your lakehouse using Dataflows Gen2

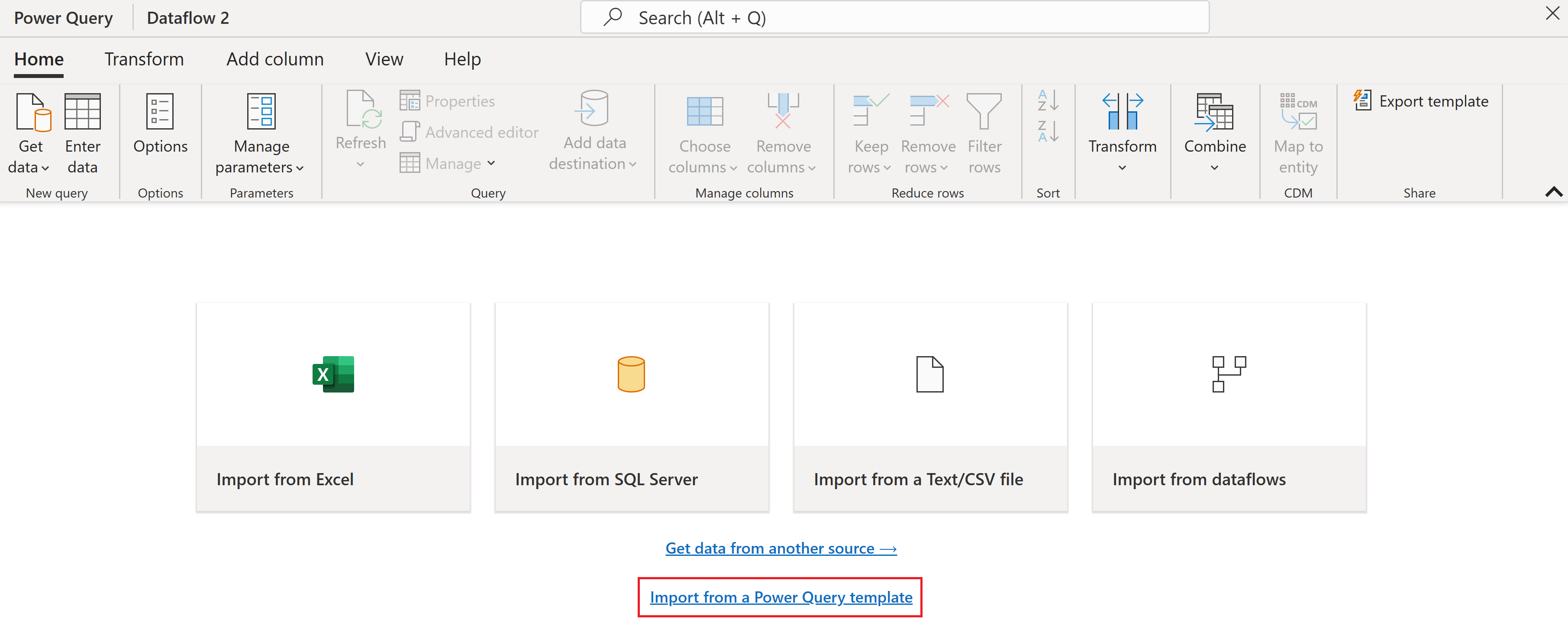

In the Power Query Online editor for Dataflows Gen2, select Import from a Power Query template and choose the ContosoSales.pqt template file you downloaded in the prerequisites.

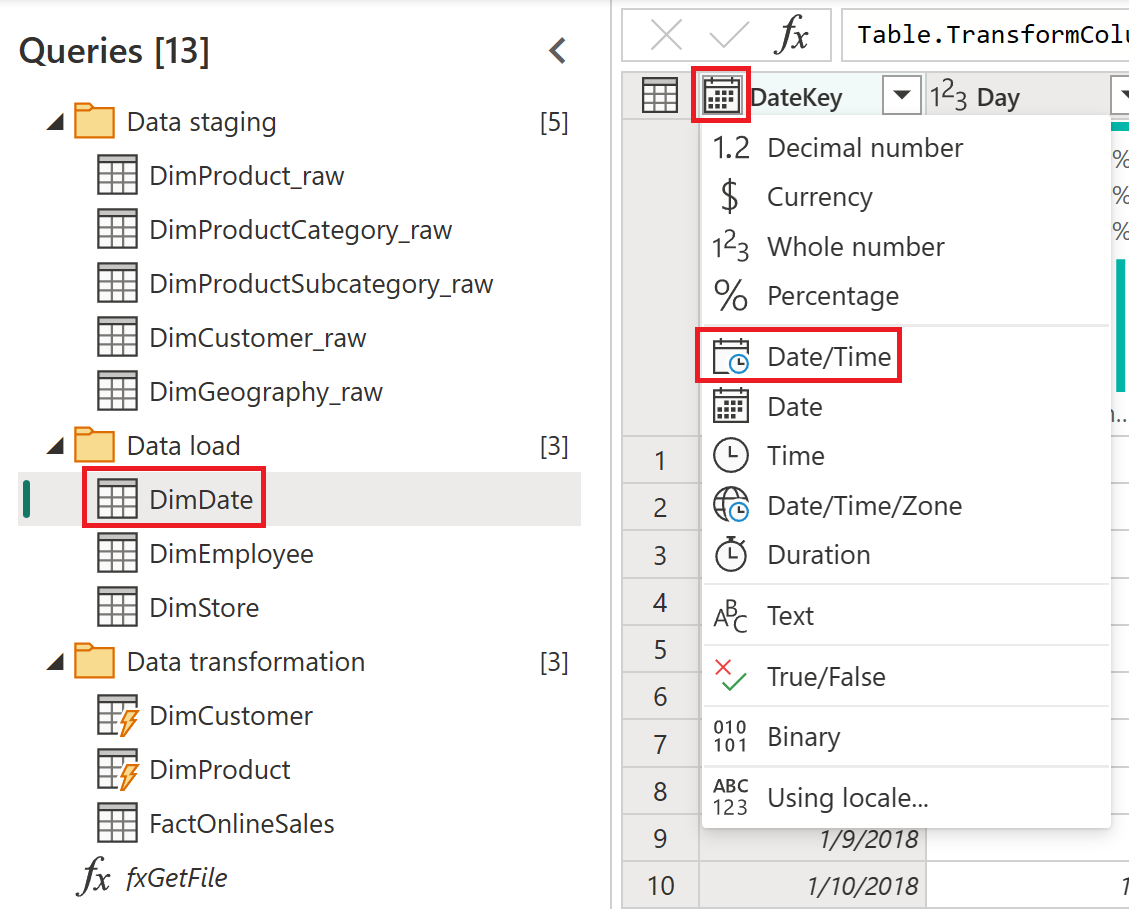

Select the DimDate query under the Data load group. If prompted, select Configure connection, set authentication to Anonymous, and select Connect.

With DimDate selected, in the data preview, find the DateKey column. Select the data type icon in the column header and choose Date/Time from the dropdown.

In the Change column type window, select Replace current.

Configure data destinations

With DimDate selected, review the data destination settings in the bottom right. Hover over the configured Lakehouse to view its properties.

The Lakehouse you created is the destination for all tables. The default update method is Replace, which overwrites previous data during each refresh.

Select the FactOnlineSales table and review its data destination settings.

Because the FactOnlineSales source changes frequently, optimize refreshes by appending new data. Remove its current data destination by selecting the X icon. Do not remove destinations for other tables.

With FactOnlineSales still selected, select the + icon to add a data destination, then choose Lakehouse.

If prompted, set authentication to Organizational account and select Next.

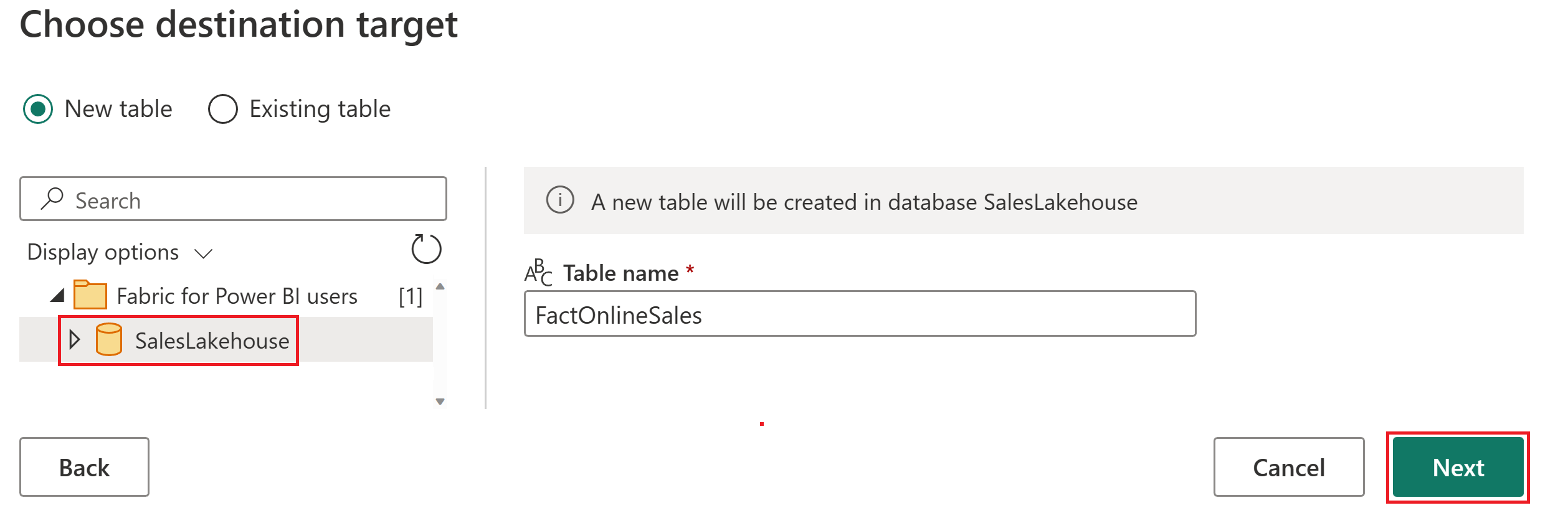

In the navigator, select your workspace and expand to view all Lakehouse items. Select SalesLakehouse and ensure New table is selected, then select Next.

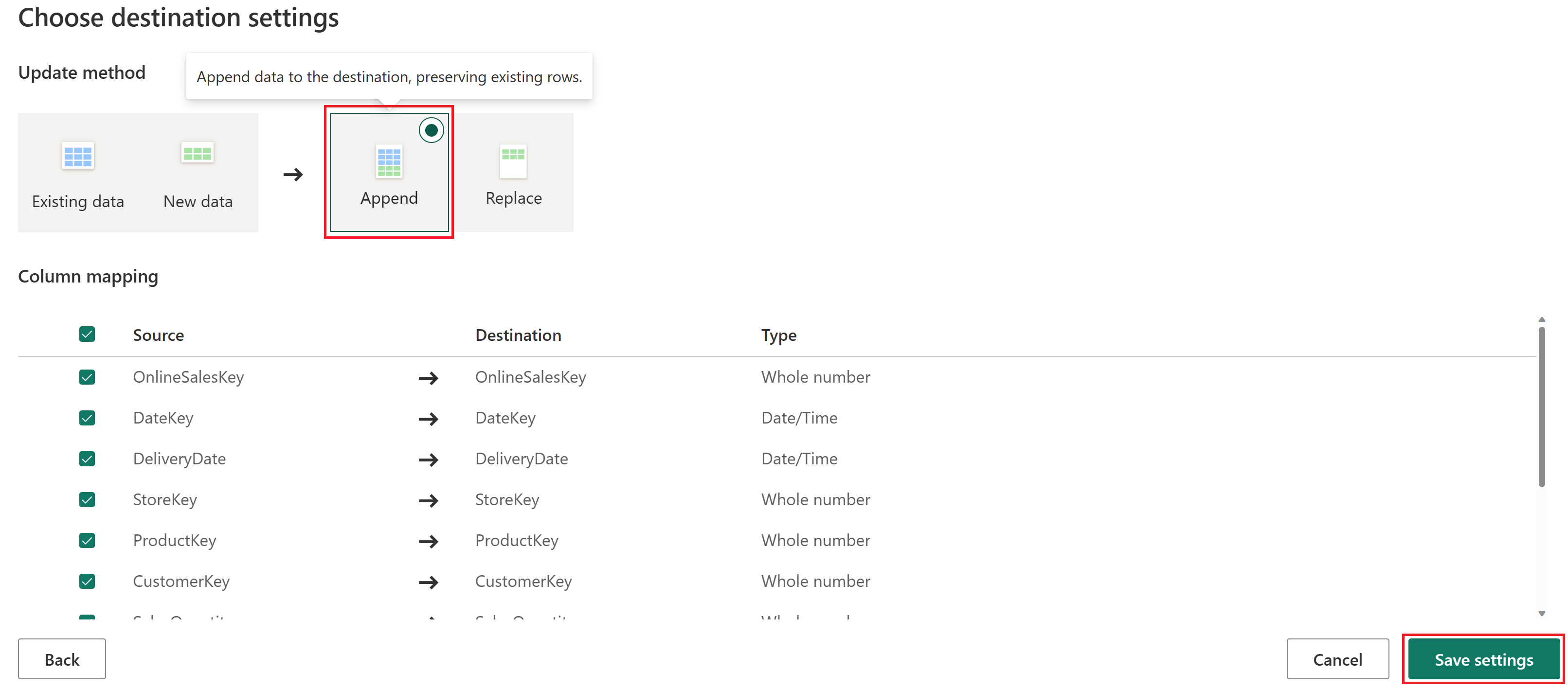

In the data destination settings panel, clear Use automatic settings, set Update method to Append, and select Save settings.

Note

The Append method adds new rows to the table during each refresh, preserving existing data.

From the Home tab, select Save & run.

To exit the Power Query Online editor, select the X on the OnlineSalesDataflow item in the left side-rail.

Note

Selecting X closes the editor but does not delete the dataflow.

Orchestrate a data pipeline

Automate the refresh of your dataflow and handle errors by sending a customized Outlook email with key details.

In your workspace, select New item.

On the New item creation screen, search for or select Data pipeline.

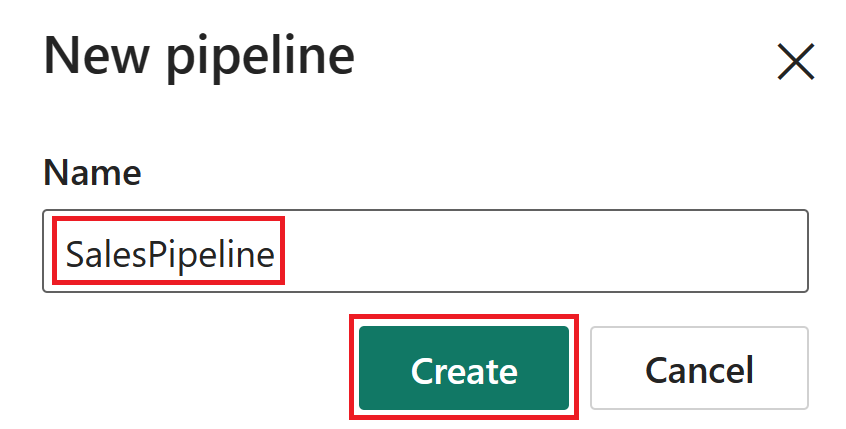

Name the pipeline SalesPipeline and select Create.

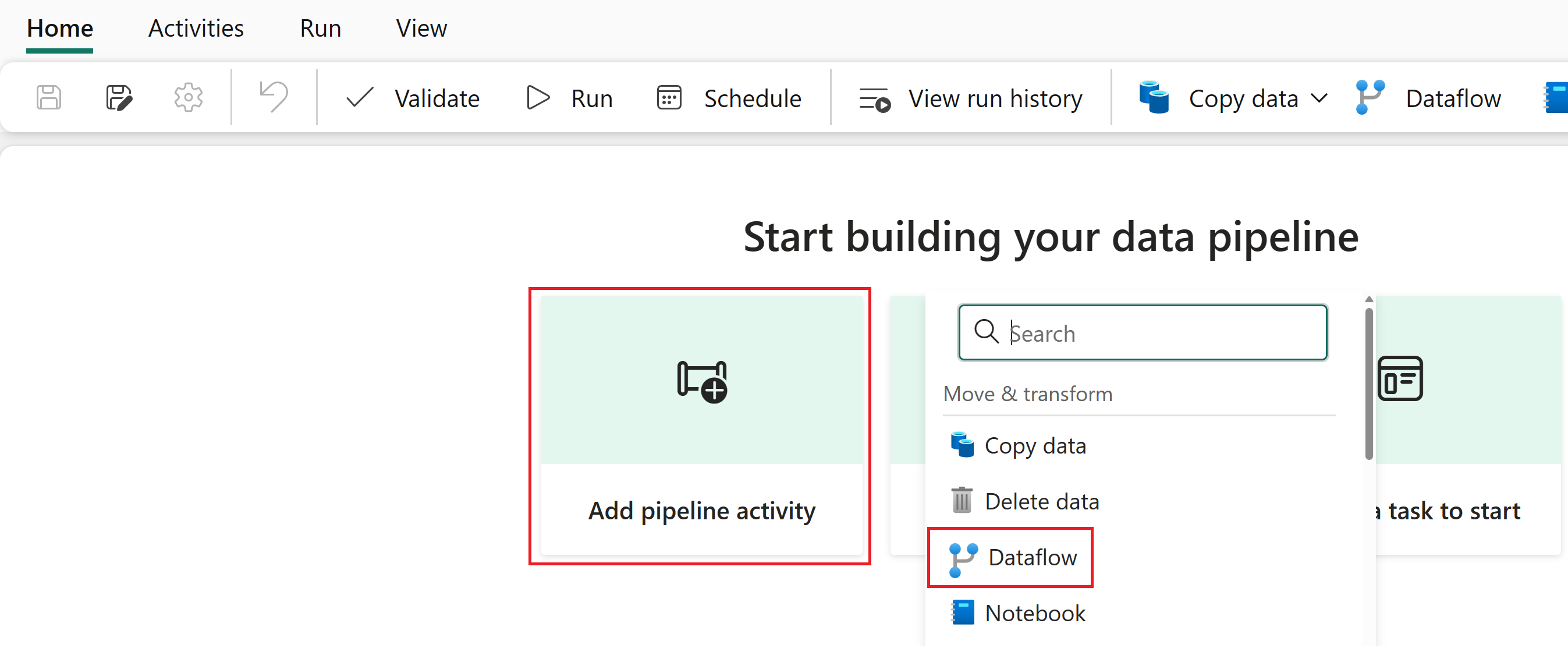

In the pipeline editor, select Pipeline activity, then choose Dataflow.

Note

You can also select Dataflow from the ribbon.

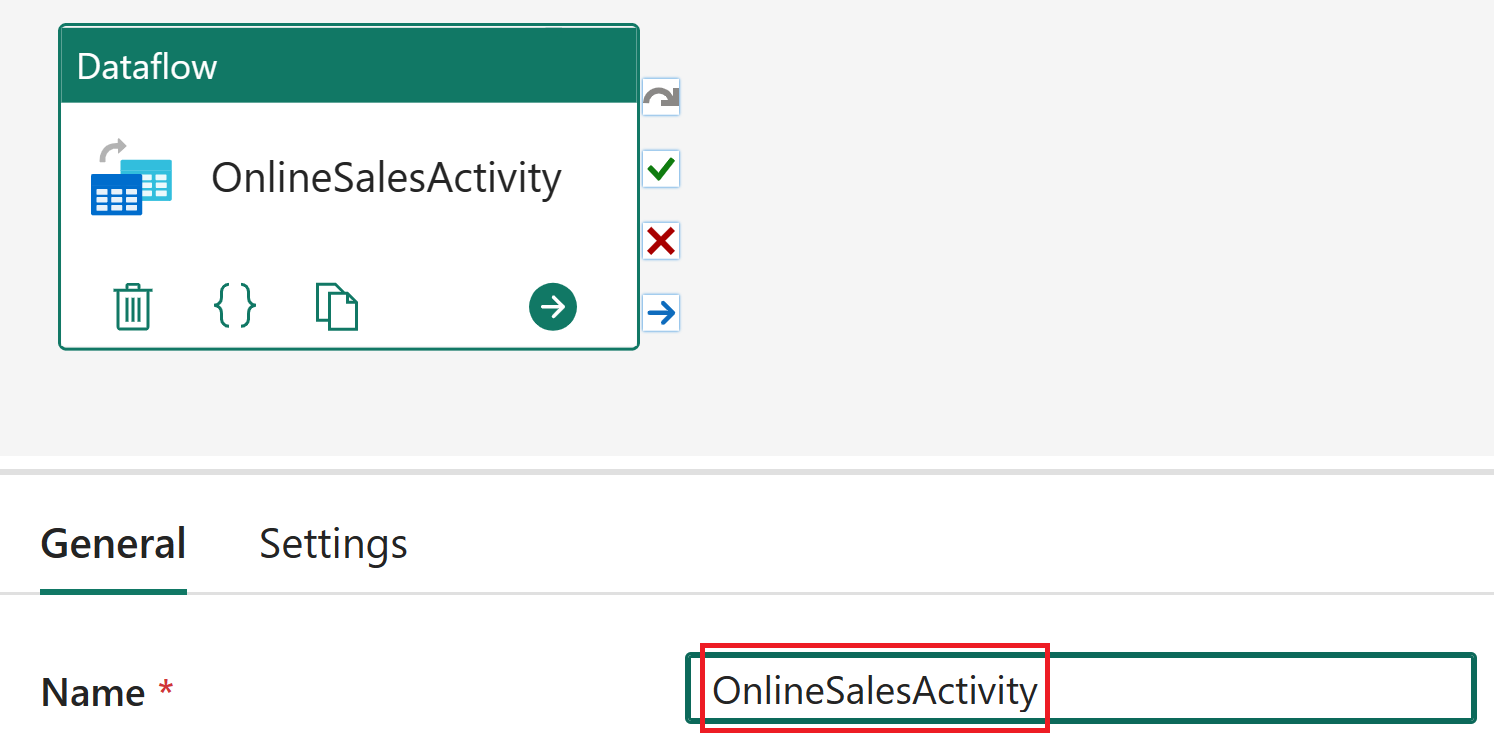

Select the dataflow activity in the pipeline editor. In the General section, set the Name to OnlineSalesActivity.

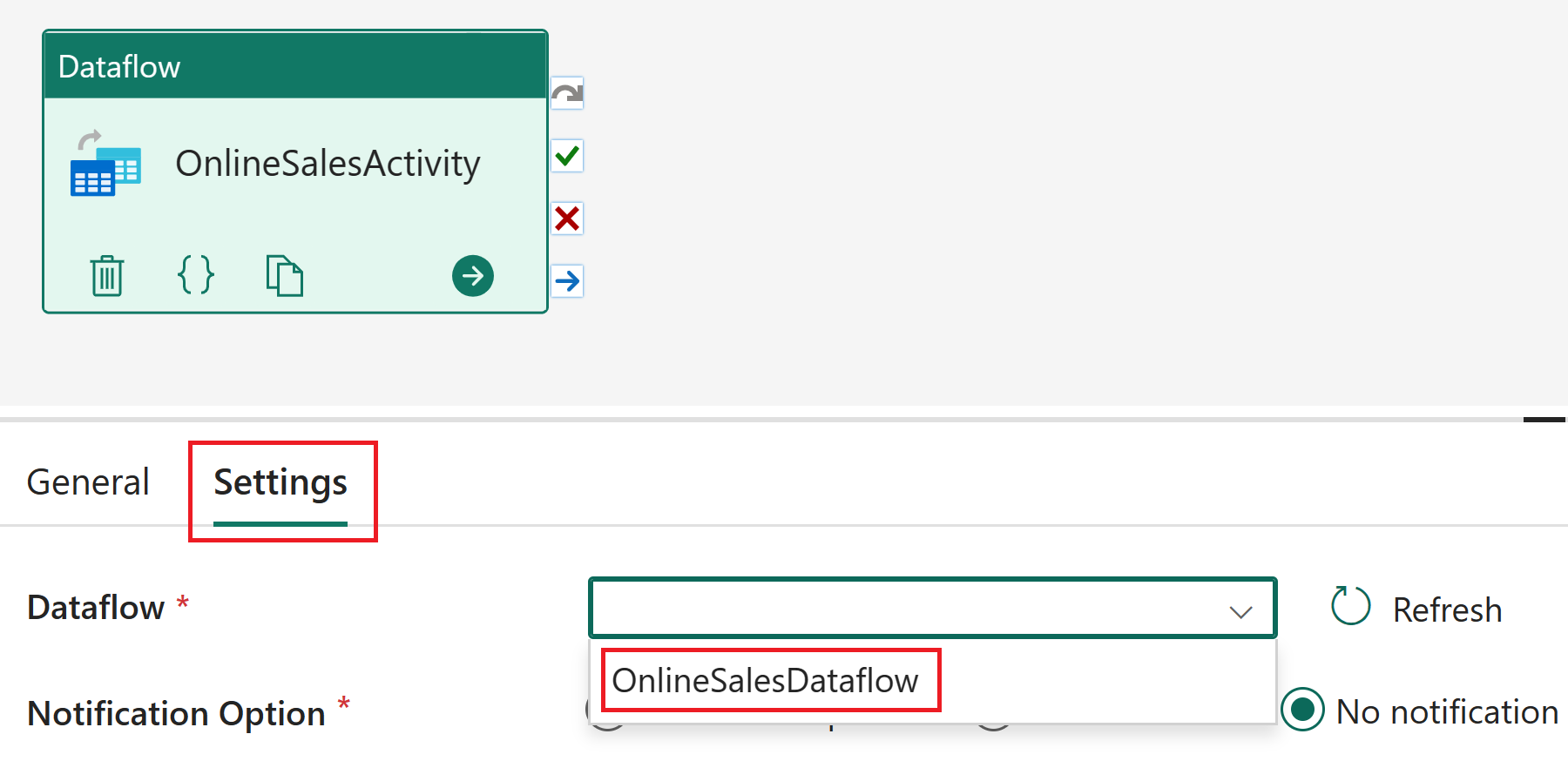

With the dataflow activity selected, go to Settings and choose OnlineSalesDataflow from the Dataflow list. If needed, select the Refresh icon to update the list.

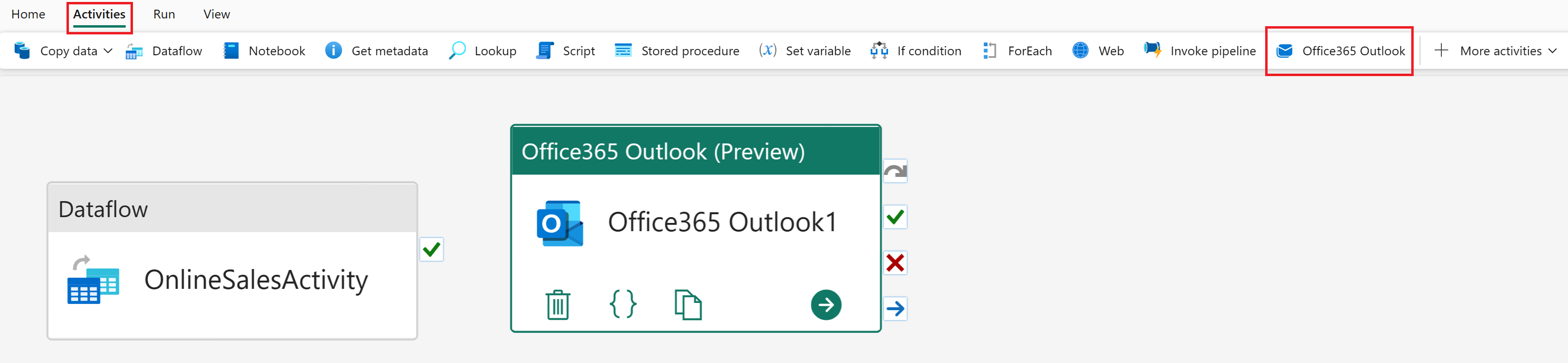

Select the Activities tab, then add the Office365 Outlook activity.

Note

If prompted with a Grant consent window, select Ok, sign in with your organizational account, and allow access.

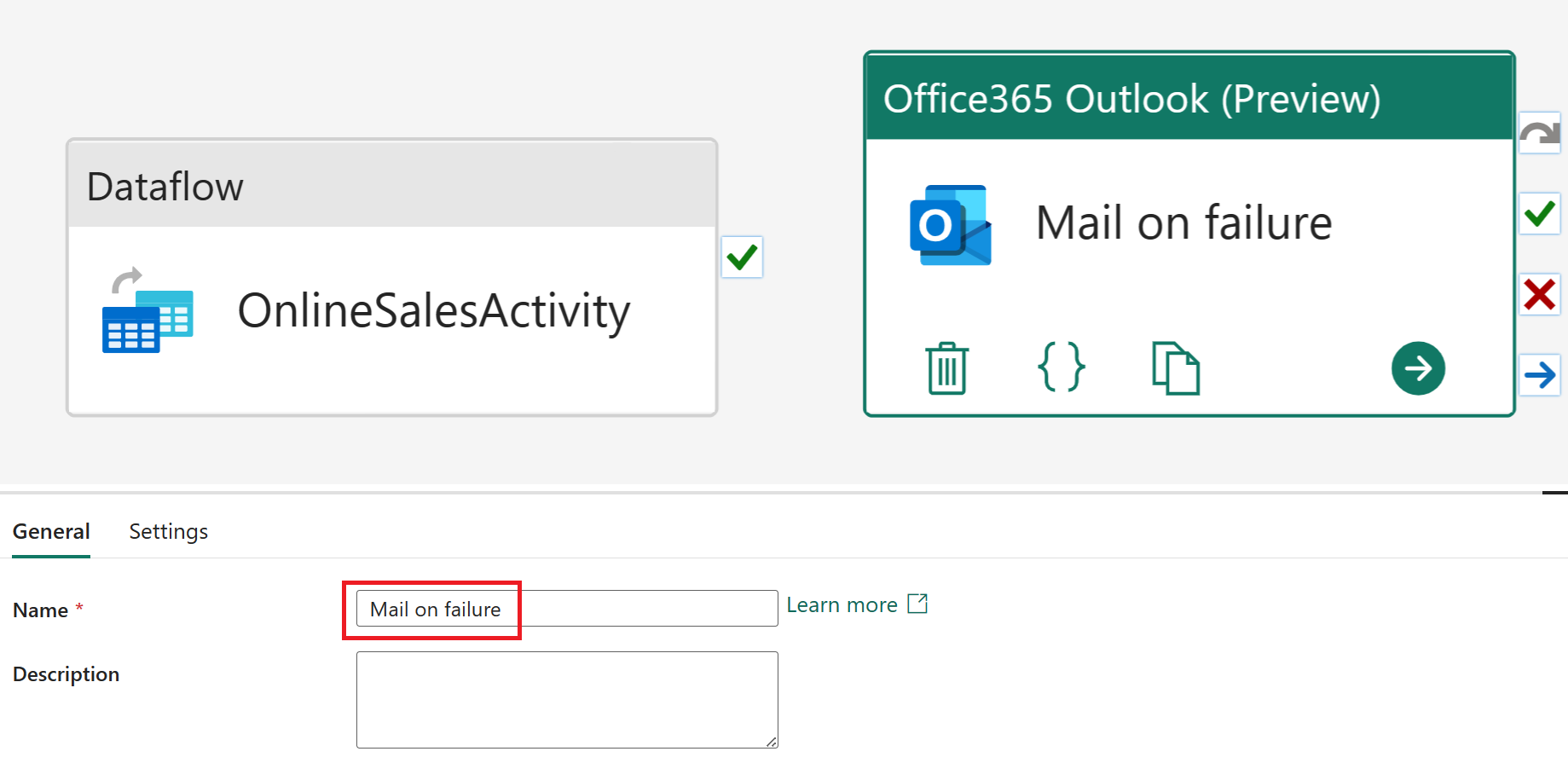

Select the Office365 Outlook activity in the pipeline editor. In the General section, set the Name to Mail on failure.

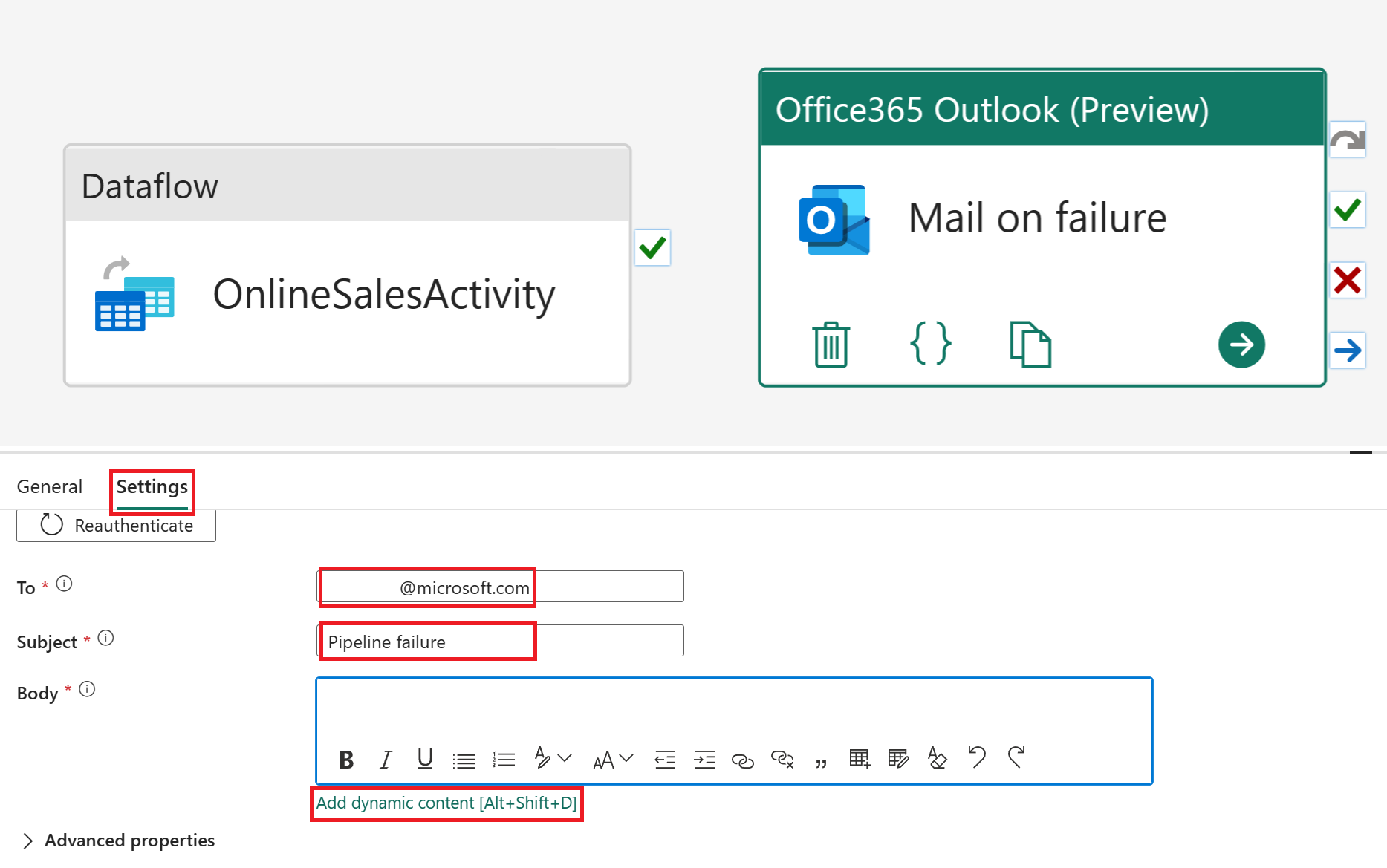

With the Office365 Outlook activity selected, go to Settings. Enter your email address in the To field and set the Subject to Pipeline failure. For the mail Body, select View in expression builder.

Note

Additional email options such as From (Send as), Cc, Bcc, and Sensitivity label are available under Advanced properties.

In the Pipeline expression builder, paste the following expression into the text block at the top of the page:

@concat( 'Pipeline: ' , pipeline().PipelineId , '<br>' , 'Workspace: ' , pipeline().WorkspaceId , '<br>' , 'Time: ' , utcnow() )This expression dynamically inserts the pipeline ID, workspace ID, and the current UTC time into the email body.

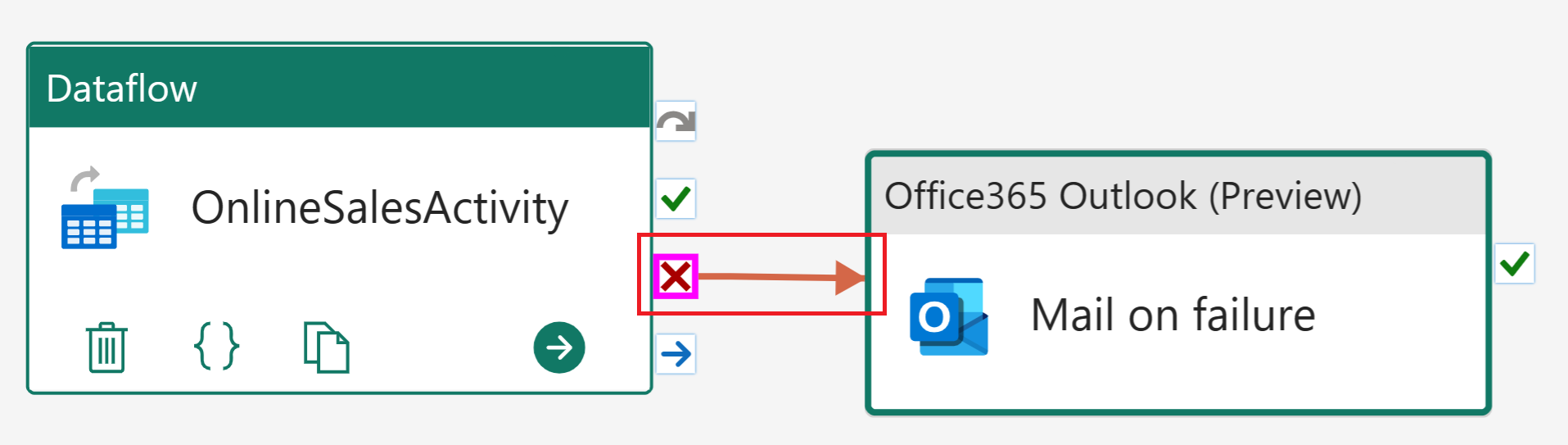

In the pipeline editor, select OnlineSalesActivity. Drag from the "X" (On fail) handle and drop it onto the Mail on failure activity. This ensures the email is sent if the dataflow activity fails.

From the Home tab, select Schedule. Configure the schedule as follows, then select Apply:

Name Value Scheduled run On Repeat Daily Time 12:00:00 AM From the Home tab, select Run. If prompted, select Save and run to continue.

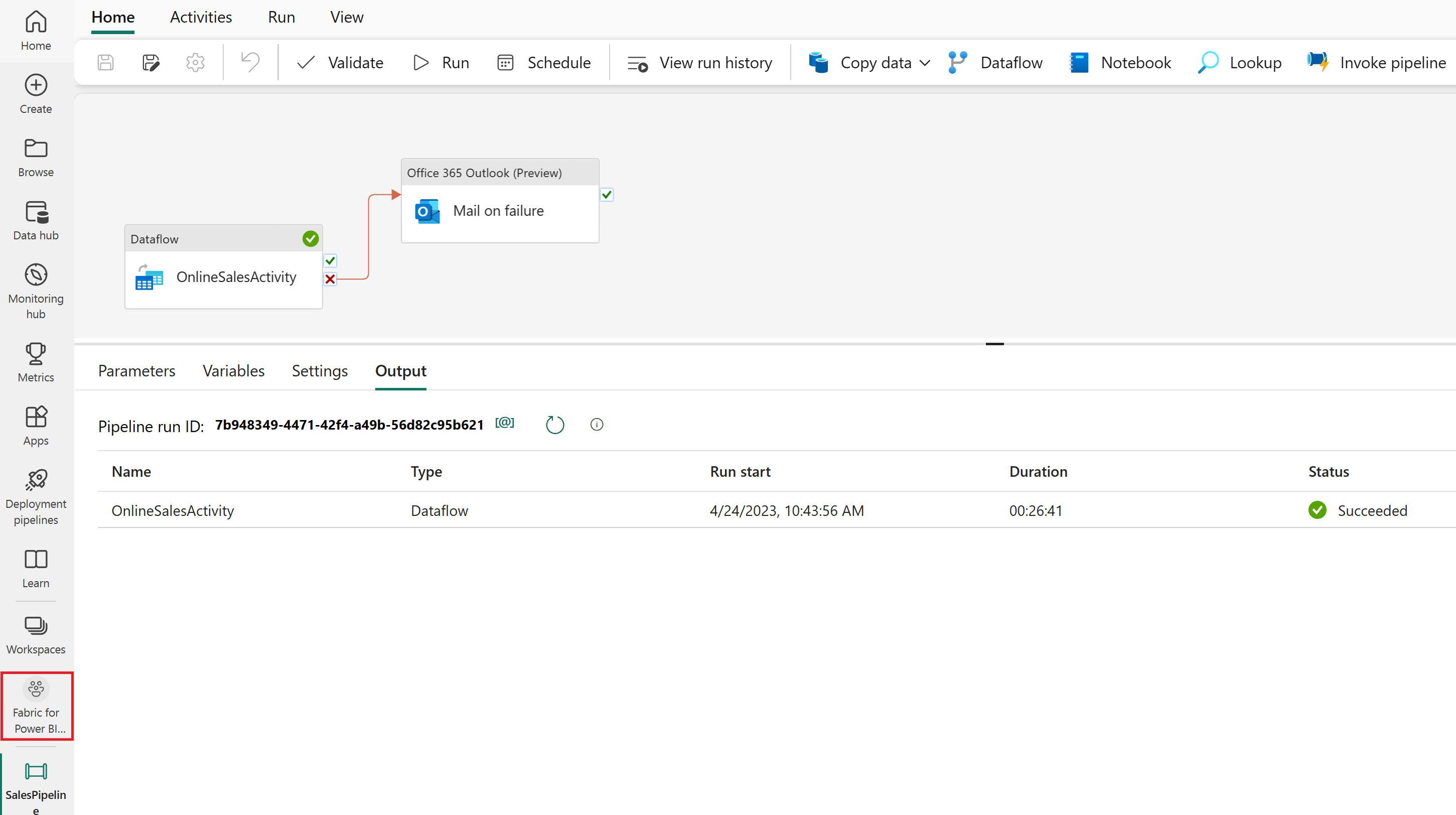

To monitor the pipeline’s status, view the Output table, which displays activity progress. The table refreshes automatically, or you can select the refresh icon to update it manually.

When the status shows Succeeded, return to your workspace to continue with the tutorial.

Create a semantic model in the Lakehouse

The data you loaded is almost ready for reporting. Next, use the tables in the lakehouse to create a semantic model—a metadata layer that organizes and transforms physical data into logical objects. The semantic model is designed to reflect your business structure and make the data easier to analyze.

In the workspace view, select the SalesLakehouse item.

Once in the Explorer, from the Home tab select New semantic model.

In the New semantic model window, name your model SalesModel. Then, expand both the dbo schema and the Tables group. Select the following tables, and select Confirm:

Table name DimCustomer DimDate DimEmployee DimProduct DimStore FactOnlineSales

Create relationships

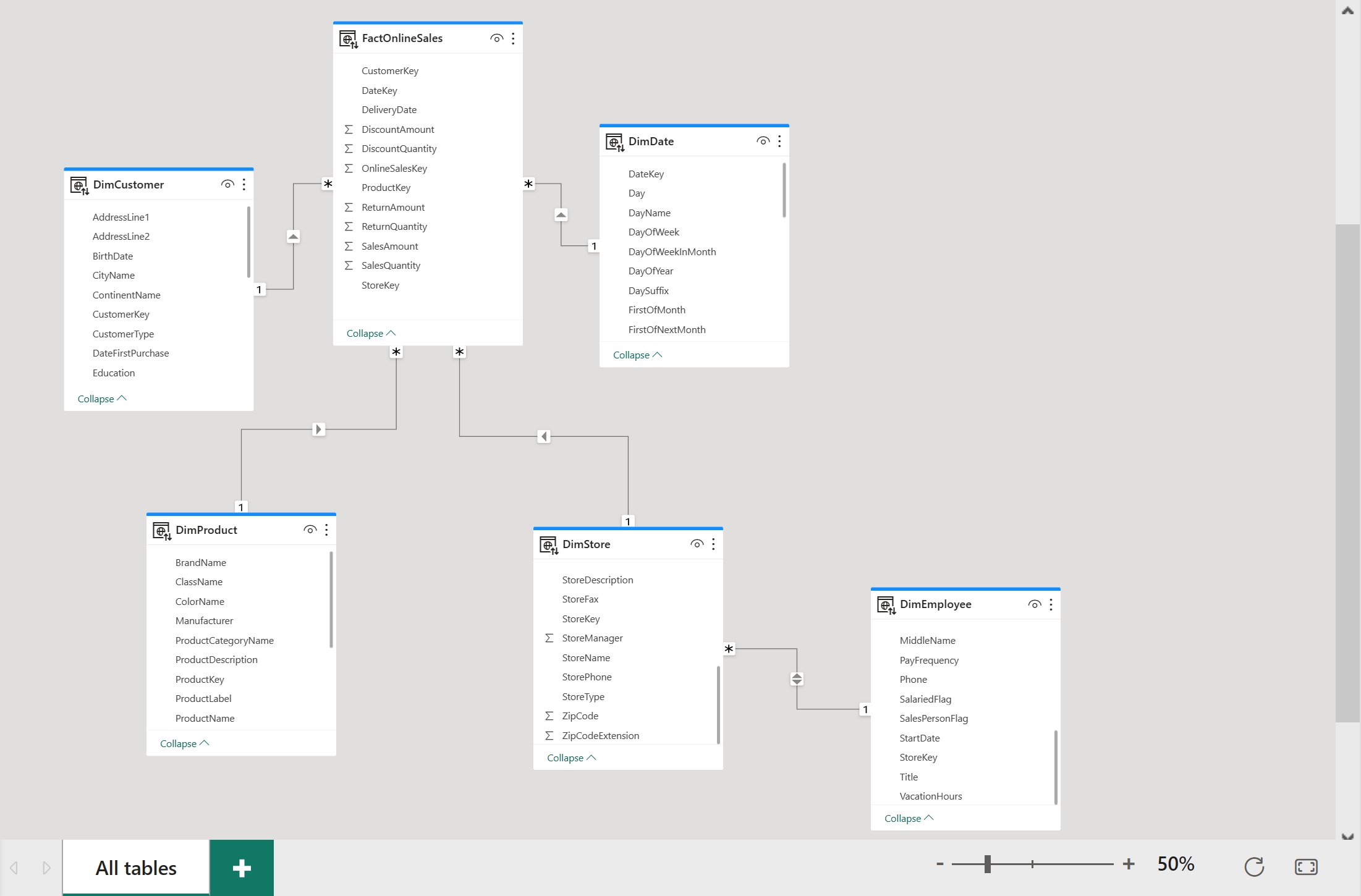

This model uses a star schema, common in data warehouses. The center of the star is a Fact table, surrounded by Dimension tables, which are related to the Fact table.

Create a relationship by dragging and dropping the CustomerKey column from the FactOnlineSales table to the CustomerKey column on the DimCustomer table.

In the Create Relationship window, ensure the following settings, then select Confirm:

Make this relationship active From: Table 1 (column) To: Table 2 (column) Cardinality Cross filter direction ☑ FactOnlineSales (CustomerKey) DimCustomer (CustomerKey) Many to one (*:1) Single Repeat these steps for each of the following relationships:

Make this relationship active From: Table 1 (column) To: Table 2 (column) Cardinality Cross filter direction ☑ FactOnlineSales (ProductKey) DimProduct (ProductKey) Many to one (*:1) Single ☑ FactOnlineSales (StoreKey) DimStore (StoreKey) Many to one (*:1) Single ☑ FactOnlineSales (DateKey) DimDate (DateKey) Many to one (*:1) Single ☑ DimStore (StoreKey) DimEmployee (StoreKey) Many to one (*:1) Both The following image shows a finished view of the semantic model with all relationships included.

Write a measure in DAX

Create a basic measure to calculate the total sales amount.

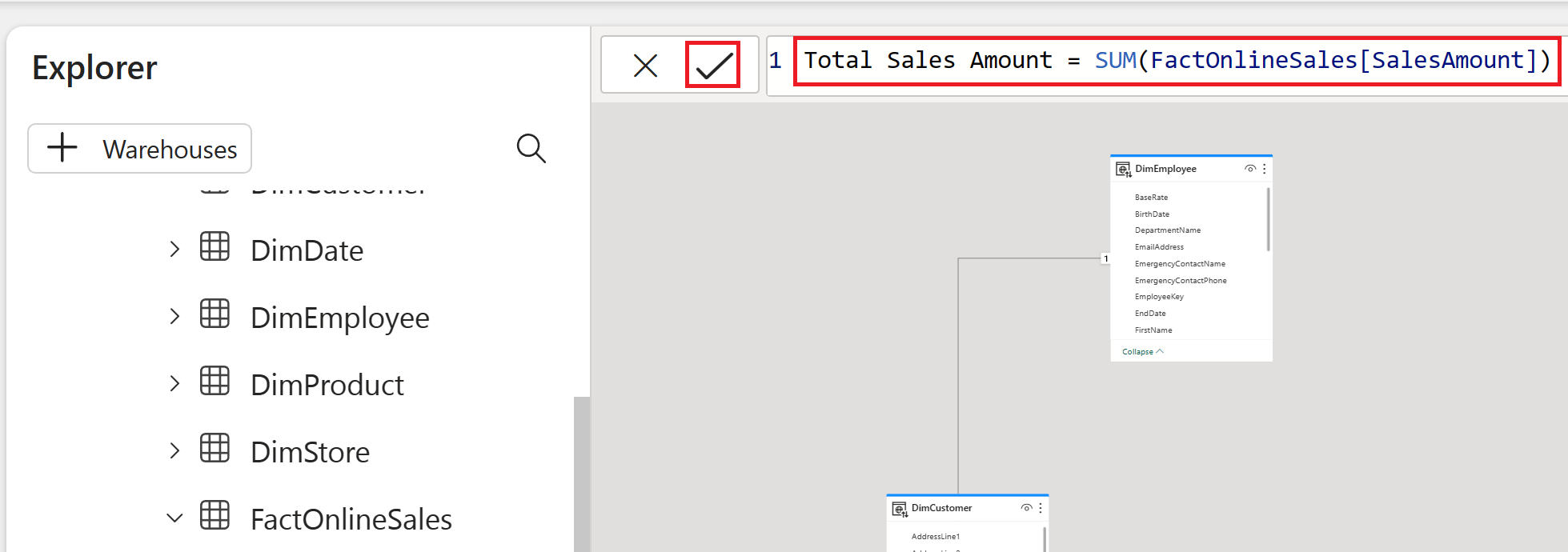

Select the FactOnlineSales table and on the Home tab, select New measure.

In the formula editor, enter the following DAX measure, then select the check mark to commit:

Total Sales Amount = SUM(FactOnlineSales[SalesAmount])

Autocreate a report

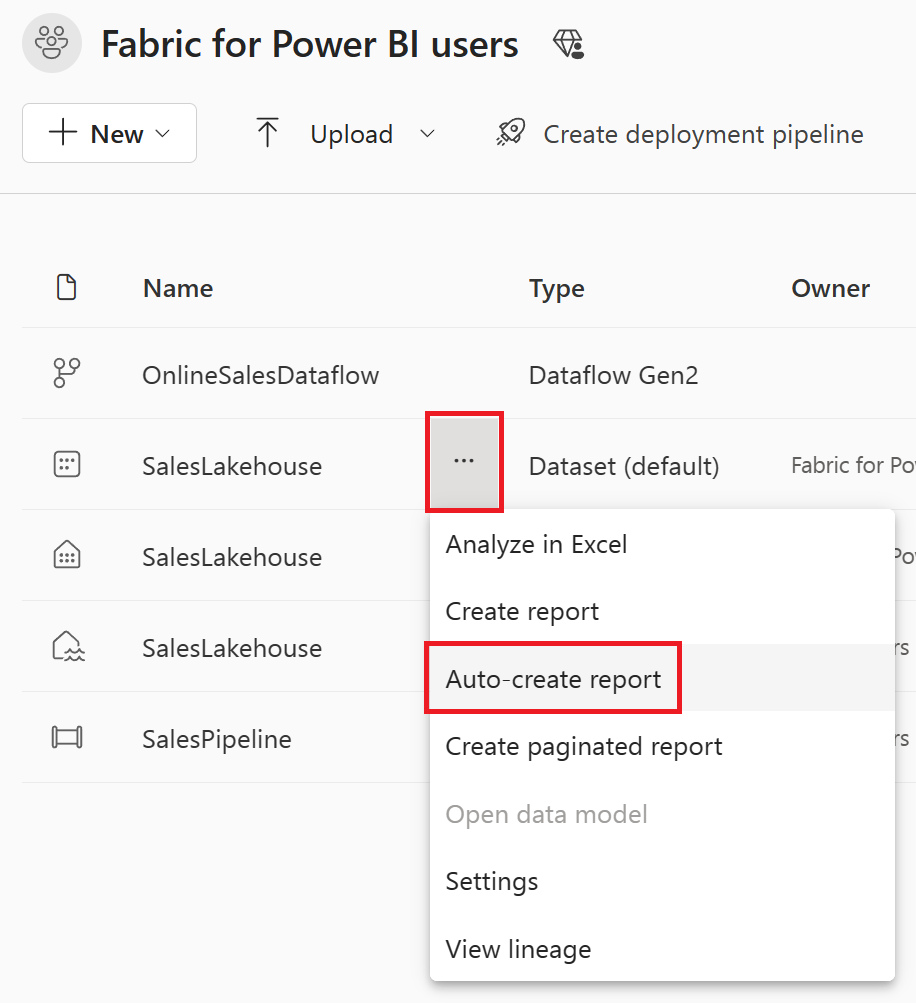

Now that you’ve modeled your data, you can quickly visualize and explore it using quick create.

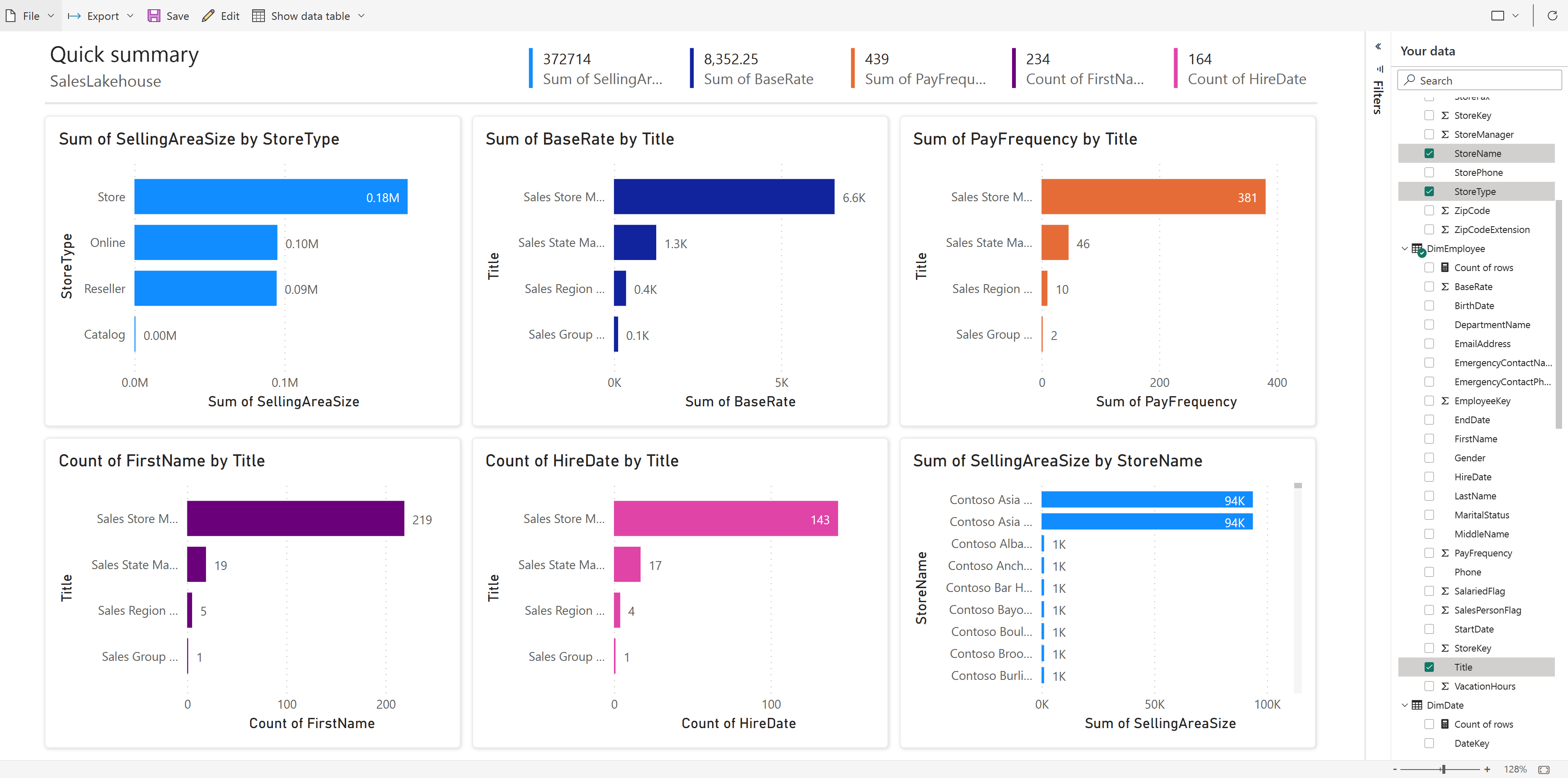

Return to your workspace by selecting it from the left side-rail. Hover over the SalesModel item, select the ellipses ( … ), and choose Auto-create report.

A report is automatically generated and updates dynamically based on column selections in the Your data pane.

Select Save from the ribbon to save a copy to the current workspace.

Note

To enter the full visual authoring experience, select Edit on the ribbon.

Learn more about quick create.

Related content

Congratulations on completing the tutorial! If you created a dedicated workspace for this tutorial, you can now choose to delete it, or remove individual items created during the process.

This tutorial demonstrates how Power BI users can easily uncover insights at any scale using Microsoft Fabric.