Run a Python notebook as a job by using the Databricks extension for Visual Studio Code

This article describes how to run a Python notebook as an Azure Databricks job by using the Databricks extension for Visual Studio Code. See What is the Databricks extension for Visual Studio Code?.

To run a Python file as an Azure Databricks job instead, see Run a Python file as a job by using the Databricks extension for Visual Studio Code. To run an R, Scala, or SQL notebook as an Azure Databricks job instead, see Run an R, Scala, or SQL notebook as a job by using the Databricks extension for Visual Studio Code.

This article assumes that you have already installed and set up the Databricks extension for Visual Studio Code. See Install the Databricks extension for Visual Studio Code.

With the extension and your code project opened, do the following:

In your code project, open the Python notebook that you want to run as a job.

Tip

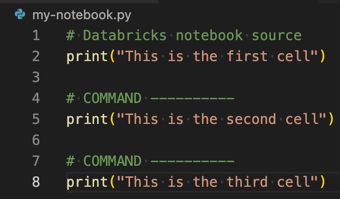

To create a Python notebook file in Visual Studio Code, begin by clicking File > New File, select Python File, and save the new file with a

.pyfile extension.To turn the

.pyfile into an Azure Databricks notebook, add the special comment# Databricks notebook sourceto the beginning of the file, and add the special comment# COMMAND ----------before each cell. For more information, see Import a file and convert it to a notebook.
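As a minimal sketch of the file layout described in the tip above, a two-cell Python notebook saved as a `.py` file might look like the following. The variable names and cell contents are hypothetical and chosen only for illustration. The blank lines around the cell marker are included for readability and are assumed not to be required.

```python
# Databricks notebook source
# First cell: the special comment above must be the first line of the file.
greeting = "Hello from the first cell"  # hypothetical example value
print(greeting)

# COMMAND ----------

# Second cell: the special comment above marks where this cell begins.
total = sum(range(5))  # 0 + 1 + 2 + 3 + 4
print(total)
```

Because the special comments are ordinary Python comments, the same file should also run as a plain Python script outside of Azure Databricks.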

Do one of the following:

In Explorer view (View > Explorer), right-click the notebook file, and then select Run File as Workflow on Databricks from the context menu.

In the notebook file editor’s title bar, click the drop-down arrow next to the play (Run or Debug) icon. Then in the drop-down list, click Run File as Workflow on Databricks.

A new editor tab appears, titled Databricks Job Run. The notebook runs as a job in the workspace, and the notebook and its output are displayed in the new editor tab’s Output area.

To view information about the job run, click the Task run ID link in the Databricks Job Run editor tab. Your workspace opens and the job run’s details are displayed in the workspace.
