This page describes how to configure Auto Loader streams to use file notification mode to incrementally discover and ingest cloud data.

In file notification mode, Auto Loader automatically sets up a notification service and queue service that subscribes to file events from the input directory. You can use file notifications to scale Auto Loader to ingest millions of files an hour. When compared to directory listing mode, file notification mode is more performant and scalable.

You can switch between file notifications and directory listing at any time and still maintain exactly-once data processing guarantees.

Note

File notification mode isn't supported for Azure premium storage accounts because premium accounts don't support queue storage.

Warning

Changing the source path for Auto Loader is not supported for file notification mode. If file notification mode is used and the path is changed, you might fail to ingest files that are already present in the new directory at the time of the directory update.

File notification mode with and without file events enabled on external locations

There are two ways to configure Auto Loader to use file notification mode:

(Recommended) File events: you use a single file notification queue for all streams that process files from a given external location.

This approach has the following advantages over the legacy file notification mode:

- Azure Databricks can set up subscriptions and file events in your cloud storage account for you without requiring that you supply additional credentials to Auto Loader using a service credential or other cloud-specific authentication options. See (Recommended) Enable file events for an external location.

- You have fewer Azure managed identity policies to create in your cloud storage account.

- Because you no longer need to create a queue for each Auto Loader stream, it's easier to avoid hitting the cloud provider notification limits listed in Cloud resources used in legacy Auto Loader file notification mode.

- Azure Databricks automatically manages the tuning of resource requirements, so you don't need to tune parameters such as cloudFiles.fetchParallelism.

- Cleanup functionality means that you don't need to worry as much about the lifecycle of notifications that are created in the cloud, such as when a stream is deleted or fully refreshed.

If you use Auto Loader in directory listing mode, Databricks recommends migrating to file notification mode with file events. Auto Loader with file events offers significant performance improvements. Start by enabling file events for your external location, then set cloudFiles.useManagedFileEvents in your Auto Loader stream configuration.

Legacy file notification mode: You manage file notification queues for each Auto Loader stream separately. Auto Loader automatically sets up a notification service and queue service that subscribes to file events from the input directory.

This is the legacy approach.

Use file notification mode with file events

This section describes how to create and update Auto Loader streams to use file events.

Before you begin

Setting up file events requires:

- An Azure Databricks workspace that is enabled for Unity Catalog.

- Permission to create storage credential and external location objects in Unity Catalog.

Auto Loader streams with file events require:

- Compute on Databricks Runtime 14.3 LTS or above.

Configuration instructions

The following instructions apply whether you are creating new Auto Loader streams or migrating existing streams to use the upgraded file notification mode with file events:

Create a storage credential and external location in Unity Catalog that grant access to the source location in cloud storage for your Auto Loader streams.

Enable file events for the external location. See (Recommended) Enable file events for an external location.

When you create a new Auto Loader stream or edit an existing one to work with the external location:

- If you have existing notifications-based Auto Loader streams that consume data from the external location, switch them off and delete the associated notification resources.

- Ensure that pathRewrites is not set (this is not a common option).

- Review the list of settings that Auto Loader ignores when it manages file notifications using file events. Avoid them in new Auto Loader streams and remove them from existing streams that you are migrating to this mode.

- Set the option cloudFiles.useManagedFileEvents to true in your Auto Loader code.

For example:

autoLoaderStream = (spark.readStream

.format("cloudFiles")

...

.option("cloudFiles.useManagedFileEvents", True)

...)

If you're using Lakeflow Spark Declarative Pipelines and you already have a pipeline with a streaming table, update it to include the useManagedFileEvents option:

CREATE OR REFRESH STREAMING LIVE TABLE <table-name>

AS SELECT <select clause expressions>

FROM STREAM read_files('abfss://path/to/external/location/or/volume',

format => '<format>',

useManagedFileEvents => 'True'

...

);

Unsupported Auto Loader settings

The following Auto Loader settings are unsupported when streams use file events:

| Setting | Change |
|---|---|
| useIncremental | You no longer need to decide between the efficiency of file notifications and the simplicity of directory listing. Auto Loader with file events comes in one mode. |
| useNotifications | There is only one queue and storage event subscription per external location. |
| cloudFiles.fetchParallelism | Auto Loader with file events does not offer a manual parallelism optimization. |
| cloudFiles.backfillInterval | Azure Databricks handles backfill automatically for external locations that are enabled for file events. |
| cloudFiles.pathRewrites | This option applies only when you mount external data locations to the DBFS, which is deprecated. |
| resourceTags | You should set resource tags using the cloud console. |

For managed file events best practices, see Best practices for Auto Loader with file events.
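As a rough illustration of the migration step, the following sketch filters an options dictionary before it is passed to a stream that uses file events. The helper name `strip_ignored_options` and the prefix-based matching are assumptions made for illustration. The setting names are copied from the table as written, so check them against the exact option keys used in your own streams.

```python
# Setting names copied from the table above, without the "cloudFiles." prefix.
IGNORED_WITH_FILE_EVENTS = (
    "useIncremental",
    "useNotifications",
    "fetchParallelism",
    "backfillInterval",
    "pathRewrites",
    "resourceTags",
)


def strip_ignored_options(options):
    """Return (kept, removed) dicts. Hypothetical helper, not a Databricks API."""
    kept, removed = {}, {}
    for key, value in options.items():
        # Compare on the name that follows the optional "cloudFiles." prefix.
        short = key[len("cloudFiles."):] if key.startswith("cloudFiles.") else key
        if short.startswith(IGNORED_WITH_FILE_EVENTS):
            removed[key] = value
        else:
            kept[key] = value
    # Opt in to file events, as described in the configuration instructions.
    kept["cloudFiles.useManagedFileEvents"] = "true"
    return kept, removed


legacy = {
    "cloudFiles.format": "json",
    "cloudFiles.useNotifications": "true",
    "cloudFiles.fetchParallelism": "4",
}
kept, removed = strip_ignored_options(legacy)
print(kept)
print(sorted(removed))
```

Returning the removed keys separately makes it easy to log what was dropped during a migration.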

Limitations on Auto Loader with file events

The file events service optimizes file discovery by caching the most recently created files. If Auto Loader runs infrequently, this cache can expire, and Auto Loader falls back to directory listing to discover files and update the cache. To avoid this scenario, invoke Auto Loader at least once every seven days.
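One way to guard against the cache expiring is to track when a stream last ran and flag it before the seven-day window closes. The sketch below assumes you record the last run time yourself. The function name `needs_run_soon` and the one-day safety margin are illustrative choices, not part of Auto Loader.

```python
from datetime import datetime, timedelta, timezone

# Seven days comes from the limitation described above.
MAX_GAP = timedelta(days=7)


def needs_run_soon(last_run, now=None, margin=timedelta(days=1)):
    """Return True when the time since last_run is within `margin` of seven days."""
    now = now or datetime.now(timezone.utc)
    return now - last_run >= MAX_GAP - margin


last = datetime(2025, 1, 1, tzinfo=timezone.utc)
print(needs_run_soon(last, now=datetime(2025, 1, 3, tzinfo=timezone.utc)))
print(needs_run_soon(last, now=datetime(2025, 1, 7, tzinfo=timezone.utc)))
```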

For a general list of limitations on file events, see File events limitations.

Manage file notification queues for each Auto Loader stream separately (legacy)

Important

You need elevated permissions to automatically configure cloud infrastructure for file notification mode. Contact your cloud administrator or workspace admin. See the required permissions sections for your cloud provider later on this page.

Cloud resources used in legacy Auto Loader file notification mode

Auto Loader can set up file notifications for you automatically when you set the option cloudFiles.useNotifications to true and provide the necessary permissions to create cloud resources. In addition, you might need to provide additional options to grant Auto Loader authorization to create these resources.

The following table lists the resources that are created by Auto Loader for each cloud provider.

| Cloud Storage | Subscription Service | Queue Service | Prefix * | Limit ** |

|---|---|---|---|---|

| Amazon S3 | AWS SNS | AWS SQS | databricks-auto-ingest | 100 per S3 bucket |

| ADLS | Azure Event Grid | Azure Queue Storage | databricks | 500 per storage account |

| GCS | Google Pub/Sub | Google Pub/Sub | databricks-auto-ingest | 100 per GCS bucket |

| Azure Blob Storage | Azure Event Grid | Azure Queue Storage | databricks | 500 per storage account |

* Auto Loader names the resources with this prefix.

** How many concurrent file notification pipelines can be launched

If you must run more file-notification-based Auto Loader streams than allowed by these limits, you can use file events or a service such as AWS Lambda, Azure Functions, or Google Cloud Functions to fan out notifications from a single queue that listens to an entire container or bucket into directory-specific queues.
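To make the limits concrete, the following sketch checks how many more legacy file notification streams fit under the limit for a storage type. The limit values are copied from the table above. The helper name `remaining_capacity` is hypothetical, and it assumes you already know how many streams are active for the bucket or storage account.

```python
# Limits copied from the table above: concurrent file notification pipelines.
LIMITS = {
    "Amazon S3": 100,           # per S3 bucket
    "ADLS": 500,                # per storage account
    "GCS": 100,                 # per GCS bucket
    "Azure Blob Storage": 500,  # per storage account
}


def remaining_capacity(cloud_storage, active_streams):
    """Hypothetical helper: streams that can still be added before the limit."""
    return max(LIMITS[cloud_storage] - active_streams, 0)


print(remaining_capacity("Amazon S3", 92))
print(remaining_capacity("ADLS", 510))
```

A result of zero suggests moving to file events or a fan-out design as described above.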

Legacy file notification events

Amazon S3 provides an ObjectCreated event when a file is uploaded to an S3 bucket regardless of whether it was uploaded by a put or multi-part upload.

Azure Data Lake Storage provides different event notifications for files that appear in your storage container.

- Auto Loader listens for the FlushWithClose event for processing a file.

- Auto Loader streams support the RenameFile action for discovering files. RenameFile actions require an API request to the storage system to get the size of the renamed file.

- Auto Loader streams created with Databricks Runtime 9.0 and after support the RenameDirectory action for discovering files. RenameDirectory actions require API requests to the storage system to list the contents of the renamed directory.

Google Cloud Storage provides an OBJECT_FINALIZE event when a file is uploaded, which includes overwrites and file copies. Failed uploads do not generate this event.

Note

Cloud providers do not guarantee 100% delivery of all file events under very rare conditions and do not provide strict SLAs on the latency of the file events. Databricks recommends that you trigger regular backfills with Auto Loader by using the cloudFiles.backfillInterval option to guarantee that all files are discovered within a given SLA if data completeness is a requirement. Triggering regular backfills does not cause duplicates.
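The backfill recommendation can be sketched as an options dictionary for a legacy file notification stream. The helper name `legacy_notification_options` and the default interval of `"1 day"` are assumptions for illustration. Choose an interval that matches your own completeness SLA.

```python
def legacy_notification_options(file_format, backfill_interval="1 day"):
    """Build Auto Loader options for legacy file notification mode with backfills."""
    return {
        "cloudFiles.format": file_format,
        "cloudFiles.useNotifications": "true",
        "cloudFiles.backfillInterval": backfill_interval,
    }


options = legacy_notification_options("json")
print(options)

# In a Databricks notebook, where `spark` is predefined, the dictionary could be
# applied like this (source_path is a placeholder for your input directory):
# df = spark.readStream.format("cloudFiles").options(**options).load(source_path)
```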

Required permissions for configuring file notification for Azure Data Lake Storage and Azure Blob Storage

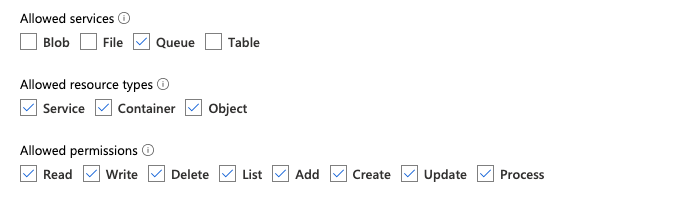

You must have read permissions for the input directory. See Azure Blob Storage.

To use file notification mode, you must provide authentication credentials for setting up and accessing the event notification services.

You can authenticate using one of the following methods:

- In Databricks Runtime 16.1 and above: Databricks service credential (recommended): Create a service credential using a managed identity and a Databricks access connector.

- Service principal: Create a Microsoft Entra ID (formerly Azure Active Directory) app and service principal in the form of client ID and client secret.

After obtaining authentication credentials, assign the necessary permissions either to the Databricks access connector (for service credentials) or to the Microsoft Entra ID app (for a service principal).

Using Azure built-in roles

Assign the access connector the following roles to the storage account in which the input path resides:

- Contributor: This role is for setting up resources in your storage account, such as queues and event subscriptions.

- Storage Queue Data Contributor: This role is for performing queue operations such as retrieving and deleting messages from the queues. This role is required only when you provide a service principal without a connection string.

Assign this access connector the following role to the related resource group:

- EventGrid EventSubscription Contributor: This role is for performing Azure Event Grid (Event Grid) subscription operations such as creating or listing event subscriptions.

For more information, see Assign Azure roles using the Azure portal.

Using a custom role

If you are concerned with the excessive permissions required for the preceding roles, you can create a Custom Role with at least the following permissions, listed below in Azure role JSON format:

"permissions": [
  {
    "actions": [
      "Microsoft.EventGrid/eventSubscriptions/write",
      "Microsoft.EventGrid/eventSubscriptions/read",
      "Microsoft.EventGrid/eventSubscriptions/delete",
      "Microsoft.EventGrid/locations/eventSubscriptions/read",
      "Microsoft.Storage/storageAccounts/read",
      "Microsoft.Storage/storageAccounts/write",
      "Microsoft.Storage/storageAccounts/queueServices/read",
      "Microsoft.Storage/storageAccounts/queueServices/write",
      "Microsoft.Storage/storageAccounts/queueServices/queues/write",
      "Microsoft.Storage/storageAccounts/queueServices/queues/read",
      "Microsoft.Storage/storageAccounts/queueServices/queues/delete"
    ],
    "notActions": [],
    "dataActions": [
      "Microsoft.Storage/storageAccounts/queueServices/queues/messages/delete",
      "Microsoft.Storage/storageAccounts/queueServices/queues/messages/read",
      "Microsoft.Storage/storageAccounts/queueServices/queues/messages/write",
      "Microsoft.Storage/storageAccounts/queueServices/queues/messages/process/action"
    ],
    "notDataActions": []
  }
]

Then, you can assign this custom role to your access connector.

For more information, see Assign Azure roles using the Azure portal.
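Before assigning a custom role, it can help to confirm that it contains every permission listed in the custom role section. The sketch below compares a role's first permissions block against those lists. The helper name `missing_permissions` is hypothetical, and the check is a literal string comparison that does not expand wildcard actions such as `Microsoft.Storage/*`.

```python
REQUIRED_ACTIONS = {
    "Microsoft.EventGrid/eventSubscriptions/write",
    "Microsoft.EventGrid/eventSubscriptions/read",
    "Microsoft.EventGrid/eventSubscriptions/delete",
    "Microsoft.EventGrid/locations/eventSubscriptions/read",
    "Microsoft.Storage/storageAccounts/read",
    "Microsoft.Storage/storageAccounts/write",
    "Microsoft.Storage/storageAccounts/queueServices/read",
    "Microsoft.Storage/storageAccounts/queueServices/write",
    "Microsoft.Storage/storageAccounts/queueServices/queues/write",
    "Microsoft.Storage/storageAccounts/queueServices/queues/read",
    "Microsoft.Storage/storageAccounts/queueServices/queues/delete",
}
REQUIRED_DATA_ACTIONS = {
    "Microsoft.Storage/storageAccounts/queueServices/queues/messages/delete",
    "Microsoft.Storage/storageAccounts/queueServices/queues/messages/read",
    "Microsoft.Storage/storageAccounts/queueServices/queues/messages/write",
    "Microsoft.Storage/storageAccounts/queueServices/queues/messages/process/action",
}


def missing_permissions(role):
    """Return required entries absent from the role's first permissions block."""
    block = role["permissions"][0]
    return {
        "actions": sorted(REQUIRED_ACTIONS - set(block.get("actions", []))),
        "dataActions": sorted(REQUIRED_DATA_ACTIONS - set(block.get("dataActions", []))),
    }


# Example role that is missing the queue delete action.
queue_delete = "Microsoft.Storage/storageAccounts/queueServices/queues/delete"
role = {
    "permissions": [
        {
            "actions": sorted(REQUIRED_ACTIONS - {queue_delete}),
            "notActions": [],
            "dataActions": sorted(REQUIRED_DATA_ACTIONS),
            "notDataActions": [],
        }
    ]
}
print(missing_permissions(role))
```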

Required permissions for configuring file notification for Amazon S3

You must have read permissions for the input directory. See S3 connection details for more details.

To use file notification mode, attach the following JSON policy document to your IAM user or role. This IAM role is required to create a service credential for Auto Loader to authenticate with.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "DatabricksAutoLoaderSetup",

"Effect": "Allow",

"Action": [

"s3:GetBucketNotification",

"s3:PutBucketNotification",

"sns:ListSubscriptionsByTopic",

"sns:GetTopicAttributes",

"sns:SetTopicAttributes",

"sns:CreateTopic",

"sns:TagResource",

"sns:Publish",

"sns:Subscribe",

"sqs:CreateQueue",

"sqs:DeleteMessage",

"sqs:ReceiveMessage",

"sqs:SendMessage",

"sqs:GetQueueUrl",

"sqs:GetQueueAttributes",

"sqs:SetQueueAttributes",

"sqs:TagQueue",

"sqs:ChangeMessageVisibility",

"sqs:PurgeQueue"

],

"Resource": [

"arn:aws:s3:::<bucket-name>",

"arn:aws:sqs:<region>:<account-number>:databricks-auto-ingest-*",

"arn:aws:sns:<region>:<account-number>:databricks-auto-ingest-*"

]

},

{

"Sid": "DatabricksAutoLoaderList",

"Effect": "Allow",

"Action": ["sqs:ListQueues", "sqs:ListQueueTags", "sns:ListTopics"],

"Resource": "*"

},

{

"Sid": "DatabricksAutoLoaderTeardown",

"Effect": "Allow",

"Action": ["sns:Unsubscribe", "sns:DeleteTopic", "sqs:DeleteQueue"],

"Resource": [

"arn:aws:sqs:<region>:<account-number>:databricks-auto-ingest-*",

"arn:aws:sns:<region>:<account-number>:databricks-auto-ingest-*"

]

}

]

}

where:

- <bucket-name>: The S3 bucket name where your stream will read files, for example, auto-logs. You can use * as a wildcard, for example, databricks-*-logs. To find out the underlying S3 bucket for your DBFS path, you can list all the DBFS mount points in a notebook by running %fs mounts.

- <region>: The AWS region where the S3 bucket resides, for example, us-west-2. If you don't want to specify the region, use *.

- <account-number>: The AWS account number that owns the S3 bucket, for example, 123456789012. If you don't want to specify the account number, use *.

The string databricks-auto-ingest-* in the SQS and SNS ARN specification is the name prefix that the cloudFiles source uses when creating SQS and SNS services. Since Azure Databricks sets up the notification services in the initial run of the stream, you can use a policy with reduced permissions after the initial run (for example, stop the stream and then restart it).
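The placeholder substitution for the setup policy's Resource list can be sketched as follows. The function name `setup_resources` is hypothetical. The ARN patterns and the `*` wildcard defaults follow the policy and placeholder descriptions above.

```python
def setup_resources(bucket_name, region="*", account_number="*"):
    """Fill in the Resource ARNs used by the DatabricksAutoLoaderSetup statement."""
    prefix = "databricks-auto-ingest-*"  # name prefix used by the cloudFiles source
    return [
        f"arn:aws:s3:::{bucket_name}",
        f"arn:aws:sqs:{region}:{account_number}:{prefix}",
        f"arn:aws:sns:{region}:{account_number}:{prefix}",
    ]


for arn in setup_resources("auto-logs", "us-west-2", "123456789012"):
    print(arn)
```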

Note

The preceding policy is concerned only with the permissions needed for setting up file notification services, namely S3 bucket notification, SNS, and SQS services and assumes you already have read access to the S3 bucket. If you need to add S3 read-only permissions, add the following to the Action list in the DatabricksAutoLoaderSetup statement in the JSON document:

- s3:ListBucket

- s3:GetObject

Reduced permissions after initial setup

The resource setup permissions described above are required only during the initial run of the stream. After the first run, you can switch to the following IAM policy with reduced permissions.

Important

With the reduced permissions, you can't start new streaming queries or recreate resources in case of failures (for example, the SQS queue has been accidentally deleted); you also can't use the cloud resource management API to list or tear down resources.

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "DatabricksAutoLoaderUse",

"Effect": "Allow",

"Action": [

"s3:GetBucketNotification",

"sns:ListSubscriptionsByTopic",

"sns:GetTopicAttributes",

"sns:TagResource",

"sns:Publish",

"sqs:DeleteMessage",

"sqs:ReceiveMessage",

"sqs:SendMessage",

"sqs:GetQueueUrl",

"sqs:GetQueueAttributes",

"sqs:TagQueue",

"sqs:ChangeMessageVisibility",

"sqs:PurgeQueue"

],

"Resource": [

"arn:aws:sqs:<region>:<account-number>:<queue-name>",

"arn:aws:sns:<region>:<account-number>:<topic-name>",

"arn:aws:s3:::<bucket-name>"

]

},

{

"Effect": "Allow",

"Action": ["s3:GetBucketLocation", "s3:ListBucket"],

"Resource": ["arn:aws:s3:::<bucket-name>"]

},

{

"Effect": "Allow",

"Action": ["s3:PutObject", "s3:PutObjectAcl", "s3:GetObject", "s3:DeleteObject"],

"Resource": ["arn:aws:s3:::<bucket-name>/*"]

},

{

"Sid": "DatabricksAutoLoaderListTopics",

"Effect": "Allow",

"Action": ["sqs:ListQueues", "sqs:ListQueueTags", "sns:ListTopics"],

"Resource": "arn:aws:sns:<region>:<account-number>:*"

}

]

}
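To see what the reduced policy gives up, the sketch below compares the Action list of the DatabricksAutoLoaderSetup statement with that of the DatabricksAutoLoaderUse statement. Both lists are copied from the policies on this page. Only those two statements are compared, so the list, teardown, and S3 object statements are out of scope here.

```python
SETUP_ACTIONS = {
    "s3:GetBucketNotification", "s3:PutBucketNotification",
    "sns:ListSubscriptionsByTopic", "sns:GetTopicAttributes",
    "sns:SetTopicAttributes", "sns:CreateTopic", "sns:TagResource",
    "sns:Publish", "sns:Subscribe",
    "sqs:CreateQueue", "sqs:DeleteMessage", "sqs:ReceiveMessage",
    "sqs:SendMessage", "sqs:GetQueueUrl", "sqs:GetQueueAttributes",
    "sqs:SetQueueAttributes", "sqs:TagQueue",
    "sqs:ChangeMessageVisibility", "sqs:PurgeQueue",
}
REDUCED_ACTIONS = {
    "s3:GetBucketNotification",
    "sns:ListSubscriptionsByTopic", "sns:GetTopicAttributes",
    "sns:TagResource", "sns:Publish",
    "sqs:DeleteMessage", "sqs:ReceiveMessage", "sqs:SendMessage",
    "sqs:GetQueueUrl", "sqs:GetQueueAttributes", "sqs:TagQueue",
    "sqs:ChangeMessageVisibility", "sqs:PurgeQueue",
}

# Actions needed only while resources are being created on the first run.
setup_only = sorted(SETUP_ACTIONS - REDUCED_ACTIONS)
for action in setup_only:
    print(action)
```

The actions that drop out are the ones that create or reconfigure the bucket notification, topic, subscription, and queue. This matches the restriction that you can't start new streaming queries or recreate resources with the reduced policy.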

Required permissions for configuring file notification for GCS

You must have list and get permissions on your GCS bucket and on all the objects. For details, see the Google documentation on IAM permissions.

To use file notification mode, you need to add permissions for the GCS service account and the service account used to access the Google Cloud Pub/Sub resources.

Add the Pub/Sub Publisher role to the GCS service account. This allows the account to publish event notification messages from your GCS buckets to Google Cloud Pub/Sub.

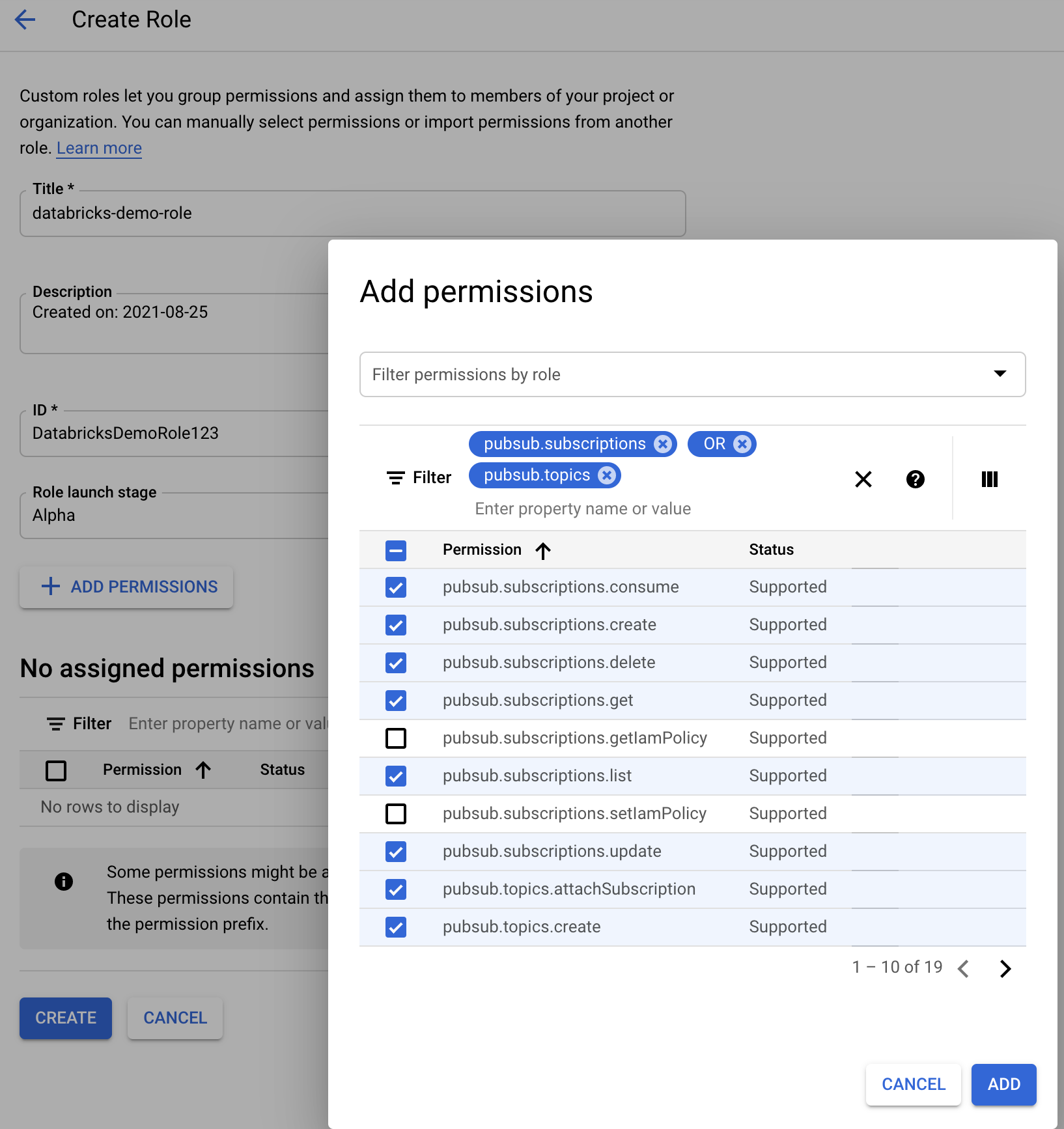

As for the service account used for the Google Cloud Pub/Sub resources, you need to add the following permissions. This service account is automatically created when you create a Databricks service credential. Service credential support is available in Databricks Runtime 16.1 and above.

pubsub.subscriptions.consume

pubsub.subscriptions.create

pubsub.subscriptions.delete

pubsub.subscriptions.get

pubsub.subscriptions.list

pubsub.subscriptions.update

pubsub.topics.attachSubscription

pubsub.topics.detachSubscription

pubsub.topics.create

pubsub.topics.delete

pubsub.topics.get

pubsub.topics.list

pubsub.topics.update

To do this, you can either create an IAM custom role with these permissions or assign pre-existing GCP roles to cover these permissions.
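If you choose the custom role route, the permission list above can be assembled into a role definition document. The sketch below assumes the `title`, `description`, `stage`, and `includedPermissions` field layout used by Google Cloud IAM role definitions. Confirm the layout against the Google Cloud documentation before using it. The function name, title, and description text are placeholders.

```python
import json

# Permissions copied from the list above.
PUBSUB_PERMISSIONS = [
    "pubsub.subscriptions.consume",
    "pubsub.subscriptions.create",
    "pubsub.subscriptions.delete",
    "pubsub.subscriptions.get",
    "pubsub.subscriptions.list",
    "pubsub.subscriptions.update",
    "pubsub.topics.attachSubscription",
    "pubsub.topics.detachSubscription",
    "pubsub.topics.create",
    "pubsub.topics.delete",
    "pubsub.topics.get",
    "pubsub.topics.list",
    "pubsub.topics.update",
]


def custom_role_definition(title):
    """Hypothetical helper that builds a role definition as a plain dict."""
    return {
        "title": title,
        "description": "Pub/Sub permissions for Auto Loader file notification mode",
        "stage": "GA",
        "includedPermissions": list(PUBSUB_PERMISSIONS),
    }


definition = custom_role_definition("Auto Loader file notifications")
print(json.dumps(definition, indent=2))
```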

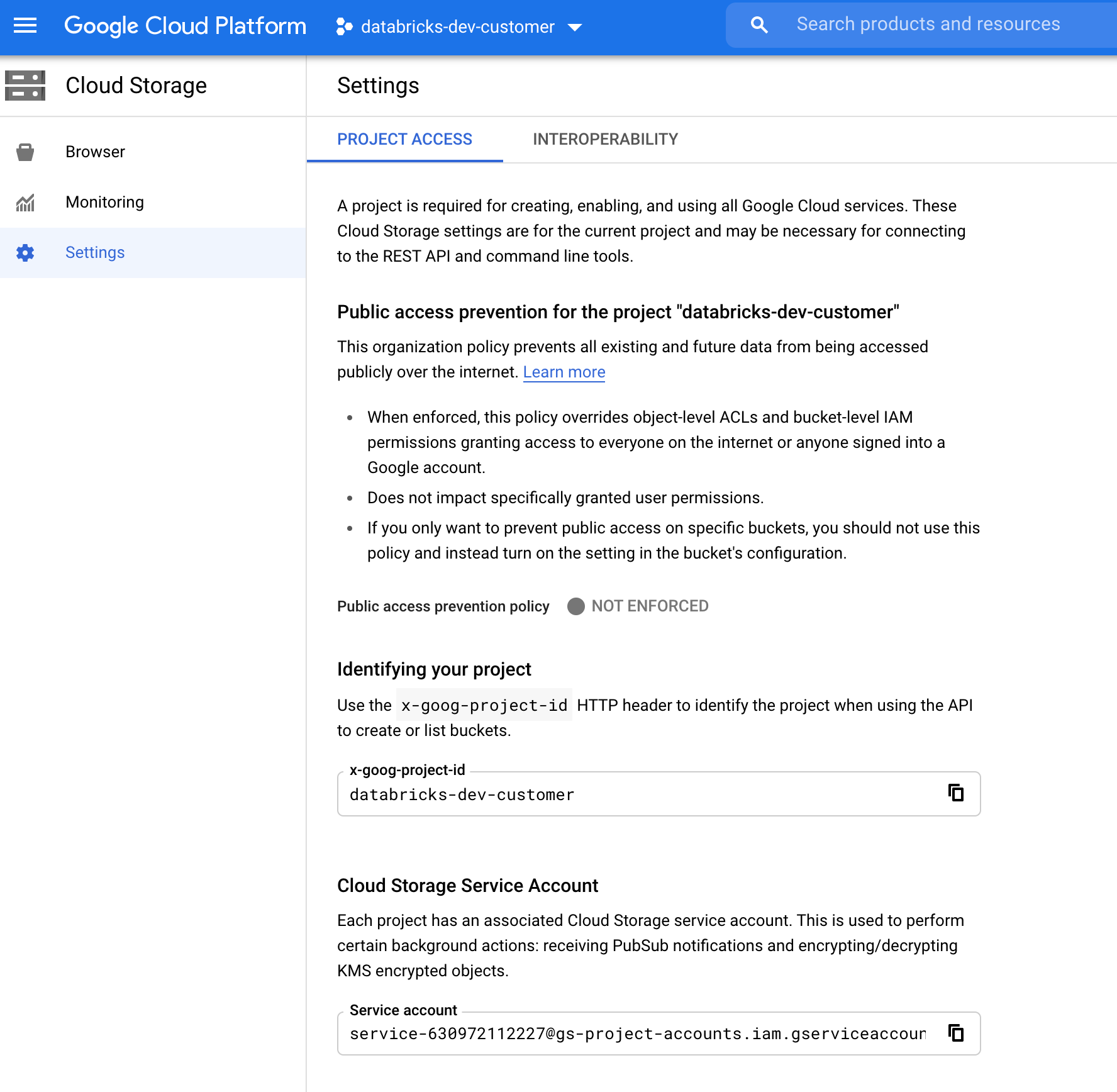

Finding the GCS Service Account

In the Google Cloud Console for the corresponding project, navigate to Cloud Storage > Settings.

The section “Cloud Storage Service Account” contains the email of the GCS service account.

Creating a Custom Google Cloud IAM Role for File Notification Mode

In the Google Cloud console for the corresponding project, navigate to IAM & Admin > Roles. Then, either create a role at the top or update an existing role. In the screen for role creation or edit, click Add Permissions. A menu appears in which you can add the desired permissions to the role.

Manually configure or manage file notification resources

Privileged users can manually configure or manage file notification resources.

- Set up the file notification services manually through the cloud provider and manually specify the queue identifier. See File notification options for more details.

- Use Python or Scala APIs to create or manage the notifications and queuing services, as shown in the following examples:

Note

You must have appropriate permissions to configure or modify cloud infrastructure. See permissions documentation for Azure, S3, or GCS.

Python

# Databricks notebook source

# MAGIC %md ## Python bindings for CloudFiles Resource Managers for all 3 clouds

# COMMAND ----------

#####################################

## Creating a ResourceManager in AWS

#####################################

# Using a Databricks service credential

manager = spark._jvm.com.databricks.sql.CloudFilesAWSResourceManager \

.newManager() \

.option("cloudFiles.region", <region>) \

.option("path", <path-to-specific-bucket-and-folder>) \

.option("databricks.serviceCredential", <service-credential-name>) \

.create()

# Using AWS access key and secret key

manager = spark._jvm.com.databricks.sql.CloudFilesAWSResourceManager \

.newManager() \

.option("cloudFiles.region", <region>) \

.option("cloudFiles.awsAccessKey", <aws-access-key>) \

.option("cloudFiles.awsSecretKey", <aws-secret-key>) \

.option("cloudFiles.roleArn", <role-arn>) \

.option("cloudFiles.roleExternalId", <role-external-id>) \

.option("cloudFiles.roleSessionName", <role-session-name>) \

.option("cloudFiles.stsEndpoint", <sts-endpoint>) \

.option("path", <path-to-specific-bucket-and-folder>) \

.create()

#######################################

## Creating a ResourceManager in Azure

#######################################

# Using a Databricks service credential

manager = spark._jvm.com.databricks.sql.CloudFilesAzureResourceManager \

.newManager() \

.option("cloudFiles.resourceGroup", <resource-group>) \

.option("cloudFiles.subscriptionId", <subscription-id>) \

.option("databricks.serviceCredential", <service-credential-name>) \

.option("path", <path-to-specific-container-and-folder>) \

.create()

# Using an Azure service principal

manager = spark._jvm.com.databricks.sql.CloudFilesAzureResourceManager \

.newManager() \

.option("cloudFiles.connectionString", <connection-string>) \

.option("cloudFiles.resourceGroup", <resource-group>) \

.option("cloudFiles.subscriptionId", <subscription-id>) \

.option("cloudFiles.tenantId", <tenant-id>) \

.option("cloudFiles.clientId", <service-principal-client-id>) \

.option("cloudFiles.clientSecret", <service-principal-client-secret>) \

.option("path", <path-to-specific-container-and-folder>) \

.create()

#######################################

## Creating a ResourceManager in GCP

#######################################

# Using a Databricks service credential

manager = spark._jvm.com.databricks.sql.CloudFilesGCPResourceManager \

.newManager() \

.option("cloudFiles.projectId", <project-id>) \

.option("databricks.serviceCredential", <service-credential-name>) \

.option("path", <path-to-specific-bucket-and-folder>) \

.create()

# Using a Google service account

manager = spark._jvm.com.databricks.sql.CloudFilesGCPResourceManager \

.newManager() \

.option("cloudFiles.projectId", <project-id>) \

.option("cloudFiles.client", <client-id>) \

.option("cloudFiles.clientEmail", <client-email>) \

.option("cloudFiles.privateKey", <private-key>) \

.option("cloudFiles.privateKeyId", <private-key-id>) \

.option("path", <path-to-specific-bucket-and-folder>) \

.create()

# Set up a queue and a topic subscribed to the path provided in the manager.

manager.setUpNotificationServices(<resource-suffix>)

# List notification services created by Auto Loader

from pyspark.sql import DataFrame

df = DataFrame(manager.listNotificationServices(), spark)

# Tear down the notification services created for a specific stream ID.

# Stream ID is a GUID string that you can find in the list result above.

manager.tearDownNotificationServices(<stream-id>)

Scala

/////////////////////////////////////

// Creating a ResourceManager in AWS

/////////////////////////////////////

import com.databricks.sql.CloudFilesAWSResourceManager

/**

* Using a Databricks service credential

*/

val manager = CloudFilesAWSResourceManager

.newManager

.option("cloudFiles.region", <region>) // optional, will use the region of the EC2 instances by default

.option("databricks.serviceCredential", <service-credential-name>)

.option("path", <path-to-specific-bucket-and-folder>) // required only for setUpNotificationServices

.create()

/**

* Using AWS access key and secret key

*/

val manager = CloudFilesAWSResourceManager

.newManager

.option("cloudFiles.region", <region>)

.option("cloudFiles.awsAccessKey", <aws-access-key>)

.option("cloudFiles.awsSecretKey", <aws-secret-key>)

.option("cloudFiles.roleArn", <role-arn>)

.option("cloudFiles.roleExternalId", <role-external-id>)

.option("cloudFiles.roleSessionName", <role-session-name>)

.option("cloudFiles.stsEndpoint", <sts-endpoint>)

.option("path", <path-to-specific-bucket-and-folder>) // required only for setUpNotificationServices

.create()

///////////////////////////////////////

// Creating a ResourceManager in Azure

///////////////////////////////////////

import com.databricks.sql.CloudFilesAzureResourceManager

/**

* Using a Databricks service credential

*/

val manager = CloudFilesAzureResourceManager

.newManager

.option("cloudFiles.resourceGroup", <resource-group>)

.option("cloudFiles.subscriptionId", <subscription-id>)

.option("databricks.serviceCredential", <service-credential-name>)

.option("path", <path-to-specific-container-and-folder>) // required only for setUpNotificationServices

.create()

/**

* Using an Azure service principal

*/

val manager = CloudFilesAzureResourceManager

.newManager

.option("cloudFiles.connectionString", <connection-string>)

.option("cloudFiles.resourceGroup", <resource-group>)

.option("cloudFiles.subscriptionId", <subscription-id>)

.option("cloudFiles.tenantId", <tenant-id>)

.option("cloudFiles.clientId", <service-principal-client-id>)

.option("cloudFiles.clientSecret", <service-principal-client-secret>)

.option("path", <path-to-specific-container-and-folder>) // required only for setUpNotificationServices

.create()

///////////////////////////////////////

// Creating a ResourceManager in GCP

///////////////////////////////////////

import com.databricks.sql.CloudFilesGCPResourceManager

/**

* Using a Databricks service credential

*/

val manager = CloudFilesGCPResourceManager

.newManager

.option("cloudFiles.projectId", <project-id>)

.option("databricks.serviceCredential", <service-credential-name>)

.option("path", <path-to-specific-bucket-and-folder>) // Required only for setUpNotificationServices.

.create()

/**

* Using a Google service account

*/

val manager = CloudFilesGCPResourceManager

.newManager

.option("cloudFiles.projectId", <project-id>)

.option("cloudFiles.client", <client-id>)

.option("cloudFiles.clientEmail", <client-email>)

.option("cloudFiles.privateKey", <private-key>)

.option("cloudFiles.privateKeyId", <private-key-id>)

.option("path", <path-to-specific-bucket-and-folder>) // Required only for setUpNotificationServices.

.create()

// Set up a queue and a topic subscribed to the path provided in the manager.

manager.setUpNotificationServices(<resource-suffix>)

// List notification services created by Auto Loader

val df = manager.listNotificationServices()

// Tear down the notification services created for a specific stream ID.

// Stream ID is a GUID string that you can find in the list result above.

manager.tearDownNotificationServices(<stream-id>)

Use setUpNotificationServices(<resource-suffix>) to create a queue and a subscription with the name <prefix>-<resource-suffix> (the prefix depends on the storage system, as summarized in Cloud resources used in legacy Auto Loader file notification mode). If there is an existing resource with the same name, Azure Databricks reuses the existing resource instead of creating a new one. This function returns a queue identifier that you can pass to the cloudFiles source using the identifier in File notification options. This enables the cloudFiles source user to have fewer permissions than the user who creates the resources.

Provide the "path" option to newManager only if calling setUpNotificationServices; it is not needed for listNotificationServices or tearDownNotificationServices. This is the same path that you use when running a streaming query.
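The `<prefix>-<resource-suffix>` naming rule can be previewed with a small lookup before you call setUpNotificationServices. The prefixes are copied from the cloud resources table on this page. The helper name `notification_resource_name` and the suffix `orders-stream` are hypothetical.

```python
# Prefixes copied from the cloud resources table on this page.
PREFIXES = {
    "Amazon S3": "databricks-auto-ingest",
    "ADLS": "databricks",
    "GCS": "databricks-auto-ingest",
    "Azure Blob Storage": "databricks",
}


def notification_resource_name(cloud_storage, resource_suffix):
    """Return the expected <prefix>-<resource-suffix> name for a storage type."""
    return f"{PREFIXES[cloud_storage]}-{resource_suffix}"


print(notification_resource_name("Amazon S3", "orders-stream"))
print(notification_resource_name("ADLS", "orders-stream"))
```

Knowing the name in advance helps you check whether an existing resource would be reused.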

The following matrix indicates which API methods are supported in which Databricks Runtime for each type of storage:

| Cloud Storage | Setup API | List API | Tear down API |

|---|---|---|---|

| Amazon S3 | All versions | All versions | All versions |

| ADLS | All versions | All versions | All versions |

| GCS | Databricks Runtime 9.1 and above | Databricks Runtime 9.1 and above | Databricks Runtime 9.1 and above |

| Azure Blob Storage | All versions | All versions | All versions |

Clean up event notification resources created by Auto Loader

Auto Loader doesn't automatically tear down file notification resources. To tear down file notification resources, you must use the cloud resource manager as shown in the previous section. You can also delete these resources manually using the cloud provider's UI or APIs.

Troubleshoot common errors

This section describes common errors when using Auto Loader with file notification mode and how to resolve them.

Failed to create Event Grid subscription

If you see the following error message when you run Auto Loader for the first time, Event Grid is not registered as a Resource Provider in the Azure subscription.

java.lang.RuntimeException: Failed to create event grid subscription.

To register Event Grid as a resource provider, do the following:

- In the Azure portal, go to your subscription.

- Click Resource Providers under the Settings section.

- Register the provider

Microsoft.EventGrid.

Authorization required to perform Event Grid subscription operations

If you see the following error message when you run Auto Loader for the first time, confirm that the Contributor role is assigned to the service principal for Event Grid and the storage account.

403 Forbidden ... does not have authorization to perform action 'Microsoft.EventGrid/eventSubscriptions/[read|write]' over scope ...

Event Grid client bypasses proxy

In Databricks Runtime 15.2 and above, Event Grid connections in Auto Loader use proxy settings from system properties by default. In Databricks Runtime 13.3 LTS, 14.3 LTS, and 15.0 to 15.2, you can manually configure Event Grid connections to use a proxy by setting the Spark Config property spark.databricks.cloudFiles.eventGridClient.useSystemProperties true. See Set Spark configuration properties on Azure Databricks.