Copy data from an HTTP endpoint by using Azure Data Factory or Azure Synapse Analytics

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

This article outlines how to use Copy Activity in Azure Data Factory and Azure Synapse to copy data from an HTTP endpoint. It builds on the Copy Activity overview article, which presents a general overview of Copy Activity.

The differences among the HTTP connector, the REST connector, and the Web table connector are:

- The REST connector specifically supports copying data from RESTful APIs.

- The HTTP connector is generic and retrieves data from any HTTP endpoint, for example, to download a file. Before the REST connector became available, you might have used the HTTP connector to copy data from RESTful APIs. This is still supported, but it is less functional than the REST connector.

- The Web table connector extracts table content from an HTML webpage.

Supported capabilities

This HTTP connector is supported for the following capabilities:

| Supported capabilities | IR |

|---|---|

| Copy activity (source/-) | ① ② |

| Lookup activity | ① ② |

① Azure integration runtime ② Self-hosted integration runtime

For a list of data stores that are supported as sources/sinks, see Supported data stores.

You can use this HTTP connector to:

- Retrieve data from an HTTP/S endpoint by using the HTTP GET or POST methods.

- Retrieve data by using one of the following authentications: Anonymous, Basic, Digest, Windows, or ClientCertificate.

- Copy the HTTP response as-is or parse it by using supported file formats and compression codecs.

Tip

To test an HTTP request for data retrieval before you configure the HTTP connector, learn about the API specification for header and body requirements. You can use tools like Visual Studio, PowerShell's Invoke-RestMethod, or a web browser to validate.
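As a rough illustration of such a pre-check, the following Python sketch builds a GET or POST request with the standard library so that you can inspect the method, headers, and body before sending anything. The helper names `build_request` and `fetch_preview`, the example URL, and the `x-api-key` header are assumptions made for illustration; they are not part of the connector.

```python
import urllib.request


def build_request(url, method="GET", headers=None, body=None):
    """Build (but do not send) an HTTP request for a manual check."""
    # A request body is only meaningful for POST in this sketch.
    data = body.encode("utf-8") if body is not None else None
    return urllib.request.Request(
        url, data=data, headers=headers or {}, method=method
    )


def fetch_preview(request, max_bytes=1024, timeout=100):
    """Send the request and return the status code and the first bytes."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read(max_bytes)


if __name__ == "__main__":
    # Hypothetical endpoint and header, used only to show the call shape.
    req = build_request(
        "https://example.com/api/data",
        method="POST",
        headers={"x-api-key": "<API key>"},
        body='{"query": "sample"}',
    )
    print(req.get_method(), req.full_url)
```

Calling `fetch_preview(req)` sends the request; keeping the build and send steps separate lets you review the request first.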

Prerequisites

If your data store is located inside an on-premises network, an Azure virtual network, or Amazon Virtual Private Cloud, you need to configure a self-hosted integration runtime to connect to it.

If your data store is a managed cloud data service, you can use the Azure Integration Runtime. If the access is restricted to IPs that are approved in the firewall rules, you can add Azure Integration Runtime IPs to the allow list.

You can also use the managed virtual network integration runtime feature in Azure Data Factory to access the on-premises network without installing and configuring a self-hosted integration runtime.

For more information about the network security mechanisms and options supported by Data Factory, see Data access strategies.

Get started

To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:

- The Copy Data tool

- The Azure portal

- The .NET SDK

- The Python SDK

- Azure PowerShell

- The REST API

- The Azure Resource Manager template

Create a linked service to an HTTP source using UI

Use the following steps to create a linked service to an HTTP source in the Azure portal UI.

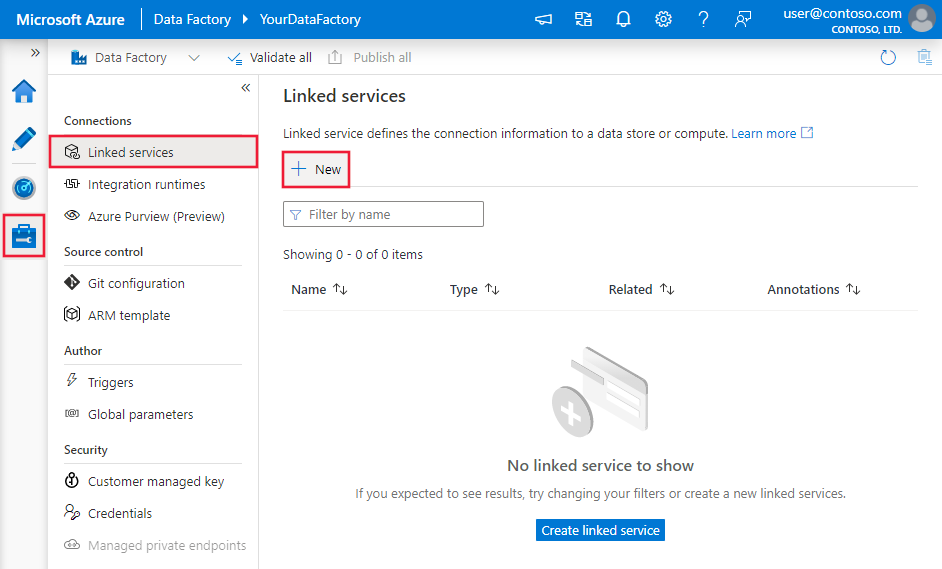

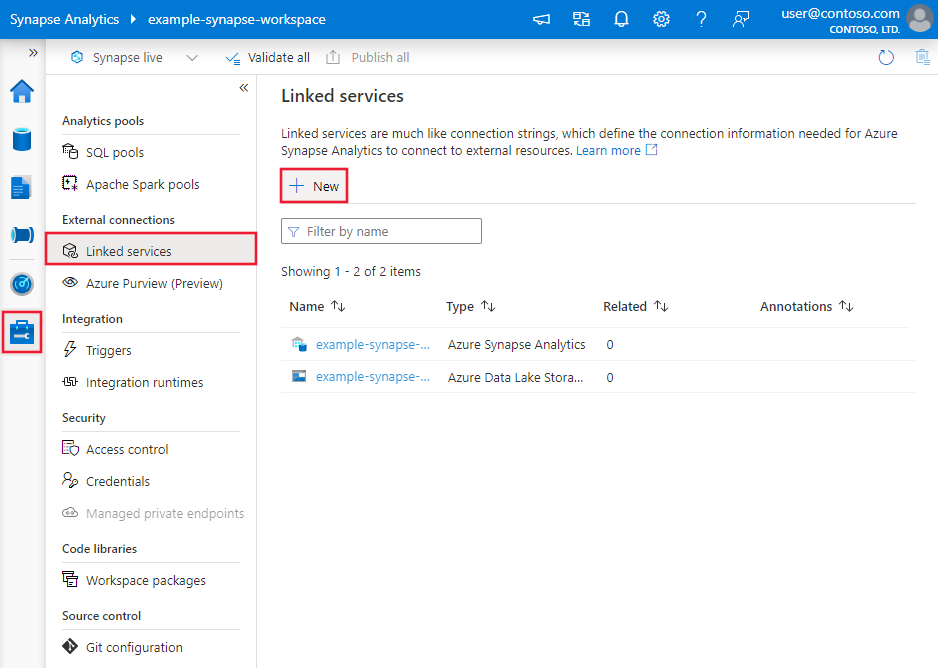

Browse to the Manage tab in your Azure Data Factory or Synapse workspace and select Linked Services, then click New:

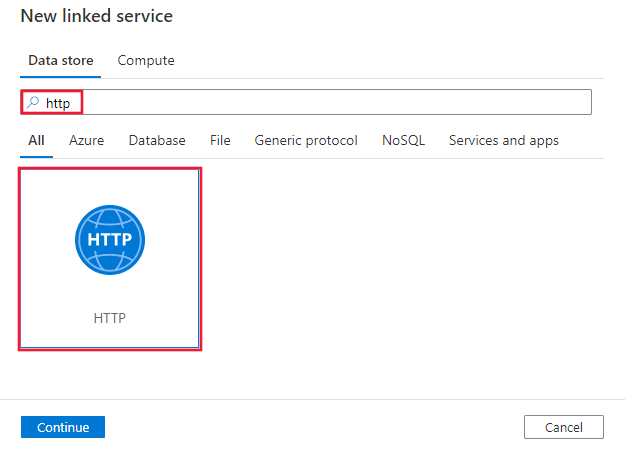

Search for HTTP and select the HTTP connector.

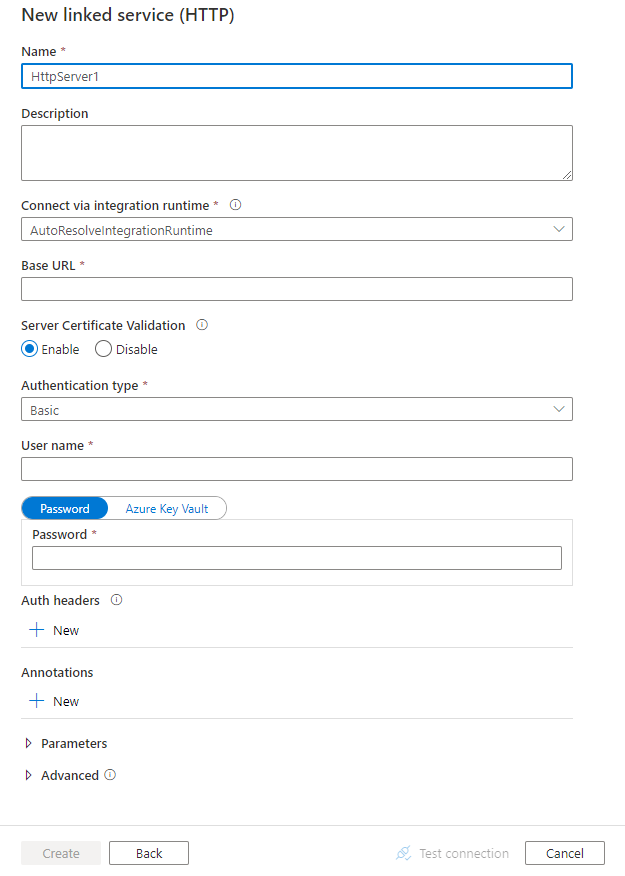

Configure the service details, test the connection, and create the new linked service.

Connector configuration details

The following sections provide details about properties you can use to define entities that are specific to the HTTP connector.

Linked service properties

The following properties are supported for the HTTP linked service:

| Property | Description | Required |

|---|---|---|

| type | The type property must be set to HttpServer. | Yes |

| url | The base URL to the web server. | Yes |

| enableServerCertificateValidation | Specify whether to enable server TLS/SSL certificate validation when you connect to an HTTP endpoint. If your HTTPS server uses a self-signed certificate, set this property to false. | No (the default is true) |

| authenticationType | Specifies the authentication type. Allowed values are Anonymous, Basic, Digest, Windows, and ClientCertificate. You can additionally configure authentication headers in the authHeaders property. See the sections that follow this table for more properties and JSON samples for these authentication types. | Yes |

| authHeaders | Additional HTTP request headers for authentication. For example, to use API key authentication, you can select the authentication type "Anonymous" and specify the API key in the header. | No |

| connectVia | The Integration Runtime to use to connect to the data store. Learn more from the Prerequisites section. If not specified, the default Azure Integration Runtime is used. | No |
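The generic rules in this table can be checked before deployment. The following Python sketch is one possible way to do that, assuming the `typeProperties` object has been loaded as a plain dictionary of literal values. The function name `check_http_linked_service` is hypothetical, and the check covers only the generic properties listed above.

```python
ALLOWED_AUTH_TYPES = {"Anonymous", "Basic", "Digest", "Windows", "ClientCertificate"}


def check_http_linked_service(type_properties):
    """Return a list of problems found in an HTTP linked service's typeProperties."""
    problems = []

    # url is required.
    if not type_properties.get("url"):
        problems.append("url is required")

    # authenticationType is required and must be one of the allowed values.
    auth_type = type_properties.get("authenticationType")
    if auth_type is None:
        problems.append("authenticationType is required")
    elif auth_type not in ALLOWED_AUTH_TYPES:
        problems.append(f"authenticationType '{auth_type}' is not an allowed value")

    # enableServerCertificateValidation is optional and defaults to true.
    validation = type_properties.get("enableServerCertificateValidation", True)
    if not isinstance(validation, bool):
        problems.append("enableServerCertificateValidation must be true or false")

    return problems


if __name__ == "__main__":
    print(check_http_linked_service({"authenticationType": "OAuth"}))
```

An empty list means that no problem was found among the generic properties; the properties that are specific to each authentication type are not checked here.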

Using Basic, Digest, or Windows authentication

Set the authenticationType property to Basic, Digest, or Windows. In addition to the generic properties that are described in the preceding section, specify the following properties:

| Property | Description | Required |

|---|---|---|

| userName | The user name to use to access the HTTP endpoint. | Yes |

| password | The password for the user (the userName value). Mark this field as a SecureString type to store it securely. You can also reference a secret stored in Azure Key Vault. | Yes |

Example

    {
        "name": "HttpLinkedService",
        "properties": {
            "type": "HttpServer",
            "typeProperties": {
                "authenticationType": "Basic",
                "url": "<HTTP endpoint>",
                "userName": "<user name>",
                "password": {
                    "type": "SecureString",
                    "value": "<password>"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

Using ClientCertificate authentication

To use ClientCertificate authentication, set the authenticationType property to ClientCertificate. In addition to the generic properties that are described in the preceding section, specify the following properties:

| Property | Description | Required |

|---|---|---|

| embeddedCertData | Base64-encoded certificate data. | Specify either embeddedCertData or certThumbprint. |

| certThumbprint | The thumbprint of the certificate that's installed on your self-hosted Integration Runtime machine's cert store. Applies only when the self-hosted type of Integration Runtime is specified in the connectVia property. | Specify either embeddedCertData or certThumbprint. |

| password | The password that's associated with the certificate. Mark this field as a SecureString type to store it securely. You can also reference a secret stored in Azure Key Vault. | No |

If you use certThumbprint for authentication and the certificate is installed in the personal store of the local computer, grant read permissions to the self-hosted Integration Runtime:

- Open the Microsoft Management Console (MMC). Add the Certificates snap-in that targets Local Computer.

- Expand Certificates > Personal, and then select Certificates.

- Right-click the certificate from the personal store, and then select All Tasks > Manage Private Keys.

- On the Security tab, add the user account under which the Integration Runtime Host Service (DIAHostService) is running, with read access to the certificate.

The HTTP connector loads only trusted certificates. If you're using a self-signed or nonintegrated CA-issued certificate, the certificate must also be installed in one of the following stores to enable trust:

- Trusted People

- Third-Party Root Certification Authorities

- Trusted Root Certification Authorities

Example 1: Using certThumbprint

    {
        "name": "HttpLinkedService",
        "properties": {
            "type": "HttpServer",
            "typeProperties": {
                "authenticationType": "ClientCertificate",
                "url": "<HTTP endpoint>",
                "certThumbprint": "<thumbprint of certificate>"
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

Example 2: Using embeddedCertData

    {
        "name": "HttpLinkedService",
        "properties": {
            "type": "HttpServer",
            "typeProperties": {
                "authenticationType": "ClientCertificate",
                "url": "<HTTP endpoint>",
                "embeddedCertData": "<Base64-encoded cert data>",
                "password": {
                    "type": "SecureString",
                    "value": "<password of cert>"
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }
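The embeddedCertData property expects Base64-encoded certificate data. A minimal Python sketch for producing such a string from a certificate file follows. The function names and the `client-cert.pfx` path are hypothetical, and the sketch assumes that the whole file content is what needs to be encoded.

```python
import base64
from pathlib import Path


def encode_certificate(cert_bytes):
    """Return the Base64 text form of raw certificate bytes."""
    return base64.b64encode(cert_bytes).decode("ascii")


def encode_certificate_file(path):
    """Read a certificate file and return its Base64-encoded content."""
    return encode_certificate(Path(path).read_bytes())


if __name__ == "__main__":
    # Hypothetical file name; replace it with the path to your certificate.
    cert_path = Path("client-cert.pfx")
    if cert_path.exists():
        print(encode_certificate_file(cert_path))
```

The resulting string is the value to paste into embeddedCertData, or to store as a secret if you prefer not to keep it in the linked service definition.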

Using authentication headers

In addition, you can configure request headers for authentication along with the built-in authentication types.

Example: Using API key authentication

    {
        "name": "HttpLinkedService",
        "properties": {
            "type": "HttpServer",
            "typeProperties": {
                "url": "<HTTP endpoint>",
                "authenticationType": "Anonymous",
                "authHeaders": {
                    "x-api-key": {
                        "type": "SecureString",
                        "value": "<API key>"
                    }
                }
            },
            "connectVia": {
                "referenceName": "<name of Integration Runtime>",
                "type": "IntegrationRuntimeReference"
            }
        }
    }

Dataset properties

For a full list of sections and properties available for defining datasets, see the Datasets article.

Azure Data Factory supports the following file formats. Refer to each article for format-based settings.

- Avro format

- Binary format

- Delimited text format

- Excel format

- JSON format

- ORC format

- Parquet format

- XML format

The following properties are supported for HTTP under the location settings in a format-based dataset:

| Property | Description | Required |

|---|---|---|

| type | The type property under location in dataset must be set to HttpServerLocation. | Yes |

| relativeUrl | A relative URL to the resource that contains the data. The HTTP connector copies data from the combined URL: [URL specified in linked service][relative URL specified in dataset]. | No |
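The combined URL described for relativeUrl can be pictured as a plain concatenation. The Python sketch below illustrates that reading only; the function name `combined_url` and the example values are hypothetical, and because the table doesn't say how slashes are handled, the sketch neither adds nor removes them.

```python
def combined_url(linked_service_url, relative_url=None):
    """Join the linked service URL and the dataset's relative URL as-is."""
    # relativeUrl is optional; without it only the linked service URL is used.
    return f"{linked_service_url}{relative_url or ''}"


if __name__ == "__main__":
    # Hypothetical values, used only to show the shape of the result.
    print(combined_url("https://example.com/api/", "files/data.csv"))
```

Under this reading, a missing or doubled slash between the two parts would end up in the request URL, so it is worth checking both values together.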

Note

The supported HTTP request payload size is around 500 KB. If the payload size you want to pass to your web endpoint is larger than 500 KB, consider batching the payload in smaller chunks.

Example:

    {
        "name": "DelimitedTextDataset",
        "properties": {
            "type": "DelimitedText",
            "linkedServiceName": {
                "referenceName": "<HTTP linked service name>",
                "type": "LinkedServiceReference"
            },
            "schema": [ < physical schema, optional, auto retrieved during authoring > ],
            "typeProperties": {
                "location": {
                    "type": "HttpServerLocation",
                    "relativeUrl": "<relative url>"
                },
                "columnDelimiter": ",",
                "quoteChar": "\"",
                "firstRowAsHeader": true,
                "compressionCodec": "gzip"
            }
        }
    }
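The note on payload size in this section suggests batching a large payload into smaller chunks. One way to do that, sketched here in Python, is to group records greedily into JSON arrays that each stay under a byte limit. The function name `batch_payloads`, the JSON array shape, and the exact limit of 500 * 1024 bytes are assumptions; the note only says "around 500 KB".

```python
import json

# Assumed limit; the documented figure is approximate.
MAX_PAYLOAD_BYTES = 500 * 1024


def _size(records):
    """Size in bytes of the records serialized as a JSON array."""
    return len(json.dumps(records).encode("utf-8"))


def batch_payloads(records, max_bytes=MAX_PAYLOAD_BYTES):
    """Split records into JSON array payloads of at most max_bytes each."""
    batches, current = [], []
    for record in records:
        if _size([record]) > max_bytes:
            raise ValueError("a single record exceeds the payload limit")
        # Start a new batch when adding this record would exceed the limit.
        if current and _size(current + [record]) > max_bytes:
            batches.append(json.dumps(current))
            current = []
        current.append(record)
    if current:
        batches.append(json.dumps(current))
    return batches


if __name__ == "__main__":
    sample = [{"id": i, "value": "x" * 100} for i in range(10000)]
    print(len(batch_payloads(sample)), "payloads")
```

Each returned string can then be used as the request body of a separate request. The sketch re-serializes the batch for every record, which is simple but not the fastest approach for very large inputs.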

Copy Activity properties

This section provides a list of properties that the HTTP source supports.

For a full list of sections and properties that are available for defining activities, see Pipelines.

HTTP as source

Azure Data Factory supports the following file formats. Refer to each article for format-based settings.

- Avro format

- Binary format

- Delimited text format

- Excel format

- JSON format

- ORC format

- Parquet format

- XML format

The following properties are supported for HTTP under storeSettings in a format-based copy source:

| Property | Description | Required |

|---|---|---|

| type | The type property under storeSettings must be set to HttpReadSettings. | Yes |

| requestMethod | The HTTP method. Allowed values are Get (default) and Post. | No |

| additionalHeaders | Additional HTTP request headers. | No |

| requestBody | The body for the HTTP request. | No |

| httpRequestTimeout | The timeout (the TimeSpan value) for the HTTP request to get a response. This value is the timeout to get a response, not the timeout to read response data. The default value is 00:01:40. | No |

| maxConcurrentConnections | The upper limit of concurrent connections established to the data store during the activity run. Specify a value only when you want to limit concurrent connections. | No |

Example:

    "activities":[
        {
            "name": "CopyFromHTTP",
            "type": "Copy",
            "inputs": [
                {
                    "referenceName": "<Delimited text input dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "outputs": [
                {
                    "referenceName": "<output dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "typeProperties": {
                "source": {
                    "type": "DelimitedTextSource",
                    "formatSettings":{
                        "type": "DelimitedTextReadSettings",
                        "skipLineCount": 10
                    },
                    "storeSettings":{
                        "type": "HttpReadSettings",
                        "requestMethod": "Post",
                        "additionalHeaders": "<header key: header value>\n<header key: header value>\n",
                        "requestBody": "<body for POST HTTP request>"
                    }
                },
                "sink": {
                    "type": "<sink type>"
                }
            }
        }
    ]
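The additionalHeaders value in this example is a single string in which each header takes the form `key: value` followed by a newline. As a small sketch, the following Python helper builds such a string from a dictionary. The function name `format_additional_headers` is hypothetical, and the sample header names are for illustration only.

```python
def format_additional_headers(headers):
    """Render headers as 'key: value' lines, each terminated by a newline."""
    return "".join(f"{key}: {value}\n" for key, value in headers.items())


if __name__ == "__main__":
    # Sample headers for illustration only.
    value = format_additional_headers(
        {"Connection": "keep-alive", "User-Agent": "Mozilla/5.0"}
    )
    print(repr(value))
```

When the string is placed in a JSON definition, each newline is written as the `\n` escape, as in the example above.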

Lookup activity properties

To learn details about the properties, check Lookup activity.

Legacy models

Note

The following models are still supported as-is for backward compatibility. We recommend that you use the new model described in the preceding sections going forward. The authoring UI has switched to generating the new model.

Legacy dataset model

| Property | Description | Required |

|---|---|---|

| type | The type property of the dataset must be set to HttpFile. | Yes |

| relativeUrl | A relative URL to the resource that contains the data. When this property isn't specified, only the URL that's specified in the linked service definition is used. | No |

| requestMethod | The HTTP method. Allowed values are Get (default) and Post. | No |

| additionalHeaders | Additional HTTP request headers. | No |

| requestBody | The body for the HTTP request. | No |

| format | If you want to retrieve data from the HTTP endpoint as-is without parsing it, and then copy the data to a file-based store, skip the format section in both the input and output dataset definitions. If you want to parse the HTTP response content during copy, the following file format types are supported: TextFormat, JsonFormat, AvroFormat, OrcFormat, and ParquetFormat. Under format, set the type property to one of these values. For more information, see JSON format, Text format, Avro format, Orc format, and Parquet format. | No |

| compression | Specify the type and level of compression for the data. For more information, see Supported file formats and compression codecs. Supported types: GZip, Deflate, BZip2, and ZipDeflate. Supported levels: Optimal and Fastest. | No |

Note

The supported HTTP request payload size is around 500 KB. If the payload size you want to pass to your web endpoint is larger than 500 KB, consider batching the payload in smaller chunks.

Example 1: Using the Get method (default)

    {
        "name": "HttpSourceDataInput",
        "properties": {
            "type": "HttpFile",
            "linkedServiceName": {
                "referenceName": "<HTTP linked service name>",
                "type": "LinkedServiceReference"
            },
            "typeProperties": {
                "relativeUrl": "<relative url>",
                "additionalHeaders": "Connection: keep-alive\nUser-Agent: Mozilla/5.0\n"
            }
        }
    }

Example 2: Using the Post method

    {
        "name": "HttpSourceDataInput",
        "properties": {
            "type": "HttpFile",
            "linkedServiceName": {
                "referenceName": "<HTTP linked service name>",
                "type": "LinkedServiceReference"
            },
            "typeProperties": {
                "relativeUrl": "<relative url>",
                "requestMethod": "Post",
                "requestBody": "<body for POST HTTP request>"
            }
        }
    }

Legacy copy activity source model

| Property | Description | Required |

|---|---|---|

| type | The type property of the copy activity source must be set to HttpSource. | Yes |

| httpRequestTimeout | The timeout (the TimeSpan value) for the HTTP request to get a response. This value is the timeout to get a response, not the timeout to read response data. The default value is 00:01:40. | No |

Example

    "activities":[
        {
            "name": "CopyFromHTTP",
            "type": "Copy",
            "inputs": [
                {
                    "referenceName": "<HTTP input dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "outputs": [
                {
                    "referenceName": "<output dataset name>",
                    "type": "DatasetReference"
                }
            ],
            "typeProperties": {
                "source": {
                    "type": "HttpSource",
                    "httpRequestTimeout": "00:01:00"
                },
                "sink": {
                    "type": "<sink type>"
                }
            }
        }
    ]
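The httpRequestTimeout values in this article, such as the default 00:01:40, are TimeSpan strings. To make the duration easier to read, the following Python sketch converts the plain `hh:mm:ss` form into a `timedelta`. The function name `parse_timespan` is hypothetical, and the sketch deliberately ignores other TimeSpan forms such as day or fractional-second components.

```python
from datetime import timedelta


def parse_timespan(value):
    """Convert an 'hh:mm:ss' string into a timedelta."""
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


if __name__ == "__main__":
    default_timeout = parse_timespan("00:01:40")
    print(default_timeout.total_seconds(), "seconds")
```

Read this way, the default of 00:01:40 is 100 seconds to wait for a response, and the 00:01:00 used in the example above is 60 seconds.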

Related content

For a list of data stores that Copy Activity supports as sources and sinks, see Supported data stores and formats.