Azure Cosmos DB customer profile: Jet.com

Jet.com powers an innovative e-commerce engine on Azure in less than 12 months!

Authored by Anna Skobodzinski, Aravind Krishna, and Shireesh Thota from Microsoft, in conjunction with Scott Havens from Jet.com.

This article is part of a series about customers who’ve worked closely with Microsoft on Azure over the last year. We look at why they chose Azure services and take a closer look at the design of their solution.

In this installment, we profile Jet.com, a fast-growing technology company with an e-commerce platform built entirely on Microsoft Azure, including the development and delivery of infrastructure, using both .NET and open-source technologies. To learn more about Jet.com, see the Jet.com video from the Microsoft Build 2018 conference:

[embed]https://youtu.be/dSCzCaiWgLM[/embed]

Headquartered in Hoboken, New Jersey, Jet.com was cofounded by entrepreneur Marc Lore, perhaps best known as the creator of the popular Diapers.com e-commerce site that he eventually sold to Amazon. Jet.com has been growing fast since it was founded in 2014 and has sold 12 million products, from jeans to diapers. On August 8, 2016, Walmart acquired Jet.com, which is now a subsidiary of Walmart.com. Today the entrepreneur and his team compete head-on with Amazon.com through an innovative online marketplace called Jet.com.

Jet.com microservices approach to e-commerce

To compete with other online retailers, Jet.com continuously innovates. For example, Jet.com has a unique pricing engine that makes price adjustments in real time. Lower prices encourage customers to buy more items at once and to purchase items that are in the same distribution center.

For innovations like this, the technology teams need to be able to make changes to individual service components without having deep interdependencies. So they developed a functionality-first approach based on an event-sourced model that relies on microservices and agile processes.

Built in less than 12 months, the Jet.com platform is composed of open source software, Microsoft technologies such as Visual F#, and Azure platform-as-a-service (PaaS) services such as Azure Cosmos DB.

"We are forced to design systems with an eye on predicting what we’ll need them to do at 100x the volume."

- Jeremy Kimball: VP Engineering, Jet.com

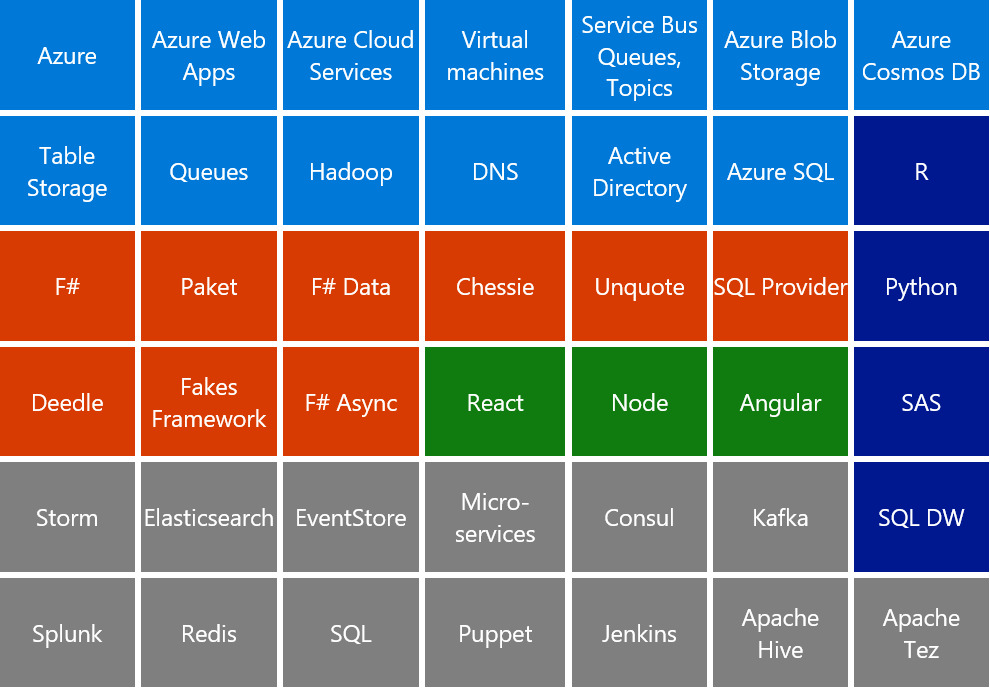

Figure 1. The Jet.com technology stack mixes third-party software, open source, and Microsoft technologies and services on Azure, color-coded by technology.

Flexible microservices

If you’re running a large stack, you don’t want to take down all your service instances to deploy an update. That’s one reason why the core components of the Jet.com platform—for example, order processing, inventory management, and pricing—are each composed of hundreds of microservices. A microservice does one thing and only that one thing. This simplicity makes each microservice elastic, resilient, minimal, and complete, and their composability allows engineers to distribute complexity more evenly across a large system.

If Jet.com engineers need to change a service or add a feature, they can do so without affecting other parts of the system. The microservices architecture supports their fast-paced, continuous delivery and deployment cycles. The team can also scale their platform easily and still update services independently.

Event-sourced architecture

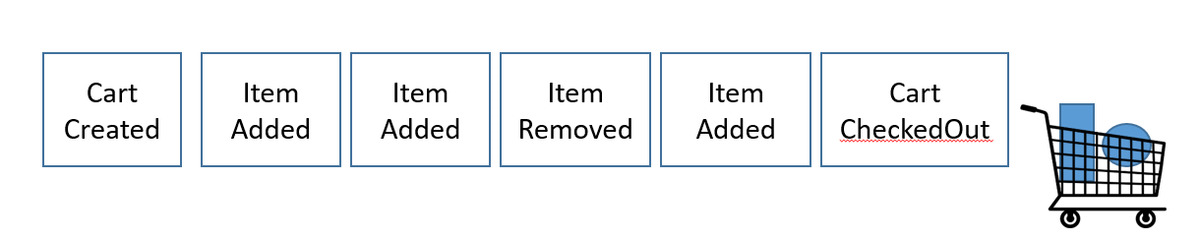

An event sourcing pattern is the basis for communication among Jet.com microservices. The conventional design pattern for a retail and order processing system typically stores the state of orders in a database, then queries the database to discover the current state and updates with the latest state. But what if a system also needs to know how it arrived at the current state? An event sourcing pattern solves this problem by storing all changes to an application state as a sequence of events.

For example, when an item is placed into a shopping cart at Jet.com, an order-processing microservice first places an event on the event stream. Then the inventory processing system reads the event off the event stream and makes a reservation with one of the merchants in the Jet.com ecosystem. The pricing engine reads these events, calculates pricing, and places a new event on the event stream, and so on.

"When we were building Jet's next-generation event sourcing platform, CosmosDB offered the low latency, high throughput, global availability, and rich feature set that are critical to our success."

- Scott Havens: Director of Software Engineering, Jet.com

Figure 2. Event sourcing model drives the Jet.com architecture.

Events can be queried, and the event log can be used to reconstruct past states. Performing writes in an append-only manner via event sourcing is fast and scalable for systems that are write-heavy, in particular when used in combination with a write-optimized database.
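The idea of rebuilding state from an append-only log can be illustrated with a minimal sketch. The event kinds (`ItemAdded`, `ItemRemoved`), the `CartEvent` type, and the `replay` helper are hypothetical names chosen for illustration and are not taken from Jet.com's code. The sketch only shows that current state is derived by folding over the log, and that stopping the fold early reconstructs a past state.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class CartEvent:
    """One immutable fact about a cart (hypothetical schema)."""
    sequence: int   # position in the append-only log
    kind: str       # "ItemAdded" or "ItemRemoved"
    sku: str
    quantity: int


def replay(events: List[CartEvent], up_to: Optional[int] = None) -> Dict[str, int]:
    """Fold events (sorted by sequence) into cart state.

    Passing `up_to` stops at that sequence number, which reconstructs
    the state as it was at that point in the past.
    """
    cart: Dict[str, int] = {}
    for event in events:
        if up_to is not None and event.sequence > up_to:
            break
        delta = event.quantity if event.kind == "ItemAdded" else -event.quantity
        cart[event.sku] = cart.get(event.sku, 0) + delta
        if cart[event.sku] <= 0:
            del cart[event.sku]
    return cart


# The log is only ever appended to; nothing is updated in place.
log = [
    CartEvent(1, "ItemAdded", "jeans", 2),
    CartEvent(2, "ItemAdded", "diapers", 1),
    CartEvent(3, "ItemRemoved", "jeans", 1),
]

current_state = replay(log)            # state after all three events
earlier_state = replay(log, up_to=2)   # state as of event 2
```

Because writes are appends rather than updates, the write path stays simple; the cost of deriving state moves to the read side, where it can be cached or snapshotted.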

Built for speed: the next-generation architecture

With ambitious goals and rapid growth, the Jet.com engineers always look for ways to enhance the shopping experience for their customers and improve their marketing systems. As their customer traffic grew, so did the demand for scalability. So the team looked for efficiency gains in the inventory system that tracks available quantities of all SKUs from all partner merchants. This system also tracks the quantities held in reserve for orders in progress. Sellable quantities are shared with the Jet.com pricing engine, with the goal of minimizing reject rates from the merchants due to lack of inventory.
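The relationship between available, reserved, and sellable quantities can be sketched as follows. This is an assumption about how such quantities might relate (sellable equals available minus reserved), not a description of Jet.com's actual inventory model; the `SkuInventory` class and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SkuInventory:
    """Quantities for one SKU at one merchant (hypothetical model)."""
    available: int      # units the merchant reports as in stock
    reserved: int = 0   # units held for orders in progress

    @property
    def sellable(self) -> int:
        """Units that can still be offered to new orders."""
        return max(self.available - self.reserved, 0)

    def reserve(self, quantity: int) -> bool:
        """Hold units for an order; refuse if that would oversell."""
        if quantity <= 0 or quantity > self.sellable:
            return False
        self.reserved += quantity
        return True


inventory = SkuInventory(available=10)
first_ok = inventory.reserve(4)    # succeeds, leaving 6 sellable
second_ok = inventory.reserve(7)   # refused, only 6 are sellable
```

Refusing the second reservation up front is the kind of check that helps avoid a merchant rejecting the order later for lack of stock.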

To make their services faster, smarter, and more efficient, Jet kicked off Panther, the next-generation inventory processing system. Panther needed to meet several important goals:

- Improve the customer experience by reserving inventory earlier in the order pipeline.

- Enhance insights for the marketing and operations teams by providing more historical data and better analytics.

- Unify inventory management responsibilities typically spread across multiple systems and teams.

Jet’s existing inventory management system was not event sourced. The open source event storage system could not meet the latency and throughput requirements of inventory processing in an event-sourced manner at that scale. The Panther team knew that the existing storage system would not scale sufficiently to handle their ever-growing user base. Implemented as an infrastructure-as-a-service (IaaS) solution, its management was handled by the team as well. They wanted a solution that was easier to manage, supported high availability, offered replication across multiple geographic locations, and above all, performed well—backed up by a solid service-level agreement (SLA).

They prototyped a Panther system based on Azure Cosmos DB, a globally distributed, massively scalable, low-latency database service on Azure. When the prototype showed promising results, they knew they had their solution. Panther uses a globally distributed Azure Cosmos DB database for event storage (sourcing) and event processing. Events from upstream systems are ingested directly into Azure Cosmos DB. The data is partitioned by the order ID and other properties, and the collections are scaled elastically to meet demand. A microservice listens to changes that are written to the collections and emits new events to the event bus, in the order they were committed in the database, for the downstream services to consume.

Figure 3. The Panther inventory system dataflow. All services are written in F#.
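Partitioning by order ID can be sketched with a stable hash. The hash function, the partition count, and the event fields below are illustrative assumptions; Azure Cosmos DB performs its own partitioning internally, and this sketch is not its algorithm. It only shows why all events for one order land together and keep their relative order.

```python
import hashlib
from collections import defaultdict
from typing import Dict, List


def partition_for(order_id: str, partition_count: int) -> int:
    """Map an order ID to a partition with a stable hash (illustrative only)."""
    digest = hashlib.sha256(order_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def ingest(events: List[dict], partition_count: int) -> Dict[int, List[dict]]:
    """Append each event to the partition chosen by its order ID."""
    partitions: Dict[int, List[dict]] = defaultdict(list)
    for event in events:
        partitions[partition_for(event["order_id"], partition_count)].append(event)
    return partitions


# Hypothetical upstream events; `seq` is the arrival order.
incoming = [
    {"order_id": "order-1", "seq": 1, "type": "ItemReserved"},
    {"order_id": "order-2", "seq": 2, "type": "ItemReserved"},
    {"order_id": "order-1", "seq": 3, "type": "OrderPlaced"},
]

stored = ingest(incoming, partition_count=4)
order_1_events = stored[partition_for("order-1", 4)]
```

Since every event for a given order maps to the same partition, a reader of that partition sees the order's events in the sequence in which they were appended.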

Implementing event storage

Jet.com chose Azure Cosmos DB for Panther because it met their critical needs:

- To serve a massive scale of both writes and reads with a low, single-digit millisecond latency.

- To distribute their data globally, while serving requests locally to the application in each region.

- To elastically scale both storage and throughput on demand, independently, at any time, and worldwide.

- To guarantee the first-class, comprehensive SLAs for availability, latency, throughput, and consistency.

The event sourcing pattern requires a high volume of writes to the database, since every action or inter-service communication is handled through a database write command. Jet.com chose Azure Cosmos DB largely because, as a write-optimized database, it could handle the massive ingestion rates they needed.

They also needed the elasticity of storage and throughput to manage the costs carefully. Like most major retailers, Jet.com anticipates big peaks during the key shopping days, when the expected rate of events can increase 10 to 20 times compared to the norm. With Azure Cosmos DB, Jet.com can fine-tune the provisioned throughput on an hourly basis and pay only for what they need for a given week, or even hour, and change it at any time worldwide.
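The benefit of adjusting throughput hour by hour can be shown with a small calculation. The baseline of 1,000,000 RU/s, the four-hour peak, and the 10x multiplier are made-up inputs for illustration, and the sum of provisioned RU/s per hour is used only as a rough proxy for cost; no actual pricing is assumed.

```python
from typing import List


def hourly_plan(baseline_rus: int, multipliers: List[float]) -> List[int]:
    """Provisioned RU/s for each hour, given an expected load multiplier."""
    return [int(baseline_rus * m) for m in multipliers]


def provisioned_total(plan: List[int]) -> int:
    """Sum of hourly RU/s settings across the plan (a rough cost proxy)."""
    return sum(plan)


# Hypothetical day: normal load for 20 hours, then a 4-hour peak at 10x.
multipliers = [1.0] * 20 + [10.0] * 4
elastic = hourly_plan(1_000_000, multipliers)
fixed_at_peak = [max(elastic)] * len(elastic)

saving = 1 - provisioned_total(elastic) / provisioned_total(fixed_at_peak)
print(f"Elastic plan provisions {saving:.0%} less than staying at peak all day")
```

Under these assumed inputs, scaling up only for the peak hours provisions a quarter of what a plan fixed at peak capacity would.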

For example, during November 2016, Jet.com provisioned a single geo-replicated Azure Cosmos DB collection for order event streams with a configured throughput between 1 and 10 million request units (RUs) per second. The collection was partitioned using order ID as the partitioning key. Their access patterns retrieved events of a certain type for a certain order ID and time range, so their service and database scaled seamlessly as the number of customers and orders grew. During Black Friday and Cyber Monday, the most popular shopping days, they were able to run with a provisioned throughput of 1 trillion RUs in 24 hours to satisfy their customer demand.
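To put the daily figure on the same scale as the per-second figures, the short calculation below converts 1 trillion RUs over 24 hours into an average rate. It assumes the total is spread evenly across the day, which real traffic would not be, so the result is only a rough average of about 11.6 million RU/s.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds

total_request_units = 1_000_000_000_000  # 1 trillion RUs in 24 hours (from the text)

# Assumes an even spread over the day; actual load would peak and dip.
average_rus_per_second = total_request_units / SECONDS_PER_DAY

print(f"{average_rus_per_second:,.0f} RU/s on average")
```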

Low-latency operations

The event store running on Azure Cosmos DB was a mission-critical component for Panther. Not only was fast throughput essential, low latencies were an absolute requirement, and microservices had to operate smoothly. Azure Cosmos DB provides comprehensive, industry-leading SLAs—not just for 99.99 percent availability within a region and 99.999 percent availability across regions, but also for guaranteed single-digit-millisecond latency, consistency, and throughput. As a part of the latency SLA, Azure Cosmos DB guarantees that the database will complete more than 99 percent of reads under 10 ms and 99 percent of writes under 15 ms. On average, the observed latency numbers are substantially lower. This guarantee, backed up by the SLA, was an important benefit for Jet.com’s operations.

With rigorous latency objectives for the Panther service APIs, Jet.com actively monitored the 99th and 99.9th percentile request times for their APIs. These times were tied to the performance of the underlying NoSQL database calls. To meet these latency goals, the Jet.com engineers had been exhausting their operational cycles to manage the scaling and configuration of their pre-Panther database. With the move to Azure Cosmos DB, the operational load has significantly lightened, so the Jet engineers can spend more time on other core services.
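Tracking the 99th and 99.9th percentiles means looking at the slowest 1 percent and 0.1 percent of requests rather than the average. A minimal sketch using the nearest-rank method is shown below; the sample latencies are invented, and this is not how Jet.com's monitoring was implemented.

```python
import math
from typing import List


def percentile(samples_ms: List[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples_ms:
        raise ValueError("no samples")
    ordered = sorted(samples_ms)
    # round() guards against floating-point noise before taking the ceiling.
    rank = max(math.ceil(round(pct * len(ordered) / 100, 9)), 1)
    return ordered[rank - 1]


# Hypothetical request times in milliseconds: 1,000 samples with a slow tail.
latencies = [2.0] * 990 + [8.0] * 9 + [40.0]

p99 = percentile(latencies, 99)      # 990th of 1,000 sorted samples
p999 = percentile(latencies, 99.9)   # 999th of 1,000 sorted samples
mean = sum(latencies) / len(latencies)
```

In this invented data set the mean stays close to 2 ms while the 99.9th percentile is 8 ms, which is why tail percentiles are the more useful signal for latency objectives.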

Turnkey global distribution

For Panther, operational and scalability improvements were important, but global distribution was mandatory. Azure Cosmos DB offered a turnkey solution that was a game-changer.

Most Panther services ran in the Azure East US2 region, but the data needed to be available in multiple locations worldwide, including the Azure West US region, where the data was used to perform less latency-critical business processes. The read region in the West had to be able to read the data in the order it was written by the write region in the East, and it had to happen with no more than a 15-minute delay. Azure Cosmos DB tunable consistency models help the team navigate the needs of various scenarios with minimal code and guaranteed correctness. For instance, relying on the bounded staleness consistency model ensures the 15-minute business requirement is met.
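The two requirements on the read region, in-order visibility and a maximum lag of 15 minutes, can be modeled with a toy example. The `ReadReplica` class and `within_staleness_bound` function are hypothetical and do not use or represent the Azure Cosmos DB API or its internal replication; they only make the two conditions concrete.

```python
from typing import List, Tuple

MAX_STALENESS_SECONDS = 15 * 60  # the 15-minute business requirement from the text

Write = Tuple[int, float]  # (commit sequence number, commit time in seconds)


class ReadReplica:
    """Toy read region that only accepts writes in commit order."""

    def __init__(self) -> None:
        self.applied: List[Write] = []

    def apply(self, write: Write) -> None:
        expected = len(self.applied) + 1
        if write[0] != expected:
            raise ValueError(f"out-of-order write {write[0]}, expected {expected}")
        self.applied.append(write)


def within_staleness_bound(write_log: List[Write], replica: ReadReplica, now: float) -> bool:
    """True if no write still missing from the replica is older than the bound."""
    missing = write_log[len(replica.applied):]
    return all(now - committed_at <= MAX_STALENESS_SECONDS for _, committed_at in missing)


# Three writes committed in the write region; the replica has applied two.
write_log = [(1, 0.0), (2, 300.0), (3, 1000.0)]
replica = ReadReplica()
replica.apply(write_log[0])
replica.apply(write_log[1])

ok_at_1200 = within_staleness_bound(write_log, replica, now=1200.0)  # write 3 is 200 s old
ok_at_2000 = within_staleness_bound(write_log, replica, now=2000.0)  # write 3 is 1,000 s old
```

With a managed consistency level, the application does not have to implement checks like these itself; the sketch only spells out what the guarantee means for a reader.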

Azure Cosmos DB change feed support

Jet.com engineers wanted to ingest events related to Panther into persistent storage in Azure Cosmos DB as soon as possible. Then, they wanted to consume these events from many microservices to perform other activities such as making reservations with ecosystem merchants and updating an order’s fulfillment status. For correct order processing, it was crucial that these events were processed exactly once and in the order in which they were committed to Azure Cosmos DB.

To do this, the Jet.com engineers worked closely with the Azure Cosmos DB team at Microsoft to develop change feed support. The change feed APIs provide a way to read documents from a collection in the order in which they are modified. Azure Cosmos DB provides a distributed API that can be used to process the changes available in different ranges of partition keys in parallel, so that Jet.com can use a pool of machines to distribute the processing of events without having to manage the orchestration of work across the many machines.
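The shape of this kind of processing, ordered within each partition key range and parallel across ranges, can be simulated in a few lines. The sketch below does not call the Azure Cosmos DB SDK; the in-memory `feed` dictionary, the range names, and the integer checkpoints are stand-ins invented for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def process_range(changes: List[dict], checkpoint: int) -> dict:
    """Handle one range's changes in order, starting after `checkpoint`."""
    handled = []
    for position in range(checkpoint, len(changes)):
        handled.append(changes[position]["id"])  # stand-in for real event handling
    return {"handled": handled, "checkpoint": len(changes)}


def process_feed(feed: Dict[str, List[dict]], checkpoints: Dict[str, int],
                 workers: int = 4) -> Dict[str, dict]:
    """Process every range in parallel; order is preserved within each range."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {
            name: pool.submit(process_range, changes, checkpoints.get(name, 0))
            for name, changes in feed.items()
        }
        return {name: future.result() for name, future in futures.items()}


# Simulated feed: each list holds one range's documents in modification order.
feed = {
    "range-0": [{"id": "e1"}, {"id": "e2"}],
    "range-1": [{"id": "e3"}],
}

first_pass = process_feed(feed, checkpoints={})
saved = {name: result["checkpoint"] for name, result in first_pass.items()}

feed["range-0"].append({"id": "e4"})             # a new change arrives
second_pass = process_feed(feed, checkpoints=saved)
```

Resuming from a saved checkpoint is what keeps the second pass from handling `e1` through `e3` again. In a real system, avoiding duplicates after a crash would also require that handling an event and saving the checkpoint succeed or fail together, which this sketch does not attempt.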

Summary

Teams often take a lift-and-shift approach to the cloud using the technologies they’ve used before. To gain the scale they needed affordably, Jet.com realized that they had to use products designed for the cloud, and they were willing to try something new. The result was an innovative e-commerce engine running in production at massive scale and built in less than 12 months.

Within just a few weeks, a prototype based on Service Fabric proved that Panther could support the massive scale and the functionality Jet needed plus high availability and blazing fast performance across multiple regions. But what really made Panther possible was adding Azure Cosmos DB for the event store. Coupling an event-sourcing pattern with a microservices-based architecture gave them the flexibility they needed to keep improving Jet.com and delight their customers.