Delivering Consistency and Accuracy: Improvements for Azure Data Lake Store Reporting

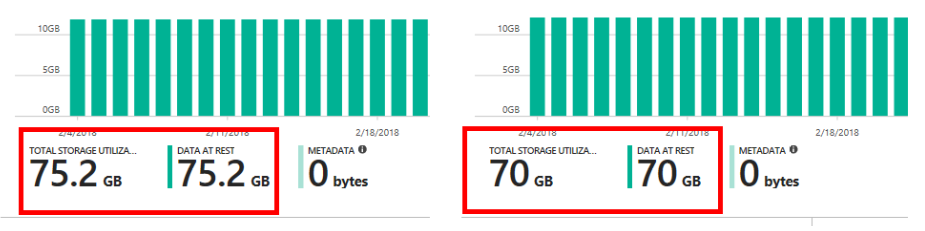

Have you ever uploaded a 70GB file from Windows into your Azure Data Lake Store and noticed that the data explorer reported its size as 75.2 GB?

Today, we are fixing that!

Previously, when reporting size, we aggregated the total using the technically correct SI definition (1GB = 1E+9 bytes). PowerShell and other common developer tools use powers of 2 instead (PowerShell, for example, evaluates 1GB as 1,073,741,824 bytes), so totals calculated with them showed a discrepancy:

Calculating the number of bytes in 1GB using PowerShell

This naturally led to confusion around how much storage is actually being used, the final bill, how to accurately project growth, and similar key questions that arise when working with and managing massive amounts of data.

Our new experience delivers more consistent and precise reporting of storage utilization: all aggregations use powers of 2, where 1GB = 1024^3 bytes. This brings consistency between our billing, the monthly plan options, and the usage reporting for Azure Data Lake Store.
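
As a rough illustration (not the actual Azure Data Lake Store code), the sketch below converts the byte count of a file that Windows reports as 70 GB under both conventions. The constant and function names are hypothetical; the point is only the arithmetic behind the 75.2 GB figure in the introduction.

```python
# Hypothetical helpers contrasting the two conventions; not the ADLS implementation.
GB_BASE10 = 10**9   # SI definition: 1 GB = 1,000,000,000 bytes (previous reporting)
GB_BASE2 = 1024**3  # Base-2 definition: 1 GB = 1,073,741,824 bytes (new reporting)


def to_gb_base10(num_bytes: int) -> float:
    """Size in GB using powers of 10."""
    return num_bytes / GB_BASE10


def to_gb_base2(num_bytes: int) -> float:
    """Size in GB using powers of 2."""
    return num_bytes / GB_BASE2


# A file that Windows reports as 70 GB holds 70 * 1024^3 bytes.
file_bytes = 70 * GB_BASE2
print(f"previous: {to_gb_base10(file_bytes):.1f} GB")  # previous: 75.2 GB
print(f"new:      {to_gb_base2(file_bytes):.1f} GB")   # new:      70.0 GB
```

The byte count never changes; only the divisor does, which is why the reported number drops without any data being lost.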

We are keeping all accounts, billing, and plans as they are, so there is no impact on billing and no change to the monthly plans. Billing has always been aggregated in base 2, so no customer will see a sudden change in the billing process. Although the reported size will be lower with the new calculation, no data is lost as a result of this update.

Azure Data Lake Store overview before the update to the calculation (left) and with the current calculation (right)

Making the calculations transparent

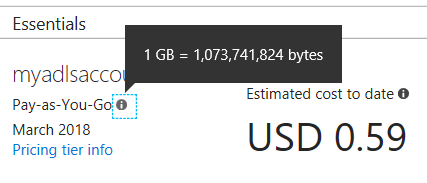

We decided to keep the base 10 (SI) unit names (KB, MB, GB, and so on) because they are familiar to most of our users. Although these names are technically not the exact units for a base-2 calculation (the IEC names would be KiB, MiB, GiB), we believe the simplicity and familiarity of the SI names are worth the tradeoff. We're also being explicit about the equivalence on all Azure Data Lake Store pages that show the units:

Showing the aggregation equivalence in the ADLS overview
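
The naming convention described above (SI labels, base-2 multipliers, with the equivalence stated next to the value) can be sketched as a small display helper. This is a hypothetical illustration, not how the Azure portal formats sizes; the unit list and output format are assumptions.

```python
# Hypothetical display helper: SI unit labels with base-2 (1024) multipliers,
# plus an explicit equivalence note. Not the ADLS implementation.
UNITS = ["B", "KB", "MB", "GB", "TB", "PB", "EB", "ZB", "YB"]


def format_size(num_bytes: int) -> str:
    """Format a byte count with SI labels, where each unit is 1024 times the previous one."""
    if num_bytes < 1024:
        return f"{num_bytes} B"
    value = float(num_bytes)
    unit_index = 0
    # Step up to the next unit while the value is still at least 1024 of the current one.
    while value >= 1024 and unit_index < len(UNITS) - 1:
        value /= 1024
        unit_index += 1
    unit = UNITS[unit_index]
    # State the base-2 equivalence alongside the familiar SI label.
    return f"{value:.2f} {unit} (1 {unit} = 1024^{unit_index} bytes)"


print(format_size(70 * 1024**3))  # 70.00 GB (1 GB = 1024^3 bytes)
print(format_size(1536))          # 1.50 KB (1 KB = 1024^1 bytes)
print(format_size(512))           # 512 B
```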

With this improvement to Azure Data Lake Store, we remain committed to delivering not only a great service but also high-quality experiences that make working with and managing massive amounts of data easier for everyone, from experienced data administrators to newcomers learning about big data.

We actively review your feedback and comments; reach out to us at https://aka.ms/adlfeedback to share your thoughts and experiences and help us make the product better.

P.S. More details on the units and the calculations

In brief, the discrepancy exists because the units used to measure large amounts of data (kilobytes, megabytes, gigabytes, terabytes, petabytes, and so on) take their prefixes from the metric system, which uses powers of 10: a kilobyte represents 10^3 bytes (1,000 bytes). Some operating systems, such as Windows, calculate and report storage using powers of 2, because computers use binary internally to manage memory addresses. With this binary approach, a kilobyte (nominally 1,000 bytes) is calculated as 1,024 bytes (2^10 bytes).[1]

| Metric unit (SI) | Total bytes | IEC unit | Total bytes | IEC vs. metric difference |
|---|---|---|---|---|
| Kilobyte (kB) | 1,000 | Kibibyte (KiB) | 1,024 | 24 B |
| Megabyte (MB) | 1,000,000 | Mebibyte (MiB) | 1,048,576 | 48.6 kB |
| Gigabyte (GB) | 1,000,000,000 | Gibibyte (GiB) | 1,073,741,824 | 73.7 MB |
| Terabyte (TB) | 1E+12 | Tebibyte (TiB) | 1.09951E+12 | 99.5 GB |
| Petabyte (PB) | 1E+15 | Pebibyte (PiB) | 1.12590E+15 | 125.9 TB |
| Exabyte (EB) | 1E+18 | Exbibyte (EiB) | 1.15292E+18 | 152.9 PB |
| Zettabyte (ZB) | 1E+21 | Zebibyte (ZiB) | 1.18059E+21 | 180.6 EB |
| Yottabyte (YB) | 1E+24 | Yobibyte (YiB) | 1.20893E+24 | 208.9 ZB |

As the table above shows (based on a Wikipedia article), at the kilo level the difference is only 24 bytes, not enough to store a song or even a tiny JPEG image. As the scale increases, however, the gap grows quickly: by the tera level it is about 10% (99.5 GB per terabyte), and at the higher levels an inconsistent definition can produce a difference large enough to fill an entire data center, or even more.
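
The difference column in the table can be reproduced with a few lines of arithmetic. The sketch below is a hypothetical helper (the function name is illustrative) that compares 1000^n with 1024^n for each prefix and also reports the relative gap, which grows by roughly 2.4 percentage points per prefix step.

```python
# Reproduce the "IEC vs. metric difference" column of the table above.
PREFIXES = ["kilo", "mega", "giga", "tera", "peta", "exa", "zetta", "yotta"]


def differences():
    """Yield (prefix, metric bytes, IEC bytes, difference in bytes, relative gap in percent)."""
    for power, prefix in enumerate(PREFIXES, start=1):
        metric = 1000**power  # SI: powers of 10
        iec = 1024**power     # IEC: powers of 2
        diff = iec - metric
        yield prefix, metric, iec, diff, 100 * diff / metric


for prefix, metric, iec, diff, gap in differences():
    print(f"{prefix:>5}: {diff:>35,} bytes ({gap:.1f}% larger)")
```

Python integers have arbitrary precision, so even the yotta row is computed exactly rather than in floating point.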

[1] Other Microsoft teams, manufacturers, and even famous comics have written about it.
