Taking another small step with the JavaScript Console and Pig in HDInsight

Our previous post, “MapReduce on 27,000 books using multiple storage accounts and HDInsight,” showed you how to run the Java version of the MapReduce code against the Gutenberg dataset we uploaded to blob storage. We also explained how you can add multiple storage accounts and access them from your HDInsight cluster. In this post, we’ll take a smaller step: we’ll show you how this works with the JavaScript example and see whether it can operate on a real dataset.

The JavaScript Console gives you a simpler syntax and a convenient web interface. You can do quick tasks, such as running a query or checking on your data, without having to RDP into your HDInsight cluster head node. It is for convenience only and is not meant for complex workflows. The console has a few features built in, including HDFS commands such as ls and mkdir, copying files, and so on. It also lets you invoke Pig commands.

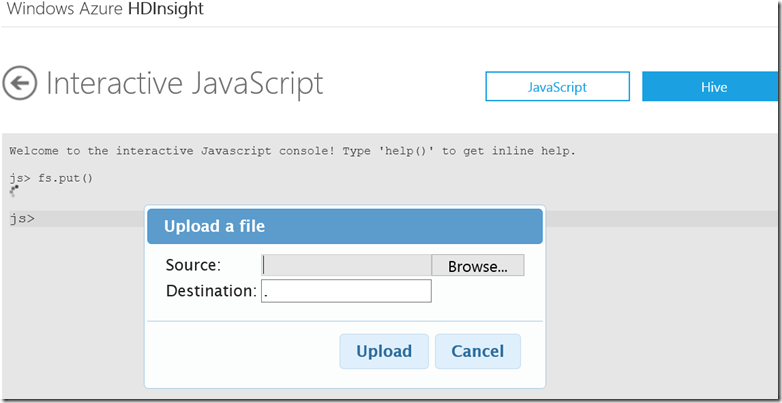

Let's go through the process of running the Pig script against the entire Gutenberg collection. We first uploaded the MapReduce word count file, WordCount.js [link], by typing fs.put(), which brings up a dialog box for uploading the WordCount.js file.

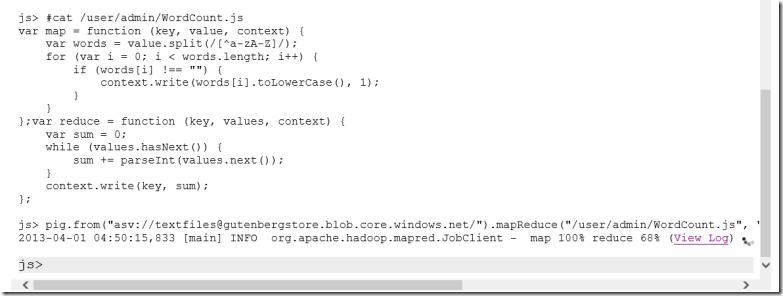

Next, you can verify that the WordCount.js file has been uploaded properly by typing #cat /user/admin/WordCount.js. As you may have noticed, HDFS commands that normally look like hdfs dfs -ls have been abstracted to #ls.
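For reference, a word count script for the JavaScript Console defines a map function and a reduce function. The sketch below follows the shape of the word count sample that shipped with HDInsight, but treat it as an assumption about what the linked WordCount.js contains rather than a copy of it. The `runLocally` harness, with its stand-in context and iterator objects, is hypothetical and exists only so you can try the logic outside the cluster.

```javascript
// Sketch of a word count script; the map/reduce signatures are assumed
// from the HDInsight sample and may differ from the linked WordCount.js.
var map = function (key, value, context) {
    // Split each input line on anything that is not a letter.
    var words = value.split(/[^a-zA-Z]/);
    for (var i = 0; i < words.length; i++) {
        if (words[i] !== "") {
            context.write(words[i].toLowerCase(), 1);
        }
    }
};

var reduce = function (key, values, context) {
    // Add up every count emitted for this word.
    var sum = 0;
    while (values.hasNext()) {
        sum += parseInt(values.next(), 10);
    }
    context.write(key, sum);
};

// Hypothetical local harness (not part of HDInsight): runs map over each
// line, groups the emitted pairs by word, then runs reduce once per word.
function runLocally(lines) {
    var grouped = Object.create(null);
    lines.forEach(function (line, lineNumber) {
        map(lineNumber, line, {
            write: function (word, count) {
                (grouped[word] = grouped[word] || []).push(count);
            }
        });
    });
    var totals = Object.create(null);
    Object.keys(grouped).forEach(function (word) {
        var position = 0;
        var iterator = {
            hasNext: function () { return position < grouped[word].length; },
            next: function () { return grouped[word][position++]; }
        };
        reduce(word, iterator, {
            write: function (key, sum) { totals[key] = sum; }
        });
    });
    return totals;
}

console.log(runLocally(["The quick fox", "the lazy dog"]));
```

On the cluster, only `map` and `reduce` matter; the harness just stands in for the grouping that Hadoop does between the two phases.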

We then ran a Pig command to kick off a set of MapReduce operations. The JavaScript below is compiled into Pig Latin, which is then executed as a series of MapReduce jobs.

```javascript
pig.from("asv://textfiles@gutenbergstore.blob.core.windows.net/").mapReduce("/user/admin/WordCount.js", "word, count:long").orderBy("count DESC").take(10).to("DaVinciTop10")
```

- Load files from ASV storage. Note the format: asv://container@storageAccountURL.

- Run MapReduce on the dataset using WordCount.js. The results are key-value pairs of word and count.

- Sort the key-value pairs by count in descending order.

- Copy the top 10 values to the DaVinciTop10 directory in the default HDFS.
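To make the steps above concrete, here is a minimal sketch in plain JavaScript that mimics the URI format and the ordering and take steps on in-memory data. It is not how Pig runs them on the cluster. The helper names `asvUri` and `topWords` are hypothetical, and the sample counts are made up for illustration.

```javascript
// Hypothetical helper: builds an asv:// URI in the container@account form
// used in the pig.from() call above.
function asvUri(container, storageAccount) {
    return "asv://" + container + "@" + storageAccount + ".blob.core.windows.net/";
}

// Hypothetical helper: mimics orderBy("count DESC").take(n) on an array of
// { word, count } records, without modifying the input array.
function topWords(records, n) {
    return records
        .slice()
        .sort(function (a, b) { return b.count - a.count; })
        .slice(0, n);
}

// Made-up sample counts, for illustration only.
var sample = [
    { word: "of", count: 3 },
    { word: "the", count: 7 },
    { word: "and", count: 5 }
];

console.log(asvUri("textfiles", "gutenbergstore"));
console.log(topWords(sample, 2));
```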

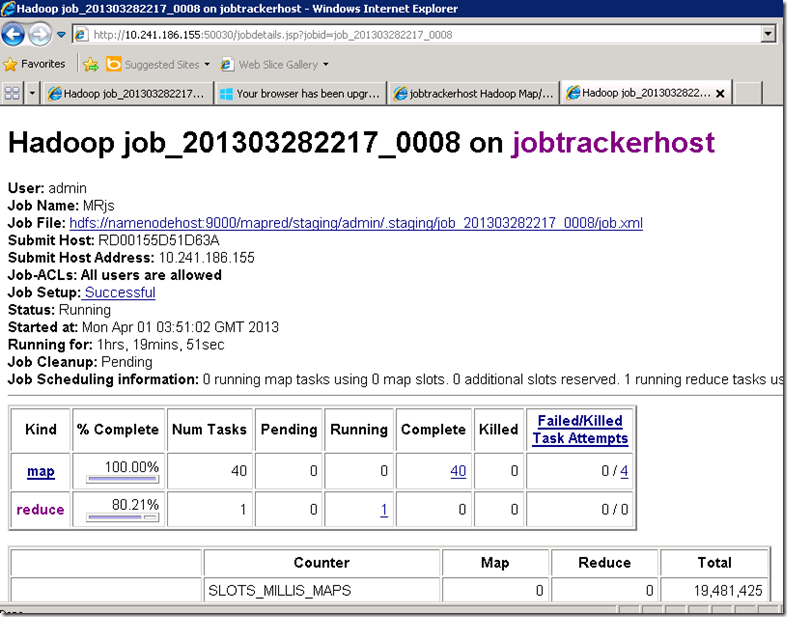

This process may take tens of minutes to complete, since the dataset is rather large.

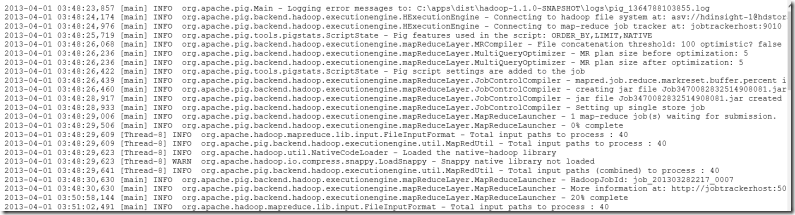

The View Log link provides detailed progress logs.

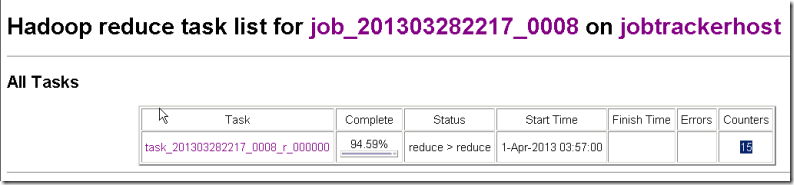

You can also check the progress by connecting to the head node over RDP, which gives you more detailed progress information than the “View Log” link in the JavaScript Console.

Click the Reduce link in the table above to check on the reduce job, and notice the shuffle and sort phases. Shuffle is the process in which each reducer is fed the output, from all of the mappers, that it needs to process.

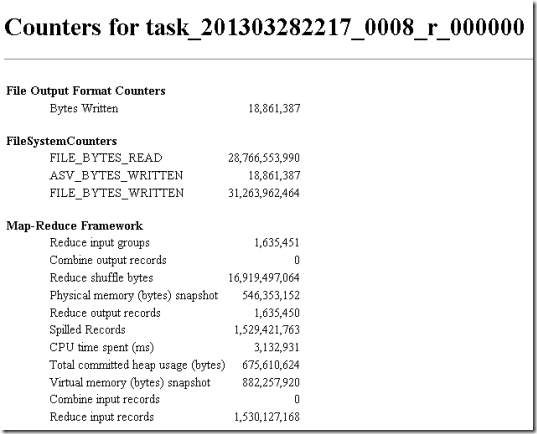
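As a rough mental model of shuffle and sort, the sketch below partitions mapper output by key so that every occurrence of a word lands on the same reducer, then sorts the keys for each reducer. It is a simplified in-memory picture, not Hadoop's actual implementation; the hash function, the `shuffleAndSort` name, and the sample pairs are illustrative assumptions.

```javascript
// Simplified, in-memory picture of shuffle and sort; Hadoop's real
// implementation moves data across the network and spills to disk.

// Illustrative string hash used to pick a reducer for a key.
function hashKey(key) {
    var hash = 0;
    for (var i = 0; i < key.length; i++) {
        hash = (hash * 31 + key.charCodeAt(i)) >>> 0;
    }
    return hash;
}

// mapperOutputs: one array of [key, value] pairs per mapper.
// Returns, per reducer, a list of { key, values } entries sorted by key.
function shuffleAndSort(mapperOutputs, reducerCount) {
    var partitions = [];
    for (var r = 0; r < reducerCount; r++) {
        partitions.push(Object.create(null));
    }
    mapperOutputs.forEach(function (pairs) {
        pairs.forEach(function (pair) {
            // Every occurrence of a key lands on the same reducer.
            var partition = partitions[hashKey(pair[0]) % reducerCount];
            (partition[pair[0]] = partition[pair[0]] || []).push(pair[1]);
        });
    });
    return partitions.map(function (partition) {
        return Object.keys(partition).sort().map(function (key) {
            return { key: key, values: partition[key] };
        });
    });
}

// Made-up output from two mappers, for illustration only.
var mapperOutputs = [
    [["the", 1], ["fox", 1]],
    [["the", 1], ["dog", 1]]
];
console.log(JSON.stringify(shuffleAndSort(mapperOutputs, 2)));
```

Each reducer then sees a key once, together with all of the values emitted for it, which is exactly what a word count reduce function needs in order to sum them.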

Click the Counters link, and you can see that a significant amount of data is read and written in this process. The nice thing about MapReduce jobs is that you can speed up the process by adding more compute resources. The mapping phase can be sped up significantly by running more processes in parallel.

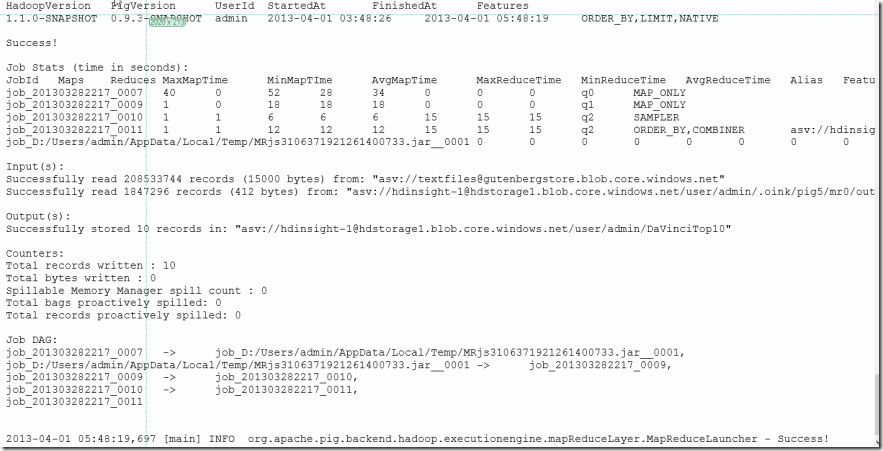

When everything finishes, the summary page tells us that the Pig script was really made up of 5 different jobs, 07 through 11. For learning purposes, I’ve posted my results at: https://github.com/wenming/BigDataSamples/blob/master/gutenberg/results.txt

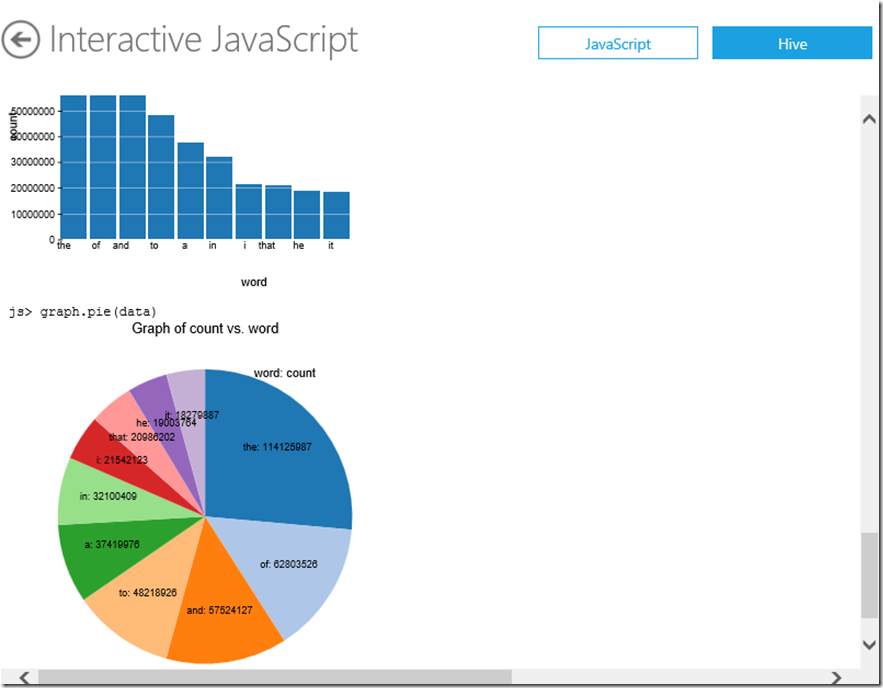

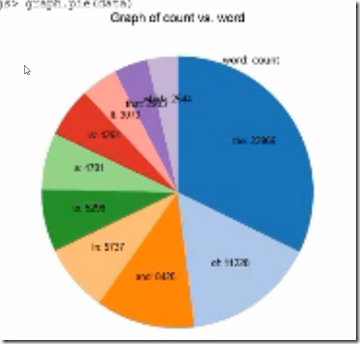

The JavaScript Console also provides you with simple graph functions.

```javascript
file = fs.read("DaVinciTop10")
data = parse(file.data, "word, count:long")
graph.bar(data)
graph.pie(data)
```

When we compare the results for the entire Gutenberg collection with those for just the Davinci.txt file, there’s a significant difference. With the new data, we can certainly estimate the occurrences of these top words in the English language more accurately than by looking through just one book.

Conclusion

More data gives us more confidence, which is why big data processing is so important. When it comes to processing large amounts of data, parallel big data processing tools such as HDInsight (Hadoop) can deliver results faster than running the same jobs on a single workstation. MapReduce is like the assembly language of big data: higher-level languages such as Pig Latin can be decomposed into a series of MapReduce jobs for us.