The basics of SMB Multichannel, a feature of Windows Server 2012 and SMB 3.0

1. Introduction

Windows Server 2012 includes a new feature called SMB Multichannel, part of the SMB 3.0 protocol, which increases network performance and availability for file servers.

1.1. Benefits

SMB Multichannel allows file servers to use multiple network connections simultaneously and provides the following capabilities:

- Increased Throughput. The file server can simultaneously transmit more data using multiple connections for high-speed network adapters or multiple network adapters.

- Network Fault Tolerance. When using multiple network connections at the same time, the clients can continue to work uninterrupted despite the loss of a network connection.

- Automatic Configuration. SMB Multichannel automatically discovers the existence of multiple available network paths and dynamically adds connections as required.

1.2. Requirements

SMB Multichannel requires the following:

- At least two computers running Windows Server 2012 or Windows 8.

At least one of the configurations below:

- Multiple network adapters

- One or more network adapters that support RSS (Receive Side Scaling)

- One or more network adapters configured with NIC Teaming

- One or more network adapters that support RDMA (Remote Direct Memory Access)

2. Configuration

2.1. Installing

SMB Multichannel is enabled by default. There is no need to install components, roles, role services or features.

The SMB client will automatically detect and use multiple network connections if a proper configuration is identified.

2.2. Disabling

SMB Multichannel is enabled by default and there is typically no need to disable it.

However, if you want to disable SMB Multichannel (for testing purposes, for instance), you can use the following PowerShell cmdlets:

On the SMB server side:

Set-SmbServerConfiguration -EnableMultiChannel $false

On the SMB client side:

Set-SmbClientConfiguration -EnableMultiChannel $false

Note: Disabling the feature on either the client or the server prevents the systems from using it.

2.3. Re-enabling

You can re-enable SMB Multichannel after disabling it by using the following cmdlets:

On the SMB server side:

Set-SmbServerConfiguration -EnableMultiChannel $true

On the SMB client side:

Set-SmbClientConfiguration -EnableMultiChannel $true

Note: You need to enable the feature on both the client and the server to start using it again.
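If you switch Multichannel off and on repeatedly while testing, it can help to wrap the two cmdlets in a small helper. The sketch below is a hypothetical function (`Set-SmbMultichannelState` is not a built-in cmdlet) that calls the same `Set-SmbServerConfiguration` and `Set-SmbClientConfiguration` cmdlets shown above, adding `-Force` to skip the confirmation prompt. Run it with `-Role Server` on the file server and `-Role Client` on the client, or with the default `Both` on a machine that plays both roles.

```powershell
# Hypothetical helper (not part of the SmbShare module): toggles SMB Multichannel
# on the local machine for the SMB server role, the SMB client role, or both.
function Set-SmbMultichannelState {
    param(
        [Parameter(Mandatory)][bool]$Enabled,
        [ValidateSet('Client', 'Server', 'Both')][string]$Role = 'Both'
    )
    if ($Role -in 'Server', 'Both') {
        # -Force suppresses the confirmation prompt
        Set-SmbServerConfiguration -EnableMultiChannel $Enabled -Force
    }
    if ($Role -in 'Client', 'Both') {
        Set-SmbClientConfiguration -EnableMultiChannel $Enabled -Force
    }
}

# Example: disable on this machine's SMB client only, then re-enable it.
# Set-SmbMultichannelState -Enabled $false -Role Client
# Set-SmbMultichannelState -Enabled $true -Role Client
```

Remember that the change only takes effect for Multichannel if both sides end up in the state you want.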

3. Sample Configurations

This section provides details on how SMB Multichannel works with a few different configurations using a variety of Network Interface Cards (NIC). Please note that these are only samples and many other configurations not detailed here are possible.

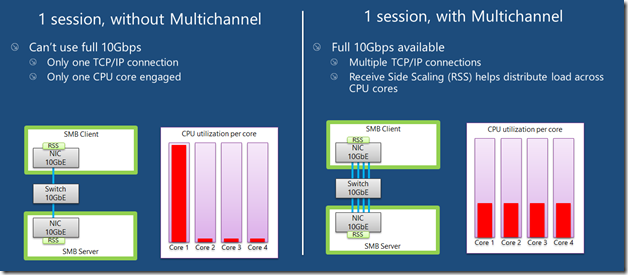

3.1. Single RSS-capable NIC

This typical configuration involves an SMB client and an SMB server, each configured with a single 10GbE NIC. Without SMB Multichannel, if there is only one SMB session established, SMB uses a single TCP/IP connection, which naturally gets affinitized with a single CPU core. If lots of small IOs are performed, it’s possible for that core to become a performance bottleneck.

Most NICs today offer a capability called Receive Side Scaling (RSS), which allows multiple connections to be spread across multiple CPU cores automatically. However, when using a single connection, RSS cannot help.

With SMB Multichannel, if the NIC is RSS-capable, SMB will create multiple TCP/IP connections for that single session, avoiding a potential bottleneck on a single CPU core when lots of small IOs are required.

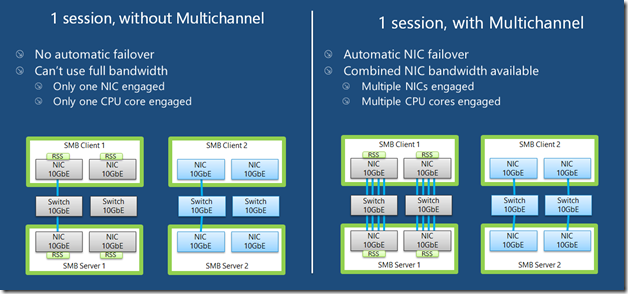

3.2. Multiple NICs

When using multiple NICs without SMB Multichannel, if there is only one SMB session established, SMB creates a single TCP/IP connection using only one of the many NICs available. In this case, not only is it impossible to aggregate the bandwidth of the multiple NICs (for instance, achieving 2Gbps when using two 1GbE NICs), but there is also a potential for failure if the specific NIC chosen is somehow disconnected or disabled.

With Multichannel, SMB will create multiple TCP/IP connections for that single session (at least one per interface or more if they are RSS-capable). This allows SMB to use the combined NIC bandwidth available and makes it possible for the SMB client to continue to work uninterrupted if a NIC fails.

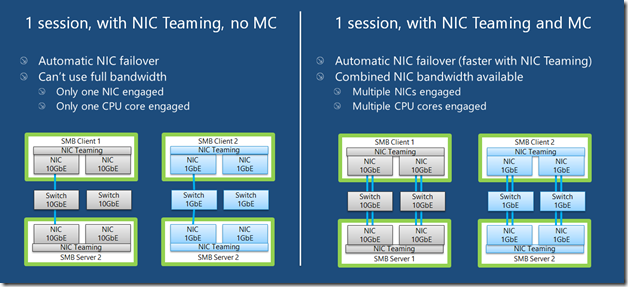

3.3. Teamed NICs

Windows Server 2012 supports the ability to combine multiple NICs into one using a new feature commonly referred to as NIC teaming. Although a team always provides fault tolerance, SMB without Multichannel will create only one TCP/IP connection per team, leading to limitations in both the number of CPU cores engaged and the use of the full team bandwidth.

SMB Multichannel will create multiple TCP/IP connections, allowing for better balancing across CPU cores with a single SMB session and better use of the available bandwidth. NIC Teaming will continue to offer the failover capability, which will work faster than using SMB Multichannel by itself. NIC Teaming is also recommended because it offers failover capabilities to other workloads that do not rely on SMB and therefore cannot benefit from the failover capabilities of SMB Multichannel.

Note: A team of RDMA-capable NICs is always reported as non-RDMA capable. If you intend to use the RDMA capabilities of the NIC, do not team them.

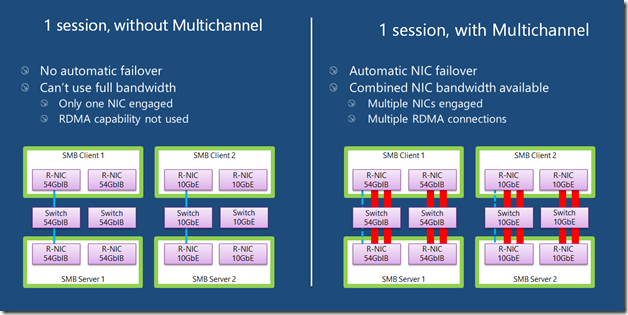

3.4. Single or Multiple RDMA NICs

SMB Multichannel is the feature responsible for detecting the RDMA capabilities of NICs to enable the SMB Direct feature (SMB over RDMA). Without SMB Multichannel, SMB will use regular TCP/IP with these RDMA-capable NICs (they all provide a TCP/IP stack side-by-side with the new RDMA stack).

With SMB Multichannel, SMB will detect the RDMA capability and create multiple RDMA connections for that single session (two per interface). This allows SMB to use the high throughput, low latency and low CPU utilization offered by these RDMA NICs. It will also offer fault tolerance if you’re using multiple RDMA interfaces.

Note 1: A team of RDMA-capable NICs is reported as non-RDMA capable. If you intend to use the RDMA capability of the NIC, do not team them.

Note 2: After at least one RDMA connection is created, the TCP/IP connection used for the original protocol negotiation is no longer used. However, that connection is kept around in case the RDMA connections fail.

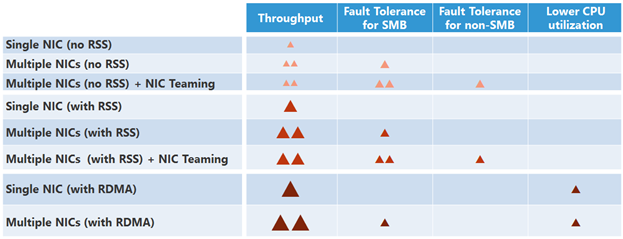

3.5. Multichannel, RDMA and NIC Teaming compatibility

Here’s a table summarizing the different capabilities available when combining SMB Multichannel, RDMA (SMB Direct) and NIC Teaming:

For non-RDMA NICs, your best bet is combining NIC Teaming with SMB Multichannel. This will give you the best throughput, plus fault tolerance for applications using SMB and other protocols.

When using RDMA NICs, NIC Teaming (also known as LBFO, Load Balancing and Failover) is not a good option, since it disables the RDMA capability of the NIC.

3.6. Sample Configurations that do not use SMB Multichannel

The following are sample network configurations that do not use SMB Multichannel:

- Single non-RSS-capable network adapters. This configuration would not benefit from multiple network connections, so SMB Multichannel is not used.

- Network adapters of different speeds. SMB Multichannel will choose to use the faster network adapter. Only network interfaces of the same type (RDMA, RSS or none) and speed will be used simultaneously by SMB Multichannel, so the slower adapter will be idle.
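The selection rule above can be modeled in a few lines. The sketch below is a simplified, hypothetical model (`Select-SmbInterfaceModel` is not the actual SMB client algorithm or a built-in cmdlet) that takes objects shaped like the output of `Get-SmbClientNetworkInterface`, using their `RdmaCapable`, `RssCapable` and `LinkSpeed` properties, and keeps only the interfaces of the best type and the highest speed. The preference order RDMA, then RSS, then neither is an assumption of the sketch; the text above only says that interfaces of different types or speeds are not used together.

```powershell
# Hypothetical model: returns the interfaces that share the best type and speed.
function Select-SmbInterfaceModel {
    param([Parameter(Mandatory)]$Interface)  # objects shaped like Get-SmbClientNetworkInterface output
    $ranked = foreach ($i in $Interface) {
        # Assumed ranking of interface types: RDMA (2) > RSS (1) > neither (0)
        $type = if ($i.RdmaCapable) { 2 } elseif ($i.RssCapable) { 1 } else { 0 }
        [pscustomobject]@{ Interface = $i; Type = $type; Speed = [uint64]$i.LinkSpeed }
    }
    # The best candidate: highest type first, then highest link speed
    $best = $ranked | Sort-Object Type, Speed -Descending | Select-Object -First 1
    # Keep every interface that matches both the best type and the best speed
    $ranked | Where-Object { $_.Type -eq $best.Type -and $_.Speed -eq $best.Speed } |
        ForEach-Object { $_.Interface }
}

# Example: Select-SmbInterfaceModel -Interface (Get-SmbClientNetworkInterface) | Format-Table FriendlyName, LinkSpeed
```

Comparing the model's answer with what `Get-SmbMultichannelConnection` actually reports is a quick way to spot an adapter that SMB sees differently than you expect.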

4. Operations

Here are a few common scenarios for testing SMB Multichannel:

4.1. Compare a file copy with and without SMB Multichannel

To measure the increased throughput provided by SMB Multichannel, follow these steps:

- Set up SMB Multichannel in one of the configurations described earlier

- Measure the time to perform a long-running file copy using SMB Multichannel

- Disable SMB Multichannel (see the instructions in section 2.2).

- Measure the time it takes to perform the same file copy without SMB Multichannel

- Re-enable SMB Multichannel (see the instructions in section 2.3).

- Compare the two results.

Note: to avoid the effects of caching, you should:

- Copy a large amount of data (more data than would fit in memory).

- Perform the copy twice, using the first copy as a warm-up and timing only the second copy.

- Restart both the server and the client before each test to make sure they operate under similar conditions.
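The warm-up rule above can be wrapped in a small timing helper. This is a sketch built on the standard `Measure-Command` cmdlet; `Measure-WarmCopy` is a hypothetical name, and the copy command you pass in (for example a `Copy-Item` from a share) is up to you. It runs the copy once untimed and returns the duration of the second run as a `TimeSpan`, so you can call it once with Multichannel enabled and once with it disabled and compare the results.

```powershell
# Hypothetical helper: runs a copy twice and times only the second run.
function Measure-WarmCopy {
    param([Parameter(Mandatory)][scriptblock]$Copy)
    # Warm-up run: establishes the SMB session and primes caches; not timed.
    & $Copy | Out-Null
    # Timed run: Measure-Command returns a System.TimeSpan.
    Measure-Command { & $Copy | Out-Null }
}

# Example (paths are placeholders):
# $with = Measure-WarmCopy -Copy { Copy-Item \\FS1\Share\big.vhdx D:\Temp\ -Force }
# ...disable SMB Multichannel and repeat to get $without...
# "Speed-up: {0:N2}x" -f ($without.TotalSeconds / $with.TotalSeconds)
```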

4.2. Fail one of multiple NICs during a file copy with SMB Multichannel

To confirm the failover capability of SMB Multichannel, follow these steps:

- Make sure SMB Multichannel is operating in a multi-NIC configuration.

- Perform a long-running file copy.

- While the file copy is running, simulate a failure of one of the network paths by disconnecting one of the cables (or by disabling one of the NICs).

- Confirm that the file copy continues using the surviving NIC, without any file copy errors.

Note: Make sure there are no other workloads using the disconnected/disabled path, to avoid failures in workloads that do not leverage SMB Multichannel.
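Besides watching for copy errors, you can confirm that the data survived the failover by comparing hashes of the source and the copy once the copy finishes. The sketch below uses the standard `Get-FileHash` cmdlet; `Test-CopyIntegrity` is a hypothetical helper name, and hashing a very large file takes time of its own, so run it after the timed portion of any test.

```powershell
# Hypothetical helper: returns $true when both files have the same SHA256 hash.
function Test-CopyIntegrity {
    param(
        [Parameter(Mandatory)][string]$Source,
        [Parameter(Mandatory)][string]$Destination
    )
    $src = Get-FileHash -LiteralPath $Source -Algorithm SHA256
    $dst = Get-FileHash -LiteralPath $Destination -Algorithm SHA256
    $src.Hash -eq $dst.Hash
}

# Example (paths are placeholders):
# Test-CopyIntegrity -Source \\FS1\Share\big.vhdx -Destination D:\Temp\big.vhdx
```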

5. Troubleshooting

Here are troubleshooting tips for SMB Multichannel.

5.1. Verifying if you’re using SMB Multichannel

You can use the following steps to verify you are using SMB Multichannel.

Step 1: Verify network adapter configuration

Use the following PowerShell cmdlets to verify you have multiple NICs and/or to verify the RSS and RDMA capabilities of the NICs. Run on both the SMB server and the SMB client.

Get-NetAdapter

Get-NetAdapterRSS

Get-NetAdapterRDMA

Get-NetAdapterHardwareInfo
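To read the output of these cmdlets side by side, you can join it by adapter name. The sketch below is a hypothetical helper (`Join-NicCapability` is not a built-in cmdlet) that assumes the `Name` and `LinkSpeed` properties from `Get-NetAdapter` and the `Name` and `Enabled` properties from `Get-NetAdapterRss` and `Get-NetAdapterRdma`. Adapters that do not appear in the RSS or RDMA output are reported as off for that feature.

```powershell
# Hypothetical helper: one row per adapter with its RSS and RDMA state.
function Join-NicCapability {
    param(
        [Parameter(Mandatory)]$Adapter,   # objects from Get-NetAdapter
        $Rss = @(),                       # objects from Get-NetAdapterRss
        $Rdma = @()                       # objects from Get-NetAdapterRdma
    )
    foreach ($a in $Adapter) {
        # Match the per-feature settings to the adapter by name
        $r = $Rss  | Where-Object Name -eq $a.Name | Select-Object -First 1
        $d = $Rdma | Where-Object Name -eq $a.Name | Select-Object -First 1
        [pscustomobject]@{
            Name      = $a.Name
            LinkSpeed = $a.LinkSpeed
            RssOn     = [bool]($r -and $r.Enabled)
            RdmaOn    = [bool]($d -and $d.Enabled)
        }
    }
}

# Run on both the client and the server:
# Join-NicCapability -Adapter (Get-NetAdapter) -Rss (Get-NetAdapterRss) -Rdma (Get-NetAdapterRdma) | Format-Table
```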

Step 2: Verify SMB configuration

Use the following PowerShell cmdlets to make sure SMB Multichannel is enabled, confirm the NICs are being properly recognized by SMB and that their RSS and RDMA capabilities are being properly identified.

On the SMB client, run the following PowerShell cmdlets:

Get-SmbClientConfiguration | Select EnableMultichannel

Get-SmbClientNetworkInterface

On the SMB server, run the following PowerShell cmdlets:

Get-SmbServerConfiguration | Select EnableMultichannel

Get-SmbServerNetworkInterface

Step 3: Verify the SMB connection

On the SMB client, start a long-running file copy to create a lasting session with the SMB Server. While the copy is ongoing, open a PowerShell window and run the following cmdlets to verify the connection is using the right version of SMB and that SMB Multichannel is working:

Get-SmbConnection

Get-SmbMultichannelConnection

Get-SmbMultichannelConnection -IncludeNotSelected
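The output of `Get-SmbMultichannelConnection` can also be summarized per server. The sketch below is a hypothetical helper (`Get-SmbMultichannelPathSummary` is not a built-in cmdlet) that assumes the `ServerName`, `Selected`, `ClientInterfaceIndex` and `ServerInterfaceIndex` properties of that output, and counts the distinct client/server interface pairs currently selected for each server. Keep in mind that with a single RSS-capable NIC on each side (section 3.1) you should expect only one interface pair, because the extra TCP/IP connections share that pair.

```powershell
# Hypothetical helper: how many interface pairs SMB selected for each server.
function Get-SmbMultichannelPathSummary {
    param([Parameter(Mandatory)]$Connection)  # objects from Get-SmbMultichannelConnection
    $Connection | Where-Object Selected | Group-Object ServerName | ForEach-Object {
        $group = $_
        # One key per client/server interface pair, e.g. "12->5"
        $pairs = @($group.Group |
            ForEach-Object { "$($_.ClientInterfaceIndex)->$($_.ServerInterfaceIndex)" } |
            Sort-Object -Unique)
        [pscustomobject]@{
            ServerName     = $group.Name
            InterfacePairs = $pairs.Count
            MultiplePaths  = $pairs.Count -gt 1
        }
    }
}

# Example: Get-SmbMultichannelPathSummary -Connection (Get-SmbMultichannelConnection -IncludeNotSelected)
```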

5.2. View SMB Multichannel Events (Optional)

SMB 3.0 offers an “Object State Diagnostic” event log that can be used to troubleshoot Multichannel (and therefore RDMA) connections.

Keep in mind that this is a debug log, so it’s very verbose and requires a special procedure for gathering the events. You can follow the steps below:

Step 1: Capture the events

First, enable the log in Event Viewer:

- Open Server Manager

- In Server Manager, click on “Tools”, then “Event Viewer”

- On the menu, select “View” then “Show Analytic and Debug Logs”

- Expand the tree on the left: Applications and Services Logs, Microsoft, Windows, SMB Client, ObjectStateDiagnostic

- On the “Actions” pane on the right, select “Enable Log”

- Click OK to confirm the action.

After the log is enabled, perform the operation that requires an RDMA connection. For instance, copy a file or run a specific operation.

If you’re using mapped drives, be sure to map them after you enable the log, or else the connection events won’t be properly captured.

Next, disable the log in Event Viewer:

- In Event Viewer, make sure you select Applications and Services Logs, Microsoft, Windows, SMB Client, ObjectStateDiagnostic

- On the “Actions” pane on the right, select “Disable Log”.

Step 2: Option 1: View the events in Event Viewer

You can review the events on the log in Event Viewer. You can filter the log to include only the events that confirm an SMB Multichannel connection, or only error events.

The “Smb_MultiChannel” keyword will filter for connection, disconnection and error events related to SMB. You can also filter by event numbers 30700 to 30706.

- Click on the “ObjectStateDiagnostic” item on the tree on the left.

- On the “Actions” pane on the right, select “Filter Current Log…” and enter “30700-30705” in the filter for Event IDs.

To view the SMB Multichannel events using Event Viewer, use the following steps on the SMB Client:

- In Event Viewer, expand the tree on the left to show “Applications and Services Logs”, “Microsoft”, “Windows”, “SMB Client”, “Operational”

- Click on “Filter Current Log…” on the Actions pane on the right and enter “30700-30705” in the filter for Event IDs.

To view only errors:

- Click on “Filter Current Log…” on the Actions pane on the right and click on the checkbox labeled “Errors”.

Step 2: Option 2: View the events using PowerShell

You can also use a PowerShell window and run the following cmdlets to view the events.

To see any RDMA-related connection events, you can use the following:

Get-WinEvent -LogName Microsoft-Windows-SMBClient/ObjectStateDiagnostic -Oldest |
    ? Message -match "RDMA"

To view all types of SMB Multichannel events, use the following cmdlet on the SMB client:

Get-WinEvent -LogName Microsoft-Windows-SMBClient/ObjectStateDiagnostic -Oldest |
    ? { $_.Id -ge 30700 -and $_.Id -le 30705 }

To list only errors, use the following cmdlet:

Get-WinEvent -LogName Microsoft-Windows-SMBClient/ObjectStateDiagnostic -Oldest |
    ? { $_.Id -ge 30700 -and $_.Id -le 30705 -and $_.Level -eq 2 }
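If you prefer a quick count to scrolling through a verbose debug log, the events can be grouped by ID. The sketch below is a hypothetical filter (`Get-MultichannelEventSummary` is not a built-in cmdlet) that accepts events piped from `Get-WinEvent` and relies on their `Id` and `Level` properties, treating Level 2 as an error as the cmdlets above do. The default ID range 30700 to 30705 matches the filters used in this section; adjust `-FirstId` and `-LastId` if you need a wider range.

```powershell
# Hypothetical filter: counts SMB Multichannel events per event ID.
function Get-MultichannelEventSummary {
    param(
        [Parameter(Mandatory, ValueFromPipeline)]$InputObject,  # events from Get-WinEvent
        [int]$FirstId = 30700,
        [int]$LastId = 30705,
        [switch]$ErrorsOnly
    )
    begin { $hits = [System.Collections.Generic.List[object]]::new() }
    process {
        $inRange = $InputObject.Id -ge $FirstId -and $InputObject.Id -le $LastId
        # Level 2 is "Error" in the Windows event log
        if ($inRange -and (-not $ErrorsOnly -or $InputObject.Level -eq 2)) { $hits.Add($InputObject) }
    }
    end {
        $hits | Group-Object Id | Sort-Object { [int]$_.Name } |
            ForEach-Object { [pscustomobject]@{ Id = [int]$_.Name; Count = $_.Count } }
    }
}

# Example:
# Get-WinEvent -LogName Microsoft-Windows-SMBClient/ObjectStateDiagnostic -Oldest | Get-MultichannelEventSummary -ErrorsOnly
```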

5.3. View SMB Performance Counters

There are several Performance counters related to SMB in Windows Server 2012. To view SMB-related performance information, follow these steps:

- Open Server Manager

- In the Tools menu, open Performance Monitor

- In Performance Monitor, click on “Performance Monitor” on the tree on the left.

- Switch to the Report View by pressing CTRL-G twice (or using the icon on the toolbar)

- Add performance counters to the view by pressing CTRL-N (or using the icon on the toolbar)

The following Performance Counters are useful when looking at activities related to SMB, SMB Direct and Network Direct:

| Counter Name | Shows information for |
|---|---|
| SMB Server Shares | Shares on the SMB Server |
| SMB Server Sessions | Sessions on the SMB Server |
| SMB Client Shares | Shares on the SMB Client |

6. Number of SMB Connections per Interface

SMB Multichannel will use a different number of connections depending on the type of interface:

- For RSS-capable interfaces, 4 TCP/IP connections per interface are used

- For RDMA-capable interfaces, 2 RDMA connections per interface are used

- For all other interfaces, 1 TCP/IP connection per interface is used

There is also a limit of 8 connections total per client/server pair, which will limit the number of connections per interface.

For instance, if you have 3 RSS-capable interfaces, you will end up with 3 connections on the first, 3 connections on the second and 2 connections on the third interface.
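The example above can be reproduced with a small model. The sketch below assumes that connections are handed out round-robin across interfaces until each interface reaches its own limit (4 for RSS, 2 for RDMA, 1 otherwise) or the per-server total of 8 is reached. The round-robin order is an assumption chosen because it matches the 3/3/2 example, not a documented detail, and `Get-SmbConnectionPlan` is a hypothetical name.

```powershell
# Hypothetical model of how many connections each interface gets.
function Get-SmbConnectionPlan {
    param(
        [Parameter(Mandatory)][string[]]$InterfaceType,  # 'RSS', 'RDMA' or 'Other', one per interface
        [int]$MaximumConnectionCountPerServer = 8,
        [int]$PerRss = 4, [int]$PerRdma = 2, [int]$PerOther = 1
    )
    # Per-interface limit based on the interface type
    $limit = @(foreach ($t in $InterfaceType) {
        switch ($t) { 'RSS' { $PerRss } 'RDMA' { $PerRdma } default { $PerOther } }
    })
    $plan = @(0) * $InterfaceType.Count
    $total = 0
    do {
        $added = $false
        # One round: give each interface one more connection if it has room
        for ($i = 0; $i -lt $plan.Count; $i++) {
            if ($total -ge $MaximumConnectionCountPerServer) { break }
            if ($plan[$i] -lt $limit[$i]) { $plan[$i]++; $total++; $added = $true }
        }
    } while ($added -and $total -lt $MaximumConnectionCountPerServer)
    ,$plan
}

# Example: Get-SmbConnectionPlan -InterfaceType RSS, RSS, RSS
```

For three RSS-capable interfaces this returns 3, 3 and 2 connections, the same split described above.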

We recommend that you keep the default settings for SMB Multichannel. However, those parameters can be adjusted.

6.1. Total Connections per client/server pair

You can configure the maximum total number of connections per client/server pair using:

Set-SmbClientConfiguration -MaximumConnectionCountPerServer <n>

6.2. Connections per RSS-capable NIC

You can configure the number of SMB Multichannel connections per RSS-capable network interface using the PowerShell cmdlet:

Set-SmbClientConfiguration -ConnectionCountPerRssNetworkInterface <n>

6.3. Connections per RDMA-capable NIC

It is even less likely that you’ll need to adjust the number of connections per RDMA-capable interface.

That can be configured via a registry key using the following PowerShell command:

Set-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Services\LanmanWorkstation\Parameters" `
    -Name ConnectionCountPerRdmaNetworkInterface -Type DWORD -Value <n> -Force

6.4. Connections for other types of NIC

For NICs that are not RSS-capable or RDMA-capable, there is likely no benefit to using multiple connections. In fact, this will likely reduce your performance.

However, for troubleshooting purposes, there is also a registry key to change the default setting of 1 connection per NIC.

That can be configured via a registry key using the following PowerShell command:

Set-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Services\LanmanWorkstation\Parameters" `
    -Name ConnectionCountPerNetworkInterface -Type DWORD -Value <n> -Force

7. SMB Multichannel Constraints

Based on feedback during the Beta, we added a new set of cmdlets in the Release Candidate, using the SmbMultichannelConstraint noun, that allow you to control the SMB Multichannel behavior in more complex network configurations.

For instance, if you have 4 NICs in your system and you want the SMB client to use only two specific NICs when you access server FS1, you can accomplish that with the following PowerShell cmdlets:

New-SmbMultichannelConstraint -ServerName FS1 -InterfaceIndex 12, 19

Or

New-SmbMultichannelConstraint -ServerName FS1 -InterfaceAlias RDMA1, RDMA2

7.1. Notes on SMB Multichannel Constraints

Here are a few notes and comments on how to use SMB Multichannel Constraints:

- This is configured on the SMB 3.0 client and it’s persisted in the registry.

- Constraints are configured per server, so you can configure a different set of interfaces for each server if required.

- You list the interfaces you want to use, not the interfaces you want to avoid.

- You can get a list of interfaces in your system using Get-NetAdapter.

- For servers not specified, the default SMB Multichannel behavior for that client continues to apply.

- For a reference of other cmdlets related to SMB Multichannel Constraints, check the blog post on the basics of SMB PowerShell

7.2. Recommendation: keep the default behavior, avoid constraints

This is an advanced setting, available only via PowerShell (or WMI). Please be careful when configuring these SMB Multichannel constraints, because you have the potential to apply a less-than-ideal configuration and end up with something that does not provide you the best level of network fault tolerance (also known as “shooting yourself in the foot”).

This is especially true if you add or remove network adapters from your system and forget to adjust your constraints accordingly. We highly recommend that you stick to the default, let-SMB-Multichannel-figure-it-out configuration if at all possible, since this will let SMB 3.0 dynamically and automatically adjust to any changes in network configuration shortly after they happen.
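One way to catch the situation described above is to compare the interfaces referenced by your constraints with the adapters that actually exist. The sketch below is a hypothetical helper (`Find-StaleSmbConstraint` is not a built-in cmdlet) that assumes an `InterfaceIndex` property on the objects returned by `Get-SmbMultichannelConstraint`, and takes the current adapter indexes, for example from `(Get-NetAdapter).ifIndex`. It returns any constraint that points at an interface index no longer present.

```powershell
# Hypothetical helper: constraints whose interface index no longer exists.
function Find-StaleSmbConstraint {
    param(
        [Parameter(Mandatory)][AllowEmptyCollection()]$Constraint,  # objects from Get-SmbMultichannelConstraint
        [Parameter(Mandatory)][int[]]$AdapterIndex                   # e.g. (Get-NetAdapter).ifIndex
    )
    $Constraint | Where-Object { $_.InterfaceIndex -notin $AdapterIndex }
}

# Example:
# Find-StaleSmbConstraint -Constraint (Get-SmbMultichannelConstraint) -AdapterIndex (Get-NetAdapter).ifIndex
```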

8. Conclusion

I hope this blog post has helped you understand the basics of SMB Multichannel. I would encourage you to try it out in any of the configurations shown.

For additional information on the Windows Server 2012 File Server, SMB 3.0 and SMB Direct, see the links at Updated Links on Windows Server 2012 File Server and SMB 3.0.

Update on 5/14: Minor content update

Update on 6/28: Added section on SMB Multichannel Constraints

Comments

- Anonymous

January 01, 2003

Jose, do you know if there are any plans to backport SMB 3.0 to Windows 7? Or is SMB 3.0 already baked in? - Anonymous

January 01, 2003

@Jeffrey Windows 7 implements SMB 2.1. There are way too many new features in SMB 3.0 to back port to previous Windows versions. Obviously Windows 8 and Windows Server 2012 will be able to talk SMB to Windows 7, but they will negotiate down to SMB 2.1 in that case. The protocol clearly describes the scenario of negotiating with previous versions. - Anonymous

January 01, 2003

@Jeffrey Yes, that is correct. Assuming you are using SMB 3.0 (Windows Server 2012 failover cluster and Windows 8 clients), your users won't lose their sessions. - Anonymous


January 01, 2003

Nice! Can you go over how this would impact using win2012 as a fail-over cluster for file serving? Specifically, i'm grasping at straws, if the node goes down that hosts your file share, currently that session is lost and you have to re-establish against the new server, it almost sounds like that may not be an issue now? - Anonymous

January 01, 2003

If you have dual network adapters for every node of your file server cluster, you can certainly configure it to use SMB Multichannel. If only one network path fails, you will continue to use the same node if you configure your network resources with "OR" dependencies. See configuration 2.8 at this post: blogs.technet.com/.../using-the-multiple-nics-of-your-file-server-running-windows-server-2008-and-2008-r2.aspx. Also, item 4 on the same blog post has some additional details on how this works from a clustering perspective. We also have a feature in SMB 3.0 called Transparent Failover. This allows file shares to continue to work from another cluster node when the entire node fails. SMB 3.0 essentially resumes the sessions on one of the surviving nodes, without any impact to the application. - Anonymous


June 08, 2012

Jose, in 3.4 with multiple RDMA adapters on both source and destination servers, how should IP settings be configured on those adapters? Should both adapters on each server be in the same VLAN and both configured with a default gateway? We would like to do RDMA over 10 Gb and have fault tolerance. - Anonymous


July 06, 2012

nice!! very usefullThanks a lot for sharing this awesome blog post. I also have been looking everywhere for this.<SNIP> - Anonymous

January 27, 2014

Having decided to start this blog to convey my experience with network analysis and troubleshooting, - Anonymous


August 05, 2014

Pingback from #StorageGlory Achieved : 30 Days on a Windows SAN | Agnostic Computing - Anonymous


April 22, 2016

Hello and thanks for this great blog. We are using a 2-node SOF-Cluster as SMB3 storage for our Hyper-V Cluster. I can see following error messages in SMBClient/Connectivity Event log every 10 minutes: The network connection failed. Error: {Device Timeout} The specified I/O operation on %hs was not completed before the time-out period expired. Server name: fe80::dced:600c:330b:a63b%19 Server address: 192.168.120.122:445 Connection type: Wsk Guidance: This indicates a problem with the underlying network or transport, such as with TCP/IP, and not with SMB ... This IPv6 address (name) and the IPv4 address is from the Microsoft Failover Cluster Virtual Adpater of the other storage node. I can't find the Problem or is it no problem. Any help? Andreas - Anonymous

April 22, 2016

That server name is usually associated with the cluster internal network. The fact that it happens every 10 minutes probably means that it failed to connect and it's retrying after a while. You should probably check if the cluster nodes can talk to each other via this 192.168.120.* network. - Anonymous

April 28, 2016

The Cluster nodes can ping each other node via this Network without any Problems. Why the SMBClient from one node will reach the other node via Cluster communication Network? SMB should use other more faster Networks. Andreas - Anonymous

May 04, 2016

Now, i have disabled SMB multichannelconnection because we have only one fast SMB Connection over Infiniband actually. Error goes away. Or should it be better to set multichannelconstraints? Andreas - Anonymous

July 26, 2016

@Andreas Do you have any problems after disabling Multichannle, I had have the same situation bit with emulex ROCE and Win 2016 TP5 storage spaces. But I'm not sure do I have solution for my problems. But in logs don't have errors about RDMA adapters

- Anonymous


June 21, 2016

How would this work if a host has 2 nics, one is routed L3 and the other is not, L2 only. Connection target is on the L2 subnet - Anonymous

July 07, 2016

Hi, I'm having trouble getting SMB Multichannel to work and am out of ideas what else to try.

Configuration:

- Switch: TP-Link TL-SG2210P

- PC 1: 4C/8T CPU, Intel I350-T4 V2 two 1 Gbps connections to the switch, Windows 8.1 Pro x64

- PC 2 4C/8T CPU, Intel I350-T2 V2 two 1 Gbps connections to the switch, Windows 10 Pro x64

- The switch has the latest firmware installed and has been reset to factory defaults to avoid any past LAG settings from interfering

- All Intel NICs have the latest Intel drivers installed (from the ethernet connections package 21.0, I extracted the drivers only to avoid issues with the separate Intel management software)

- Neither at the PCs nor the switch are any LAG/teaming settings enabled manually

- Each IPV4 address is set manually, each NIC can ping every other NIC (IPv6 is disabled)

- Large file transfers via Windows Explorer (accessed the shares via \PCxy, not their IP addresses) are stuck at 113 MB/s (118 MB/s with Jumbo Frames enabled), during which only one NIC on each PC is at 99 %, the other one is at 0 % activity

- Windows PowerShell cmdlets show on each PC:

1) "get-NetAdapterRSS": RSS is active at the connected NICs

2) "get-SMBServerNetworkInterface": "RSS Capable : True"

3) "get-SMBClientNetworkInterface": "RSS Capable : True"

4) "get-SMBServerConfiguration": "EnableMultiChannel : True"

5) "get-SMBClientConfiguration": "EnableMultiChannel : True"

6) "Get-SmbMultichannelConnection" during a long still active file transfer doesn't show anything

I'm a little baffled what to check next since SMB Multichannel should "just" work with suitable software/hardware combinations. - Anonymous

October 30, 2016

Hi Jose, Can you please let me know the max no of RDMA NIC's that we can have on a single SMB Server-Client pair? We have a scale up server where we can have upwards of 8 RDMA NIC's and upwards too. - Anonymous

December 12, 2016

You will be limited by the number of slots and the number of PCI lanes available.

- Anonymous


December 01, 2016

hi all, does RSS work together with RDMA? if i have RDMA cards, does that mean RSS is not on? section 3 does not mention if rss is working when using RDMA ( SMB DIRECT)thanks - Anonymous

January 17, 2017

What does triangle mark mean in different color, number, and size in compatibility table at ch3.5?@@- Anonymous

February 12, 2017

That was just an attempt to show which one is better.

- Anonymous


February 23, 2017

does RSS work together with RDMA? if i have RDMA cards, does that mean RSS is not on? section 3 does not mention if rss is working when using RDMA ( SMB DIRECT)thanks- Anonymous

February 24, 2017

RDMA adapters use a much more sophisticated mechanism, which is many times better than RSS. Instead of using multiple cores of your main CPU, it uses the CPU of the adapter.

- Anonymous


March 02, 2017

Even if IP addresses of different segments are assigned to each NIC, is this multi-channel function used and communication is to be carried out? Or, as for the NIC that can not be reached by the opponent, it is not used beforehand. - Anonymous

May 16, 2017

It should work even in that case. You should try it out...

- Anonymous


October 20, 2017

I thought: 1. That NICS need to be the same for SMB to work seamlessly 2. That you should leave one NIC out of the scope of constraint for out of band management of the server were the SMB multichannel may be saturated with traffic.