Deploying Deep Learning Models on Kubernetes with GPUs

This post is authored by Mathew Salvaris and Fidan Boylu Uz, Senior Data Scientists at Microsoft.

One of the major challenges that data scientists often face is closing the gap between training a deep learning model and deploying it at production scale. Training of these models is a resource intensive task that requires a lot of computational power and is typically done using GPUs. The resource requirement is less of a problem for deployment since inference tends not to pose as heavy a computational burden as training. However, for inference, other goals also become pertinent such as maximizing throughput and minimizing latency. When inference speed is a bottleneck, GPUs show considerable performance gains over CPUs. Coupled with containerized applications and container orchestrators like Kubernetes, it is now possible to go from training to deployment with GPUs faster and more easily while satisfying latency and throughput goals for production grade deployments.

In this tutorial, we provide step-by-step instructions to go from loading a pre-trained Convolutional Neural Network model to creating a containerized web application that is hosted on Kubernetes cluster with GPUs on Azure Container Service (AKS). AKS makes it quick and easy to deploy and manage containerized applications without much expertise in managing Kubernetes environment. It eliminates complexity and operational overhead of maintaining the cluster by provisioning, upgrading, and scaling resources on demand, without taking the applications offline. AKS reduces the cost and complexity of using a Kubernetes cluster by managing the master nodes for which the user does no incur a cost. Azure Container Service has been available for a while and similar approach was provided in a previous tutorial to deploy a deep learning framework on Marathon cluster with CPUs . In this tutorial, we focus on two of the most popular deep learning frameworks and provide the step-by-step instructions to deploy pre- trained models on Kubernetes cluster with GPUs.

The tutorial is organized in two parts, one for each deep learning framework, specifically TensorFlow and Keras with TensorFlow backend. Under each framework, there are several notebooks that can be executed to perform the following steps:

- Develop the model that will be used in the application.

- Develop the API module that will initialize the model and make predictions.

- Create Docker image of the application with Flask and Nginx.

- Test the application locally.

- Create an AKS cluster with GPUs and deploy the web app.

- Test the web app hosted on AKS.

- Perform speed tests to understand latency of the web app.

Below, you will find short descriptions of the steps above.

Develop the Model

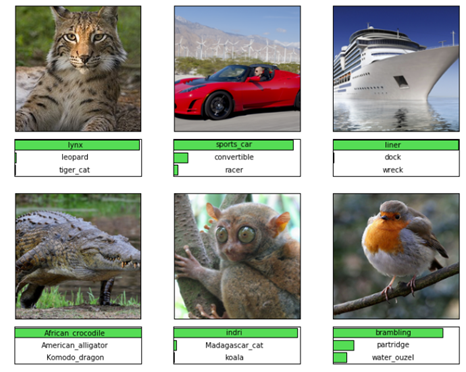

As the first step of the tutorial, we load the pre-trained ResNet152 model, pre-process an example image to the required format and call the model to find the top predictions. The code developed in this step will be used in the next step when we develop the API module that initializes the model and makes predictions.

Develop the API

In this step, we develop the API that will call the model. This driver module initializes the model, transforms the input so that it is in the appropriate format and defines the scoring method that will produce the predictions. The API will expect the input to be in JSON format. Once a request is received, the API will convert the JSON encoded request into the image format. The first function of the API loads the model and returns a scoring function. The second function processes the images and uses the first function to score them.

Create Docker Image

In this step, we create the Docker image that has three main parts, the web application, the pretrained model and the driver module for executing the model based on the requests made to the web application. The Docker image is based on a Nvidia image to which we only add the necessary Python dependencies and install the deep learning framework to keep the image as lightweight as possible. The Flask web app will be running on the default port 80 which is exposed on the docker image and Nginx is used to create a proxy from port 80 to port 5000. Once the container is built, we push it to a public Docker hub account for AKS cluster to pull it in later steps.

Test the Application Locally

In this step, we test our docker image by pulling it and running it locally. This step is especially important to make sure the image performs as expected before we go through the entire process of deploying to AKS. This will reduce the debugging time substantially by checking if we can send requests to the Docker container and receive predictions back properly.

Create and AKS Cluster and Deploy

In this step, we use Azure CLI to login to Azure, create a resource group for AKS and create the cluster. We create an AKS cluster with 1 node using Standard NC6 series with 1 GPU. After the AKS cluster is created, we connect to the cluster and deploy the application by defining the Kubernetes manifest where we provide the image name, map port 80 and specify Nvidia library locations. We set the number of Kubernetes replicas to 1 which can later be scaled up to meet certain throughput requirements (the latter is out of scope for this tutorial). Kubernetes also has a dashboard that can simply be accessed through a web browser.

Test the Web App

In this step, we test the web application that is deployed on AKS to quickly check if it can produce predictions against images that are sent to the service.

Perform Speed Tests

In this step, we use the deployed service to measure the average response time by sending 100 asynchronous requests with only four concurrent requests at any time. These types of tests are particularly important to perform, especially for deployments with low latency requirements to make sure the cluster is scaled to meet the demand. The result of the tests suggest that the average response times are less than a second for both frameworks with TensorFlow (~20 images/sec) being much faster than its Keras (~12 images/sec) counterpart on a single K80 GPU.

As a last step, to delete the AKS and free up the Azure resources, we use the commands provided at the end of the notebook where AKS was created.

We hope you give this tutorial a try! Reach out to us with any comments or questions below.

Mathew & Fidan

Acknowledgements

We would like to thank William Buchwalter for helping us craft the Kubernetes manifest files, Daniel Grecoe for testing the throughput of the models and lastly Danielle Dean for the useful discussions and proofreading of the blog post.