Reviewed by: Jos de Bruijn, Mike Weiner, Mike Ruthruff, Kun Cheng

As we discussed in a prior blog on considerations around validation errors, part of transaction processing on memory-optimized tables involves transactions taking dependencies on other transactions during validation and commit processing. With SQL Server 2014 and SQL Server 2016, there is a limit of 8 incoming or outgoing transaction dependencies that can be taken. In other words, up to 8 transactions can take a dependency on a single transaction TX1 (incoming dependencies), or alternatively a single transaction can depend on up to 8 other transactions (outgoing dependencies). Any transaction that attempts to take a dependency beyond that limit fails with the error below:

Error 41839 – "Transaction exceeded the maximum number of commit dependencies and the last statement was aborted. Retry the statement."

Note: Error 41839 was introduced in SQL Server 2016. In SQL Server 2014 the error number 41301 covered both normal dependency failures and the scenario discussed here on dependency limits being exceeded, which made it hard to distinguish between the two distinct cases.

The window of time in which a dependency is taken is very short, occurring only during validation and commit processing, and most workloads will never run into this limit. There are valid application scenarios that can hit this error, though. One of the most common we have seen is a large number of read transactions taking a dependency on the same write transaction. This can happen, for example, when the same record (or range of records) in a table is frequently updated and, while an update is committing, a large number of read threads query that same range.

We are more likely to see this when:

- The write transaction takes longer to commit. Long here is relative: it could be due to a log IO bottleneck during the commit, a synchronous Availability Group replica that delays the commit, or a statement that is not indexed appropriately.

- The read transactions have a missing index and as a result process more rows or take longer.

- Repeatable read or serializable isolation levels are being used.

- Artificial (test) workloads can be more susceptible if they all query the same record, which is not the normal pattern.

- A more complex case involving many different types of transactions and incoming and outgoing dependencies.
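Since a slow log commit is the first factor listed above, one quick check is the average write latency on the transaction log. The query below is a minimal sketch, assuming a database named IMOLTP like the one created in the walkthrough that follows. The figures are cumulative since the database came online, so compare two snapshots taken around a test run rather than reading a single value.

```sql
-- Sketch: average write latency per transaction log file of the demo database.
-- Assumes the database is named IMOLTP; change the name for your environment.
SELECT  mf.name                 AS log_file,
        vfs.num_of_writes,
        vfs.io_stall_write_ms,
        CASE WHEN vfs.num_of_writes = 0 THEN NULL
             ELSE 1.0 * vfs.io_stall_write_ms / vfs.num_of_writes
        END                     AS avg_write_latency_ms
FROM    sys.dm_io_virtual_file_stats(DB_ID(N'IMOLTP'), NULL) AS vfs
JOIN    sys.master_files AS mf
        ON  mf.database_id = vfs.database_id
        AND mf.file_id     = vfs.file_id
WHERE   mf.type_desc = N'LOG';
```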

Let us walk through reproducing and troubleshooting the scenario with the script below:

-- Setup script to Create a database and necessary objects

CREATE DATABASE IMOLTP

GO

-- create database with a memory-optimized filegroup and a container.

ALTER DATABASE IMOLTP ADD FILEGROUP IMOLTP_MOD CONTAINS MEMORY_OPTIMIZED_DATA

ALTER DATABASE IMOLTP ADD FILE (NAME='imoltp_mod1', FILENAME='D:\temp\imoltp_mod1')

TO FILEGROUP IMOLTP_MOD

ALTER DATABASE IMOLTP SET MEMORY_OPTIMIZED_ELEVATE_TO_SNAPSHOT=ON

GO

USE IMOLTP

go

--Create Events table and insert a few rows

CREATE TABLE tblEvent( EventID int not null PRIMARY KEY NONCLUSTERED,

EventName nvarchar(100),

EventDescription nvarchar(max)

) WITH(MEMORY_OPTIMIZED=on)

GO

INSERT INTO tblEvent VALUES (1,'OldEvent',REPLICATE(N'o',30000))

INSERT INTO tblEvent VALUES (2,'NewestEvent',REPLICATE(N'n',30000))

GO

Read Transaction: We will simulate a scenario where a large number of readers go after the same record, the latest event record, by executing the statement below:

DECLARE @EventName nvarchar(25);

SELECT @EventName= EventName from tblEvent where EventID=2

To do that, we will use ostress.exe from the RML Utilities to simulate 100 connections performing that same read activity:

C:\RML Utilities> ostress.exe -SMySQLServerName -E -dIMOLTP -n100 -r100000 -Q"DECLARE @EventName nvarchar(25); SELECT @EventName=EventName from tblEvent where EventID=2" -q

Parameters: -S = server name, -E = Windows authentication, -d = database, -n = 100 threads, -r = 100000 iterations per thread, -Q = query to run, -q = quiet mode.

Write Transaction: We have a transaction that needs to update the description of the event. The update below is not realistic, but it could be any transaction that changes that record and forces other transactions to take a dependency on it. The longer this transaction takes to execute, the more dependencies can pile up.

BEGIN TRAN

update tblEvent set EventDescription = REPLICATE(N'a', 30000) where EventID=2

COMMIT

GO

Once you issue the commit statement above, the command prompt window running the select statements will show errors such as:

07/04/16 19:33:15.508 [0x0000AA0C] [spid 105] SQLState: 42000, Native Error: 41839, Severity: 16, State: 153, Line: 1 [SQL Server] Transaction exceeded the maximum number of commit dependencies and the last statement was aborted. Retry the statement.
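Because the error text itself asks the caller to retry, applications typically wrap the statement in retry logic. The following is a minimal sketch of that idea for the reader statement used above, not a definitive implementation: the retry budget of 10 and the 1 ms back-off are arbitrary illustrative values, and only the two dependency-related error numbers mentioned in this post are treated as retryable.

```sql
-- Sketch: retry the read when a commit-dependency error is raised.
DECLARE @retry int = 10;              -- hypothetical retry budget
DECLARE @EventName nvarchar(25);

WHILE @retry > 0
BEGIN
    BEGIN TRY
        SELECT @EventName = EventName FROM tblEvent WHERE EventID = 2;
        SET @retry = 0;               -- success: leave the loop
    END TRY
    BEGIN CATCH
        SET @retry -= 1;
        -- 41839: dependency limit exceeded (SQL Server 2016)
        -- 41301: dependency failure (also covers the limit case in SQL Server 2014)
        IF @retry > 0 AND ERROR_NUMBER() IN (41839, 41301)
        BEGIN
            -- Roll back only if an enclosing transaction has been doomed.
            IF XACT_STATE() = -1
                ROLLBACK TRANSACTION;
            WAITFOR DELAY '00:00:00.001';   -- brief back-off before retrying
        END
        ELSE
        BEGIN
            PRINT 'Error is not retryable or the retry budget is exhausted.';
            THROW;
        END
    END CATCH
END
```

Retrying hides the symptom from the caller, but it does not remove the underlying contention, so the troubleshooting steps that follow still apply.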

From the individual error itself, all you know is that the transaction exceeded the maximum number of commit dependencies. You can view commit dependencies either via Extended Events or, for longer-running transactions, with the sys.dm_db_xtp_transactions DMV.

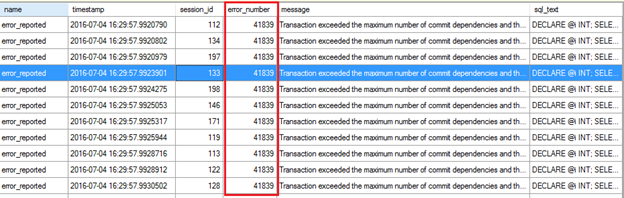
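A query along the following lines can be used to look at the DMV while the workload is running. This is a sketch only: the commit_dependency_* columns are not described in detail in the product documentation, so reading them as dependency counters is an assumption based on their names. Because dependencies are held so briefly, the query may need to be run repeatedly to catch anything.

```sql
-- Sketch: in-flight In-Memory OLTP transactions in the current database.
-- Interpreting commit_dependency_* as dependency counters is an assumption.
USE IMOLTP;

SELECT  xtp_transaction_id,
        transaction_id,
        session_id,
        state_desc,
        result_desc,
        commit_dependency_count,
        commit_dependency_total_attempt_count
FROM    sys.dm_db_xtp_transactions
ORDER BY commit_dependency_count DESC;
```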

To help troubleshoot this issue, you can create the Extended Event session below, which tracks how many dependency errors we are hitting and captures the statements that take dependencies.

-- Dependency Diagnostics Extended Events

-- Tracks error 41839 as well as TX dependency acquires

CREATE EVENT SESSION [TraceUserDependency] ON SERVER

ADD EVENT sqlserver.error_reported(

ACTION(sqlserver.session_id,sqlserver.sql_text)

WHERE ([error_number]=(41839))),

ADD EVENT XtpEngine.dependency_acquiredtx_event(

ACTION(sqlserver.session_id,sqlserver.sql_text,sqlserver.transaction_id,sqlserver.tsql_frame,sqlserver.tsql_stack)),

ADD EVENT XtpEngine.waiting_for_dependenciestx_event(

ACTION(sqlserver.session_id,sqlserver.sql_text,sqlserver.transaction_id,sqlserver.tsql_frame,sqlserver.tsql_stack))

ADD TARGET package0.event_file(SET filename=N'd:\temp\TraceUserErrors_Dependency.xel',max_file_size=(250),max_rollover_files=(2)),

ADD TARGET package0.histogram(SET filtering_event_name=N'sqlserver.error_reported',source=N'error_number',source_type=(0))

WITH (STARTUP_STATE=OFF)

GO

-- START

ALTER EVENT SESSION [TraceUserDependency] on SERVER STATE = START

Now rerun the read transactions with RML and the write transaction, then look at the output of the Extended Events session. It shows 8 dependencies, all on the same transaction ID (our write transaction, see the TxIdDependentOn column), taken from different sessions and different transactions (see the transaction_id column).

Any further dependencies beyond the limit of 8 fail with error 41839. The Extended Event trace output also lets you see whether the failing transactions are performing the same work or are different transactions, so that you can tune accordingly.

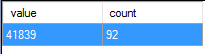

From the Extended Event histogram you can also see that 92 statements hit error 41839, which indicates there were more than 8 dependencies on this transaction.
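If you prefer a query to the SSMS viewer, the same information can be read with T-SQL. The sketch below assumes the TraceUserDependency session defined earlier is still running and that its event file is in d:\temp. The first query shreds the histogram target into one row per error number, and the second counts captured events by name from the event file.

```sql
-- Sketch: read the histogram target of the TraceUserDependency session.
SELECT  slot.value('(value)[1]', 'int') AS error_number,
        slot.value('@count', 'bigint')  AS hits
FROM   (SELECT CAST(t.target_data AS xml) AS x
        FROM   sys.dm_xe_sessions AS s
        JOIN   sys.dm_xe_session_targets AS t
               ON t.event_session_address = s.address
        WHERE  s.name = N'TraceUserDependency'
          AND  t.target_name = N'histogram') AS h
CROSS APPLY h.x.nodes('/HistogramTarget/Slot') AS n(slot);

-- Sketch: count captured events by name from the event file target.
SELECT  object_name AS event_name,
        COUNT(*)    AS event_count
FROM    sys.fn_xe_file_target_read_file(
            N'd:\temp\TraceUserErrors_Dependency*.xel', NULL, NULL, NULL)
GROUP BY object_name;
```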

Considerations around resolving the dependency errors:

As a first step to help avoid this error, check that there are no performance problems such as missing or non-ideal indexes, or an IO bottleneck on the transaction log. To rule out a log-related bottleneck, consider enabling delayed durability on the database, as a test only, since that removes the latency of writing to the log before commit processing completes.

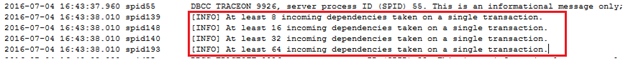
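The same test can be scripted instead of using the database properties dialog. This is a minimal sketch against the IMOLTP demo database. Delayed durability can lose recently committed transactions if the server fails, so the second statement puts the setting back to DISABLED (the default) once the test is done.

```sql
-- Sketch: force delayed durability for the duration of a test run only.
ALTER DATABASE IMOLTP SET DELAYED_DURABILITY = FORCED;

-- ... rerun the ostress readers and the write transaction here ...

-- Restore fully durable commits afterwards.
ALTER DATABASE IMOLTP SET DELAYED_DURABILITY = DISABLED;
```

If error 41839 disappears or becomes much rarer with the setting forced, log write latency is a likely contributor to how long the write transaction holds its dependencies.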

If neither of these is a factor, you can use the documented trace flag 9926. It can be enabled as a startup option or on the fly with DBCC TRACEON, as shown below.

If you re-run the same steps with trace flag 9926 enabled, you will no longer get that error.

DBCC TRACEON(9926,-1)

GO
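To confirm the flag is active globally, or to turn it off again after testing, the standard DBCC trace commands can be used. This is a short sketch. A flag set with DBCC TRACEON does not survive a service restart, so for a permanent setting add -T9926 to the SQL Server startup parameters instead.

```sql
-- Sketch: check whether trace flag 9926 is enabled globally.
DBCC TRACESTATUS(9926, -1);
GO

-- Turn the flag off again when the test is finished.
DBCC TRACEOFF(9926, -1);
GO
```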

If you look at the error log, you will see a few messages that are written only when that trace flag is enabled. They indicate that we have crossed the dependency limit, and a message is logged at each doubling, when 8, 16, 32, 64… dependencies are exceeded. The message below is specifically for "incoming" dependencies, but it can be for "outgoing" dependencies as well.

For more details about transaction handling with memory optimized tables see the MSDN article: https://msdn.microsoft.com/library/mt668435.aspx

Denzil Ribeiro ( @denzilribeiro )