(Cross-Post) Monitoring, Diagnosing and Troubleshooting Azure Storage

Diagnosing and troubleshooting issues in modern online applications is more complex than in traditional client-server applications because they include:

- Complex topologies with components running on PaaS or IaaS infrastructure, on-premises, on mobile devices, or some combination of these

- Network traffic that traverses public and private networks, including devices with unpredictable connectivity

- Multiple storage technologies, such as Microsoft Azure Storage Tables, Blobs, Queues, or Files, in addition to other data stores such as relational databases

The Azure Storage service includes sophisticated capabilities to help you manage these challenges. You can monitor the Storage services your application uses for unexpected changes in behavior (such as slower-than-usual response times), and you can use extensive logging capabilities, both in the storage service and in client applications developed with the storage client libraries. The information you obtain from monitoring and logging will help you diagnose and troubleshoot an issue and determine the appropriate steps to remediate it. The analytics capability has been designed not to affect the performance of your operations, and it lets you define retention policies to manage the amount of storage consumed.

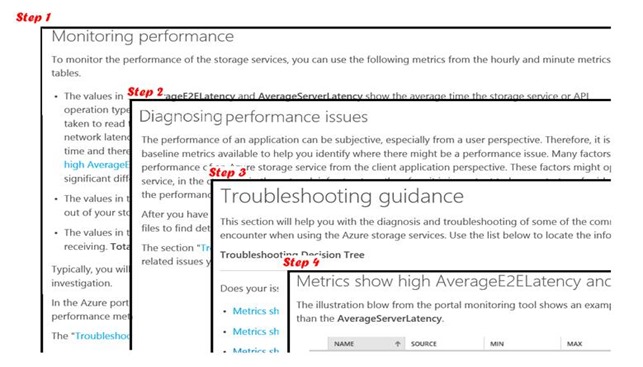

To help you learn how to manage the health of your online services we have developed the Storage Monitoring, Diagnosing and Troubleshooting Guide. This includes prescriptive guidance on monitoring and diagnosing problems related to health, availability, performance and capacity, as well as actionable troubleshooting guidance for the top issues that we and our support teams encounter when working with customers. For anyone building Storage-based applications we believe this guide is essential reading! The illustration below shows extracts from each of the sections focused on how to manage the performance of your application.

Example Scenario – Investigating the performance degradation of an application

To illustrate how the guide can help you, we will walk through a scenario that we see frequently: a customer reports that the performance of their application is slower than normal. If the customer doesn’t have a monitoring strategy in place, it can be difficult and time-consuming to determine the root cause of this problem.

The monitoring section of the guide walks you through some key metrics that you should capture and monitor on an ongoing basis, looking for unexpected changes in these values as an indicator of an issue that needs investigation. Two metrics relevant to this scenario are AverageE2ELatency and AverageServerLatency. AverageE2ELatency measures end-to-end latency: in addition to the time taken to process the request, it includes the time taken by our service to read the request from the client and send the response back to the client (and therefore includes network latency once the request reaches the storage service). AverageServerLatency measures only the processing time within our service, and therefore excludes any network latency related to communicating with the client, as well as latencies associated with the client application populating and consuming the send and response buffers.
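As a rough illustration of how these two metrics can be compared, the following Python sketch separates the portion of AverageE2ELatency that is not spent processing inside the storage service. The class and function names, the sample values, and the ratio threshold of 2.0 are all assumptions made for illustration; they do not come from the guide or from any Azure API.

```python
from dataclasses import dataclass


@dataclass
class LatencySample:
    """One metrics row, in milliseconds (hypothetical sample data)."""
    average_e2e_latency: float
    average_server_latency: float


def non_server_latency(sample: LatencySample) -> float:
    """Portion of end-to-end latency not spent processing inside the service."""
    return sample.average_e2e_latency - sample.average_server_latency


def looks_client_or_network_bound(sample: LatencySample,
                                  ratio_threshold: float = 2.0) -> bool:
    """Flag samples where end-to-end latency is much higher than server latency.

    ratio_threshold is an arbitrary illustrative cutoff, not a documented value.
    """
    if sample.average_server_latency <= 0:
        return sample.average_e2e_latency > 0
    ratio = sample.average_e2e_latency / sample.average_server_latency
    return ratio >= ratio_threshold


sample = LatencySample(average_e2e_latency=120.0, average_server_latency=15.0)
print(non_server_latency(sample))
print(looks_client_or_network_bound(sample))
```

In practice a fixed ratio is a poor substitute for a baseline: the same check is more useful when the threshold is derived from values observed while the application was known to be healthy.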

Looking at the metrics data in the portal (see the illustration above), the AverageE2ELatency is significantly higher than the AverageServerLatency. The first thing to do is compare these values with the baseline metrics that you should establish through ongoing monitoring of your service, and initially during performance testing of your application and a limited release to production. For now we will assume this discrepancy is indeed an issue outside of the Azure Storage service, at which point we can use the troubleshooting decision tree to reach the section "Metrics show high AverageE2ELatency and low AverageServerLatency". This section then discusses different possibilities to explore:

- Client performance issues - Possible reasons for the client responding slowly include having a limited number of available connections or threads, or being low on resources such as CPU, memory or network bandwidth. You may be able to resolve the issue by modifying the client code to be more efficient (for example by using asynchronous calls to the storage service), or by using a larger Virtual Machine (with more cores and more memory)… See here for more information.

- Network latency issues - Typically, high end-to-end latency caused by the network is due to transient conditions. You can investigate both transient and persistent network issues such as dropped packets by using tools such as Wireshark or Microsoft Message Analyzer. For more information about using Wireshark to troubleshoot network issues, see "Appendix 2: Using Wireshark to capture network traffic." For more information about using Microsoft Message Analyzer to troubleshoot network issues, see "Appendix 3: Using Microsoft Message Analyzer to capture network traffic."… See here for more information.

Once you have read the client performance section and verified that the discrepancy between AverageE2ELatency and AverageServerLatency occurs only for queue operations and not for blob operations, the guide directs you to an article about the Nagle algorithm and how it is not friendly towards small requests. After appropriate testing, this was identified as the problem, and the code was updated to turn off Nagle’s algorithm. Monitoring after the change showed a marked improvement in AverageE2ELatency.
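The post does not show the code change itself. As a generic illustration of what turning off Nagle's algorithm means at the socket level, the following Python sketch sets the standard TCP_NODELAY option on a TCP socket. This is not the storage client library's own setting, and the helper name is hypothetical; in .NET applications the equivalent switch is typically ServicePointManager.UseNagleAlgorithm, set before any connections are opened.

```python
import socket


def make_low_latency_socket() -> socket.socket:
    """Create a TCP socket with Nagle's algorithm disabled via TCP_NODELAY.

    With TCP_NODELAY set, small writes are sent immediately instead of being
    held back and coalesced into larger segments.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


sock = make_low_latency_socket()
nodelay = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY)
print("Nagle disabled:", nodelay != 0)
sock.close()
```

Disabling Nagle's algorithm trades some bandwidth efficiency for lower latency on small messages, which is why it tends to matter for small requests such as queue operations and much less for large transfers.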

Other issues that can cause intermittent fluctuations in response times include poor network conditions and server-side failures. In either of these situations the client application may retry an operation, increasing the perceived latency for that particular operation. In these cases you would likely see both AverageE2ELatency and AverageServerLatency remain low. The troubleshooting decision tree would then take you to the section "Metrics show low AverageE2ELatency and low AverageServerLatency but the client is experiencing high latency", which walks you through appropriate troubleshooting techniques. The section on "End-to-end tracing" also provides additional information on how client and server request correlation can assist in troubleshooting these types of issues.
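To see why retries can leave the metrics looking healthy while the client experiences high latency, consider the following Python sketch. It assumes that each attempt is measured individually and that the waits between attempts are invisible to the service. The function name, the per-attempt latencies, and the back-off delays are hypothetical values chosen for illustration.

```python
def perceived_latency_ms(attempt_latencies_ms, backoff_ms):
    """Total time the caller waits: every attempt plus the waits between them.

    Only the back-off delays that fall between attempts are counted, so one
    attempt incurs no back-off at all.
    """
    waits = backoff_ms[: max(len(attempt_latencies_ms) - 1, 0)]
    return sum(attempt_latencies_ms) + sum(waits)


# Hypothetical operation: two failed attempts followed by a success.
attempts = [20.0, 25.0, 18.0]   # latency of each individual attempt, in ms
backoffs = [1000.0, 2000.0]     # client-side waits between attempts, in ms

print(perceived_latency_ms(attempts, backoffs))  # what the caller experiences
print(max(attempts))                             # slowest single attempt
```

Each attempt here completes in 25 ms or less, yet the caller waits just over three seconds in total. Correlating client-side logs with server-side logs is what exposes the retries behind that gap.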

Hopefully this scenario demonstrates the importance of proactively monitoring your service and of having a good understanding of the different tools available to assist you when problems do occur.

Summary and Call to Action

To help you learn how to manage the health of your online services we have developed the Storage Monitoring, Diagnosing and Troubleshooting Guide. This includes prescriptive guidance on monitoring and diagnosing problems related to health, capacity, availability and performance, as well as actionable suggestions for troubleshooting the top issues that we and our support teams encounter when working with customers.

We have also looked at related guidance and created / updated content relating to:

- Enabling metrics and logs using the Azure Portal, PowerShell, or APIs. See: Enabling Storage Metrics and Viewing Metrics Data and Enabling Storage Logging and Accessing Log Data

- Enabling logging within the .NET and Java client libraries, which is especially useful when diagnosing application-specific issues such as client connectivity. See: Client-side Logging with the .NET Storage Client Library and Client-side Logging with the Microsoft Azure Storage SDK for Java

- Instructions for using networking tools to diagnose network connectivity issues. See: Analyzing Network Traffic using Network Analysis Tools, and

- Instructions for correlating data from storage logs, network logs and client library logs. See: End-to-End Tracing

In terms of next steps we recommend:

- Enable metrics and logging on your production storage accounts, as they are not enabled by default

- Read the monitoring and diagnosing sections of the guide so you can update your management policies

- Bookmark the guide to assist with troubleshooting when problems do occur

We hope this guidance makes managing your online services much simpler!

Jason Hogg