Scale single database resources in Azure SQL Database

Applies to:

Azure SQL Database

This article describes how to scale the compute and storage resources available for Azure SQL Database in the provisioned compute tier. Alternatively, the serverless compute tier provides compute autoscaling and bills per second for compute used.

After initially picking the number of vCores or DTUs, you can scale a single database up or down dynamically based on actual experience using:

Important

Under some circumstances, you might need to shrink a database to reclaim unused space. For more information, see Manage file space for databases in Azure SQL Database.

Note

Microsoft Entra ID was previously known as Azure Active Directory (Azure AD).

Impact

Changing the service tier or compute size of mainly involves the service performing the following steps:

Create a new compute instance for the database.

A new compute instance is created with the requested service tier and compute size. For some combinations of service tier and compute size changes, a replica of the database must be created in the new compute instance, which involves copying data and can strongly influence the overall latency. Regardless, the database remains online during this step, and connections continue to be directed to the database in the original compute instance.

Switch routing of connections to a new compute instance.

Existing connections to the database in the original compute instance are dropped. Any new connections are established to the database in the new compute instance. For some combinations of service tier and compute size changes, database files are detached and reattached during the switch. Regardless, the switch can result in a brief service interruption when the database is unavailable generally for less than 30 seconds and often for only a few seconds. If there are long-running transactions running when connections are dropped, the duration of this step can take longer in order to recover aborted transactions. Accelerated database recovery can reduce the impact from aborting long running transactions.

Important

No data is lost during any step in the workflow. Make sure that you have implemented some retry logic in the applications and components that are using Azure SQL Database while the service tier is changed.

Latency

The estimated latency to change the service tier, scale the compute size of a single database or elastic pool, move a database in/out of an elastic pool, or move a database between elastic pools is parameterized as follows:

| Database scaling latency | To Basic single database, Standard single database (S0-S1) |

To Standard single database (S2-S12), General Purpose single database, Basic elastic pooled database, Standard elastic pooled database, General Purpose pooled database |

To Premium single database or pooled database, Business Critical single database or pooled database |

To Hyperscale single database or pooled database |

|---|---|---|---|---|

| From Basic single database, Standard single database (S0-S1) |

- Constant time latency independent of space used. - Typically, less than 5 minutes. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

| From Basic pooled database, Standard single database (S2-S12), Standard pooled database, General Purpose single database or pooled database |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- For single databases, constant time latency independent of space used. - Typically, less than 5 minutes for single databases. - For elastic pools, proportional to the number of databases. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

| From Premium single database or pooled database, Business Critical single database or pooled database |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

- Latency proportional to database space used due to data copying. - Typically, less than 1 minute per GB of space used. |

| From Hyperscale single database or pooled database | N/A | See Reverse migrate from Hyperscale for supported scenarios and limitations. | N/A | - Constant time latency independent of space used. - Typically, less than 2 minutes. |

Note

- Additionally, for Standard (S2-S12) and General Purpose databases, latency for moving a database in/out of an elastic pool or between elastic pools will be proportional to database size if the database is using Premium File Share (PFS) storage.

- In the case of moving a database to/from an elastic pool, only the space used by the database impacts the latency, not the space used by the elastic pool.

- To determine if a database is using PFS storage, execute the following query in the context of the database. If the value in the AccountType column is

PremiumFileStorageorPremiumFileStorage-ZRS, the database is using PFS storage.

SELECT s.file_id,

s.type_desc,

s.name,

FILEPROPERTYEX(s.name, 'AccountType') AS AccountType

FROM sys.database_files AS s

WHERE s.type_desc IN ('ROWS', 'LOG');

Note

- The zone redundant property will remain the same by default when scaling a single database from the Business Critical to the General Purpose tier.

- Latency for the scaling operation when zone redundancy is changed for a General Purpose single database is proportional to database size.

Tip

To monitor in-progress operations, see: Manage operations using the SQL REST API, Manage operations using CLI, Monitor operations using T-SQL and these two PowerShell commands: Get-AzSqlDatabaseActivity and Stop-AzSqlDatabaseActivity.

Monitor or cancel scaling changes

A service tier change or compute rescaling operation can be monitored and canceled.

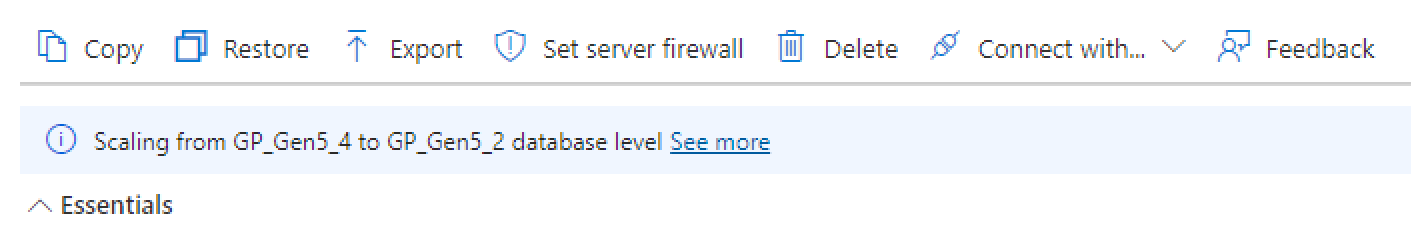

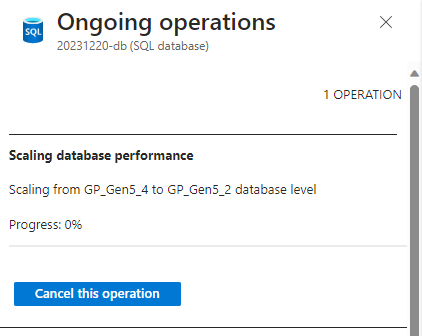

In the SQL database Overview page, look for the banner indicating a scaling operation is ongoing, and select the See more link for the deployment in progress.

On the resulting Ongoing operations page, select Cancel this operation.

Permissions

To scale databases via Transact-SQL: ALTER DATABASE is used. To scale a database a login must be either the server admin login (created when the Azure SQL Database logical server was provisioned), the Microsoft Entra admin of the server, a member of the dbmanager database role in master, a member of the db_owner database role in the current database, or dbo of the database. For more information, see ALTER DATABASE.

To scale databases via the Azure portal, PowerShell, Azure CLI, or REST API: Azure RBAC permissions are needed, specifically the Contributor, SQL DB Contributor role, or SQL Server Contributor Azure RBAC roles. For more information, see Azure RBAC built-in roles.

Additional considerations

- If you're upgrading to a higher service tier or compute size, the database max size doesn't increase unless you explicitly specify a larger size (maxsize).

- To downgrade a database, the database used space must be smaller than the maximum allowed size of the target service tier and compute size.

- When downgrading from Premium to the Standard tier, an extra storage cost applies if both (1) the max size of the database is supported in the target compute size, and (2) the max size exceeds the included storage amount of the target compute size. For example, if a P1 database with a max size of 500 GB is downsized to S3, then an extra storage cost applies since S3 supports a max size of 1 TB and its included storage amount is only 250 GB. So, the extra storage amount is 500 GB – 250 GB = 250 GB. For pricing of extra storage, see Azure SQL Database pricing. If the actual amount of space used is less than the included storage amount, then this extra cost can be avoided by reducing the database max size to the included amount.

- When upgrading a database with geo-replication enabled, upgrade its secondary databases to the desired service tier and compute size before upgrading the primary database (general guidance for best performance). When upgrading to a different edition, it's a requirement that the secondary database is upgraded first.

- When downgrading a database with geo-replication enabled, downgrade its primary databases to the desired service tier and compute size before downgrading the secondary database (general guidance for best performance). When downgrading to a different edition, it's a requirement that the primary database is downgraded first.

- The restore service offerings are different for the various service tiers. If you're downgrading to the Basic tier, there's a lower backup retention period. See Automated backups in Azure SQL Database.

- The new properties for the database aren't applied until the changes are complete.

- When data copying is required to scale a database (see Latency) when changing the service tier, high resource utilization concurrent to the scaling operation can cause longer scaling times. With Accelerated database recovery, rollback of long running transactions isn't a significant source of delay, but high concurrent resource usage might leave less compute, storage, and network bandwidth resources for scaling, particularly for smaller compute sizes.

Billing

You're billed for each hour a database exists using the highest service tier + compute size that applied during that hour, regardless of usage or whether the database was active for less than an hour. For example, if you create a single database and delete it five minutes later your bill reflects a charge for one database hour.

Change storage size

vCore-based purchasing model

Storage can be provisioned up to the data storage max size limit using 1-GB increments. The minimum configurable data storage is 1 GB. For data storage max size limits in each service objective, see resource limit documentation pages for Resource limits for single databases using the vCore purchasing model and Resource limits for single databases using the DTU purchasing model.

Data storage for a single database can be provisioned by increasing or decreasing its max size using the Azure portal, Transact-SQL, PowerShell, Azure CLI, or REST API. If the max size value is specified in bytes, it must be a multiple of 1 GB (1,073,741,824 bytes).

The amount of data that can be stored in the data files of a database is limited by the configured data storage max size. In addition to that storage, Azure SQL Database automatically adds 30% more storage to be used for the transaction log. The price of storage for a single database or an elastic pool is the sum of data storage and transaction log storage amounts multiplied by the storage unit price of the service tier. For example, if data storage is set to 10 GB, the additional transaction log storage is 10 GB * 30% = 3 GB, and the total amount of billable storage is 10 GB + 3 GB = 13 GB.

Note

The maximum size of the transaction log file is managed automatically, and in some cases can be greater than 30% of the data storage maximum size. This does not increase the price of storage for the database.

Azure SQL Database automatically allocates 32 GB per vCore for the

tempdbdatabase.tempdbis located on the local SSD storage in all service tiers. The cost oftempdbis included in the price of a single database or an elastic pool.For details on storage price, see Azure SQL Database pricing.

Important

Under some circumstances, you might need to shrink a database to reclaim unused space. For more information, see Manage file space for databases in Azure SQL Database.

DTU-based purchasing model

- The DTU price for a single database includes a certain amount of storage at no additional cost. Extra storage beyond the included amount can be provisioned for an additional cost up to the max size limit in increments of 250 GB up to 1 TB, and then in increments of 256 GB beyond 1 TB. For included storage amounts and max size limits, see Single database: Storage sizes and compute sizes.

- Extra storage for a single database can be provisioned by increasing its max size using the Azure portal, Transact-SQL, PowerShell, the Azure CLI, or the REST API.

- The price of extra storage for a single database is the extra storage amount multiplied by the extra storage unit price of the service tier. For details on the price of extra storage, see Azure SQL Database pricing.

Important

Under some circumstances, you might need to shrink a database to reclaim unused space. For more information, see Manage file space for databases in Azure SQL Database.

Geo-replicated database

To change the database size of a replicated secondary database, change the size of the primary database. This change will then be replicated and implemented on the secondary database as well.

P11 and P15 constraints when max size greater than 1 TB

More than 1 TB of storage in the Premium tier is currently available in all regions except: China East, China North, Germany Central, and Germany Northeast. In these regions, the storage max in the Premium tier is limited to 1 TB. The following considerations and limitations apply to P11 and P15 databases with a maximum size greater than 1 TB:

- If the max size for a P11 or P15 database was ever set to a value greater than 1 TB, then can it only be restored or copied to a P11 or P15 database. Later, the database can be rescaled to a different compute size provided the amount of space allocated at the time of the rescaling operation doesn't exceed max size limits of the new compute size.

- For active geo-replication scenarios:

- Setting up a geo-replication relationship: If the primary database is P11 or P15, the secondary(ies) must also be P11 or P15. Lower compute sizes are rejected as secondaries since they aren't capable of supporting more than 1 TB.

- Upgrading the primary database in a geo-replication relationship: Changing the maximum size to more than 1 TB on a primary database triggers the same change on the secondary database. Both upgrades must be successful for the change on the primary to take effect. Region limitations for the more than 1-TB option apply. If the secondary is in a region that doesn't support more than 1 TB, the primary isn't upgraded.

- Using the Import/Export service for loading P11/P15 databases with more than 1 TB isn't supported. Use SqlPackage to import and export data.