Copy data to or from Azure Cosmos DB for MongoDB using Azure Data Factory or Synapse Analytics

APPLIES TO: Azure Data Factory, Azure Synapse Analytics

This article outlines how to use Copy Activity in Azure Data Factory and Synapse Analytics pipelines to copy data from and to Azure Cosmos DB for MongoDB. The article builds on Copy Activity, which presents a general overview of Copy Activity.

Note

This connector supports copying data to and from Azure Cosmos DB for MongoDB only. For Azure Cosmos DB for NoSQL, refer to the Azure Cosmos DB for NoSQL connector. Other API types are not currently supported.

Supported capabilities

This Azure Cosmos DB for MongoDB connector is supported for the following capabilities:

| Supported capabilities | IR | Managed private endpoint |
|---|---|---|
| Copy activity (source/sink) | ① ② | ✓ |

① Azure integration runtime ② Self-hosted integration runtime

You can copy data from Azure Cosmos DB for MongoDB to any supported sink data store, or copy data from any supported source data store to Azure Cosmos DB for MongoDB. For a list of data stores that Copy Activity supports as sources and sinks, see Supported data stores and formats.

You can use the Azure Cosmos DB for MongoDB connector to:

- Copy data from and to Azure Cosmos DB for MongoDB.

- Write to Azure Cosmos DB as insert or upsert.

- Import and export JSON documents as-is, or copy data from or to a tabular dataset, such as a SQL database or a CSV file. To copy documents as-is to or from JSON files or to or from another Azure Cosmos DB collection, see Import and export JSON documents.

Get started

To perform the Copy activity with a pipeline, you can use one of the following tools or SDKs:

- The Copy Data tool

- The Azure portal

- The .NET SDK

- The Python SDK

- Azure PowerShell

- The REST API

- The Azure Resource Manager template

Create a linked service to Azure Cosmos DB for MongoDB using UI

Use the following steps to create a linked service to Azure Cosmos DB for MongoDB in the Azure portal UI.

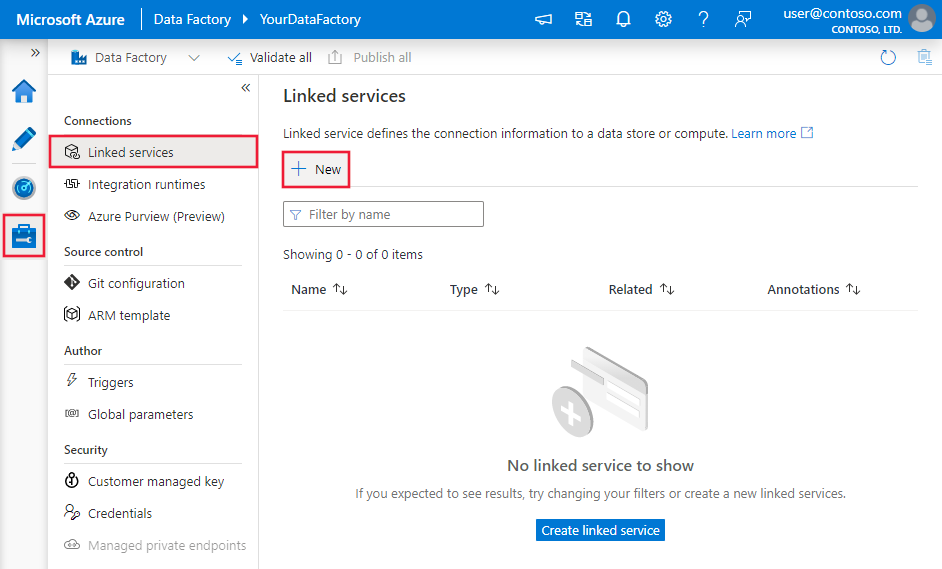

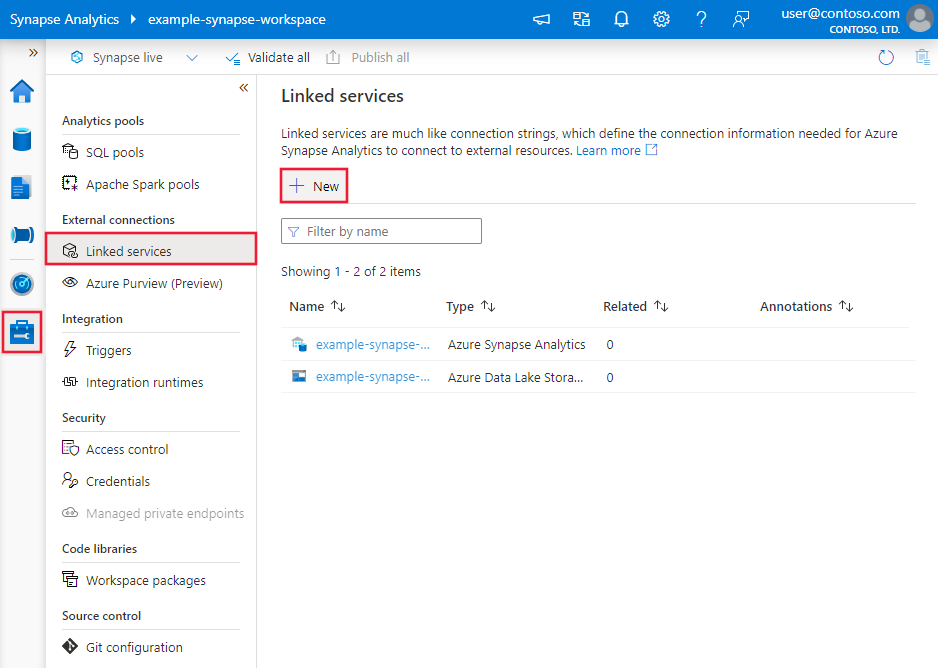

Browse to the Manage tab in your Azure Data Factory or Synapse workspace and select Linked Services, then click New:

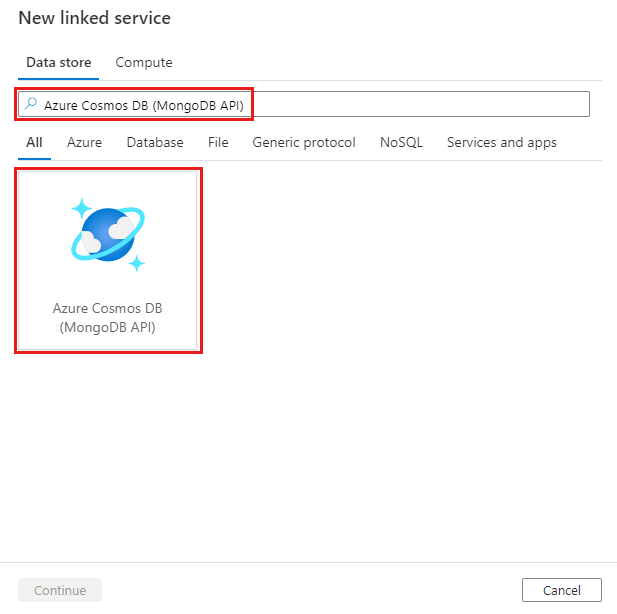

Search for Azure Cosmos DB for MongoDB and select that connector.

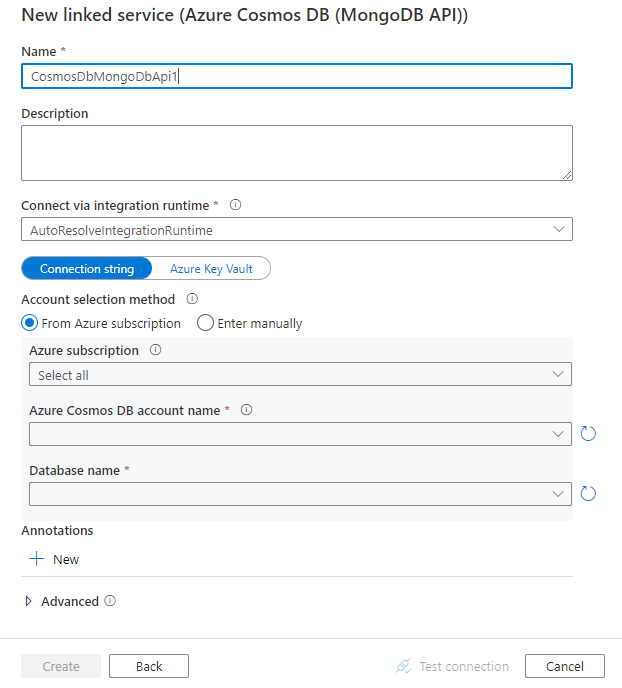

Configure the service details, test the connection, and create the new linked service.

Connector configuration details

The following sections provide details about properties you can use to define Data Factory entities that are specific to Azure Cosmos DB for MongoDB.

Linked service properties

The following properties are supported for the Azure Cosmos DB for MongoDB linked service:

| Property | Description | Required |
|---|---|---|
| type | The type property must be set to CosmosDbMongoDbApi. | Yes |
| connectionString | Specify the connection string for your Azure Cosmos DB for MongoDB. In the Azure portal, you can find it on your Azure Cosmos DB blade as the primary or secondary connection string. For the 3.2 server version, the string pattern is `mongodb://<cosmosdb-name>:<password>@<cosmosdb-name>.documents.azure.com:10255/?ssl=true&replicaSet=globaldb`. For 3.6+ server versions, the string pattern is `mongodb://<cosmosdb-name>:<password>@<cosmosdb-name>.mongo.cosmos.azure.com:10255/?ssl=true&replicaSet=globaldb&retrywrites=false&maxIdleTimeMS=120000&appName=@<cosmosdb-name>@`. You can also store the password in Azure Key Vault and pull the password configuration out of the connection string. For more details, see Store credentials in Azure Key Vault. | Yes |
| database | Name of the database that you want to access. | Yes |
| isServerVersionAbove32 | Specify whether the server version is above 3.2. Allowed values are true and false (default). This value determines which driver the service uses. | Yes |
| connectVia | The Integration Runtime to use to connect to the data store. You can use the Azure Integration Runtime or a self-hosted integration runtime (if your data store is located in a private network). If this property isn't specified, the default Azure Integration Runtime is used. | No |

Example

```json
{
    "name": "CosmosDbMongoDBAPILinkedService",
    "properties": {
        "type": "CosmosDbMongoDbApi",
        "typeProperties": {
            "connectionString": "mongodb://<cosmosdb-name>:<password>@<cosmosdb-name>.documents.azure.com:10255/?ssl=true&replicaSet=globaldb",
            "database": "myDatabase",
            "isServerVersionAbove32": "false"
        },
        "connectVia": {
            "referenceName": "<name of Integration Runtime>",
            "type": "IntegrationRuntimeReference"
        }
    }
}
```
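The two connection-string patterns in the table differ only in their host name and query options. The Python sketch below assembles either pattern from an account name and key. The function name `build_connection_string` is hypothetical. Percent-encoding the key with `quote_plus` is an assumption based on the MongoDB URI rule that reserved characters in credentials must be escaped. In practice, copying the string from the Azure portal is simpler.

```python
from urllib.parse import quote_plus


def build_connection_string(account: str, password: str, above_32: bool) -> str:
    """Hypothetical helper: build an Azure Cosmos DB for MongoDB connection string.

    Host names and query options mirror the two patterns in the linked service
    table. Percent-encoding the credentials is an assumption based on MongoDB
    URI rules for reserved characters such as '/', '+', '=' and '@'.
    """
    user = quote_plus(account)
    secret = quote_plus(password)
    if above_32:
        # 3.6+ server versions: *.mongo.cosmos.azure.com host with extra options.
        return (
            f"mongodb://{user}:{secret}@{account}.mongo.cosmos.azure.com:10255/"
            "?ssl=true&replicaSet=globaldb&retrywrites=false"
            f"&maxIdleTimeMS=120000&appName=@{account}@"
        )
    # 3.2 server version: *.documents.azure.com host.
    return (
        f"mongodb://{user}:{secret}@{account}.documents.azure.com:10255/"
        "?ssl=true&replicaSet=globaldb"
    )


# The connection string must agree with the isServerVersionAbove32 flag.
linked_service_type_properties = {
    "connectionString": build_connection_string("myaccount", "key==", above_32=True),
    "database": "myDatabase",
    "isServerVersionAbove32": "true",
}
```

Keeping the host choice and the `isServerVersionAbove32` value in one place makes it harder to pair a 3.6+ host with the 3.2 driver.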

Dataset properties

For a full list of sections and properties that are available for defining datasets, see Datasets and linked services. The following properties are supported for Azure Cosmos DB for MongoDB dataset:

| Property | Description | Required |
|---|---|---|
| type | The type property of the dataset must be set to CosmosDbMongoDbApiCollection. | Yes |
| collectionName | The name of the Azure Cosmos DB collection. | Yes |

Example

```json
{
    "name": "CosmosDbMongoDBAPIDataset",
    "properties": {
        "type": "CosmosDbMongoDbApiCollection",
        "typeProperties": {
            "collectionName": "<collection name>"
        },
        "schema": [],
        "linkedServiceName": {
            "referenceName": "<Azure Cosmos DB for MongoDB linked service name>",
            "type": "LinkedServiceReference"
        }
    }
}
```

Copy Activity properties

This section provides a list of properties that the Azure Cosmos DB for MongoDB source and sink support.

For a full list of sections and properties that are available for defining activities, see Pipelines.

Azure Cosmos DB for MongoDB as source

The following properties are supported in the Copy Activity source section:

| Property | Description | Required |
|---|---|---|
| type | The type property of the copy activity source must be set to CosmosDbMongoDbApiSource. | Yes |
| filter | Specifies the selection filter using query operators. To return all documents in a collection, omit this parameter or pass an empty document ({}). | No |
| cursorMethods.project | Specifies the fields to return in the documents for projection. To return all fields in the matching documents, omit this parameter. | No |
| cursorMethods.sort | Specifies the order in which the query returns matching documents. Refer to cursor.sort(). | No |
| cursorMethods.limit | Specifies the maximum number of documents the server returns. Refer to cursor.limit(). | No |
| cursorMethods.skip | Specifies the number of documents to skip, which determines where MongoDB begins to return results. Refer to cursor.skip(). | No |
| batchSize | Specifies the number of documents to return in each batch of the response from the MongoDB instance. In most cases, modifying the batch size doesn't affect the user or the application. Azure Cosmos DB limits each batch to 40 MB, which is the combined size of the batchSize documents in the batch, so decrease this value if your documents are large. | No (the default is 100) |
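The batchSize guidance above amounts to a simple division: the batch size multiplied by the document size must stay within 40 MB. The Python sketch below picks a batchSize from an estimated average document size. It assumes that 40 MB means 40 × 1024 × 1024 bytes and caps the result at the default of 100. The helper name is hypothetical, and real documents vary in size, so treat the result as a starting point.

```python
# Assumption: the 40 MB batch limit is interpreted as 40 * 1024 * 1024 bytes.
BATCH_LIMIT_BYTES = 40 * 1024 * 1024
DEFAULT_BATCH_SIZE = 100


def suggest_batch_size(avg_document_bytes: int,
                       limit_bytes: int = BATCH_LIMIT_BYTES,
                       default: int = DEFAULT_BATCH_SIZE) -> int:
    """Hypothetical helper: largest batchSize, capped at the default, whose
    estimated total size (batchSize * average document size) fits the limit.

    Never returns less than 1, even if a single document exceeds the limit.
    """
    if avg_document_bytes <= 0:
        raise ValueError("avg_document_bytes must be positive")
    fits = limit_bytes // avg_document_bytes
    return max(1, min(default, fits))


print(suggest_batch_size(1024 * 1024))  # documents of about 1 MiB each
```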

Tip

The service supports consuming BSON documents in Strict mode. Make sure your filter query is written in Strict mode instead of Shell mode. For more information, see the MongoDB manual.
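The filter in the example that follows is written with shell helpers such as ISODate() and ObjectId(). In MongoDB Extended JSON (v1) strict mode, a date is written as `{"$date": "<ISO-8601 date with time zone>"}` and an ObjectId as `{"$oid": "<24 hex characters>"}`. The Python sketch below builds the same conditions in strict mode and serializes them into the string that the `filter` property takes. The helper names are hypothetical, and this sketch has not been verified against the service.

```python
import json
from datetime import datetime, timezone


def strict_date(value: datetime) -> dict:
    """Strict-mode date: {"$date": "YYYY-MM-DDTHH:MM:SS.mmmZ"} in UTC."""
    utc = value.astimezone(timezone.utc)
    millis = utc.microsecond // 1000
    return {"$date": utc.strftime("%Y-%m-%dT%H:%M:%S") + f".{millis:03d}Z"}


def strict_object_id(hex_id: str) -> dict:
    """Strict-mode ObjectId: {"$oid": "<24 hex characters>"}."""
    return {"$oid": hex_id}


# Same conditions as the shell-mode filter in the source example.
filter_doc = {
    "datetimeData": {
        "$gte": strict_date(datetime(2018, 12, 11, tzinfo=timezone.utc)),
        "$lt": strict_date(datetime(2018, 12, 12, tzinfo=timezone.utc)),
    },
    "_id": strict_object_id("5acd7c3d0000000000000000"),
}

# The copy source takes the filter as a string.
source = {"type": "CosmosDbMongoDbApiSource", "filter": json.dumps(filter_doc)}
print(source["filter"])
```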

Example

```json
"activities":[
    {
        "name": "CopyFromCosmosDBMongoDBAPI",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": "<Azure Cosmos DB for MongoDB input dataset name>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<output dataset name>",
                "type": "DatasetReference"
            }
        ],
        "typeProperties": {
            "source": {
                "type": "CosmosDbMongoDbApiSource",
                "filter": "{datetimeData: {$gte: ISODate(\"2018-12-11T00:00:00.000Z\"),$lt: ISODate(\"2018-12-12T00:00:00.000Z\")}, _id: ObjectId(\"5acd7c3d0000000000000000\") }",
                "cursorMethods": {
                    "project": "{ _id : 1, name : 1, age: 1, datetimeData: 1 }",
                    "sort": "{ age : 1 }",
                    "skip": 3,
                    "limit": 3
                }
            },
            "sink": {
                "type": "<sink type>"
            }
        }
    }
]
```
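MongoDB applies sort before skip, and skip before limit, whatever order the cursor methods are listed in. The projection then shapes the fields of each returned document. The Python model below shows which documents the example's cursorMethods settings select. It works in memory on made-up sample documents and is not how the service or MongoDB executes the query. The example passes these settings as strings, but the model takes plain dicts. Only ascending or descending sorts and inclusion projections are modeled.

```python
def apply_cursor_methods(docs, project=None, sort=None, skip=0, limit=0):
    """In-memory model of sort -> skip -> limit -> projection.

    sort: {field: 1 or -1}; earlier keys take priority.
    limit: 0 means no limit, as with cursor.limit(0).
    project: inclusion projection {field: 1}; _id is kept unless listed as 0.
    """
    result = list(docs)
    if sort:
        # Python's sort is stable, so sorting by the lowest-priority key first
        # and the highest-priority key last yields a multi-key ordering.
        for field, direction in reversed(list(sort.items())):
            result.sort(key=lambda d: d[field], reverse=direction == -1)
    result = result[skip:]
    if limit:
        result = result[:limit]
    if project:
        keep = {field for field, flag in project.items() if flag}
        if "_id" not in project:
            keep.add("_id")  # MongoDB includes _id by default
        result = [{k: v for k, v in d.items() if k in keep} for d in result]
    return result


sample = [{"_id": i, "name": f"user{i}", "age": i, "datetimeData": None, "extra": True}
          for i in range(10, 0, -1)]
page = apply_cursor_methods(
    sample,
    project={"_id": 1, "name": 1, "age": 1, "datetimeData": 1},
    sort={"age": 1},
    skip=3,
    limit=3,
)
print([d["age"] for d in page])
```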

Azure Cosmos DB for MongoDB as sink

The following properties are supported in the Copy Activity sink section:

| Property | Description | Required |
|---|---|---|
| type | The type property of the Copy Activity sink must be set to CosmosDbMongoDbApiSink. | Yes |
| writeBehavior | Describes how to write data to Azure Cosmos DB. Allowed values: insert and upsert. With upsert, the service replaces the document if a document with the same _id already exists; otherwise, it inserts the document. Note: The service automatically generates an _id for a document if an _id isn't specified either in the original document or by column mapping. For upsert to work as expected, make sure each document has an ID. | No (the default is insert) |
| writeBatchSize | The writeBatchSize property controls the number of documents to write in each batch. Try increasing writeBatchSize to improve performance, and decrease it if your documents are large. | No (the default is 10,000) |
| writeBatchTimeout | The wait time for the batch insert operation to finish before it times out. The allowed value is a timespan. | No (the default is 00:30:00, which is 30 minutes) |
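The note on writeBehavior explains why upsert needs an _id. A document without one receives a freshly generated _id, so it can never match an existing document. The Python sketch below models both behaviors on an in-memory collection keyed by _id. It is not the service's implementation. The counter-based IDs stand in for generated ObjectIds, and raising an error on a duplicate _id under insert is an assumption, because this article doesn't describe how the service reports duplicates.

```python
from itertools import count

# Stand-in for service-generated IDs; the real ones are ObjectIds.
_generated_ids = count(1)


def write_documents(collection, documents, write_behavior="insert"):
    """In-memory model of writeBehavior against a dict keyed by _id."""
    for document in documents:
        document = dict(document)  # don't mutate the caller's data
        if "_id" not in document:
            # No _id in the document or the column mapping: one is generated,
            # so this write can never replace an existing document.
            document["_id"] = f"generated-{next(_generated_ids)}"
        key = document["_id"]
        if write_behavior == "upsert":
            collection[key] = document  # replace if present, otherwise insert
        elif write_behavior == "insert":
            if key in collection:
                raise KeyError(f"duplicate _id: {key!r}")
            collection[key] = document
        else:
            raise ValueError(f"unsupported writeBehavior: {write_behavior!r}")
    return collection


docs = write_documents({}, [{"_id": "a", "v": 1}], "upsert")
write_documents(docs, [{"_id": "a", "v": 2}], "upsert")  # replaces "a"
write_documents(docs, [{"v": 3}, {"v": 3}], "upsert")     # two new documents
print(len(docs))
```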

Tip

To import JSON documents as-is, see the Import and export JSON documents section. To copy from tabular-shaped data, see Schema mapping.

Example

```json
"activities":[
    {
        "name": "CopyToCosmosDBMongoDBAPI",
        "type": "Copy",
        "inputs": [
            {
                "referenceName": "<input dataset name>",
                "type": "DatasetReference"
            }
        ],
        "outputs": [
            {
                "referenceName": "<Azure Cosmos DB for MongoDB output dataset name>",
                "type": "DatasetReference"
            }
        ],
        "typeProperties": {
            "source": {
                "type": "<source type>"
            },
            "sink": {
                "type": "CosmosDbMongoDbApiSink",
                "writeBehavior": "upsert"
            }
        }
    }
]
```
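The sink example sets only writeBehavior, but the other two sink properties can be set in the same section. The Python sketch below assembles a sink section from a `timedelta` and formats writeBatchTimeout as a timespan. The article shows only the `00:30:00` default. The `[d.]hh:mm:ss` shape with a day prefix is an assumption based on the .NET TimeSpan convention. Both helper names are hypothetical.

```python
from datetime import timedelta


def format_timespan(value: timedelta) -> str:
    """Format as [d.]hh:mm:ss, the shape of the 00:30:00 default.

    Fractions of a second are dropped.
    """
    total = int(value.total_seconds())
    if total < 0:
        raise ValueError("timespan must not be negative")
    days, rest = divmod(total, 86400)
    hours, rest = divmod(rest, 3600)
    minutes, seconds = divmod(rest, 60)
    clock = f"{hours:02d}:{minutes:02d}:{seconds:02d}"
    return f"{days}.{clock}" if days else clock


def build_sink(write_behavior="upsert", write_batch_size=10_000,
               write_batch_timeout=timedelta(minutes=30)):
    """Hypothetical helper that assembles the Copy Activity sink section."""
    if write_behavior not in ("insert", "upsert"):
        raise ValueError("writeBehavior must be insert or upsert")
    return {
        "type": "CosmosDbMongoDbApiSink",
        "writeBehavior": write_behavior,
        "writeBatchSize": write_batch_size,
        "writeBatchTimeout": format_timespan(write_batch_timeout),
    }


print(build_sink(write_batch_size=5_000))
```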

Import and export JSON documents

You can use this Azure Cosmos DB connector to easily:

- Copy documents between two Azure Cosmos DB collections as-is.

- Import JSON documents from various sources to Azure Cosmos DB, including from MongoDB, Azure Blob storage, Azure Data Lake Store, and other file-based stores that the service supports.

- Export JSON documents from an Azure Cosmos DB collection to various file-based stores.

To achieve schema-agnostic copy:

- When you use the Copy Data tool, select the Export as-is to JSON files or Azure Cosmos DB collection option.

- When you use activity authoring, choose JSON format with the corresponding file store for source or sink.

Schema mapping

To copy data from Azure Cosmos DB for MongoDB to a tabular sink, or the reverse, see schema mapping.

When you write into Azure Cosmos DB, you might want to populate each document's object ID from your source data. For example, you might have an "id" column in a SQL database table and want to use its value as the MongoDB document ID for insert or upsert. In that case, set the schema mapping so that the column maps to _id.$oid, following the MongoDB strict-mode definition.

After the copy activity runs, the following BSON ObjectId is generated in the sink:

```
{
    "_id": ObjectId("592e07800000000000000000")
}
```
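A single row makes the _id.$oid target easier to see. The Python sketch below is not how the service performs the mapping. It reshapes one hypothetical SQL row into the strict-mode document the mapping describes. It checks that the value is a valid ObjectId, which is 12 bytes written as 24 hexadecimal characters. The helper name and the choice to drop the original `id` column are assumptions.

```python
import re

OBJECT_ID_PATTERN = re.compile(r"[0-9a-f]{24}")


def row_to_strict_document(row: dict, id_column: str = "id") -> dict:
    """Hypothetical helper: move a tabular ID column to _id.$oid."""
    object_id = str(row[id_column]).lower()
    if not OBJECT_ID_PATTERN.fullmatch(object_id):
        # A BSON ObjectId is 12 bytes, written as 24 hexadecimal characters.
        raise ValueError(f"{row[id_column]!r} is not a 24-character hex ObjectId")
    # Assumption: the source ID column is not also copied as a regular field.
    document = {k: v for k, v in row.items() if k != id_column}
    document["_id"] = {"$oid": object_id}
    return document


print(row_to_strict_document({"id": "592e07800000000000000000", "name": "Contoso"}))
```

In this strict-mode shape, the sink stores the value as the ObjectId shown above rather than as a plain string.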

Related content

For a list of data stores that Copy Activity supports as sources and sinks, see supported data stores.