Copy new and changed files by LastModifiedDate with Azure Data Factory

APPLIES TO: Azure Data Factory, Azure Synapse Analytics


This article describes a solution template that you can use to copy only new and changed files, selected by LastModifiedDate, from a file-based store to a destination store.

About this solution template

This template first selects only the new and changed files by their LastModifiedDate attribute, and then copies those files from the source store to the destination store.

The template contains one activity:

- Copy, which copies only new and changed files, selected by LastModifiedDate, from a file-based store to a destination store.

The template defines six parameters:

- FolderPath_Source is the folder path in the source store that files are read from. Replace the default value with your own folder path.

- Directory_Source is the subfolder path in the source store that files are read from. Replace the default value with your own subfolder path.

- FolderPath_Destination is the folder path in the destination store that files are copied to. Replace the default value with your own folder path.

- Directory_Destination is the subfolder path in the destination store that files are copied to. Replace the default value with your own subfolder path.

- LastModified_From selects files whose LastModifiedDate attribute is later than or equal to this datetime value. To select only new files that weren't copied in a previous run, set this value to the time the pipeline was last triggered. You can replace the default value '2019-02-01T00:00:00Z' with your own LastModifiedDate in the UTC time zone.

- LastModified_To selects files whose LastModifiedDate attribute is earlier than this datetime value. To select only new files that weren't copied in a previous run, set this value to the current time. You can replace the default value '2019-02-01T00:00:00Z' with your own LastModifiedDate in the UTC time zone.
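File-based connectors in Azure Data Factory expose `modifiedDatetimeStart` and `modifiedDatetimeEnd` settings on the copy source, which select files whose last modified time is greater than or equal to the start and less than the end. The following Python sketch builds, as a plain dictionary, the kind of copy source fragment that could pass LastModified_From and LastModified_To to those settings. It assumes a Binary source on Azure Blob Storage (`BinarySource`, `AzureBlobStorageReadSettings`); the template's actual JSON may differ, and `build_copy_source` is a hypothetical helper.

```python
import json


def build_copy_source() -> dict:
    """Return a copy activity source fragment (hypothetical helper).

    Assumes a Binary dataset on Azure Blob Storage; other file-based stores
    use a different storeSettings type but the same two datetime filters.
    """
    return {
        "type": "BinarySource",
        "storeSettings": {
            "type": "AzureBlobStorageReadSettings",
            # Selects files with LastModifiedDate >= LastModified_From.
            "modifiedDatetimeStart": "@pipeline().parameters.LastModified_From",
            # Selects files with LastModifiedDate < LastModified_To.
            "modifiedDatetimeEnd": "@pipeline().parameters.LastModified_To",
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_copy_source(), indent=2))
```

Because the start bound is inclusive and the end bound is exclusive, using the end of one run as the start of the next leaves no gap and copies no file twice.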

How to use this solution template

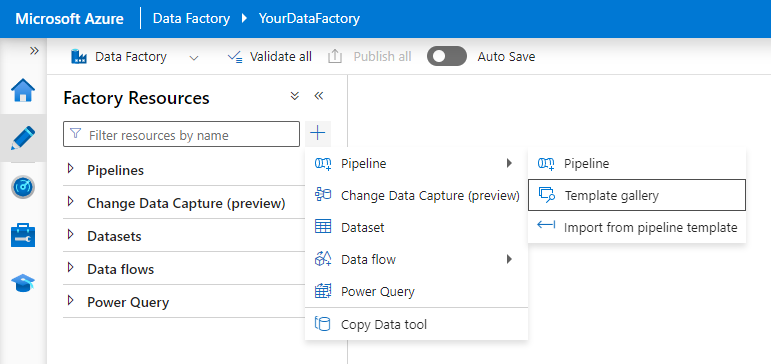

Navigate to the Template Gallery from the Author tab in Azure Data Factory, then choose the + button, Pipeline, and finally Template Gallery.

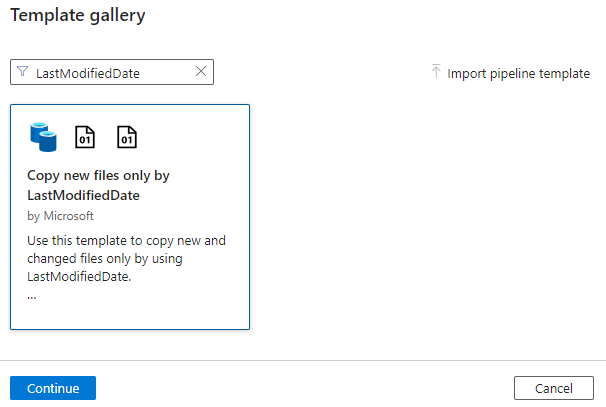

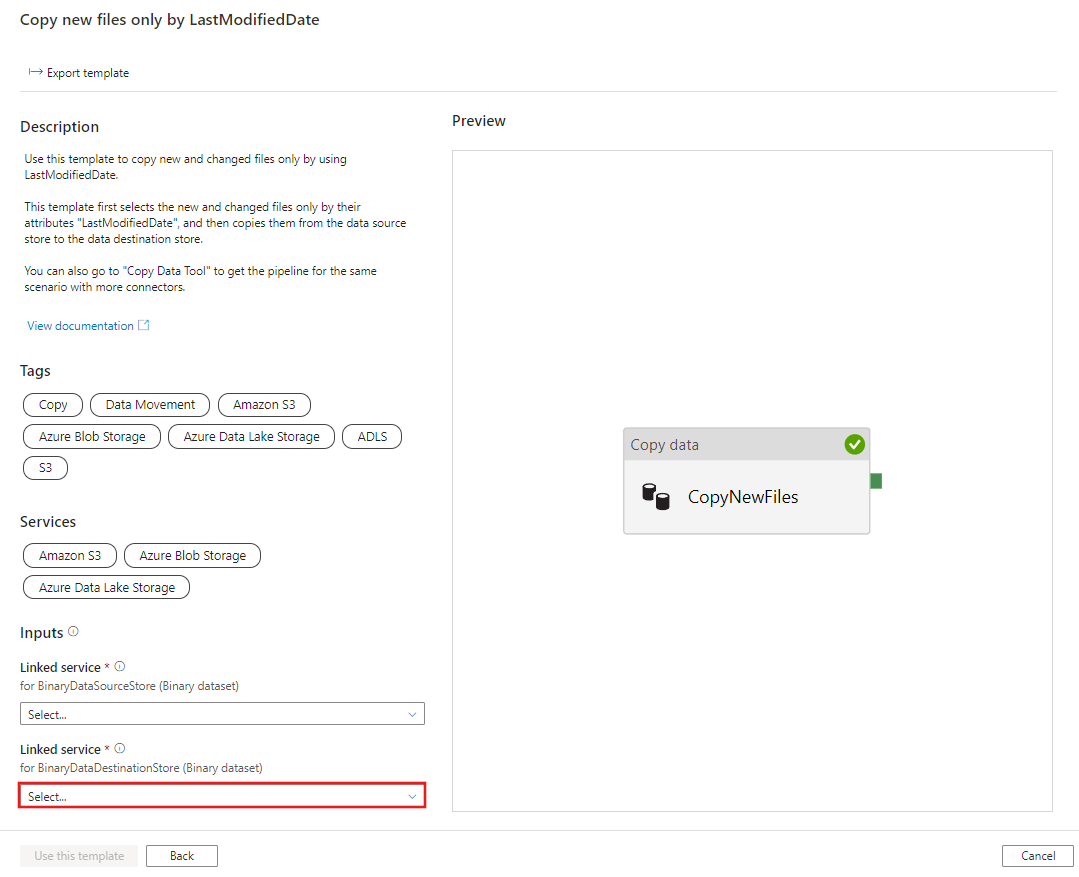

Search for the template Copy new files only by LastModifiedDate, select it, and then select Continue.

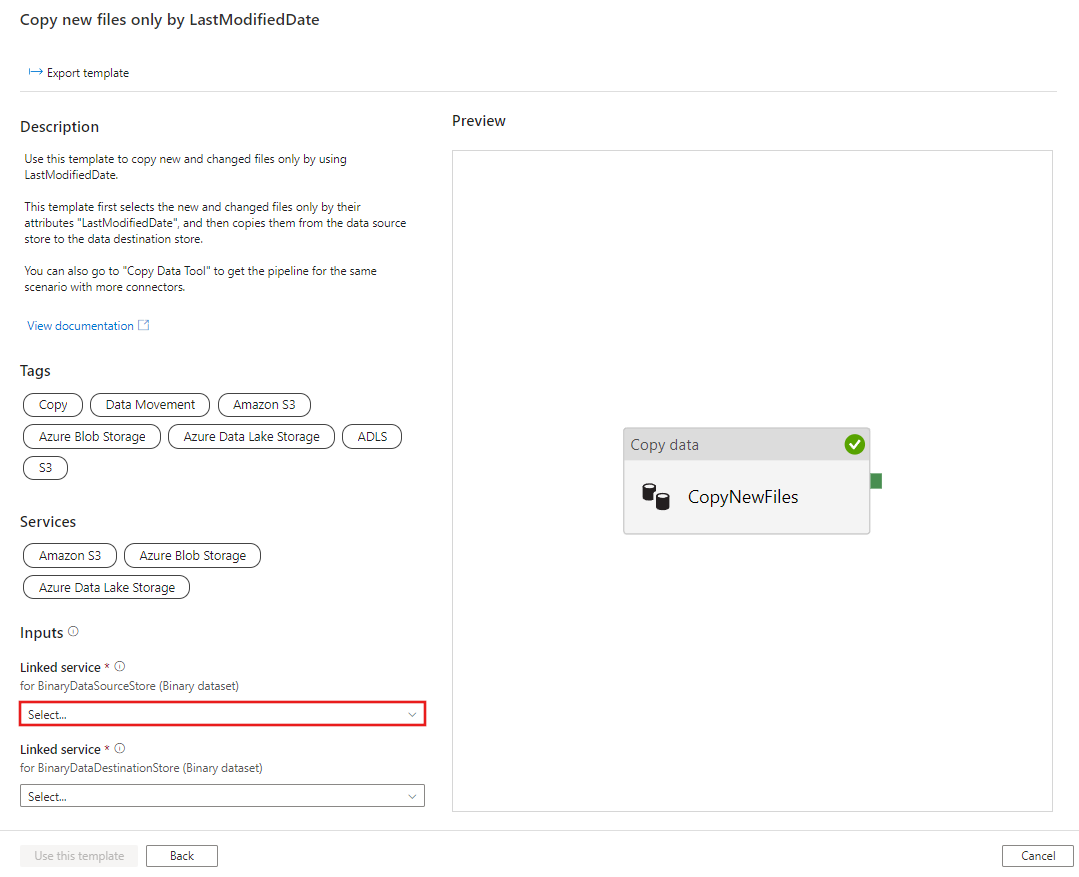

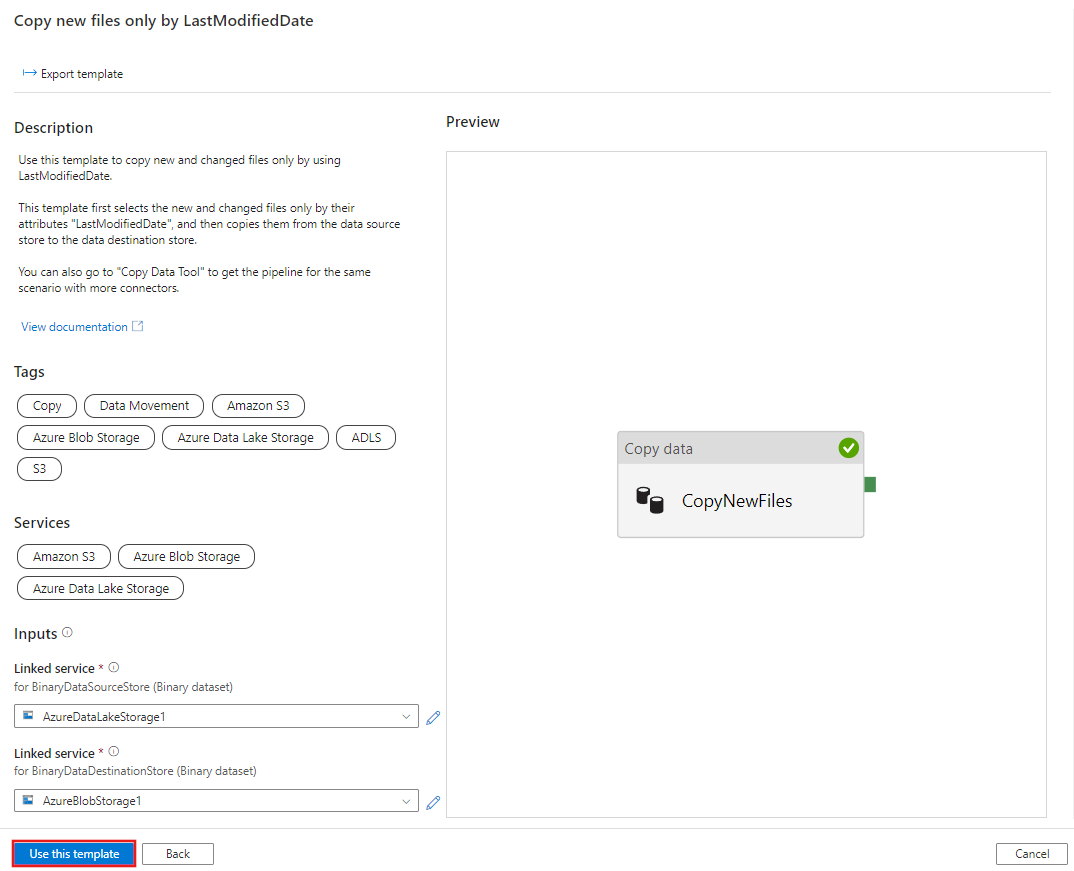

Create a New connection to your destination store. The destination store is where you want to copy files to.

Create a New connection to your source store. The source store is where you want to copy files from.

Select Use this template.

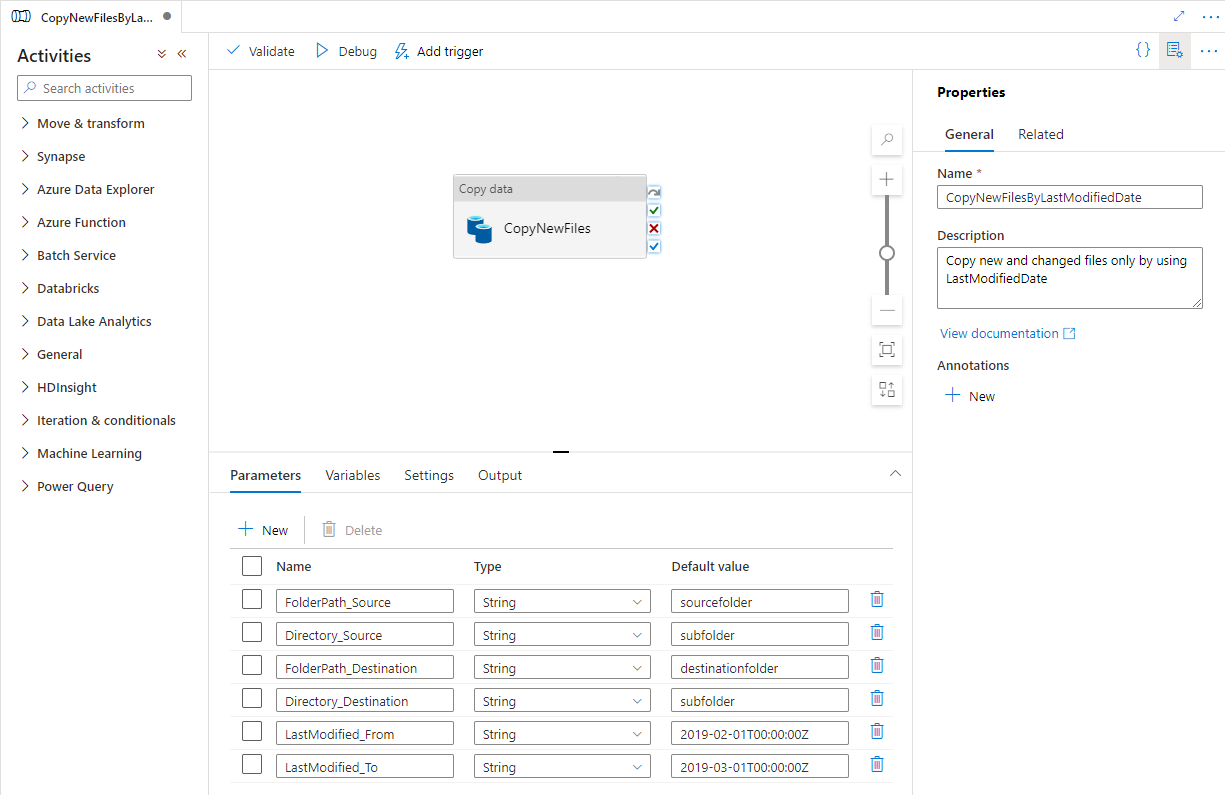

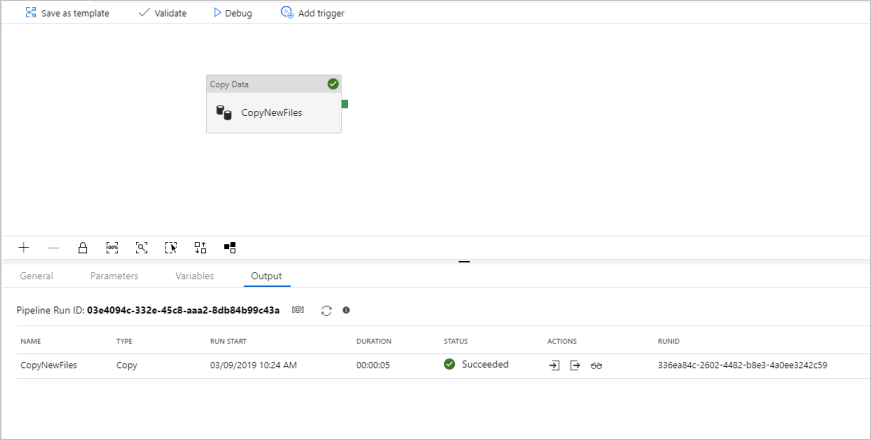

The pipeline is now available in the panel, as shown in the following example:

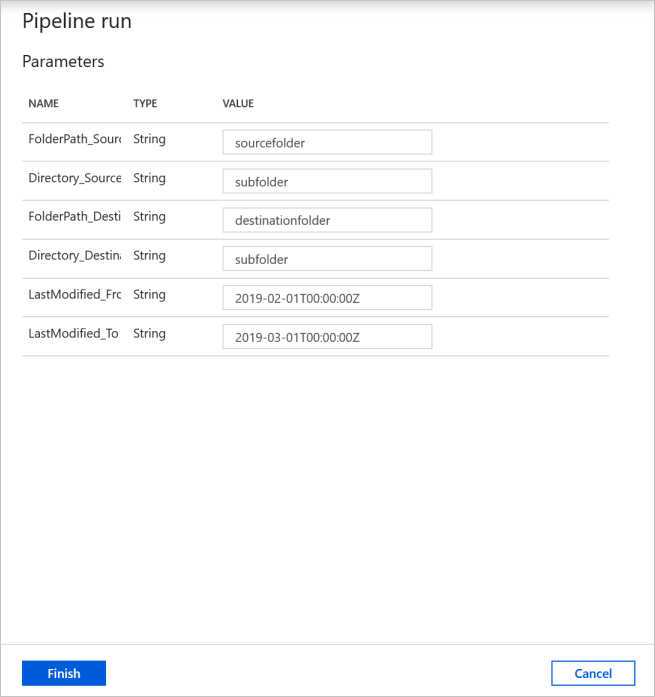

Select Debug, enter values for the Parameters, and select Finish. In the following picture, the parameters are set as follows:

- FolderPath_Source = sourcefolder

- Directory_Source = subfolder

- FolderPath_Destination = destinationfolder

- Directory_Destination = subfolder

- LastModified_From = 2019-02-01T00:00:00Z

- LastModified_To = 2019-03-01T00:00:00Z

In this example, files last modified from 2019-02-01T00:00:00Z (inclusive) up to 2019-03-01T00:00:00Z (exclusive) are copied from the source path sourcefolder/subfolder to the destination path destinationfolder/subfolder. You can replace these times or folders with your own parameter values.
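The following Python sketch applies the selection rule to the example values, assuming the half-open window described for the parameters: LastModified_From is inclusive and LastModified_To is exclusive. The file names and timestamps are hypothetical, and the code only models which files qualify; it doesn't call Data Factory.

```python
from datetime import datetime, timezone

# Example parameter values from the Debug run.
params = {
    "FolderPath_Source": "sourcefolder",
    "Directory_Source": "subfolder",
    "FolderPath_Destination": "destinationfolder",
    "Directory_Destination": "subfolder",
    "LastModified_From": "2019-02-01T00:00:00Z",
    "LastModified_To": "2019-03-01T00:00:00Z",
}


def parse_utc(value: str) -> datetime:
    """Parse an ISO 8601 UTC timestamp that ends in 'Z'."""
    return datetime.strptime(value, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)


def is_selected(last_modified: str, start: str, end: str) -> bool:
    """Half-open window: start <= LastModifiedDate < end."""
    return parse_utc(start) <= parse_utc(last_modified) < parse_utc(end)


source_path = f"{params['FolderPath_Source']}/{params['Directory_Source']}"
destination_path = f"{params['FolderPath_Destination']}/{params['Directory_Destination']}"

# Hypothetical files in sourcefolder/subfolder with their LastModifiedDate.
files = {
    "a.csv": "2019-01-31T23:59:59Z",  # before the window
    "b.csv": "2019-02-01T00:00:00Z",  # exactly at LastModified_From
    "c.csv": "2019-02-15T12:00:00Z",  # inside the window
    "d.csv": "2019-03-01T00:00:00Z",  # exactly at LastModified_To
}

copied = [
    name
    for name, modified in files.items()
    if is_selected(modified, params["LastModified_From"], params["LastModified_To"])
]

print(f"{source_path} -> {destination_path}: {copied}")
# prints: sourcefolder/subfolder -> destinationfolder/subfolder: ['b.csv', 'c.csv']
```

A file stamped exactly at LastModified_To is left for the next window, which starts at that same instant.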

Review the result. Only the files last modified within the configured timespan are copied to the destination store.

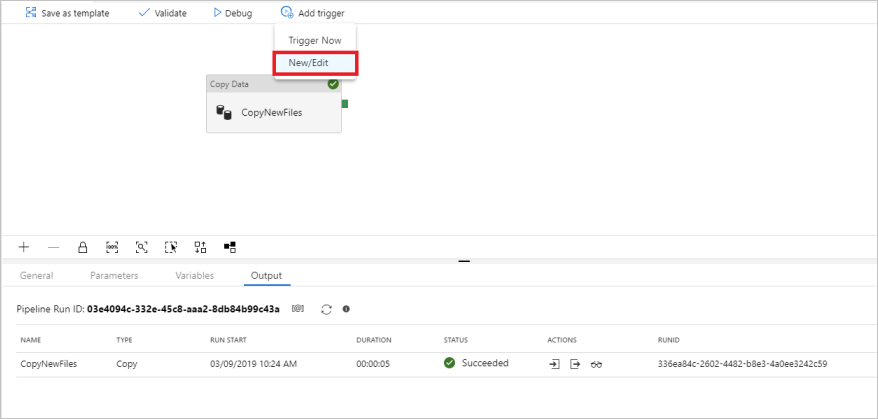

Now you can add a tumbling window trigger to automate this pipeline, so that it periodically copies only the new and changed files, selected by LastModifiedDate. Select Add trigger, and then select New/Edit.

In the Add Triggers window, select + New.

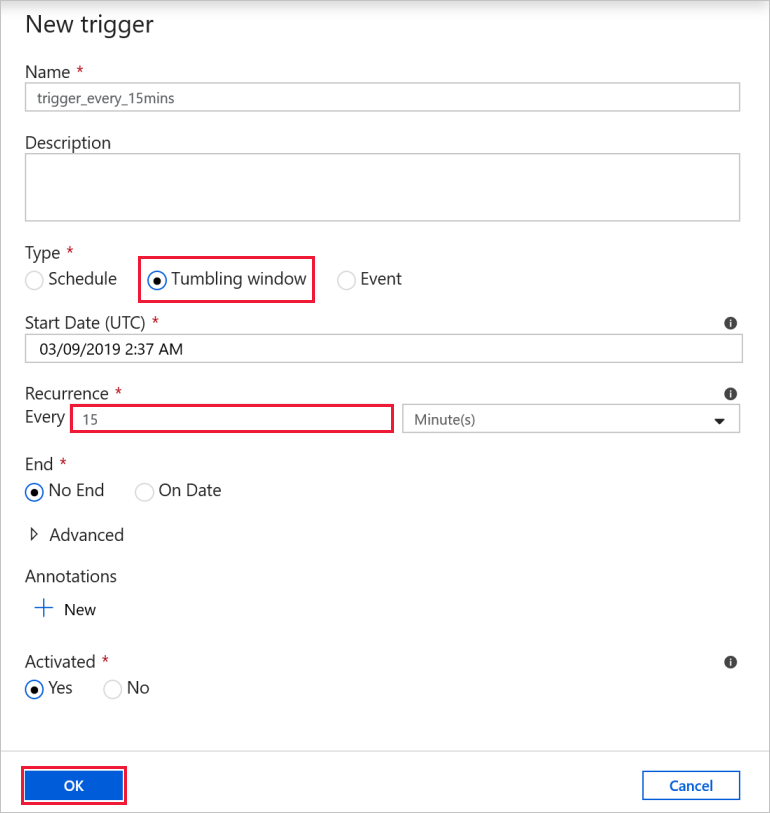

Select Tumbling Window for the trigger type, and set the recurrence to Every 15 minute(s) (you can change this to any interval). Select Yes for Activated, and then select OK.

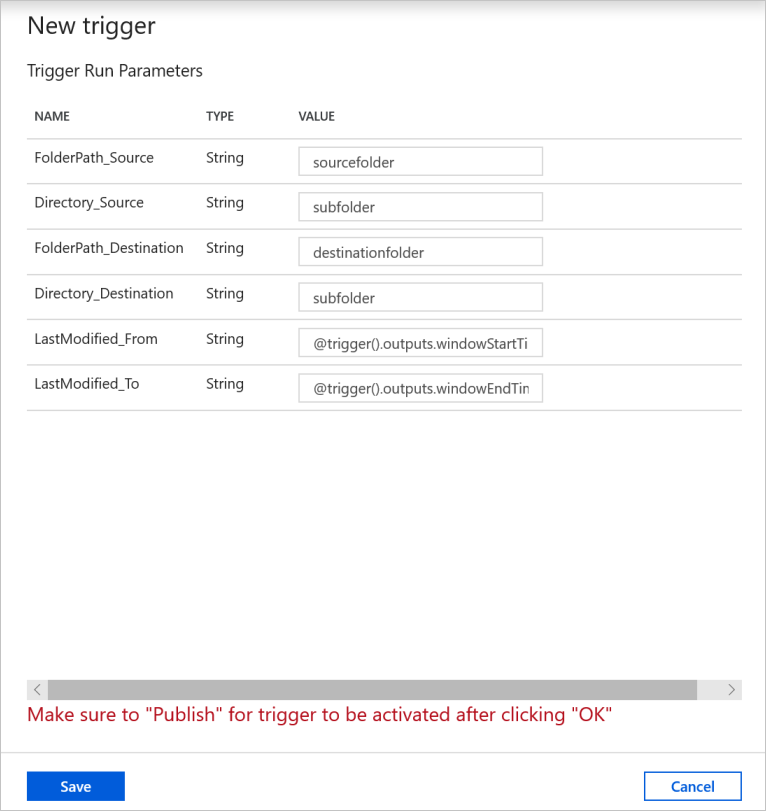
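A tumbling window trigger divides time into fixed-size, non-overlapping, contiguous windows that start from the trigger's start time. The Python sketch below models 15-minute windows as half-open [start, end) intervals to show why every modification time falls into exactly one window. The start time is a hypothetical value, and the code models only the window arithmetic, not the trigger service.

```python
from datetime import datetime, timedelta, timezone


def tumbling_windows(start, interval, count):
    """Return `count` consecutive, non-overlapping [start, end) windows."""
    return [(start + i * interval, start + (i + 1) * interval) for i in range(count)]


def containing_windows(ts, windows):
    """Return the windows whose half-open range [start, end) contains ts."""
    return [w for w in windows if w[0] <= ts < w[1]]


# Hypothetical trigger start time; the interval matches "Every 15 minute(s)".
start = datetime(2019, 3, 1, tzinfo=timezone.utc)
windows = tumbling_windows(start, timedelta(minutes=15), 4)

for window_start, window_end in windows:
    # In this model, these correspond to windowStartTime and windowEndTime.
    print(window_start.isoformat(), "->", window_end.isoformat())

# Each window ends exactly where the next one begins: no gaps, no overlaps.
assert all(prev[1] == nxt[0] for prev, nxt in zip(windows, windows[1:]))

# A file modified exactly on a window boundary is picked up by one window only.
boundary = start + timedelta(minutes=15)
assert containing_windows(boundary, windows) == [windows[1]]
```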

Set the values for the Trigger Run Parameters as follows, and then select Finish.

- FolderPath_Source = sourcefolder. Replace it with your folder in the source data store.

- Directory_Source = subfolder. Replace it with your subfolder in the source data store.

- FolderPath_Destination = destinationfolder. Replace it with your folder in the destination data store.

- Directory_Destination = subfolder. Replace it with your subfolder in the destination data store.

- LastModified_From = @trigger().outputs.windowStartTime. This trigger system variable holds the start time of the current window, which is the end time of the previous window.

- LastModified_To = @trigger().outputs.windowEndTime. This trigger system variable holds the end time of the current window.

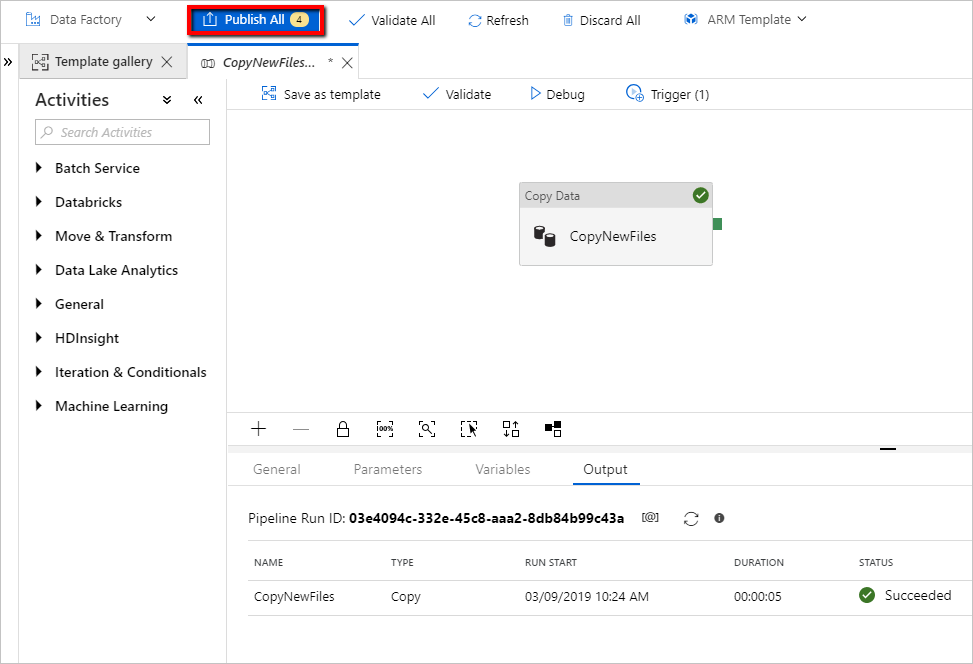
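For reference, the trigger configured in these steps corresponds roughly to a tumbling window trigger definition like the one below, built here as a Python dictionary. The trigger name, pipeline name, start time, and maxConcurrency value are hypothetical placeholders, and the JSON that Data Factory generates when you publish may differ in detail (for example, in how parameter expressions are wrapped).

```python
import json

# Hypothetical names; substitute the names used in your factory.
TRIGGER_NAME = "TumblingWindow15Min"
PIPELINE_NAME = "CopyNewFilesByLastModifiedDate"

trigger = {
    "name": TRIGGER_NAME,
    "properties": {
        "type": "TumblingWindowTrigger",
        "typeProperties": {
            "frequency": "Minute",
            "interval": 15,  # matches "Every 15 minute(s)"
            "startTime": "2019-03-01T00:00:00Z",  # placeholder start time
            "maxConcurrency": 1,  # illustrative value
        },
        "pipeline": {
            "pipelineReference": {
                "type": "PipelineReference",
                "referenceName": PIPELINE_NAME,
            },
            "parameters": {
                "FolderPath_Source": "sourcefolder",
                "Directory_Source": "subfolder",
                "FolderPath_Destination": "destinationfolder",
                "Directory_Destination": "subfolder",
                # Each run receives the boundaries of its own window.
                "LastModified_From": "@trigger().outputs.windowStartTime",
                "LastModified_To": "@trigger().outputs.windowEndTime",
            },
        },
    },
}

print(json.dumps(trigger, indent=2))
```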

Select Publish All.

Create new files in the source folder of your source data store. The pipeline is then triggered automatically, and only the new files are copied to the destination store.

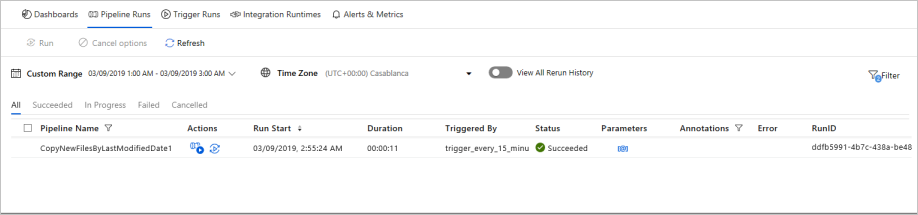

Select the Monitor tab in the left navigation pane, and wait about 15 minutes if you set the trigger recurrence to every 15 minutes.

Review the result. The pipeline is triggered automatically every 15 minutes, and in each run only the new or changed files from the source store are copied to the destination store.