Transform data by running a Synapse Notebook

APPLIES TO:  Azure Data Factory

Azure Data Factory  Azure Synapse Analytics

Azure Synapse Analytics

Tip

Try out Data Factory in Microsoft Fabric, an all-in-one analytics solution for enterprises. Microsoft Fabric covers everything from data movement to data science, real-time analytics, business intelligence, and reporting. Learn how to start a new trial for free!

The Azure Synapse Notebook Activity in a pipeline runs a Synapse notebook in your Azure Synapse Analytics workspace. This article builds on the data transformation activities article, which presents a general overview of data transformation and the supported transformation activities.

You can create an Azure Synapse Analytics notebook activity directly through the Azure Data Factory Studio user interface. For a step-by-step walkthrough of how to create a Synapse notebook activity using the user interface, you can refer to the following.

Add a Notebook activity for Synapse to a pipeline with UI

To use a Notebook activity for Synapse in a pipeline, complete the following steps:

General settings

- Search for Notebook in the pipeline Activities pane, and drag a Notebook activity under the Synapse to the pipeline canvas.

- Select the new Notebook activity on the canvas if it is not already selected.

- In the General settings, enter sample for Name.

- (Option) You can also enter a description.

- Timeout: Maximum amount of time an activity can run. Default is 12 hours, and the maximum amount of time allowed is 7 days. Format is in D.HH:MM:SS.

- Retry: Maximum number of retry attempts.

- Retry interval (sec): The number of seconds between each retry attempt.

- Secure output: When checked, output from the activity won't be captured in logging.

- Secure input: When checked, input from the activity won't be captured in logging.

Azure Synapse Analytics (Artifacts) settings

Select the Azure Synapse Analytics (Artifacts) tab to select or create a new Azure Synapse Analytics linked service that will execute the Notebook activity.

Settings tab

Select the new Synapse Notebook activity on the canvas if it is not already selected.

Select the Settings tab.

Expand the Notebook list, you can select an existing notebook in the linked Azure Synapse Analytics (Artifacts).

Click the Open button to open the page of the linked service where the selected notebook is located.

Select the Settings tab and choose the notebook, and optional base parameters to pass to the notebook.

(Optional) You can fill in information for Synapse notebook. If the following settings are empty, the settings of the Synapse notebook itself will be used to run; if the following settings are not empty, these settings will replace the settings of the Synapse notebook itself.

Property Description Spark pool Reference to the Spark pool. You can select Apache Spark pool from the list. Executor size Number of cores and memory to be used for executors allocated in the specified Apache Spark pool for the session. For dynamic content, valid values are Small/Medium/Large/XLarge/XXLarge. Dynamically allocate executors This setting maps to the dynamic allocation property in Spark configuration for Spark Application executors allocation. Min executors Min number of executors to be allocated in the specified Spark pool for the job. Max executors Max number of executors to be allocated in the specified Spark pool for the job. Driver size Number of cores and memory to be used for driver given in the specified Apache Spark pool for the job.

Azure Synapse Analytics Notebook activity definition

Here is the sample JSON definition of an Azure Synapse Analytics Notebook Activity:

{

"activities": [

{

"name": "demo",

"description": "description",

"type": "SynapseNotebook",

"dependsOn": [],

"policy": {

"timeout": "7.00:00:00",

"retry": 0,

"retryIntervalInSeconds": 30,

"secureOutput": false,

"secureInput": false

},

"userProperties": [

{

"name": "testproperties",

"value": "test123"

}

],

"typeProperties": {

"notebook": {

"referenceName": {

"value": "Notebookname",

"type": "Expression"

},

"type": "NotebookReference"

},

"parameters": {

"test": {

"value": "testvalue",

"type": "string"

}

},

"snapshot": true,

"sparkPool": {

"referenceName": {

"value": "SampleSpark",

"type": "Expression"

},

"type": "BigDataPoolReference"

}

},

"linkedServiceName": {

"referenceName": "AzureSynapseArtifacts1",

"type": "LinkedServiceReference"

}

}

]

}

Azure Synapse Analytics Notebook activity properties

The following table describes the JSON properties used in the JSON definition:

| Property | Description | Required |

|---|---|---|

| name | Name of the activity in the pipeline. | Yes |

| description | Text describing what the activity does. | No |

| type | For Azure Synapse Analytics Notebook Activity, the activity type is SynapseNotebook. | Yes |

| notebook | The name of the notebook to be run in the Azure Synapse Analytics. | Yes |

| sparkPool | The spark pool required to run Azure Synapse Analytics Notebook. | No |

| parameter | Parameter required to run Azure Synapse Analytics Notebook. For more information see Transform data by running a Synapse notebook | No |

Designate a parameters cell

Azure Data Factory looks for the parameters cell and uses the values as defaults for the parameters passed in at execution time. The execution engine will add a new cell beneath the parameters cell with input parameters to overwrite the default values. You can refer to Transform data by running a Synapse notebook.

Read Synapse notebook cell output value

You can read notebook cell output value in activity, for this panel, you can refer to Transform data by running a Synapse notebook.

Run another Synapse notebook

You can reference other notebooks in a Synapse notebook activity via calling %run magic or mssparkutils notebook utilities. Both support nesting function calls. The key differences of these two methods that you should consider based on your scenario are:

- %run magic copies all cells from the referenced notebook to the %run cell and shares the variable context. When notebook1 references notebook2 via

%run notebook2and notebook2 calls a mssparkutils.notebook.exit function, the cell execution in notebook1 will be stopped. We recommend you use %run magic when you want to "include" a notebook file. - mssparkutils notebook utilities calls the referenced notebook as a method or a function. The variable context isn't shared. When notebook1 references notebook2 via

mssparkutils.notebook.run("notebook2")and notebook2 calls a mssparkutils.notebook.exit function, the cell execution in notebook1 will continue. We recommend you use mssparkutils notebook utilities when you want to "import" a notebook.

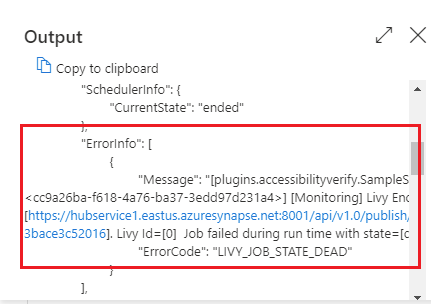

See Azure Synapse Analytics Notebook activity run history

Go to Pipeline runs under the Monitor tab, you'll see the pipeline you have triggered. Open the pipeline that contains notebook activity to see the run history.

For Open notebook snapshot, this feature is not currently supported.

You can see the notebook activity input or output by selecting the input or Output button. If your pipeline failed with a user error, select the output to check the result field to see the detailed user error traceback.