Analyze customer reviews with ai_generate_text() and OpenAI

Important

This feature is in Public Preview.

Warning

The AI function, ai_generate_text() is deprecated. Databricks recommends using ai_query with external models.

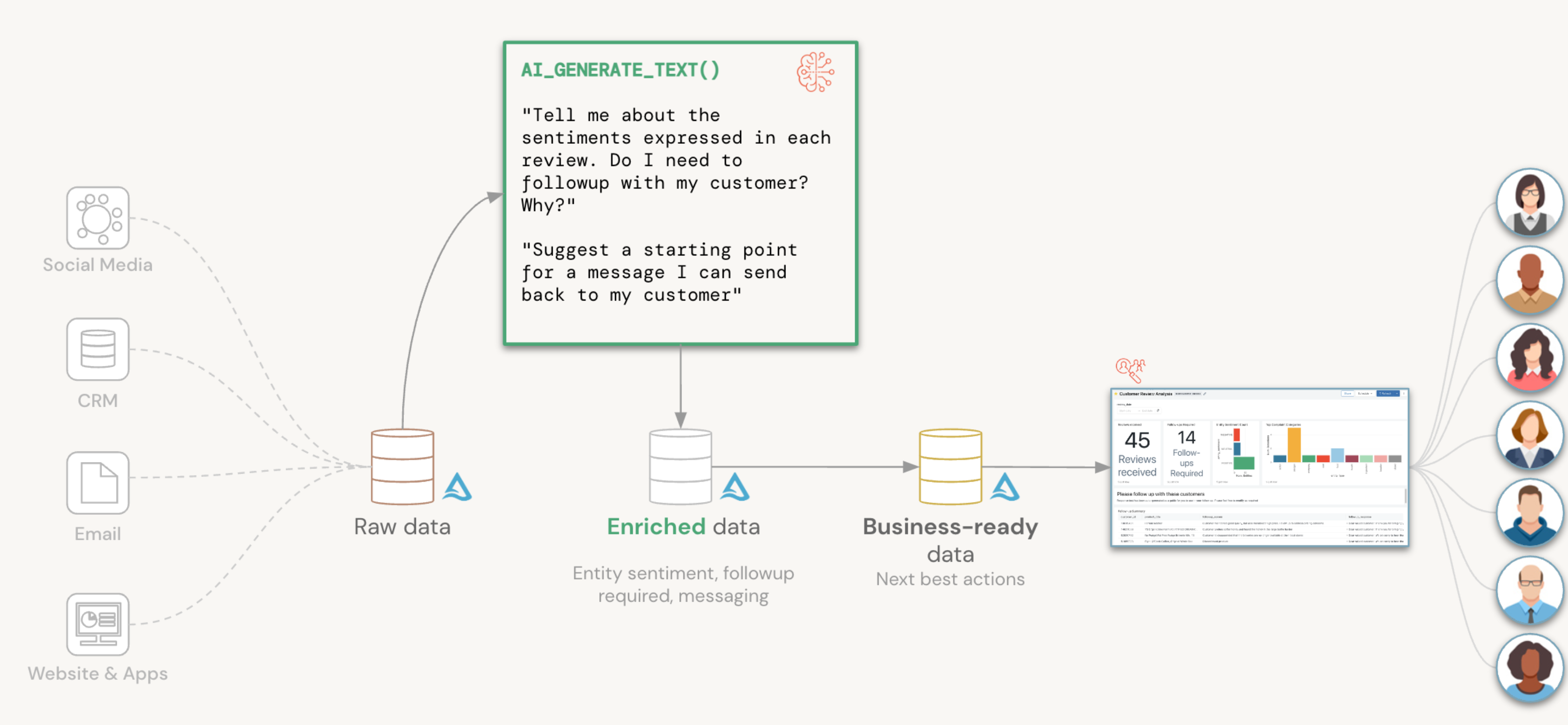

This article illustrates how to use the built-in Databricks SQL function, ai_generate_text() to examine customer reviews and determine if a response needs to be generated. See AI Functions on Azure Databricks for more detail about the function.

The dataset and commands in this guide are from the Databricks demo Action Customer Reviews at Scale with Databricks SQL AI Functions. The demo uses fake data generated by OpenAI that mimics customer reviews for grocery products submitted to an e-commerce website.

This example steps you through:

- Breaking down free-form customer review text into its constituent entities.

- For each entity, determining sentiment and whether a response is required back to the customer.

- Generating a response mentioning alternative products that may satisfy the customer.

Prerequisites

- If you want to run the commands in this guide, you need a dataset to run it on. You can create a fake dataset in the Generate Fake data with AI Functions notebook.

- The SQL commands in this guide must be run in the Databricks SQL query editor. They cannot be run directly in a Azure Databricks notebook using interactive clusters.

- The

ai_generate_text()function is only available in public preview on pro or serverless SQL warehouses.- To enroll in the Public Preview, please populate and submit the AI Functions Public Preview enrollment form.

- An Azure OpenAI key.

- Store the key in Databricks secrets. In this example you store the API key in scope

tokensand secretazure-openai. See Set up and considerations for ai_generate_text().

Prompt design

The keys to getting useful results back from a GPT model are:

- Asking it a well-formed question.

- Being specific about the type of answer that you are expecting.

In order to get results in a form that you can easily store in a table, you can ask the model to return the result in a string that reflects JSON representation, and specify the expected schema.

The following is the example prompt for this scenario:

A customer left a review. Follow up with anyone who appears unhappy.

Extract all entities mentioned. For each entity:

- Classify sentiment as [“POSITIVE”,”NEUTRAL”,”NEGATIVE”]

- Whether customer requires a follow-up: Y or N

- Reason for requiring followup

Return JSON ONLY. No other text outside the JSON.

JSON format:

{

"entities": [{

"entity_name": "entity_name",

"entity_type": "entity_type",

"entity_sentiment": "entity_sentiment",

"followup": "Y or N for follow up",

"followup_reason": "reason for followup"

}]

}

Review:

<’insert_review_text_here’>

Create SQL functions

Databricks recommends decomposing your questions into granular SQL functions so that they can be reused for other scenarios within your organization.

Throughout this section, you create SQL functions in order to abstract away the details of the ai_generate_text() call from the end users, and use those functions as your interface for interacting with Azure OpenAI.

Handle calls to Azure OpenAI

The following wrapper function, prompt_handler(), handles all your calls to Azure OpenAI. The Azure OpenAI API key is stored in a Databricks secret, and you can reference it with the secret() function. You can also pass it the Azure OpenAI resource name (resourceName) and the model’s deployment name (deploymentName).

CREATE OR REPLACE FUNCTION PROMPT_HANDLER(prompt STRING)

RETURNS STRING

RETURN AI_GENERATE_TEXT(prompt,

"azure_openai/gpt-35-turbo",

"apiKey", SECRET("tokens", "azure-openai"),

"temperature", CAST(0.0 AS DOUBLE),

"deploymentName", "llmbricks",

"apiVersion", "2023-03-15-preview",

"resourceName", "llmbricks"

);

Analyze customer review data

The annotate_review() function annotates your review with entities, entity sentiments, and whether a follow-up is required and why. Notice the prompt returns a well-formed json representation, so you can instruct the function to return a struct type for easier querying downstream, such as inserting it into a Delta table.

CREATE OR REPLACE FUNCTION ANNOTATE_REVIEW(review STRING)

RETURNS STRUCT<entities: ARRAY<STRUCT<entity_name: STRING, entity_type: STRING, entity_sentiment: STRING, followup: STRING, followup_reason: STRING>>>

RETURN FROM_JSON(

PROMPT_HANDLER(CONCAT(

'A customer left a review. Follow up with anyone who appears unhappy.

Extract all entities mentioned. For each entity:

- classify sentiment as ["POSITIVE","NEUTRAL","NEGATIVE"]

- whether customer requires a follow-up: Y or N

- reason for requiring followup

Return JSON ONLY. No other text outside the JSON. JSON format:

{

entities: [{

"entity_name": <entity name>,

"entity_type": <entity type>,

"entity_sentiment": <entity sentiment>,

"followup": <Y or N for follow up>,

"followup_reason": <reason for followup>

}]

}

Review:

', review)),

"STRUCT<entities: ARRAY<STRUCT<entity_name: STRING, entity_type: STRING, entity_sentiment: STRING, followup: STRING, followup_reason: STRING>>>"

);

You can pass in data from the customer reviews dataset to see how the annotate_review() function classifies freeform customer reviews.

SELECT review_body,

ANNOTATE_REVIEW(review_body) AS review_annotated

FROM dbdemos.openai_demo.fake_reviews

WHERE product_category = "Grocery"

LIMIT 3;

Generate responses with recommendations

After reviewing the customer responses, you can use the generate_response() function to generate a response to a customer based on their complaint and include recommendations for alternative products to try.

CREATE OR REPLACE FUNCTION GENERATE_RESPONSE(product STRING, entity STRING, reason STRING)

RETURNS STRING

RETURN PROMPT_HANDLER(

CONCAT("What alternative products can you recommend for ", product,

" when a customer had a complaint about ", entity, " because ", reason,

"Give me a response in the tone of an empathetic message back to the customer; only provide the body")

);

The following generates a sample message response for a customer’s review on Country Choice Snacking Cookies.

SELECT GENERATE_RESPONSE("Country Choice Snacking Cookies", "cookies", "Quality issue") AS customer_response

Adhoc queries

You can also create ad-hoc queries using your newly created prompt_handler() function.

For example, you might be interested in understanding whether a review discusses beverages.

SELECT review_id,

PROMPT_HANDLER(

CONCAT(

"Does this review discuss beverages? Answer Y or N only, no explanations or notes. Review: ", review_body)

) AS discusses_beverages,

review_body

FROM dbdemos.openai_demo.fake_reviews

WHERE review_id IN ("R9LEFDWWXPDEY", "R27UON10EV9FSV", "R299ZTEFIAHRQD")

ORDER BY discusses_beverages DESC;