Deploy Azure Databricks in your Azure virtual network (VNet injection)

This article describes how to deploy an Azure Databricks workspace in your own Azure virtual network, also known as VNet injection.

Network customization with VNet injection

The default deployment of Azure Databricks is a fully managed service on Azure. An Azure virtual network (VNet) is deployed to a locked resource group. All classic compute plane resources are associated with that VNet. If you require network customization, you can deploy Azure Databricks classic compute plane resources in your own virtual network. This enables you to:

- Connect Azure Databricks to other Azure services (such as Azure Storage) in a more secure manner using service endpoints or Azure private endpoints.

- Connect to on-premises data sources using user-defined routes. See User-defined route settings for Azure Databricks.

- Connect Azure Databricks to a network virtual appliance to inspect all outbound traffic and take actions according to allow and deny rules. See Option: Route Azure Databricks traffic using a virtual appliance or firewall.

- Configure Azure Databricks to use custom DNS. See Option: Configure custom DNS.

- Configure network security group (NSG) rules to specify egress traffic restrictions.

Deploying Azure Databricks classic compute plane resources to your own VNet also lets you take advantage of flexible CIDR ranges. For the VNet, you can use CIDR range size /16-/24. For the subnets, use IP ranges as small as /26.

Important

You cannot replace the VNet for an existing workspace. If your current workspace cannot accommodate the required number of active cluster nodes, we recommend that you create another workspace in a larger VNet. Follow these detailed migration steps to copy resources (notebooks, cluster configurations, jobs) from the old to new workspace.

Virtual network requirements

The VNet that you deploy your Azure Databricks workspace to must meet the following requirements:

- Region: The VNet must reside in the same region as the Azure Databricks workspace.

- Subscription: The VNet must be in the same subscription as the Azure Databricks workspace.

- Address space: A CIDR block between /16 and /24 for the VNet and a CIDR block up to /26 for the two subnets: a container subnet and a host subnet. For guidance about maximum cluster nodes based on the size of your VNet and its subnets, see Address space and maximum cluster nodes.

- Subnets: The VNet must include two subnets dedicated to your Azure Databricks workspace: a container subnet (sometimes called the private subnet) and a host subnet (sometimes called the public subnet). When you deploy a workspace using secure cluster connectivity, both the container subnet and host subnet use private IPs. You cannot share subnets across workspaces or deploy other Azure resources on the subnets that are used by your Azure Databricks workspace. For guidance about maximum cluster nodes based on the size of your VNet and its subnets, see Address space and maximum cluster nodes.

Address space and maximum cluster nodes

A workspace with a smaller virtual network can run out of IP addresses (network space) more quickly than a workspace with a larger virtual network. Use a CIDR block between /16 and /24 for the VNet and a CIDR block up to /26 for the two subnets (the container subnet and the host subnet). Subnets as small as /28 are possible, but Databricks does not recommend a subnet smaller than /26.

The CIDR range for your VNet address space affects the maximum number of cluster nodes that your workspace can use.

An Azure Databricks workspace requires two subnets in the VNet: a container subnet and a host subnet. Azure reserves five IP addresses in each subnet. Azure Databricks requires two IP addresses for each cluster node: one for the host in the host subnet and one for the container in the container subnet.

- You might not want to use all the address space of your VNet. For example, you might want to create multiple workspaces in one VNet. Because you cannot share subnets across workspaces, you might want subnets that do not use the total VNet address space.

- You must allocate address space for two new subnets that are within the VNet’s address space and don’t overlap address space of current or future subnets in that VNet.
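The sizing and overlap constraints above can be checked before deployment. The following is a minimal sketch using Python's standard `ipaddress` module; the function name `validate_address_plan` and its parameters are hypothetical and are not part of any Azure or Databricks tool.

```python
import ipaddress


def validate_address_plan(vnet_cidr, host_cidr, container_cidr, existing_cidrs=()):
    """Return a list of problems found in a proposed address plan (empty if none)."""
    vnet = ipaddress.ip_network(vnet_cidr)
    host = ipaddress.ip_network(host_cidr)
    container = ipaddress.ip_network(container_cidr)
    problems = []

    # The VNet must be sized between /16 and /24.
    if not 16 <= vnet.prefixlen <= 24:
        problems.append(f"VNet {vnet} is outside the /16 to /24 range")

    for name, subnet in (("host", host), ("container", container)):
        # Both subnets must sit inside the VNet address space.
        if not subnet.subnet_of(vnet):
            problems.append(f"{name} subnet {subnet} is not inside VNet {vnet}")
        # Databricks does not recommend subnets smaller than /26.
        if subnet.prefixlen > 26:
            problems.append(f"{name} subnet {subnet} is smaller than /26")
        # The subnets must not overlap current (or planned) subnets.
        for other in existing_cidrs:
            if subnet.overlaps(ipaddress.ip_network(other)):
                problems.append(f"{name} subnet {subnet} overlaps {other}")

    # The two workspace subnets must not overlap each other.
    if host.overlaps(container):
        problems.append("host and container subnets overlap")
    return problems


if __name__ == "__main__":
    for problem in validate_address_plan(
        "10.0.0.0/16", "10.0.0.0/24", "10.0.1.0/24", existing_cidrs=["10.0.2.0/24"]
    ):
        print(problem)
```

An empty result means the plan satisfies the checks modeled here. It does not confirm that Azure will accept the deployment.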

The following table shows maximum subnet size based on network size. This table assumes no additional subnets exist that take up address space. Use smaller subnets if you have pre-existing subnets or if you want to reserve address space for other subnets:

| VNet address space (CIDR) | Maximum Azure Databricks subnet size (CIDR) assuming no other subnets |
|---|---|
| /16 | /17 |
| /17 | /18 |
| /18 | /19 |
| /20 | /21 |
| /21 | /22 |
| /22 | /23 |
| /23 | /24 |
| /24 | /25 |
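Each row of this table follows from splitting the VNet address space into two equal halves, one for each subnet. As an illustrative sketch, the standard `ipaddress` module can compute that split; the helper name `largest_subnet_pair` is hypothetical, and the sketch assumes no other subnets exist in the VNet.

```python
import ipaddress


def largest_subnet_pair(vnet_cidr):
    """Split a VNet into the two largest equal subnets (prefix length + 1)."""
    vnet = ipaddress.ip_network(vnet_cidr)
    # prefixlen_diff=1 yields exactly two subnets that together cover the VNet.
    host, container = vnet.subnets(prefixlen_diff=1)
    return host, container


if __name__ == "__main__":
    host, container = largest_subnet_pair("10.0.0.0/16")
    print(host, container)
```

Which half is used as the host subnet and which as the container subnet is arbitrary in this sketch.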

To find the maximum cluster nodes based on the subnet size, use the following table. The IP addresses per subnet column includes the five Azure-reserved IP addresses. The rightmost column indicates the number of cluster nodes that can simultaneously run in a workspace that is provisioned with subnets of that size.

| Subnet size (CIDR) | IP addresses per subnet | Maximum Azure Databricks cluster nodes |
|---|---|---|
| /17 | 32768 | 32763 |
| /18 | 16384 | 16379 |
| /19 | 8192 | 8187 |
| /20 | 4096 | 4091 |
| /21 | 2048 | 2043 |
| /22 | 1024 | 1019 |
| /23 | 512 | 507 |
| /24 | 256 | 251 |
| /25 | 128 | 123 |
| /26 | 64 | 59 |
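The node counts above are the subnet's total address count minus the five Azure-reserved addresses. The following sketch reproduces that arithmetic with the standard `ipaddress` module. The function names are hypothetical, and taking the smaller of the two subnets is an assumption that follows from each node needing one address in each subnet.

```python
import ipaddress

AZURE_RESERVED_IPS = 5  # Azure reserves five IP addresses in each subnet


def usable_addresses(subnet_cidr):
    """Addresses left in a subnet after the Azure-reserved ones are removed."""
    return ipaddress.ip_network(subnet_cidr).num_addresses - AZURE_RESERVED_IPS


def max_cluster_nodes(host_cidr, container_cidr):
    """Each node needs one host IP and one container IP, so the smaller subnet limits."""
    return min(usable_addresses(host_cidr), usable_addresses(container_cidr))


if __name__ == "__main__":
    print(max_cluster_nodes("10.0.0.0/26", "10.0.0.64/26"))
```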

Egress IP addresses when using secure cluster connectivity

If you enable secure cluster connectivity on your workspace that uses VNet injection, Databricks recommends that your workspace has a stable egress public IP.

Stable egress public IP addresses are useful because you can add them to external allow lists. For example, you might need a stable outgoing IP address to connect from Azure Databricks to Salesforce. If you configure IP access lists, those public IP addresses must be added to an allow list. See Configure IP access lists for workspaces.

Warning

Microsoft announced that on September 30, 2025, default outbound access connectivity for virtual machines in Azure will be retired. See this announcement. This means that Azure Databricks workspaces that use default outbound access rather than a stable egress public IP might not continue to work after that date. Databricks recommends that you add explicit outbound methods for your workspaces before that date.

To configure a stable egress public IP, see Egress with VNet injection.

Shared resources and peering

If shared networking resources like DNS are required, Databricks strongly recommends you follow the Azure best practices for hub and spoke model. Use VNet peering to extend the private IP space of the workspace VNet to the hub while keeping spokes isolated from each other.

If you have other resources in the VNet or use peering, Databricks strongly recommends that you add Deny rules to the network security groups (NSGs) that are attached to other networks and subnets that are in the same VNet or are peered to that VNet. Add Deny rules for connections for both inbound and outbound connections so they limit connections both to and from Azure Databricks compute resources. If your cluster needs access to resources on the network, add rules to allow only the minimal amount of access required to meet the requirements.

For related information, see Network security group rules.

Create an Azure Databricks workspace using Azure portal

This section describes how to create an Azure Databricks workspace in the Azure portal and deploy it in your own existing VNet. Azure Databricks updates the VNet with two new subnets if those do not exist yet, using CIDR ranges that you specify. The service also updates the subnets with a new network security group, configuring inbound and outbound rules, and finally deploys the workspace to the updated VNet. For more control over the configuration of the VNet, use Azure-Databricks-supplied Azure Resource Manager (ARM) templates instead of the portal. For example, use existing network security groups or create your own security rules. See Advanced configuration using Azure Resource Manager templates.

The user who creates the workspace must be assigned the Network contributor role to the corresponding Virtual Network, or a custom role that’s assigned the Microsoft.Network/virtualNetworks/subnets/join/action and the Microsoft.Network/virtualNetworks/subnets/write permissions.
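As an illustration of the custom-role option, the following sketch builds a role definition document containing the two permissions named above and prints it as JSON. The role name, description, and subscription placeholder are hypothetical; the field layout follows the general Azure custom role JSON format and should be checked against the Azure RBAC documentation before use.

```python
import json

# Permissions named in this article for the user who creates the workspace.
REQUIRED_ACTIONS = [
    "Microsoft.Network/virtualNetworks/subnets/join/action",
    "Microsoft.Network/virtualNetworks/subnets/write",
]


def build_custom_role(subscription_id):
    """Build a custom role definition (hypothetical name and description)."""
    return {
        "Name": "Databricks VNet Injection Deployer",  # hypothetical role name
        "IsCustom": True,
        "Description": "Join and write subnets for Azure Databricks VNet injection.",
        "Actions": list(REQUIRED_ACTIONS),
        "NotActions": [],
        "AssignableScopes": [f"/subscriptions/{subscription_id}"],
    }


if __name__ == "__main__":
    # "<subscription-id>" is a placeholder, not a real identifier.
    print(json.dumps(build_custom_role("<subscription-id>"), indent=2))
```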

You must configure a VNet to which you will deploy the Azure Databricks workspace. You can use an existing VNet or create a new one, but the VNet must be in the same region and same subscription as the Azure Databricks workspace that you plan to create. The VNet must be sized with a CIDR range between /16 and /24. For more requirements, see Virtual network requirements.

Use either existing subnets or specify names and IP ranges for new subnets when you configure your workspace.

In the Azure portal, select + Create a resource > Analytics > Azure Databricks or search for Azure Databricks and click Create or + Add to launch the Azure Databricks Service dialog.

Follow the configuration steps described in the Create an Azure Databricks workspace in your own VNet quickstart.

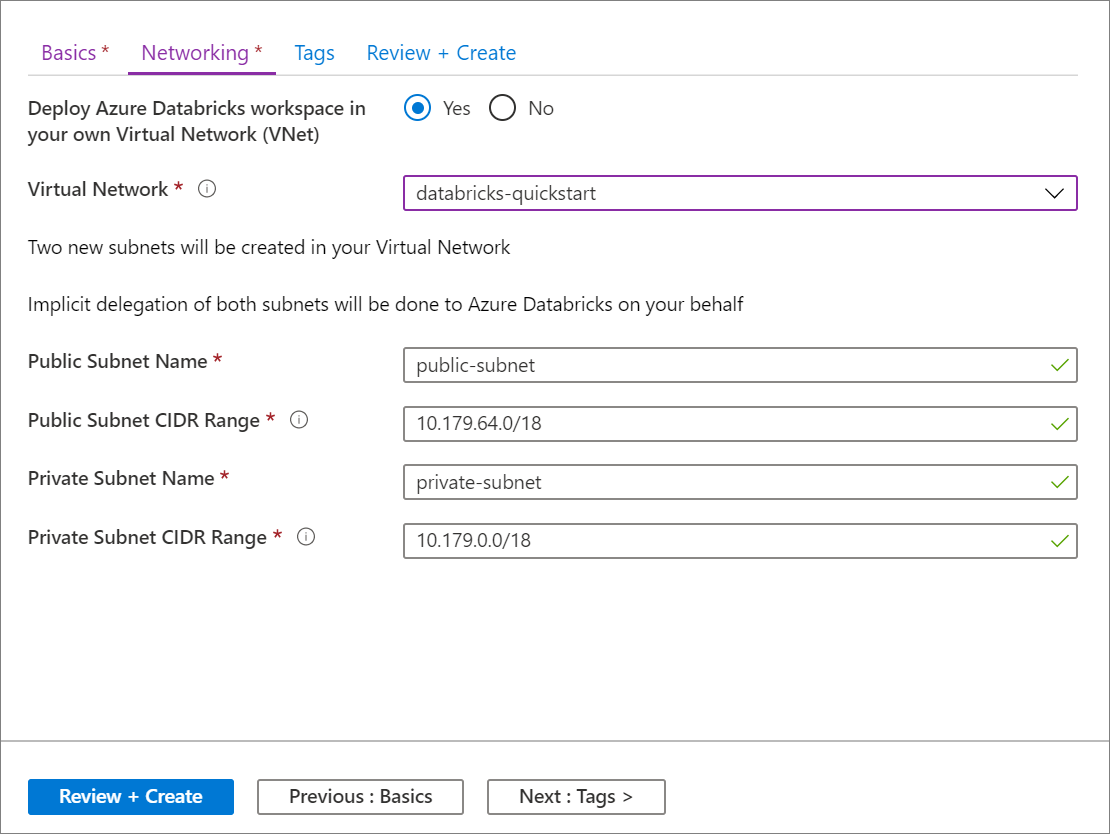

In the Networking tab, select the VNet that you want to use in the Virtual network field.

Important

If you do not see the network name in the picker, confirm that the Azure region that you specified for the workspace matches the Azure region of the desired VNet.

Name your subnets and provide CIDR ranges in a block up to size /26. For guidance about maximum cluster nodes based on the size of your VNet and its subnets, see Address space and maximum cluster nodes. The subnet CIDR ranges cannot be changed after the workspace is deployed.

- To specify existing subnets, specify the exact names of the existing subnets. When using existing subnets, also set the IP ranges in the workspace creation form to exactly match the IP ranges of the existing subnets.

- To create new subnets, specify subnet names that do not already exist in that VNet. The subnets are created with the specified IP ranges. You must specify IP ranges within the IP range of your VNet and not already allocated to existing subnets.

Azure Databricks requires subnet names to be no longer than 80 characters.

The subnets get associated network security group rules that include the rule to allow cluster-internal communication. Azure Databricks has delegated permissions to update both subnets via the Microsoft.Databricks/workspaces resource provider. These permissions apply only to network security group rules that are required by Azure Databricks, not to other network security group rules that you add or to the default network security group rules included with all network security groups.

Click Create to deploy the Azure Databricks workspace to the VNet.

Advanced configuration using Azure Resource Manager templates

For more control over the configuration of the VNet, use the following Azure Resource Manager (ARM) templates instead of the portal-UI-based automatic VNet configuration and workspace deployment. For example, use existing subnets, an existing network security group, or add your own security rules.

If you are using a custom Azure Resource Manager template or the Workspace Template for Azure Databricks VNet Injection to deploy a workspace to an existing VNet, you must create host and container subnets, attach a network security group to each subnet, and delegate the subnets to the Microsoft.Databricks/workspaces resource provider before deploying the workspace. You must have a separate pair of subnets for each workspace that you deploy.
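To make the subnet prerequisites concrete, the following sketch builds the kind of subnet definition an ARM template would contain: an address prefix, an attached network security group, and a delegation to `Microsoft.Databricks/workspaces`. The subnet names, address prefixes, delegation name, and NSG resource ID are hypothetical placeholders. The property layout reflects the general ARM subnet schema and is not copied from the Databricks-supplied templates.

```python
import json

DELEGATION_SERVICE = "Microsoft.Databricks/workspaces"


def build_delegated_subnet(name, address_prefix, nsg_id):
    """Build an ARM-style subnet definition with an NSG and a Databricks delegation."""
    return {
        "name": name,
        "properties": {
            "addressPrefix": address_prefix,
            # Each subnet must have a network security group attached.
            "networkSecurityGroup": {"id": nsg_id},
            # Each subnet must be delegated before the workspace is deployed.
            "delegations": [
                {
                    "name": f"{name}-databricks-delegation",  # hypothetical name
                    "properties": {"serviceName": DELEGATION_SERVICE},
                }
            ],
        },
    }


if __name__ == "__main__":
    nsg_id = "<nsg-resource-id>"  # placeholder, not a real resource ID
    subnets = [
        build_delegated_subnet("host-subnet", "10.0.0.0/26", nsg_id),
        build_delegated_subnet("container-subnet", "10.0.0.64/26", nsg_id),
    ]
    print(json.dumps(subnets, indent=2))
```

A separate pair of such subnets is needed for each workspace.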

All-in-one template

To create a VNet and Azure Databricks workspace using one template, use the All-in-one Template for Azure Databricks VNet Injected Workspaces.

Virtual network template

To create a VNet with the proper subnets using a template, use the VNet Template for Databricks VNet Injection.

Azure Databricks workspace template

To deploy an Azure Databricks workspace to an existing VNet with a template, use the Workspace Template for Azure Databricks VNet Injection.

The workspace template allows you to specify an existing VNet and use existing subnets:

- You must have a separate pair of host/container subnets for each workspace that you deploy. It is unsupported to share subnets across workspaces or to deploy other Azure resources on the subnets that are used by your Azure Databricks workspace.

- Your VNet’s host and container subnets must have network security groups attached and must be delegated to the Microsoft.Databricks/workspaces service before you use this Azure Resource Manager template to deploy a workspace.

- To create a VNet with properly delegated subnets, use the VNet Template for Databricks VNet Injection.

- To use an existing VNet when you have not yet delegated the host and container subnets, see Add or remove a subnet delegation.

Network security group rules

The following tables display the current network security group rules used by Azure Databricks. If Azure Databricks needs to add a rule or change the scope of an existing rule on this list, you will receive advance notice. This article and the tables will be updated whenever such a modification occurs.

How Azure Databricks manages network security group rules

The NSG rules listed in the following sections represent those that Azure Databricks auto-provisions and manages in your NSG, by virtue of the delegation of your VNet’s host and container subnets to the Microsoft.Databricks/workspaces service. You do not have permission to update or delete these NSG rules and any attempt to do so is blocked by the subnet delegation. Azure Databricks must own these rules in order to ensure that Microsoft can reliably operate and support the Azure Databricks service in your VNet.

Some of these NSG rules have VirtualNetwork assigned as the source and destination. This has been implemented to simplify the design in the absence of a subnet-level service tag in Azure. All clusters are protected by a second layer of network policy internally, such that cluster A cannot connect to cluster B in the same workspace. This also applies across multiple workspaces if your workspaces are deployed into a different pair of subnets in the same customer-managed VNet.

Important

Databricks strongly recommends that you add Deny rules to the network security groups (NSGs) that are attached to other networks and subnets that are in the same VNet or are peered to that VNet. Add Deny rules for connections for both inbound and outbound connections so they limit connections both to and from Azure Databricks compute resources. If your cluster needs access to resources on the network, add rules to allow only the minimal amount of access required to meet the requirements.

Network security group rules for workspaces

The information in this section applies only to Azure Databricks workspaces created after January 13, 2020. If your workspace was created before the release of secure cluster connectivity (SCC) on January 13, 2020, see the next section.

This table lists the network security group rules for workspaces and includes two inbound security group rules that are included only if secure cluster connectivity (SCC) is disabled.

| Direction | Protocol | Source | Source Port | Destination | Dest Port | Used |
|---|---|---|---|---|---|---|
| Inbound | Any | VirtualNetwork | Any | VirtualNetwork | Any | Default |
| Inbound | TCP | AzureDatabricks (service tag). Only if SCC is disabled | Any | VirtualNetwork | 22 | Public IP |
| Inbound | TCP | AzureDatabricks (service tag). Only if SCC is disabled | Any | VirtualNetwork | 5557 | Public IP |
| Outbound | TCP | VirtualNetwork | Any | AzureDatabricks (service tag) | 443, 3306, 8443-8451 | Default |
| Outbound | TCP | VirtualNetwork | Any | SQL | 3306 | Default |
| Outbound | TCP | VirtualNetwork | Any | Storage | 443 | Default |
| Outbound | Any | VirtualNetwork | Any | VirtualNetwork | Any | Default |
| Outbound | TCP | VirtualNetwork | Any | EventHub | 9093 | Default |
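If you layer additional egress controls (for example, a firewall allow list) on top of these rules, it can help to compare your allowed destinations against the TCP outbound rows in the table. The following is a minimal sketch under stated assumptions: the `allowed` mapping of destination label to port set is a hypothetical simplified representation, not an Azure NSG export format, and the destination labels are copied from the table rather than from Azure service tag names.

```python
# TCP outbound destinations and ports taken from the table above.
REQUIRED_OUTBOUND = {
    "AzureDatabricks": {443, 3306} | set(range(8443, 8452)),  # 8443-8451 inclusive
    "SQL": {3306},
    "Storage": {443},
    "EventHub": {9093},
}


def missing_outbound(allowed):
    """Return {destination: [ports]} for required ports absent from `allowed`."""
    missing = {}
    for destination, ports in REQUIRED_OUTBOUND.items():
        gap = ports - set(allowed.get(destination, ()))
        if gap:
            missing[destination] = sorted(gap)
    return missing


if __name__ == "__main__":
    allowed = {
        "AzureDatabricks": {443, 3306, *range(8443, 8452)},
        "SQL": {3306},
        "EventHub": {9093},
    }
    # Storage on port 443 is not allowed in this example input.
    print(missing_outbound(allowed))
```

The VirtualNetwork-to-VirtualNetwork rule is omitted from this sketch because it covers any protocol and port.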

Note

If you restrict outbound rules, Databricks recommends that you open ports 111 and 2049 to enable certain library installs.

Important

Azure Databricks is a Microsoft Azure first-party service that is deployed on the Global Azure Public Cloud infrastructure. All communications between components of the service, including between the public IPs in the control plane and the customer compute plane, remain within the Microsoft Azure network backbone. See also Microsoft global network.

Troubleshooting

Workspace creation errors

Subnet <subnet-id> requires any of the following delegation(s) [Microsoft.Databricks/workspaces] to reference service association link

Possible cause: you are creating a workspace in a VNet whose host and container subnets have not been delegated to the Microsoft.Databricks/workspaces service. Each subnet must have a network security group attached and must be properly delegated. See Virtual network requirements for more information.

The subnet <subnet-id> is already in use by workspace <workspace-id>

Possible cause: you are creating a workspace in a VNet with host and container subnets that are already being used by an existing Azure Databricks workspace. Multiple workspaces cannot share a single subnet. You must have a new pair of host and container subnets for each workspace you deploy.

Cluster creation errors

Instances Unreachable: Resources were not reachable via SSH.

Possible cause: traffic from control plane to workers is blocked. If you are deploying to an existing VNet connected to your on-premises network, review your setup using the information supplied in Connect your Azure Databricks workspace to your on-premises network.

Unexpected Launch Failure: An unexpected error was encountered while setting up the cluster. Please retry and contact Azure Databricks if the problem persists. Internal error message: Timeout while placing node.

Possible cause: traffic from workers to Azure Storage endpoints is blocked. If you are using custom DNS servers, also check the status of the DNS servers in your VNet.

Cloud Provider Launch Failure: A cloud provider error was encountered while setting up the cluster. See the Azure Databricks guide for more information. Azure error code: AuthorizationFailed/InvalidResourceReference.

Possible cause: the VNet or subnets do not exist any more. Make sure the VNet and subnets exist.

Cluster terminated. Reason: Spark Startup Failure: Spark was not able to start in time. This issue can be caused by a malfunctioning Hive metastore, invalid Spark configurations, or malfunctioning init scripts. See the Spark driver logs to troubleshoot this issue, and contact Databricks if the problem persists. Internal error message: Spark failed to start: Driver failed to start in time.

Possible cause: Container cannot talk to hosting instance or workspace storage account. Fix by adding a custom route to the subnets for the workspace storage account with the next hop being Internet.
