General

What is an Azure Event Hubs namespace?

A namespace is a scoping container for event hubs or Kafka topics. It gives you a unique FQDN. A namespace serves as an application container that can house multiple event hubs or Kafka topics.

Is it possible to change pricing tier after deployment?

No. Once deployed, it isn't possible to change (for example) from standard tier to premium tier without deploying a new resource.

When do I create a new namespace vs. use an existing namespace?

Capacity allocations throughput units (TUs) or processing units (PUs)) are billed at the namespace level. A namespace is also associated with a region.

You might want to create a new namespace instead of using an existing one in one of the following scenarios:

- You need an event hub associated with a new region.

- You need an event hub associated with a different subscription.

- You need an event hub with a distinct capacity allocation (that is, the capacity need for the namespace with the added event hub would exceed the 40 TU threshold and you don't want to go for the dedicated cluster).``

What is the difference between Event Hubs basic and standard tiers?

The Standard tier of Azure Event Hubs provides features beyond what is available in the Basic tier. The following features are included with Standard:

- Longer event retention

- Additional brokered connections, with an overage charge for more than the number included

- More than a single consumer group

- Capture

- Kafka integration

For more information about pricing tiers, including Event Hubs Dedicated, see the Event Hubs pricing details.

Where is Azure Event Hubs available?

Azure Event Hubs is available in all supported Azure regions. For a list, visit the Azure regions page.

Can I use a single Advanced Message Queuing Protocol (AMQP) connection to send and receive from multiple event hubs?

Yes, as long as all the event hubs are in the same namespace.

What is the maximum retention period for events?

Event Hubs standard tier currently supports a maximum retention period of seven days while for premium and dedicated tier, this limit is 90 days. Event hubs aren't intended as a permanent data store. Retention periods greater than 24 hours are intended for scenarios in which it's convenient to replay a stream of events into the same systems. For example, to train or verify a new machine learning model on existing data. If you need message retention beyond seven days, enabling Event Hubs Capture on your event hub pulls the data from your event hub into the Storage account or Azure Data Lake Service account of your choosing. Enabling Capture incurs a charge based on your purchased throughput units.

You can configure the retention period for the captured data on your storage account. The lifecycle management feature of Azure Storage offers a rich, rule-based policy for general purpose v2 and blob storage accounts. Use the policy to transition your data to the appropriate access tiers or expire at the end of the data's lifecycle. For more information, see Manage the Azure Blob storage lifecycle.

How do I monitor my event hubs?

Event Hubs emits exhaustive metrics that provide the state of your resources to Azure Monitor. They also let you assess the overall health of the Event Hubs service not only at the namespace level but also at the entity level. Learn about what monitoring is offered for Azure Event Hubs.

Where does Azure Event Hubs store data?

Azure Event Hubs standard, premium, and dedicated tiers store and process data published to it in the region that you select when you create an Event Hubs name space. By default, customer data stays within that region. When geo-disaster recovery is set up for an Azure Event Hubs namespace, metadata is copied over to the secondary region that you select. Therefore, this service automatically satisfies the region data residency requirements including the ones specified in the Trust Center.

What protocols I can use to send and receive events?

Producers or senders can use Advanced Messaging Queuing Protocol (AMQP), Kafka, or HTTPS protocols to send events to an event hub.

Consumers or receivers use AMQP or Kafka to receive events from an event hub. Event Hubs supports only the pull model for consumers to receive events from it. Even when you use event handlers to handle events from an event hub, the event processor internally uses the pull model to receive events from the event hub.

AMQP

You can use the AMQP 1.0 protocol to send events to and receive events from Azure Event Hubs. AMQP provides reliable, performant, and secure communication for both sending and receiving events. You can use it for high-performance and real-time streaming and is supported by most Azure Event Hubs SDKs.

HTTPS/REST API

You can only send events to Event Hubs using HTTP POST requests. Event Hubs doesn't support receiving events over HTTPS. It's suitable for lightweight clients where a direct TCP connection isn't feasible.

Apache Kafka

Azure Event Hubs has a built-in Kafka endpoint that supports Kafka producers and consumers. Applications that are built using Kafka can use Kafka protocol (version 1.0 or later) to send and receive events from Event Hubs without any code changes.

Azure SDKs abstract the underlying communication protocols and provide a simplified way to send and receive events from Event Hubs using languages like C#, Java, Python, JavaScript, etc.

What ports do I need to open on the firewall?

You can use the following protocols with Azure Event Hubs to send and receive events:

- Advanced Message Queuing Protocol 1.0 (AMQP)

- Hypertext Transfer Protocol 1.1 with Transport Layer Security (HTTPS)

- Apache Kafka

See the following table for the outbound ports you need to open to use these protocols to communicate with Azure Event Hubs.

| Protocol | Ports | Details |

|---|---|---|

| AMQP | 5671 and 5672 | See AMQP protocol guide |

| HTTPS | 443 | This port is used for the HTTP/REST API and for AMQP-over-WebSockets. |

| Kafka | 9093 | See Use Event Hubs from Kafka applications |

The HTTPS port is required for outbound communication also when AMQP is used over port 5671, because several management operations performed by the client SDKs and the acquisition of tokens from Microsoft Entra ID (when used) run over HTTPS.

The official Azure SDKs generally use the AMQP protocol for sending and receiving events from Event Hubs. The AMQP-over-WebSockets protocol option runs over port TCP 443 just like the HTTP API, but is otherwise functionally identical with plain AMQP. This option has higher initial connection latency because of extra handshake round trips and slightly more overhead as tradeoff for sharing the HTTPS port. If this mode is selected, TCP port 443 is sufficient for communication. The following options allow selecting the plain AMQP or AMQP WebSockets mode:

| Language | Option |

|---|---|

| .NET | EventHubConnectionOptions.TransportType property with EventHubsTransportType.AmqpTcp or EventHubsTransportType.AmqpWebSockets |

| Java | com.microsoft.azure.eventhubs.EventProcessorClientBuilder.transporttype with AmqpTransportType.AMQP or AmqpTransportType.AMQP_WEB_SOCKETS |

| Node | EventHubConsumerClientOptions has a webSocketOptions property. |

| Python | EventHubConsumerClient.transport_type with TransportType.Amqp or TransportType.AmqpOverWebSocket |

What IP addresses do I need to allow?

When you're working with Azure, sometimes you have to allow specific IP address ranges or URLs in your corporate firewall or proxy to access all Azure services you're using or trying to use. Verify that the traffic is allowed on IP addresses used by Event Hubs. For IP addresses used by Azure Event Hubs: see Azure IP Ranges and Service Tags - Public Cloud.

Also, verify that the IP address for your namespace is allowed. To find the right IP addresses to allow for your connections, follow these steps:

Run the following command from a command prompt:

nslookup <YourNamespaceName>.servicebus.windows.netNote down the IP address returned in

Non-authoritative answer.

If you use a namespace hosted in an older cluster (based on Cloud Services - CNAME ending in *.cloudapp.net) and the namespace is zone redundant, you need to follow few extra steps. If your namespace is on a newer cluster (based on Virtual Machine Scale Set - CNAME ending in *.cloudapp.azure.com) and zone redundant you can skip below steps.

First, you run nslookup on the namespace.

nslookup <yournamespace>.servicebus.windows.netNote down the name in the non-authoritative answer section, which is in one of the following formats:

<name>-s1.cloudapp.net <name>-s2.cloudapp.net <name>-s3.cloudapp.netRun nslookup for each one with suffixes s1, s2, and s3 to get the IP addresses of all three instances running in three availability zones,

Note

The IP address returned by the

nslookupcommand isn't a static IP address. However, it remains constant until the underlying deployment is deleted or moved to a different cluster.

What client IPs are sending events to or receiving events from my namespace?

First, enable IP filtering on the namespace.

Then, Enable diagnostic logs for Event Hubs virtual network connection events by following instructions in the Enable diagnostic logs. You see the IP address for which connection is denied.

{

"SubscriptionId": "0000000-0000-0000-0000-000000000000",

"NamespaceName": "namespace-name",

"IPAddress": "1.2.3.4",

"Action": "Deny Connection",

"Reason": "IPAddress doesn't belong to a subnet with Service Endpoint enabled.",

"Count": "65",

"ResourceId": "/subscriptions/0000000-0000-0000-0000-000000000000/resourcegroups/testrg/providers/microsoft.eventhub/namespaces/namespace-name",

"Category": "EventHubVNetConnectionEvent"

}

Important

Virtual network logs are generated only if the namespace allows access from specific IP addresses (IP filter rules). If you don't want to restrict access to your namespace using these features and still want to get virtual network logs to track IP addresses of clients connecting to the Event Hubs namespace, you could use the following workaround: Enable IP filtering, and add the total addressable IPv4 range (0.0.0.0/1 - 128.0.0.0/1) and IPv6 range (::/1 - 8000::/1).

Note

Currently, it's not possible to determine the source IP of an individual message or event.

Apache Kafka integration

How do I integrate my existing Kafka application with Event Hubs?

Event Hubs provides a Kafka endpoint that can be used by your existing Apache Kafka based applications. A configuration change is all that is required to have the PaaS Kafka experience. It provides an alternative to running your own Kafka cluster. Event Hubs supports Apache Kafka 1.0 and newer client versions and works with your existing Kafka applications, tools, and frameworks. For more information, see Event Hubs for Kafka repo.

What configuration changes need to be done for my existing application to talk to Event Hubs?

To connect to an event hub, you'll need to update the Kafka client configs. It's done by creating an Event Hubs namespace and obtaining the connection string. Change the bootstrap.servers to point the Event Hubs FQDN and the port to 9093. Update the sasl.jaas.config to direct the Kafka client to your Event Hubs endpoint (which is the connection string you've obtained), with correct authentication as shown below:

bootstrap.servers={YOUR.EVENTHUBS.FQDN}:9093

request.timeout.ms=60000

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="{YOUR.EVENTHUBS.CONNECTION.STRING}";

Example:

bootstrap.servers=dummynamespace.servicebus.windows.net:9093

request.timeout.ms=60000

security.protocol=SASL_SSL

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$ConnectionString" password="Endpoint=sb://dummynamespace.servicebus.windows.net/;SharedAccessKeyName=DummyAccessKeyName;SharedAccessKey=XXXXXXXXXXXXXXXXXXXXX";

Note

If sasl.jaas.config isn't a supported configuration in your framework, find the configurations that are used to set the SASL username and password and use them instead. Set the username to $ConnectionString and the password to your Event Hubs connection string.

What is the message/event size for Event Hubs?

The maximum message size allowed for Event Hubs is 1 MB.

Throughput units

What are Event Hubs throughput units? (Standard tier)

Throughput in Event Hubs defines the amount of data in mega bytes or the number (in thousands) of 1-KB events that ingress and egress through Event Hubs. This throughput is measured in throughput units (TUs). Purchase TUs before you can start using the Event Hubs service. You can explicitly select Event Hubs TUs either by using portal or Event Hubs Resource Manager templates.

Do throughput units apply to all event hubs in a namespace?

Yes, throughput units (TUs) apply to all event hubs in an Event Hubs namespace. It means that you purchase TUs at the namespace level and are shared among the event hubs under that namespace. Each TU entitles the namespace to the following capabilities:

- Up to 1 MB per second of ingress events (events sent into an event hub), but no more than 1,000 ingress events, management operations, or control API calls per second.

- Up to 2 MB per second of egress events (events consumed from an event hub), but no more than 4,096 egress events.

- Up to 84 GB of event storage (enough for the default 1 hour retention period).

How are throughput units billed?

Throughput units (TUs) are billed on an hourly basis. The billing is based on the maximum number of units that was selected during the given hour.

How can I optimize the usage on my throughput units?

You can start as low as one throughput unit (TU), and turn on autoinflate. The autoinflate feature lets you grow your TUs as your traffic/payload increases. You can also set an upper limit on the number of TUs.

How does Autoinflate feature of Event Hubs work?

The autoinflate feature lets you scale up your throughput units (TUs). It means that you can start by purchasing low TUs and autoinflate scales up your TUs as your ingress increases. It gives you a cost-effective option and complete control of the number of TUs to manage. This feature is a scale-up only feature, and you can completely control the scaling down of the number of TUs by updating it.

You might want to start with low throughput units (TUs), for example, 2 TUs. If you predict that your traffic might grow to 15 TUs, enable the auto inflate feature on your namespace, and set the max limit to 15 TUs. You can now grow your TUs automatically as your traffic grows.

Is there a cost associated when I enable the auto inflate feature?

There's no cost associated with this feature.

Can Zone Redundancy be enabled for an existing Event Hubs Namespace?

Currently, this isn't possible because old Event Hubs namespaces are in different clusters, and there's no way to migrate them to the new clusters that automatically enable zone redundancy when new event hub namespaces are created.

How are throughput limits enforced?

If the total ingress throughput or the total ingress event rate across all event hubs in a namespace exceeds the aggregate throughput unit allowances, senders are throttled and receive errors indicating that the ingress quota has been exceeded.

If the total egress throughput or the total event egress rate across all event hubs in a namespace exceeds the aggregate throughput unit allowances, receivers are throttled but no throttling errors are generated.

Ingress and egress quotas are enforced separately, so that no sender can cause event consumption to slow down, nor can a receiver prevent events from being sent into an event hub.

Is there a limit on the number of throughput units that can be reserved/selected?

When creating a basic or a standard tier namespace in the Azure portal, you can select up to 40 TUs for the namespace. Beyond 40 TUs, Event Hubs offers the resource/capacity-based models such as Event Hubs Premium and Event Hubs Dedicated clusters. For more information, see Event Hubs Premium - overview and Event Hubs Dedicated - overview.

Dedicated clusters

What is a dedicated cluster?

Event Hubs Dedicated clusters offer single-tenant deployments for customers with most demanding requirements. This offering builds a capacity-based cluster that isn't bound by throughput units. It means that you could use the cluster to ingest and stream your data as dictated by the CPU and memory usage of the cluster. For more information, see Event Hubs Dedicated clusters.

How do I create an Event Hubs Dedicated cluster?

For step-by-step instructions and more information on setting up an Event Hubs dedicated cluster, see the Quickstart: Create a dedicated Event Hubs cluster using Azure portal.

What can I achieve with a cluster?

For an Event Hubs cluster, how much you can ingest and stream depends on factors such as your producers, consumers, and the rate at which you're ingesting and processing.

The following table shows the benchmark results that we achieved during our testing with a legacy dedicated cluster.

| Payload shape | Receivers | Ingress bandwidth | Ingress messages | Egress bandwidth | Egress messages | Total TUs | TUs per CU |

|---|---|---|---|---|---|---|---|

| Batches of 100x1KB | 2 | 400 MB/sec | 400k messages/sec | 800 MB/sec | 800k messages/sec | 400 TUs | 100 TUs |

| Batches of 10x10KB | 2 | 666 MB/sec | 66.6k messages/sec | 1.33 GB/sec | 133k messages/sec | 666 TUs | 166 TUs |

| Batches of 6x32KB | 1 | 1.05 GB/sec | 34k messages/sec | 1.05 GB/sec | 34k messages/sec | 1,000 TUs | 250 TUs |

In the testing, the following criteria were used:

- A Dedicated-tier Event Hubs cluster with four CUs was used.

- The event hub used for ingestion had 200 partitions.

- The data that was ingested was received by two receiver applications receiving from all partitions.

Can I scale up or scale down my cluster?

If you create the cluster with the Support scaling option set, you can use the self-serve experience to scale out and scale in, as needed. You can scale up to 10 CUs with self-serve scalable clusters. Self-serve scalable dedicated clusters are based on new infrastructure, so they perform better than dedicated clusters that don't support self-serve scaling. The performance of dedicated clusters depends on factors such as resource allocation, number of partitions, and storage. We recommend that you determine the required number of CUs after you test with a real workload.

Submit a support request to scale out or scale in your dedicated cluster in the following scenarios:

- You need more than 10 CUs for a self-serve scalable dedicated cluster (a cluster that was created with the Support scaling option set).

- You need to scale out or scale in a cluster that was created without selecting the Support scaling option.

- You need to scale out or scale in a dedicated cluster that was created before the self-serve experience was released.

Warning

You won't be able to delete the cluster for at least four hours after you create it. You're charged for a minimum of four hours of usage of the cluster. For more information on pricing, see Event Hubs pricing.

Can I migrate from a legacy cluster to a self-serve scalable cluster?

Because of the difference in the underlying hardware and software infrastructure, we don't currently support migration of clusters that don't support self-serve scaling to self-serve scalable dedicated clusters. If you want to use self-serve scaling, you must re-create the cluster. To learn how to create a scalable cluster, see Create an Event Hubs dedicated cluster.

When should I scale my dedicated cluster?

CPU consumption is the key indicator of the resource consumption of your dedicated cluster. When the overall CPU consumption begins to reach 70% (without observing any abnormal conditions, such as a high number of server errors or a low number of successful requests), that means your cluster is moving toward its maximum capacity. You can use this information as an indicator to consider whether you need to scale up your dedicated cluster or not.

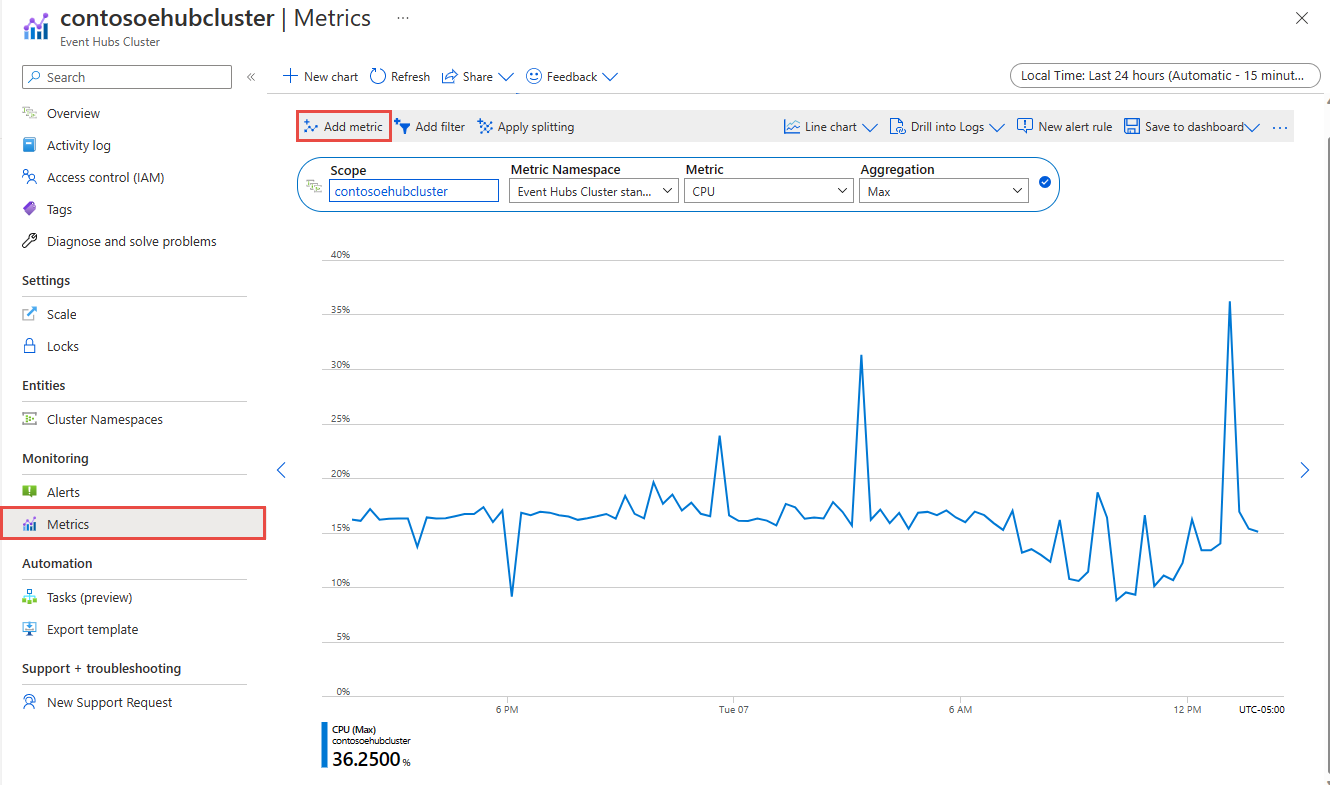

To monitor the CPU usage of the dedicated cluster, follow these steps:

On the Metrics page of your Event Hubs dedicated cluster, select Add metric.

Select CPU as the metric and use Max as the aggregation.

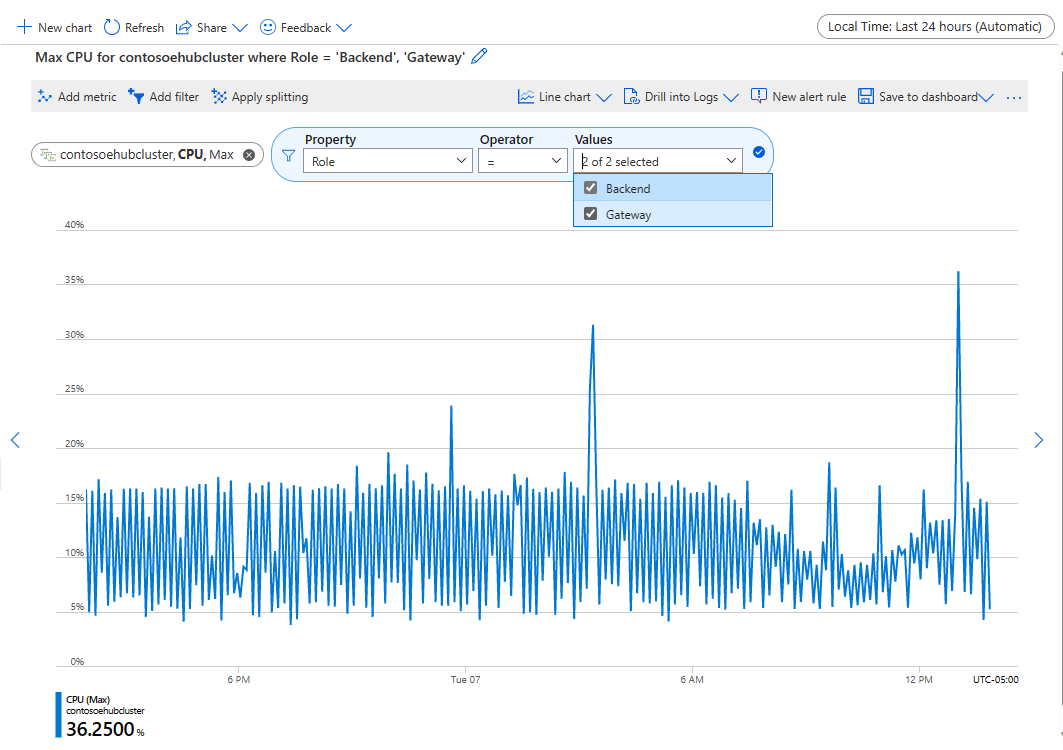

Select Add filter and add a filter for the Property type Role. Use the equal operator and select all the values (Backend and Gateway) from the dropdown list.

Then you can monitor this metric to determine when you should scale your dedicated cluster. You can also set up alerts against this metric to get notified when CPU usage reaches the thresholds you set.

How does geo-disaster recovery work with my cluster?

You can geo-pair a namespace under a Dedicated-tier cluster with another namespace under a Dedicated-tier cluster. We don't encourage pairing a Dedicated-tier namespace with a namespace in the Standard offering because the throughput limit is incompatible and results in errors.

Can I migrate my Standard or Premium namespaces to a Dedicated-tier cluster?

We don't currently support an automated migration process for migrating your Event Hubs data from a Standard or Premium namespace to a dedicated one.

Why does a legacy zone-redundant dedicated cluster have a minimum of eight CUs?

To provide zone redundancy for the Dedicated offering, all compute resources must have three replicas across three datacenters in the same region. This minimum requirement supports zone redundancy (so that the service can still function when two zones or datacenters are down) and results in a compute capacity equivalent to eight CUs.

We can't change this quota. It's a restriction of the current architecture with a Dedicated tier.

Partitions

How many partitions do I need?

A partition is a data organization mechanism that enables parallel publishing and consumption. While it supports parallel processing and scaling, total capacity remains limited by the namespace’s scaling allocation. We recommend that you balance scaling units (throughput units for the standard tier, processing units for the premium tier, or capacity units for the dedicated tier) and partitions to achieve optimal scale. In general, we recommend a maximum throughput of 1 MB/s per partition. Therefore, a rule of thumb for calculating the number of partitions would be to divide the maximum expected throughput by 1 MB/s. For example, if your use case requires 20 MB/s, we recommend that you choose at least 20 partitions to achieve the optimal throughput.

However, if you have a model in which your application has an affinity to a particular partition, increasing the number of partitions isn't beneficial. For more information, see availability and consistency.

Can partition count be increased in the Standard tier of Event Hubs?

No, it's not possible because partitions are immutable in the Standard tier. Dynamic addition of partitions is available only in premium and dedicated tiers of Event Hubs.

Pricing

Where can I find more pricing information?

For complete information about Event Hubs pricing, see the Event Hubs pricing details.

Is there a charge for retaining Event Hubs events for more than 24 hours?

The Event Hubs Standard tier does allow message retention periods longer than 24 hours, for a maximum of seven days. If the size of the total number of stored events exceeds the storage allowance for the number of selected throughput units (84 GB per throughput unit), the size that exceeds the allowance is charged at the published Azure Blob storage rate. The storage allowance in each throughput unit covers all storage costs for retention periods of 24 hours even if the throughput unit is used up to the maximum ingress allowance.

How is the Event Hubs storage size calculated and charged?

The total size of all stored events, including any internal overhead for event headers or on disk storage structures in all event hubs, is measured throughout the day. At the end of the day, the peak storage size is calculated. The daily storage allowance is calculated based on the minimum number of throughput units that were selected during the day (each throughput unit provides an allowance of 84 GB). If the total size exceeds the calculated daily storage allowance, the excess storage is billed using Azure Blob storage rates (at the Locally Redundant Storage rate).

How are ingress events calculated?

Each event sent to an event hub counts as a billable message. An ingress event is defined as a unit of data that is less than or equal to 64 KB. Any event that is less than or equal to 64 KB in size is considered to be one billable event. If the event is greater than 64 KB, the number of billable events is calculated according to the event size, in multiples of 64 KB. For example, an 8-KB event sent to the event hub is billed as one event, but a 96-KB message sent to the event hub is billed as two events.

Events consumed from an event hub, and management operations and control calls such as checkpoints, aren't counted as billable ingress events, but accrue up to the throughput unit allowance.

Do brokered connection charges apply to Event Hubs?

Connection charges apply only when the AMQP protocol is used. There are no connection charges for sending events using HTTP, regardless of the number of sending systems or devices. If you plan to use AMQP (for example, to achieve more efficient event streaming or to enable bi-directional communication in IoT command and control scenarios), see the Event Hubs pricing information page for details about how many connections are included in each service tier.

How is Event Hubs Capture billed?

Capture is enabled when any event hub in the namespace has the Capture option enabled. Event Hubs Capture is billed monthly per purchased throughput unit. As the throughput unit count is increased or decreased, Event Hubs Capture billing reflects these changes in whole hour increments. For more information about Event Hubs Capture billing, see Event Hubs pricing information.

Do I get billed for the storage account I select for Event Hubs Capture?

Capture uses a storage account you provide when enabled on an event hub. As it is your storage account, any changes for this configuration are billed to your Azure subscription.

Quotas

Are there any quotas associated with Event Hubs?

For a list of all Event Hubs quotas, see quotas.

Troubleshooting

Why am I not able to create a namespace after deleting it from another subscription?

When you delete a namespace from a subscription, wait for 4 hours before recreating it with the same name in another subscription. Otherwise, you might receive the following error message: Namespace already exists.

What are some of the exceptions generated by Event Hubs and their suggested actions?

For a list of possible Event Hubs exceptions, see Exceptions overview.

Diagnostic logs

Event Hubs supports two types of diagnostics logs - Capture error logs and operational logs - both of which are represented in json and can be turned on through the Azure portal.

Support and SLA

Technical support for Event Hubs is available through the Microsoft Q&A question page for Azure Service Bus. Billing and subscription management support is provided at no cost.

To learn more about our SLA, see the Service Level Agreements page.

Azure Stack Hub

How can I target a specific version of Azure Storage SDK when using Azure Blob Storage as a checkpoint store?

If you run this code on Azure Stack Hub, you'll experience runtime errors unless you target a specific Storage API version. That's because the Event Hubs SDK uses the latest available Azure Storage API available in Azure that might not be available on your Azure Stack Hub platform. Azure Stack Hub might support a different version of Storage Blob SDK than that are typically available on Azure. If you're using Azure Blog Storage as a checkpoint store, check the supported Azure Storage API version for your Azure Stack Hub build and target that version in your code.

For example, If you're running on Azure Stack Hub version 2005, the highest available version for the Storage service is version 2019-02-02. By default, the Event Hubs SDK client library uses the highest available version on Azure (2019-07-07 at the time of the release of the SDK). In this case, besides following steps in this section, you'll also need to add code to target the Storage service API version 2019-02-02. For an example of how to target a specific Storage API version, see the following samples for C#, Java, Python, and JavaScript/TypeScript.

For an example of how to target a specific Storage API version from your code, see the following samples on GitHub:

- .NET

- Java

- Python - Synchronous, Asynchronous

- JavaScript and TypeScript

Next steps

You can learn more about Event Hubs by visiting the following links: