Hi,

I am building an Azure ML (AML) pipeline to train models, and I want to log the evaluation metrics with `mlflow.log_metrics(metrics)`, but for some reason no metric data is logged.

My code is below:

1. Define the evaluate component

```python
from azure.ai.ml import Input, command
from azure.ai.ml.constants import AssetTypes
from azure.ai.ml.entities import Component


def get_evaluator(env_name: str, env_version: str) -> Component:
    evaluation_component = command(
        name="uci_heart_evaluate",
        version="1",
        display_name="Evaluate XGBoost classifier",
        description="Evaluates the XGBoost classifier using evaluation results",
        type="command",
        inputs={
            "evaluation_results": Input(type=AssetTypes.URI_FOLDER),
        },
        code="src/sdk/classification_conmponents/evaluate",
        command="""python evaluate.py \
            --evaluation_results ${{inputs.evaluation_results}} \
            """,
        environment=f"azureml:{env_name}:{env_version}",
    )

    return evaluation_component
```
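On the script side, the `command` above hands `evaluate.py` the `evaluation_results` input (a `URI_FOLDER`) as a plain directory path, so the script has to find the file inside that folder itself. A minimal stdlib sketch of that part, assuming (hypothetically) that the trainer writes a single CSV file into the folder; the helper names `parse_args` and `find_results_file` are illustrative, not from the original code:

```python
import argparse
from pathlib import Path


def parse_args(argv=None) -> argparse.Namespace:
    # Mirrors the --evaluation_results flag used in the component's command string.
    parser = argparse.ArgumentParser(description="Evaluate XGBoost classifier")
    parser.add_argument("--evaluation_results", type=str, required=True)
    return parser.parse_args(argv)


def find_results_file(folder: str, pattern: str = "*.csv") -> Path:
    # A URI_FOLDER input arrives as a directory, so look for the file(s) inside it.
    # The "*.csv" pattern is an assumption about what the trainer writes.
    matches = sorted(Path(folder).glob(pattern))
    if not matches:
        raise FileNotFoundError(f"No files matching {pattern!r} in {folder}")
    return matches[0]
```

Raising early when the folder is empty makes a wiring problem between the trainer and evaluator steps show up as a job failure rather than as silently missing metrics.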

2. Create the pipeline

```python
from typing import Any

from azure.ai.ml import Input
from azure.ai.ml.constants import AssetTypes
from azure.ai.ml.dsl import pipeline

# prepare_data, trainer, evaluator, scorer, transformation_model and get_dotenv
# are defined elsewhere in the project (not shown).


@pipeline()  # type: ignore[call-overload,misc]
def uci_heart_classifier_trainer_scorer(input_data: Input, score_mode: str) -> dict[str, Any]:
    """The pipeline demonstrates how to make batch inference using a model from the Heart Disease Data Set problem, where pre and post processing is required as steps. The pre and post processing steps can be components reusable from the training pipeline."""
    prepared_data = prepare_data(
        data=input_data,
        transformations=Input(type=AssetTypes.CUSTOM_MODEL, path=transformation_model.id),
    )

    trained_model = trainer(
        data=prepared_data.outputs.prepared_data,
        target_column="target",
        register_best_model=True,
        registered_model_name=get_dotenv().model_name,
        eval_size=0.3,
    )

    evaluate_metrics = evaluator(
        evaluation_results=trained_model.outputs.evaluation_results
    )

    scored_data = scorer(
        data=prepared_data.outputs.prepared_data,
        model=trained_model.outputs.model,
        score_mode=score_mode,
    )

    return {
        "scores": scored_data.outputs.scores,
        "trained_model": trained_model.outputs.model,
    }
```

3. Log metrics in the evaluate component (`evaluate.py`)

```python
import mlflow
from sklearn.metrics import accuracy_score, f1_score, precision_score, recall_score

# training_data_df is loaded from the evaluation_results input (loading code omitted)
true_labels = training_data_df["target"]
predictions = training_data_df["Labels"]

# Calculate metrics
accuracy = accuracy_score(true_labels, predictions)
precision = precision_score(true_labels, predictions, average="weighted")
recall = recall_score(true_labels, predictions, average="weighted")
f1 = f1_score(true_labels, predictions, average="weighted")

# Log metrics to the current MLflow run
metrics = {
    "accuracy": accuracy,
    "precision": precision,
    "recall": recall,
    "f1_score": f1,
}

mlflow.log_metrics(metrics)
```

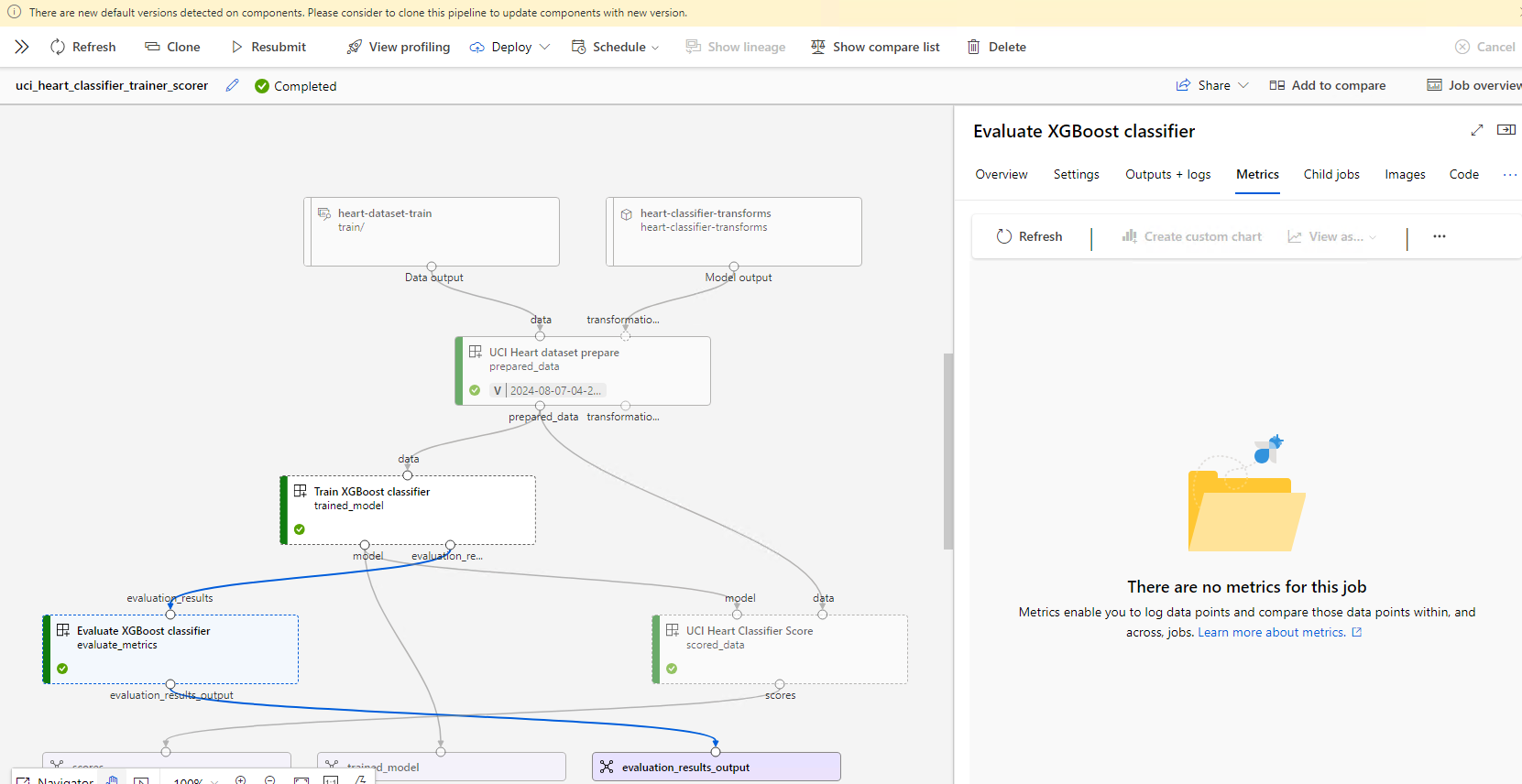

No metrics appear in Azure ML.

What could be causing this, and how can I fix it?