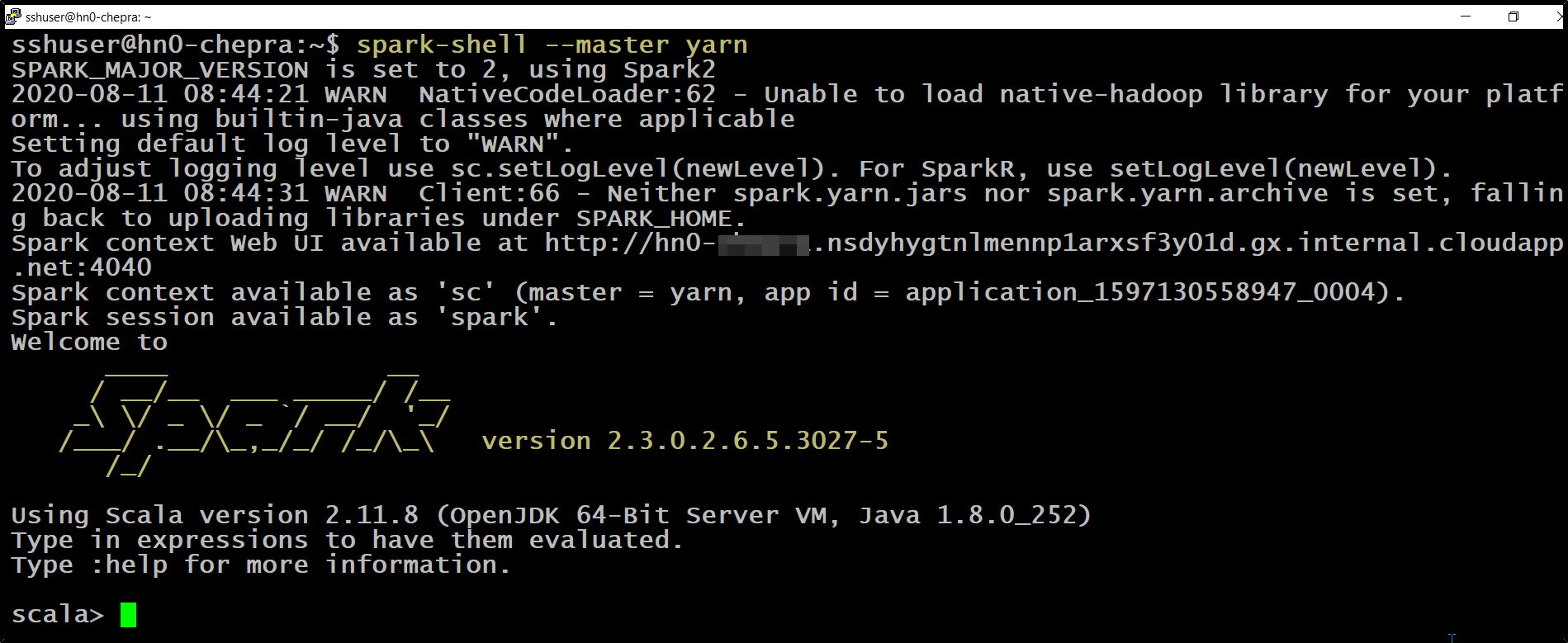

I am trying to run the query below in spark-shell on an HDInsight cluster:

val df=spark.sql("select * from hivesampletable")

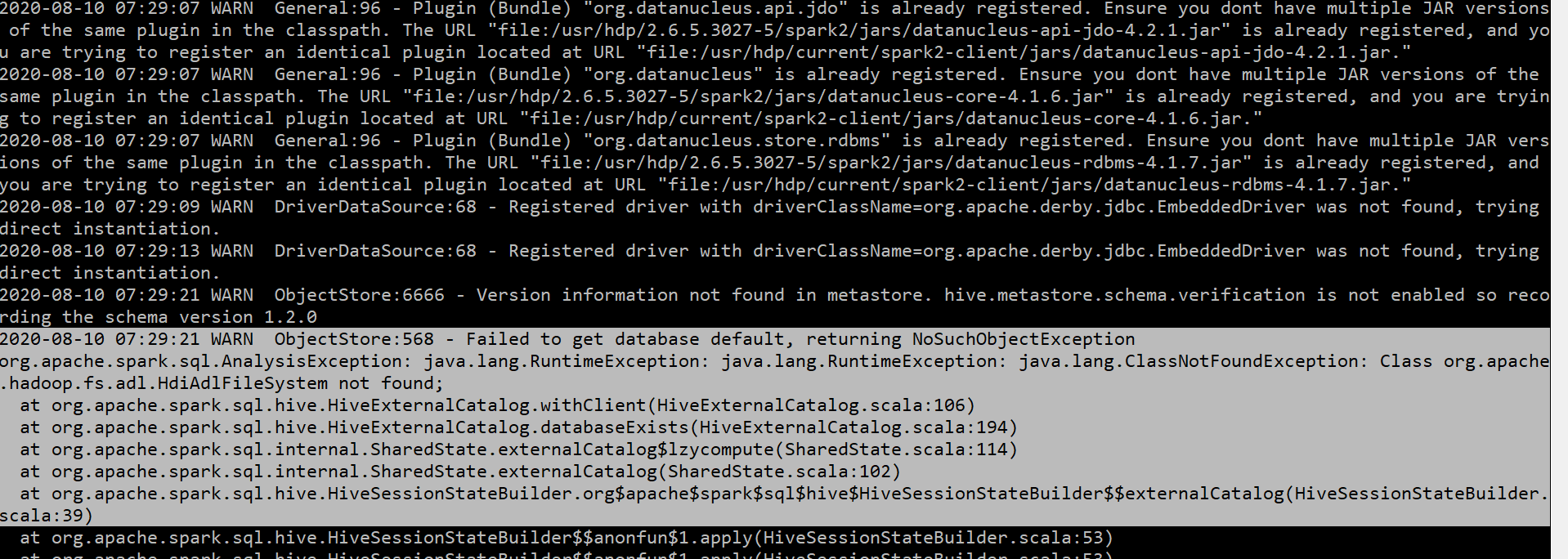

But it repeatedly fails with the error below, regardless of the query:

2020-08-10 07:29:21 WARN ObjectStore:568 - Failed to get database default, returning NoSuchObjectException

org.apache.spark.sql.AnalysisException: java.lang.RuntimeException: java.lang.RuntimeException: java.lang.ClassNotFoundException: Class org.apache.hadoop.fs.adl.HdiAdlFileSystem not found;

at org.apache.spark.sql.hive.HiveExternalCatalog.withClient(HiveExternalCatalog.scala:106)

at org.apache.spark.sql.hive.HiveExternalCatalog.databaseExists(HiveExternalCatalog.scala:194)

at org.apache.spark.sql.internal.SharedState.externalCatalog$lzycompute(SharedState.scala:114)

at org.apache.spark.sql.internal.SharedState.externalCatalog(SharedState.scala:102)

at org.apache.spark.sql.hive.HiveSessionStateBuilder.org$apache$spark$sql$hive$HiveSessionStateBuilder$$externalCatalog(HiveSessionStateBuilder.scala:39)

However, running the "hdfs dfs -ls /" command lists the directories successfully, which shows that the cluster can connect to Data Lake Storage Gen1.
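Since the storage itself is reachable, the ClassNotFoundException suggests a classpath problem on the Spark side rather than a connectivity problem. One quick check, as a minimal sketch, is to ask the spark-shell driver whether it can load the class named in the stack trace. The helper name `classAvailable` is hypothetical and only for illustration. This tests only the driver's own classpath; the Hive metastore client may load classes through a separate class loader, so a `true` result here would not rule the problem out.

```scala
import scala.util.Try

// Hypothetical helper (not part of Spark or Hadoop): returns true if the
// named class can be loaded from the current classpath, false otherwise.
def classAvailable(name: String): Boolean =
  Try(Class.forName(name)).isSuccess

// The class named in the stack trace above.
println(classAvailable("org.apache.hadoop.fs.adl.HdiAdlFileSystem"))
```

If this prints `false`, the jar that provides the class is probably missing from the classpath that spark-shell was started with.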

Please help me resolve this issue as a priority.

Thanks