Good day,

Let me be a bit harsh and start with my personal golden rule: if you are using Azure Data Warehouse and find yourself wanting incrementing integers (in the same way the IDENTITY property is used in SQL Server), then in most cases you probably do not understand the idea of big data and data warehousing. This is not necessarily true of you, but it is a golden rule that I have found usually fits. It implies an improper architecture of your data warehouse system, or using the wrong tool for the task!

It is a red flag for me when one of my clients makes this request while designing a system based on such a database (like MongoDB, Azure Data Warehouse, Cosmos DB, Azure Table storage ...).

Why? Because these are big data warehouse solutions and are NOT equivalent to a database that is stored and managed on a single machine. These solutions are meant to be hosted and managed across multiple machines and to be used by millions of users at the same time. There is a very good reason why none of these products support this feature in the same way SQL Server implements IDENTITY.

You need this ID only for analysis, not for data storage. In most cases it is unwise to use incremental integer sequences when millions of users are using a database that is sharded across multiple machines.

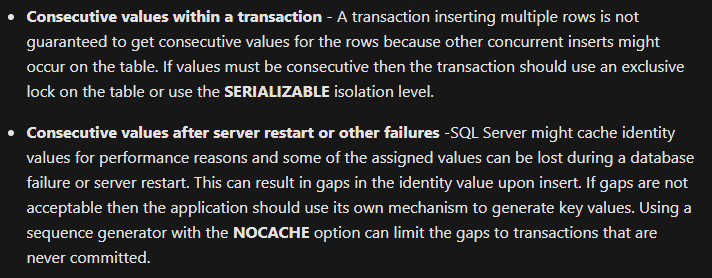

it may provide any random value

It is not fully random, but as you said, it is not guaranteed to be incrementing.

So is there any way we can achieve this?

There is always a way to "achieve" a specific request, but it is usually not free, and in this case you might pay dramatically in performance.

You should remember that your so-called database in Data Warehouse is in fact a sharded database that may be spread across multiple machines. Managing something like IDENTITY in SQL Server means that you must synchronize between all the machines.

One possible workaround is to take max(id) of the column and increment it before inserting the data.

(1) And what if two users try to INSERT a row at the same time? And what if a million users do it?!?

To implement a solution you can count on, you will have to manage locks that prevent users from reading the current max value at the same time. If user A reads the current max value and user B reads the same value, and you only lock on write, then both users have read the same max value before the first insert, and both will try to insert the same "next value". This is something that simpler databases like SQL Server do implement, but it is much easier to implement when you have a single machine and everything is managed on that single machine.
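To make the race concrete, here is a minimal single-process sketch in Python. It is only an illustration, not how Azure Data Warehouse works internally: the `Table` class and its methods are hypothetical stand-ins for a table with an integer id column. The first function replays the bad schedule by hand (both users read before either writes); the second shows that the ids stay unique only when one lock covers the read and the write together.

```python
import threading


class Table:
    """Hypothetical in-memory stand-in for a table with an integer id column."""

    def __init__(self):
        self.ids = []
        self._lock = threading.Lock()  # guards read-max + insert as one unit

    def read_max(self):
        # Equivalent in spirit to SELECT MAX(id); 0 when the table is empty.
        return max(self.ids, default=0)

    def insert(self, new_id):
        self.ids.append(new_id)

    def insert_next_locked(self):
        # The lock covers BOTH the read and the write, so no other user
        # can read the same max value in between.
        with self._lock:
            new_id = self.read_max() + 1
            self.insert(new_id)
            return new_id


def unsafe_interleaving():
    """Replay the problematic schedule: A and B both read, then both write."""
    table = Table()
    seen_by_a = table.read_max()
    seen_by_b = table.read_max()   # B reads before A has inserted
    table.insert(seen_by_a + 1)
    table.insert(seen_by_b + 1)    # same "next value" as A
    return table.ids


def locked_inserts(users=8, per_user=100):
    """Many concurrent users, each going through the locked path."""
    table = Table()

    def worker():
        for _ in range(per_user):
            table.insert_next_locked()

    threads = [threading.Thread(target=worker) for _ in range(users)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return table.ids
```

Note that the lock serializes every insert. On a single machine that is cheap; across many machines the same guarantee needs coordination between all of them, which is where the performance cost comes from.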

(2) Using the max value is not recommended in most cases. This approach is also called the "findAndModify" approach. In this case you pay for the "find", which is usually not recommended.

Another approach, which is much better, is to use COUNT + 1. To count the rows, the server does not really need to read any data from the rows! This can be implemented if you do not delete rows from your database, which by the way is something that is recommended anyway (I highly recommend NOT deleting rows in a data warehouse, usually!).

You can use a function and a counters sequence (store the value outside the record set). This way you do not need to use the "findAndModify" approach. In fact, this is a commonly recommended solution for MongoDB as well, for example (if you must have such a feature!).
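As a rough sketch of the counters idea, again in plain Python rather than any specific database API: the `CounterStore` class, the `next_value` function, the `block` parameter and the `"orders"` sequence name are all hypothetical names chosen for illustration. The point is only that the next id comes from one small counter kept apart from the data rows, so nothing has to scan the rows to find it.

```python
import threading


class CounterStore:
    """Hypothetical store with one small counter per sequence name,
    kept separate from the data rows themselves."""

    def __init__(self):
        self._values = {}
        self._lock = threading.Lock()

    def next_value(self, name, block=1):
        """Atomically reserve `block` consecutive ids and return the first one."""
        with self._lock:
            first = self._values.get(name, 0) + 1
            self._values[name] = first + block - 1
            return first


counters = CounterStore()
rows = []  # stand-in for the record set


def insert_row(payload):
    # The id comes from the counter, never from inspecting existing rows.
    new_id = counters.next_value("orders")
    rows.append({"id": new_id, **payload})
    return new_id
```

Reserving a block of ids in one call (for example `counters.next_value("orders", block=1000)`) is one possible way to reduce how often writers have to touch the shared counter, at the price of gaps in the sequence if a block is not fully used.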

This feature might be supported in the future, but I doubt it will be, and even if it is, it will probably reduce performance.