@DimitriB-1079 Welcome to the Microsoft Q&A platform.

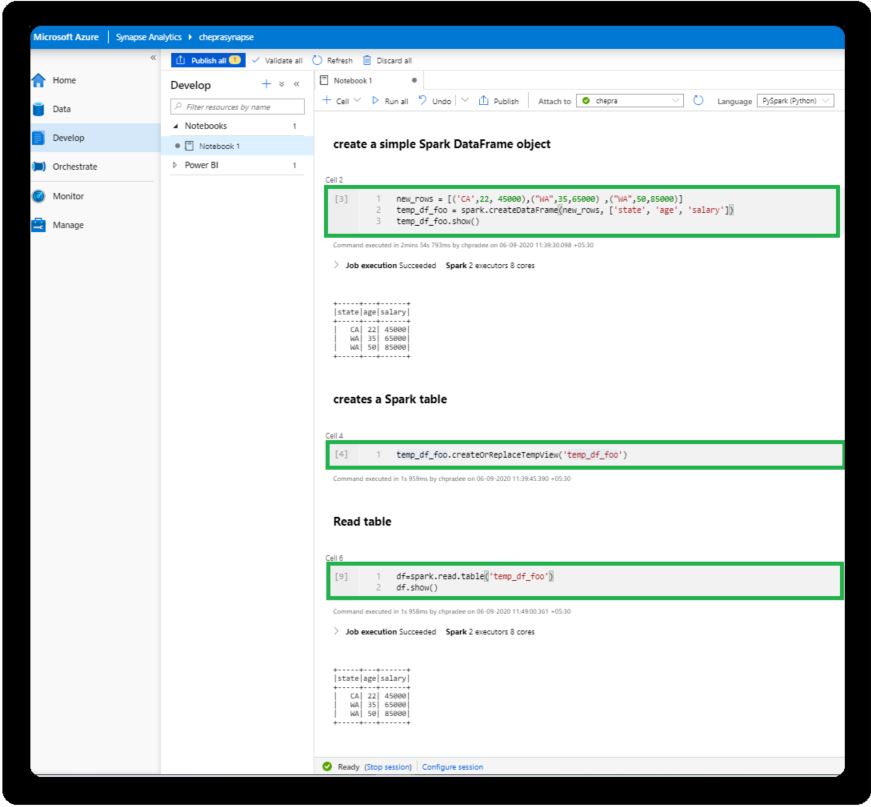

You can create an Apache Spark pool in Azure Synapse Analytics and run the same queries that you currently run in Azure Databricks.
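As a rough illustration, a query written against the Spark SQL API should not depend on which service hosts it. The sketch below assumes a notebook attached to a Spark pool (or a Databricks cluster) where the runtime already provides a `SparkSession` named `spark`; the helper `top_rows` and the table name `sales` are hypothetical and used only for the example.

```python
# Minimal sketch, not an official sample.
# Assumption: `spark` is the SparkSession that the notebook runtime predefines.

def top_rows(spark, table_name, limit=10):
    """Run a plain Spark SQL query through the session that is passed in.

    Nothing here is specific to Synapse or Databricks; it only uses
    SparkSession.sql, which is part of the standard PySpark API.
    """
    query = f"SELECT * FROM {table_name} LIMIT {int(limit)}"
    return spark.sql(query)

# In a notebook cell (hypothetical table name):
# top_rows(spark, "sales").show()
```

Code that relies on Databricks-only utilities such as `dbutils` would likely need adjusting before it runs in a Synapse Spark pool.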

Reference: Quickstart: Create an Apache Spark pool (preview) in Azure Synapse Analytics using web tools.

Hope this helps. Do let us know if you have any further queries.

Please click "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.