Document Intelligence custom models

Important

- Document Intelligence public preview releases provide early access to features that are in active development. Features, approaches, and processes may change, prior to General Availability (GA), based on user feedback.

- The public preview version of the Document Intelligence client libraries defaults to REST API version 2024-07-31-preview.

- Public preview version 2024-07-31-preview is currently only available in the following Azure regions. Note that the custom generative (document field extraction) model in AI Studio is only available in North Central US region:

- East US

- West US2

- West Europe

- North Central US

This content applies to: v4.0 (preview) | Previous versions: v3.1 (GA), v3.0 (GA), v2.1 (GA)


Document Intelligence uses advanced machine learning technology to identify documents, detect and extract information from forms and documents, and return the extracted data in a structured JSON output. With Document Intelligence, you can use document analysis models, prebuilt (pretrained) models, or your own trained standalone custom models.

Custom models now include custom classification models for scenarios where you need to identify the document type before invoking the extraction model. Classifier models are available starting with the 2023-07-31 (GA) API. A classification model can be paired with a custom extraction model to analyze and extract fields from forms and documents specific to your business. Standalone custom extraction models can be combined to create composed models.

Custom document model types

Custom document models can be one of two types: custom template (custom form) models or custom neural (custom document) models. The labeling and training process for both models is identical, but the models differ as follows:

Custom extraction models

To create a custom extraction model, label a dataset of documents with the values you want extracted and train the model on the labeled dataset. You only need five examples of the same form or document type to get started.

Custom neural model

Important

Starting with the version 4.0 (2024-02-29-preview) API, custom neural models support overlapping fields and table-, row-, and cell-level confidence.

The custom neural (custom document) model uses deep learning models and a base model trained on a large collection of documents. This model is then fine-tuned or adapted to your data when you train the model with a labeled dataset. Custom neural models support extracting key data fields from structured, semi-structured, and unstructured documents. When you're choosing between the two model types, start with a neural model to determine if it meets your functional needs. See neural models to learn more about custom document models.

Custom template model

The custom template or custom form model relies on a consistent visual template to extract the labeled data. Variances in the visual structure of your documents affect the accuracy of your model. Structured forms such as questionnaires or applications are examples of consistent visual templates.

Your training set consists of structured documents where the formatting and layout are static and constant from one document instance to the next. Custom template models support key-value pairs, selection marks, tables, signature fields, and regions. Template models can be trained on documents in any of the supported languages. For more information, see custom template models.

If the language of your documents and extraction scenarios supports custom neural models, we recommend that you use custom neural models over template models for higher accuracy.

Tip

To confirm that your training documents present a consistent visual template, remove all the user-entered data from each form in the set. If the blank forms are identical in appearance, they represent a consistent visual template.

For more information, see Interpret and improve accuracy and confidence for custom models.

Input requirements

For best results, provide one clear photo or high-quality scan per document.

Supported file formats:

| Model | PDF | Image: JPEG/JPG, PNG, BMP, TIFF, HEIF | Microsoft Office: Word (DOCX), Excel (XLSX), PowerPoint (PPTX) |
|---|---|---|---|
| Read | ✔ | ✔ | ✔ |
| Layout | ✔ | ✔ | ✔ (2024-02-29-preview, 2023-10-31-preview, and later) |
| General Document | ✔ | ✔ | |
| Prebuilt | ✔ | ✔ | |
| Custom extraction | ✔ | ✔ | |
| Custom classification | ✔ | ✔ | ✔ |

✱ Microsoft Office files are currently not supported for other models or versions.

For PDF and TIFF, up to 2,000 pages can be processed (with a free tier subscription, only the first two pages are processed).

The maximum file size for analyzing documents is 500 MB for the paid (S0) tier and 4 MB for the free (F0) tier.

Image dimensions must be between 50 x 50 pixels and 10,000 x 10,000 pixels.

If your PDFs are password-locked, you must remove the lock before submission.

The minimum height of the text to be extracted is 12 pixels for a 1024 x 768 pixel image. This dimension corresponds to about 8-point text at 150 dots per inch.

For custom model training, the maximum number of pages for training data is 500 for the custom template model and 50,000 for the custom neural model.

For custom extraction model training, the total size of training data is 50 MB for the template model and 1 GB for the neural model.

For custom classification model training, the total size of training data is 1 GB with a maximum of 10,000 pages.
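As a rough illustration of the limits listed above, the following sketch checks a file's size and optional image dimensions before submission. The function name `check_input`, the tier keys, and the choice of 1 MB = 1024 x 1024 bytes are assumptions made for this example; they are not part of the service or its SDKs.

```python
from typing import List, Optional

MB = 1024 * 1024  # assumption: binary megabytes; the service may count differently

# Limits taken from the input requirements above (S0 = paid tier, F0 = free tier).
MAX_FILE_BYTES = {"S0": 500 * MB, "F0": 4 * MB}
MIN_IMAGE_SIDE = 50
MAX_IMAGE_SIDE = 10_000


def check_input(size_bytes: int, tier: str,
                width_px: Optional[int] = None,
                height_px: Optional[int] = None) -> List[str]:
    """Return a list of problems found; an empty list means no limit was exceeded."""
    if tier not in MAX_FILE_BYTES:
        raise ValueError(f"unknown tier: {tier!r}")

    problems = []
    if size_bytes > MAX_FILE_BYTES[tier]:
        limit_mb = MAX_FILE_BYTES[tier] // MB
        problems.append(f"file exceeds the {limit_mb} MB limit for tier {tier}")

    # Dimensions are only checked when both are supplied (that is, for images).
    if width_px is not None and height_px is not None:
        if not all(MIN_IMAGE_SIDE <= side <= MAX_IMAGE_SIDE
                   for side in (width_px, height_px)):
            problems.append(
                f"image is {width_px} x {height_px} px; each side must be "
                f"between {MIN_IMAGE_SIDE} and {MAX_IMAGE_SIDE} px"
            )
    return problems
```

For example, `check_input(5 * MB, "F0")` reports one problem, while the same file on the `S0` tier reports none.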

Optimal training data

Training input data is the foundation of any machine learning model. It determines the quality, accuracy, and performance of the model. Therefore, it's crucial to create the best training input data possible for your Document Intelligence project. When you use the Document Intelligence custom model, you provide your own training data. Here are a few tips to help train your models effectively:

Use text-based instead of image-based PDFs when possible. One way to identify an image-based PDF is to try selecting specific text in the document. If you can select only the entire image of the text, the document is image based, not text based.

Organize your training documents by using a subfolder for each format (JPEG/JPG, PNG, BMP, PDF, or TIFF).

Use forms that have all of the available fields completed.

Use forms with differing values in each field.

Use a larger dataset (more than five training documents) if your images are low quality.

Determine if you need to use a single model or multiple models composed into a single model.

Consider segmenting your dataset into folders, where each folder is a unique template. Train one model per folder, and compose the resulting models into a single endpoint. Model accuracy can decrease when you have different formats analyzed with a single model.

Consider segmenting your dataset to train multiple models if your form has variations with formats and page breaks. Custom forms rely on a consistent visual template.

Ensure that you have a balanced dataset by accounting for formats, document types, and structure.
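The tip about using a subfolder for each format could be automated along these lines. This is a minimal sketch: the folder names, the extension mapping, and the function `organize_by_format` are hypothetical choices for this example, and files are copied rather than moved so the originals stay untouched.

```python
import shutil
from pathlib import Path
from typing import Dict

# Hypothetical mapping from file extension to a per-format subfolder name.
FORMAT_FOLDERS = {
    ".jpg": "JPEG", ".jpeg": "JPEG",
    ".png": "PNG",
    ".bmp": "BMP",
    ".pdf": "PDF",
    ".tif": "TIFF", ".tiff": "TIFF",
}


def organize_by_format(source_dir: str, target_dir: str) -> Dict[str, int]:
    """Copy supported files into one subfolder per format and count them."""
    counts: Dict[str, int] = {}
    for path in sorted(Path(source_dir).iterdir()):
        if not path.is_file():
            continue
        folder = FORMAT_FOLDERS.get(path.suffix.lower())
        if folder is None:
            continue  # skip files in formats not listed above
        destination = Path(target_dir) / folder
        destination.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, destination / path.name)
        counts[folder] = counts.get(folder, 0) + 1
    return counts
```

The returned counts give a quick view of how balanced the dataset is across formats.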

Build mode

The build custom model operation adds support for the template and neural custom models. Previous versions of the REST API and client libraries only supported a single build mode that is now known as the template mode.

Template models only accept documents that have the same basic page structure—a uniform visual appearance—or the same relative positioning of elements within the document.

Neural models support documents that have the same information, but different page structures. Examples of these documents include United States W2 forms, which share the same information, but vary in appearance across companies.
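To make the two build modes concrete, here is a hedged sketch that prepares, but does not send, a build request against the v3.1 REST API. The URL path, the `buildMode` and `azureBlobSource` body fields, and the `Ocp-Apim-Subscription-Key` header reflect our understanding of the 2023-07-31 API; verify them against the REST reference before relying on them. The function name and all argument values are placeholders.

```python
import json
import urllib.request

API_VERSION = "2023-07-31"  # v3.1 (GA); adjust for other versions
VALID_BUILD_MODES = ("template", "neural")


def make_build_request(endpoint: str, key: str, model_id: str,
                       build_mode: str, container_url: str) -> urllib.request.Request:
    """Prepare a build-model request object without sending it."""
    if build_mode not in VALID_BUILD_MODES:
        raise ValueError(f"build_mode must be one of {VALID_BUILD_MODES}")

    url = (f"{endpoint.rstrip('/')}/formrecognizer/documentModels:build"
           f"?api-version={API_VERSION}")
    body = {
        "modelId": model_id,
        "buildMode": build_mode,
        # Assumed shape: the labeled training data lives in a blob container.
        "azureBlobSource": {"containerUrl": container_url},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Ocp-Apim-Subscription-Key": key,
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would submit the request. Building is a long-running operation, so a real client would also need to poll for completion, which this sketch leaves out.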

This table provides links to the build mode programming language SDK references and code samples on GitHub:

| Programming language | SDK reference | Code sample |

|---|---|---|

| C#/.NET | DocumentBuildMode Struct | Sample_BuildCustomModelAsync.cs |

| Java | DocumentBuildMode Class | BuildModel.java |

| JavaScript | DocumentBuildMode type | buildModel.js |

| Python | DocumentBuildMode Enum | sample_build_model.py |

Compare model features

The following table compares custom template and custom neural features:

| Feature | Custom template (form) | Custom neural (document) |

|---|---|---|

| Document structure | Template, form, and structured | Structured, semi-structured, and unstructured |

| Training time | 1 to 5 minutes | 20 minutes to 1 hour |

| Data extraction | Key-value pairs, tables, selection marks, coordinates, and signatures | Key-value pairs, selection marks, and tables |

| Overlapping fields | Not supported | Supported |

| Document variations | Requires a model per each variation | Uses a single model for all variations |

| Language support | Language support custom template | Language support custom neural |

Custom classification model

Document classification is a new scenario supported by Document Intelligence with the 2023-07-31 (v3.1 GA) API. The document classifier API supports classification and splitting scenarios. Train a classification model to identify the different types of documents your application supports. The input file for the classification model can contain multiple documents and classifies each document within an associated page range. To learn more, see custom classification models.

Note

Starting with the 2024-02-29-preview API version, document classification supports Office document types. This API version also introduces incremental training for the classification model.
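The page-range behavior of the classifier can be illustrated with a short sketch that summarizes a classification result. It assumes the result is a dictionary shaped like the REST `analyzeResult` object, with a `documents` list whose entries carry `docType`, `confidence`, and `boundingRegions` with a `pageNumber`. Treat those field names as assumptions to check against the response you actually receive; the sample document types are invented.

```python
from typing import List, Tuple


def summarize_classification(analyze_result: dict) -> List[Tuple[str, int, int, float]]:
    """Return (doc_type, first_page, last_page, confidence) for each document found."""
    summary = []
    for doc in analyze_result.get("documents", []):
        pages = [region["pageNumber"] for region in doc.get("boundingRegions", [])]
        if not pages:
            continue  # no page information to report for this entry
        summary.append(
            (doc["docType"], min(pages), max(pages), doc.get("confidence", 0.0))
        )
    # Order by first page so the output follows the input file.
    return sorted(summary, key=lambda item: item[1])


# Hypothetical result for a three-page file that contains two documents.
sample = {
    "documents": [
        {"docType": "invoice", "confidence": 0.97,
         "boundingRegions": [{"pageNumber": 2}, {"pageNumber": 3}]},
        {"docType": "cover-letter", "confidence": 0.88,
         "boundingRegions": [{"pageNumber": 1}]},
    ]
}
for doc_type, first, last, confidence in summarize_classification(sample):
    print(f"{doc_type}: pages {first}-{last} (confidence {confidence:.2f})")
```

Each tuple can then be used to route the matching page range to the extraction model trained for that document type.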

Custom model tools

Document Intelligence v3.1 and later models support the following tools, applications, and libraries:

| Feature | Resources | Model ID |

|---|---|---|

| Custom model | • Document Intelligence Studio • REST API • C# SDK • Python SDK | custom-model-id |

Custom model life cycle

The life cycle of a custom model depends on the API version that is used to train it. If the API version is a general availability (GA) version, the custom model has the same life cycle as that version. The custom model isn't available for inference when the API version is deprecated. If the API version is a preview version, the custom model has the same life cycle as the preview version of the API.
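One way to act on the life cycle described above is to check a model's reported expiry before sending documents for analysis. The sketch below assumes the model details returned by the service include an `expirationDateTime` field holding an ISO 8601 UTC timestamp. That field name and format are assumptions to confirm against the API version you use, and `is_model_expired` is a hypothetical helper.

```python
from datetime import datetime, timezone
from typing import Optional


def is_model_expired(model_info: dict, now: Optional[datetime] = None) -> bool:
    """Return True when the model's reported expiry time has passed."""
    expires = model_info.get("expirationDateTime")
    if expires is None:
        return False  # no expiry reported; treat the model as still usable

    # datetime.fromisoformat on older Python versions rejects a trailing "Z".
    expiry = datetime.fromisoformat(expires.replace("Z", "+00:00"))
    current = now if now is not None else datetime.now(timezone.utc)
    return current >= expiry
```

A scheduled job could run this check across all custom models and flag the ones that need retraining with a newer API version.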

Document Intelligence v2.1 supports the following tools, applications, and libraries:

Note

The custom neural and custom template model types are available with the Document Intelligence v3.1 and v3.0 APIs.

| Feature | Resources |

|---|---|

| Custom model | • Document Intelligence labeling tool • REST API • Client library SDK • Document Intelligence Docker container |

Build a custom model

Extract data from your specific or unique documents using custom models. You need the following resources:

An Azure subscription. You can create one for free.

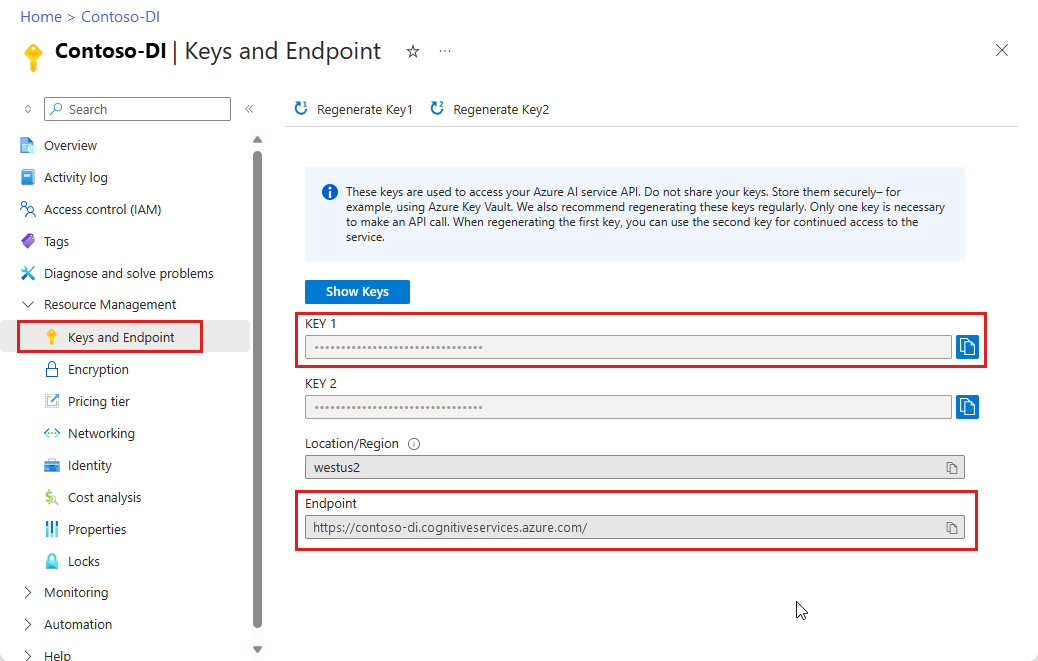

A Document Intelligence instance in the Azure portal. You can use the free pricing tier (F0) to try the service. After your resource deploys, select Go to resource to get your key and endpoint.

Sample Labeling tool

Tip

- For an enhanced experience and advanced model quality, try the Document Intelligence v3.0 Studio.

- The v3.0 Studio supports any model trained with v2.1 labeled data.

- You can refer to the API migration guide for detailed information about migrating from v2.1 to v3.0.

- See our REST API or C#, Java, JavaScript, or Python SDK quickstarts to get started with the v3.0 version.

The Document Intelligence Sample Labeling tool is an open-source tool that enables you to test the latest Document Intelligence and Optical Character Recognition (OCR) features.

Try the Sample Labeling tool quickstart to get started building and using a custom model.

Document Intelligence Studio

Note

Document Intelligence Studio is available with v3.1 and v3.0 APIs.

On the Document Intelligence Studio home page, select Custom extraction models.

Under My Projects, select Create a project.

Complete the project details fields.

Configure the service resource by adding your Storage account and Blob container to Connect your training data source.

Review and create your project.

Add your sample documents to label, build, and test your custom model.

For a detailed walkthrough to create your first custom extraction model, see How to create a custom extraction model.

Custom model extraction summary

This table compares the supported data extraction areas:

| Model | Form fields | Selection marks | Structured fields (Tables) | Signature | Region labeling | Overlapping fields |

|---|---|---|---|---|---|---|

| Custom template | ✔ | ✔ | ✔ | ✔ | ✔ | n/a |

| Custom neural | ✔ | ✔ | ✔ | n/a | * | ✔ (2024-02-29-preview) |

Table symbols:

✔: Supported

n/a: Currently unavailable

*: Behaves differently depending on the model. With template models, synthetic data is generated at training time. With neural models, existing text recognized in the region is selected.
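The extraction summary table can also be expressed as a small lookup that narrows the model choice from the capabilities a scenario requires. This is only a sketch of the table above: the capability names and the function `models_supporting` are invented for the example, region labeling is listed for both models even though it behaves differently in each, and overlapping-field support for neural models depends on the 2024-02-29-preview or later API.

```python
from typing import List, Set

# Capabilities per model type, transcribed from the summary table.
CAPABILITIES = {
    "custom template": {
        "form fields", "selection marks", "tables", "signature", "region labeling",
    },
    "custom neural": {
        "form fields", "selection marks", "tables", "region labeling",
        "overlapping fields",
    },
}


def models_supporting(required: Set[str]) -> List[str]:
    """Return the model types whose capabilities cover every required item."""
    known = set().union(*CAPABILITIES.values())
    unknown = required - known
    if unknown:
        raise ValueError(f"unknown capabilities: {sorted(unknown)}")
    return [model for model, caps in CAPABILITIES.items() if required <= caps]
```

For instance, requiring `"signature"` leaves only the custom template model, while requiring `"overlapping fields"` leaves only the custom neural model.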

Tip

When choosing between the two model types, start with a custom neural model if it meets your functional needs. See custom neural to learn more about custom neural models.

Custom model development options

The following table describes the features available with the associated tools and client libraries. As a best practice, ensure that you use the compatible tools listed here.

| Document type | REST API | SDK | Label and Test Models |

|---|---|---|---|

| Custom template v4.0 v3.1 v3.0 | Document Intelligence 3.1 | Document Intelligence SDK | Document Intelligence Studio |

| Custom neural v4.0 v3.1 v3.0 | Document Intelligence 3.1 | Document Intelligence SDK | Document Intelligence Studio |

| Custom form v2.1 | Document Intelligence 2.1 GA API | Document Intelligence SDK | Sample labeling tool |

Note

Custom template models trained with the 3.0 API will have a few improvements over the 2.1 API stemming from improvements to the OCR engine. Datasets used to train a custom template model using the 2.1 API can still be used to train a new model using the 3.0 API.

For best results, provide one clear photo or high-quality scan per document.

Supported file formats are JPEG/JPG, PNG, BMP, TIFF, and PDF (text-embedded or scanned). Text-embedded PDFs are best to eliminate the possibility of error in character extraction and location.

For PDF and TIFF files, up to 2,000 pages can be processed. With a free tier subscription, only the first two pages are processed.

The file size must be less than 500 MB for paid (S0) tier and 4 MB for free (F0) tier.

Image dimensions must be between 50 x 50 pixels and 10,000 x 10,000 pixels.

PDF dimensions are up to 17 x 17 inches, corresponding to Legal or A3 paper size, or smaller.

The total size of the training data is 500 pages or less.

If your PDFs are password-locked, you must remove the lock before submission.

Tip

Training data:

- If possible, use text-based PDF documents instead of image-based documents. Scanned PDFs are handled as images.

- Please supply only a single instance of the form per document.

- For filled-in forms, use examples that have all their fields filled in.

- Use forms with different values in each field.

- If your form images are of lower quality, use a larger dataset. For example, use 10 to 15 images.

Supported languages and locales

See our Language Support—custom models page for a complete list of supported languages.

Next steps

Try processing your own forms and documents with the Document Intelligence Sample Labeling tool.

Complete a Document Intelligence quickstart and get started creating a document processing app in the development language of your choice.

Try processing your own forms and documents with the Document Intelligence Studio.
