The indexing configuration guide

Important

Due to the Azure Media Services (AMS) retirement announcement, Azure AI Video Indexer is adjusting some of its features. See Changes related to Azure Media Service (AMS) retirement to understand what this means for your Azure AI Video Indexer account, and see the Preparing for AMS retirement: VI update and migration guide.

Understanding the configuration options helps you index efficiently while still meeting your indexing objectives. When indexing videos, you can use the default settings or adjust many of them. Azure AI Video Indexer lets you choose among a range of language, indexing, custom model, and streaming settings that affect the insights generated, cost, and performance.

This article explains each option and its impact so that you can make informed decisions when indexing. The article discusses the Azure AI Video Indexer website experience, but the same options apply when submitting jobs through the API (see the API guide). When indexing large volumes, follow the at-scale guide.

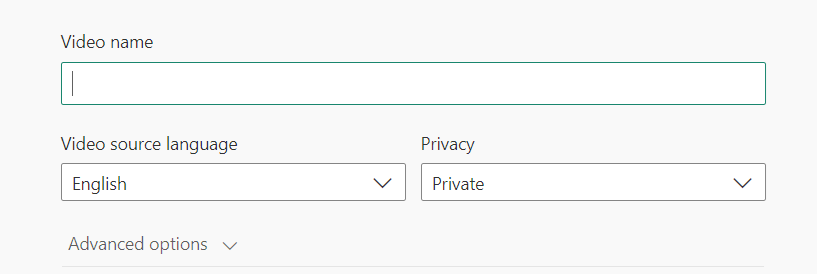

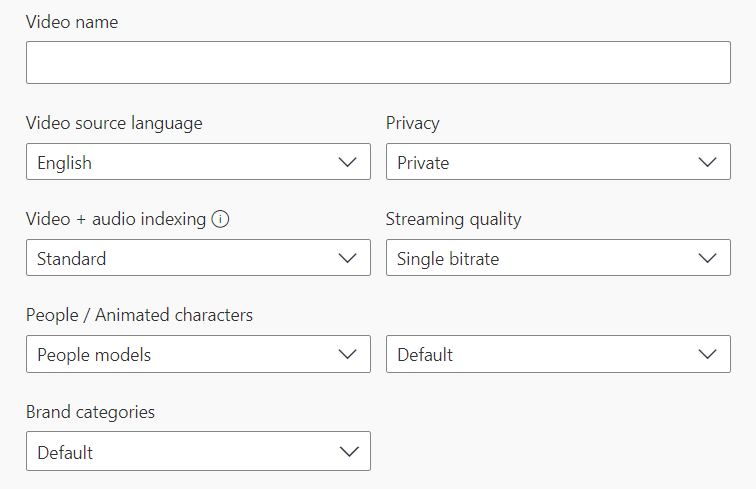

The initial upload screen presents options to define the video name, source language, and privacy settings.

All the other setting options appear if you select Advanced options.

Default settings

By default, Azure AI Video Indexer is configured to a Video source language of English, Privacy of private, Standard audio and video setting, and Streaming quality of single bitrate.

Tip

This topic describes each indexing option in detail.

Below are a few examples of when using the default setting might not be a good fit:

- If you need the observed people or matched person insights, which are only available through Advanced Video.

- If you're only using Azure AI Video Indexer for transcription and translation, indexing both audio and video isn't required; Basic for audio should suffice.

- If you're consuming Azure AI Video Indexer insights but have no need to generate a new media file, streaming isn't necessary and No streaming should be selected to avoid the encoding job and its associated cost.

- If a video is primarily in a language that isn't English.
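The cases above amount to overriding one or more defaults when a job is submitted. The following is a minimal sketch of that idea, not the official client. The function name `build_upload_params`, the key names, and string values such as `BasicAudio` and `NoStreaming` are assumptions made for illustration; check the API guide for the exact names the API accepts.

```python
from urllib.parse import urlencode

# Defaults described in this article. The key names and values are
# illustrative assumptions, not confirmed API identifiers.
DEFAULTS = {
    "language": "en-US",
    "privacy": "Private",
    "indexingPreset": "Default",
    "streamingPreset": "SingleBitrate",
}


def build_upload_params(name, **overrides):
    """Return a query string for a hypothetical upload request."""
    unknown = set(overrides) - set(DEFAULTS)
    if unknown:
        raise ValueError(f"Unknown settings: {sorted(unknown)}")
    # Start from the defaults and apply only the requested overrides.
    params = {"name": name, **DEFAULTS, **overrides}
    return urlencode(params)


# Transcription-only job: Basic audio indexing and no encoding job.
query = build_upload_params(
    "town-hall.mp4",
    indexingPreset="BasicAudio",
    streamingPreset="NoStreaming",
)
print(query)
```

Rejecting unknown keys keeps a misspelled setting from silently falling back to its default, which would otherwise produce a job with unintended cost or insights.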

Video source language

If you know the language spoken in the video, select it from the video source language list. If you're unsure of the language of the video, choose Auto-detect single language. When uploading and indexing your video, Azure AI Video Indexer uses language identification (LID) to detect the video's language and generates transcription and insights in the detected language.

If the video may contain multiple languages and you aren't sure which ones, select Auto-detect multi-language. In this case, multi-language identification (MLID) is applied when uploading and indexing your video.

While auto-detect is a great option when the language in your videos varies, there are two points to consider when using LID or MLID:

- LID/MLID don't support all the languages supported by Azure AI Video Indexer.

- The transcription is of a higher quality when you pre-select the video’s appropriate language.

Learn more about language support and supported languages.
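The selection logic described in this section can be summarized in a few lines. This is a sketch only: `choose_source_language` is a hypothetical helper, and it returns the option labels shown on the website rather than API values.

```python
def choose_source_language(known_language=None, may_be_multilingual=False):
    """Pick a video source language option.

    An explicitly known language always wins, because transcription
    quality is higher when the language is pre-selected.
    """
    if known_language:
        return known_language
    if may_be_multilingual:
        return "Auto-detect multi-language"
    return "Auto-detect single language"


print(choose_source_language("Spanish"))
print(choose_source_language(may_be_multilingual=True))
print(choose_source_language())
```

Because LID and MLID don't cover every supported language, a caller would still need to confirm that the expected languages are supported before relying on either auto-detect option.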

Privacy

This option allows you to determine if the insights should only be accessible to users in your Azure AI Video Indexer account or to anyone with a link.

Indexing options

When indexing a video with the default settings, be aware that each of the audio and video indexing options may be priced differently. See Azure AI Video Indexer pricing for details.

Below are the indexing type options, with details of the insights each provides. To modify the indexing type, select Advanced settings.

| Audio only | Video only | Audio & Video |
|---|---|---|
| Basic | Basic | Basic |
| Standard | Standard | Standard |
| Advanced | Advanced | Advanced |

Advanced settings

Audio only

- Basic: Indexes and extracts insights by using audio only (ignoring video) and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions).

- Standard: Indexes and extracts insights by using audio only (ignoring video) and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions), automatic language detection, emotions, keywords, named entities (brands, locations, people), sentiments, speakers, topic extraction, and textual content moderation.

- Advanced: Indexes and extracts insights by using audio only (ignoring video) and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions), automatic language detection, audio event detection, emotions, keywords, named entities (brands, locations, people), sentiments, speakers, topic extraction, and textual content moderation.

Video only

- Basic: Indexes and extracts insights by using video only (ignoring audio) and provides the following insights: labels, object detection, OCR, scenes (keyframes and shots), and black frame detection.

- Standard: Indexes and extracts insights by using video only (ignoring audio) and provides the following insights: labels (OCR), named entities (OCR - brands, locations, people), OCR, people, scenes (keyframes and shots), black frames, visual content moderation, and topic extraction (OCR).

- Advanced: Indexes and extracts insights by using video only (ignoring audio) and provides the following insights: labels (OCR), matched person (preview), named entities (OCR - brands, locations, people), OCR, observed people (preview), people, scenes (keyframes and shots), clapperboard detection, digital pattern detection, featured clothing insight, textless slate detection, textual logo detection, black frames, visual content moderation, and topic extraction (OCR).

Audio and Video

- Basic: Indexes and extracts insights by using audio and video and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions), object detection, OCR, scenes (keyframes and shots), and black frames.

- Standard: Indexes and extracts insights by using audio and video and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions), automatic language detection, emotions, keywords, named entities (brands, locations, people), OCR, scenes (keyframes and shots), black frames, visual content moderation, people, sentiments, speakers, topic extraction, and textual content moderation.

- Advanced: Indexes and extracts insights by using audio and video and provides the following insights: transcription, translation, formatting of output captions and subtitles (closed captions), automatic language detection, textual content moderation, audio event detection, emotions, keywords, matched person, named entities (brands, locations, people), OCR, observed people (preview), people, clapperboard detection, digital pattern detection, featured clothing insight, textless slate detection, sentiments, speakers, scenes (keyframes and shots), textual logo detection, black frames, visual content moderation, and topic extraction.
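Because each tier may be priced differently, it can help to pick the lowest tier that still covers the insights you need. The sketch below does this for the audio-only tiers, using the insight lists from this article. The helper `lowest_audio_tier` and the shortened insight labels are hypothetical and exist only for illustration; the same approach could be extended to the video-only and audio-and-video tiers.

```python
# Audio-only insights per tier, taken from the lists in this article.
BASIC = {"transcription", "translation", "captions and subtitles"}
STANDARD = BASIC | {
    "automatic language detection", "emotions", "keywords",
    "named entities", "sentiments", "speakers",
    "topic extraction", "textual content moderation",
}
ADVANCED = STANDARD | {"audio event detection"}

# Ordered from the smallest insight set to the largest.
AUDIO_ONLY_TIERS = [
    ("Basic", BASIC),
    ("Standard", STANDARD),
    ("Advanced", ADVANCED),
]


def lowest_audio_tier(required):
    """Return the first audio-only tier that provides every required insight."""
    required = set(required)
    for tier, insights in AUDIO_ONLY_TIERS:
        if required <= insights:
            return tier
    missing = sorted(required - ADVANCED)
    raise ValueError(f"Not available with audio-only indexing: {missing}")


print(lowest_audio_tier({"transcription", "translation"}))
print(lowest_audio_tier({"transcription", "keywords"}))
print(lowest_audio_tier({"speakers", "audio event detection"}))
```

Requesting an insight that no audio-only tier provides, such as OCR, raises a `ValueError`, which signals that a video or audio-and-video option is needed instead.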

Streaming quality options

There are two options for streaming indexed videos:

- Single bitrate: If the video height is at least 720 pixels (720p HD), Azure AI Video Indexer encodes it with a resolution of 1280 x 720. Otherwise, it's encoded as 640 x 468.

- No streaming: Insights are generated but no streaming operation is performed and the video isn't available on the Azure AI Video Indexer website. When No streaming is selected, you aren't billed for encoding.
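The single bitrate rule can be written as a small function. This is only an illustration of the rule as stated above; `single_bitrate_resolution` is a hypothetical helper, not part of any Azure AI Video Indexer SDK.

```python
def single_bitrate_resolution(source_height):
    """Return the (width, height) used for single bitrate encoding.

    Sources that are 720 pixels high or more are encoded at 1280 x 720;
    anything smaller is encoded at 640 x 468, as stated in this article.
    """
    if source_height >= 720:
        return (1280, 720)
    return (640, 468)


print(single_bitrate_resolution(1080))
print(single_bitrate_resolution(480))
```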

Customizing content models

Azure AI Video Indexer lets you customize some of its models to fit your specific use case. These models include brands, language, and person. If you have customized models, this section lets you choose whether one of them should be used for indexing.
