Using Azure Data Lake Storage Gen1 for big data requirements

Note

Azure Data Lake Storage Gen1 is now retired. See the retirement announcement here. Data Lake Storage Gen1 resources are no longer accessible.

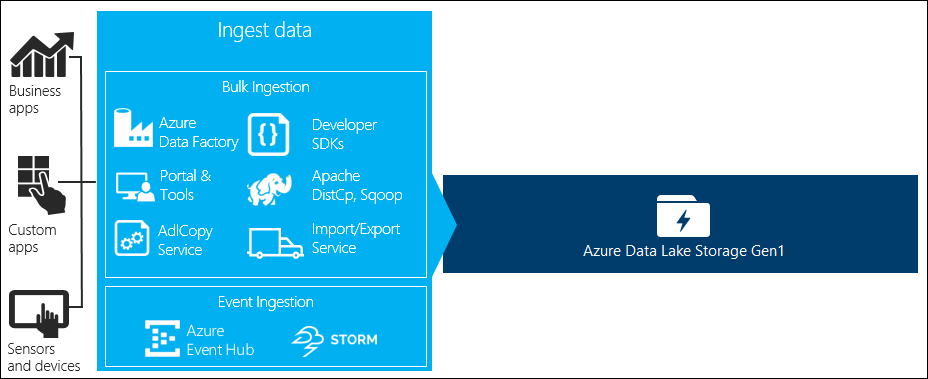

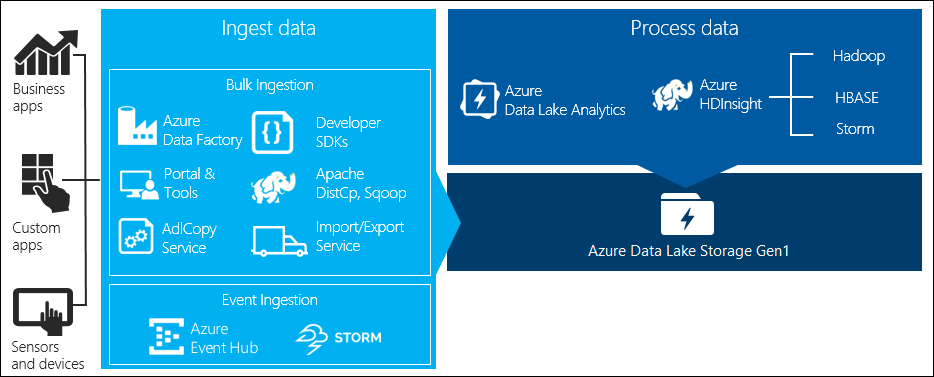

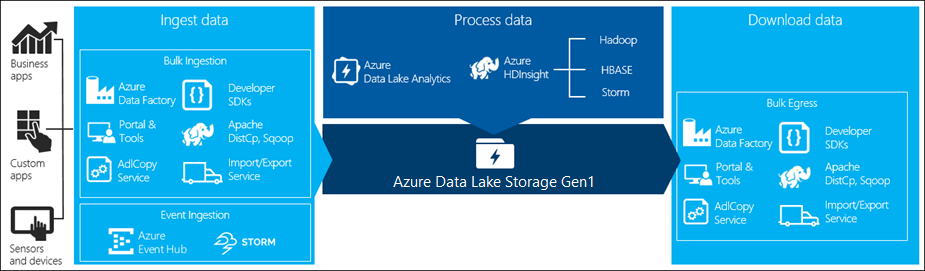

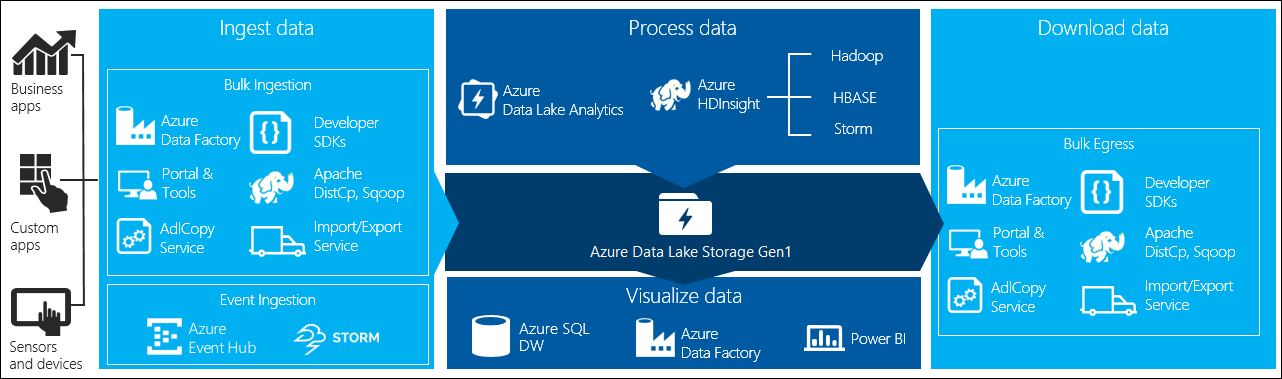

There are four key stages in big data processing:

- Ingesting large amounts of data into a data store, in real time or in batches

- Processing the data

- Downloading the data

- Visualizing the data

In this article, we look at these stages with respect to Azure Data Lake Storage Gen1 to understand the options and tools available to meet your big data needs.

Ingest data into Data Lake Storage Gen1

This section highlights the different sources of data and the different ways in which that data can be ingested into a Data Lake Storage Gen1 account.

Ad hoc data

Ad hoc data refers to smaller data sets that are used for prototyping a big data application. How you ingest ad hoc data depends on where it comes from.

| Data source | Ingest it using |
|---|---|
| Local computer | |
| Azure Storage Blob | |

Streamed data

Streamed data is generated by sources such as applications, devices, and sensors. A variety of tools can ingest this data into Data Lake Storage Gen1. These tools usually capture and process the data event by event in real time, and then write the events in batches into Data Lake Storage Gen1 for further processing.

You can use the following tools:

- Azure Stream Analytics - Events ingested into Event Hubs can be written to Azure Data Lake Storage Gen1 using an Azure Data Lake Storage Gen1 output.

- EventProcessorHost - You can receive events from Event Hubs and then write them to Data Lake Storage Gen1 by using the Data Lake Storage Gen1 .NET SDK.
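The capture-then-batch pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not how Stream Analytics or EventProcessorHost are implemented: `BatchingWriter` and its `sink` callable are hypothetical names, and in a real pipeline the sink would perform the actual write to Data Lake Storage Gen1.

```python
from typing import Callable, List


class BatchingWriter:
    """Buffers events one at a time and hands them to a sink in batches.

    The sink is a hypothetical stand-in for a real write, for example an
    append to a file in Data Lake Storage Gen1 through an SDK.
    """

    def __init__(self, sink: Callable[[List[str]], None], batch_size: int = 100):
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        self._sink = sink
        self._batch_size = batch_size
        self._buffer: List[str] = []

    def add(self, event: str) -> None:
        # Capture each event individually as it arrives.
        self._buffer.append(event)
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        # Write whatever has accumulated as a single batch, then start a new buffer.
        if self._buffer:
            self._sink(self._buffer)
            self._buffer = []


if __name__ == "__main__":
    batches: List[List[str]] = []
    writer = BatchingWriter(batches.append, batch_size=3)
    for i in range(7):
        writer.add(f"event-{i}")
    writer.flush()  # write the final, partial batch
    print([len(b) for b in batches])  # [3, 3, 1]
```

Flushing on a size threshold keeps the number of write calls low; a production writer would typically also flush on a timer so that events from a slow stream are not held in memory indefinitely.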

Relational data

You can also source data from relational databases. Over time, relational databases collect huge amounts of data that can provide key insights if processed through a big data pipeline. You can use the following tools to move such data into Data Lake Storage Gen1.
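Whichever tool you use, moving relational data usually starts with reading rows out of the database in manageable pieces. The following sketch assumes Python's built-in `sqlite3` module as a stand-in for your relational source and writes each chunk of rows as CSV text. `export_table_to_csv_chunks` is a hypothetical helper, and uploading the resulting files to Data Lake Storage Gen1 is left to whichever tool or SDK you choose.

```python
import csv
import io
import sqlite3
from typing import List


def export_table_to_csv_chunks(conn: sqlite3.Connection, table: str,
                               rows_per_file: int = 1000) -> List[str]:
    """Export a table as CSV strings, each holding at most rows_per_file rows."""
    # Table names cannot be bound as query parameters; only pass trusted names.
    cursor = conn.execute(f'SELECT * FROM "{table}"')
    header = [column[0] for column in cursor.description]
    chunks: List[str] = []
    while True:
        rows = cursor.fetchmany(rows_per_file)
        if not rows:
            break
        buffer = io.StringIO()
        writer = csv.writer(buffer)
        writer.writerow(header)  # repeat the header so each file stands alone
        writer.writerows(rows)
        chunks.append(buffer.getvalue())
    return chunks


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)",
                     [(i, i * 1.5) for i in range(5)])
    chunks = export_table_to_csv_chunks(conn, "orders", rows_per_file=2)
    print(len(chunks))  # 3
```

Reading with `fetchmany` keeps memory use bounded by the chunk size rather than the table size, which matters once a table has grown to the volumes this section describes.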

Web server log data (upload using custom applications)

This type of dataset is called out specifically because analyzing web server log data is a common use case for big data applications, and it requires uploading large volumes of log files to Data Lake Storage Gen1. You can use any of the following tools to write your own scripts or applications to upload such data.

For uploading web server log data, and other kinds of data such as social sentiment data, writing your own custom scripts or applications is a good approach because it lets you make the data upload component part of your larger big data application. In some cases, this code takes the form of a script or a simple command-line utility. In other cases, it integrates big data processing into a business application or solution.
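A custom upload script often begins by deciding which local files go where. The sketch below assumes that log files end in `.log` and that the remote layout should mirror the local directory tree under a root such as `/logs`; both conventions, and the helper names, are hypothetical. The `upload` argument is a caller-supplied function. In a real script it might wrap an SDK call, for example the `ADLUploader` class from the `azure-datalake-store` Python package.

```python
from pathlib import Path, PurePosixPath
from typing import Callable, List, Tuple


def plan_log_uploads(local_dir: str, remote_root: str,
                     suffix: str = ".log") -> List[Tuple[str, str]]:
    """Pair each local log file with a remote path that mirrors its layout."""
    base = Path(local_dir)
    plan: List[Tuple[str, str]] = []
    for path in sorted(base.rglob(f"*{suffix}")):
        if path.is_file():
            # Remote paths always use forward slashes, whatever the local OS.
            relative = PurePosixPath(*path.relative_to(base).parts)
            plan.append((str(path), str(PurePosixPath(remote_root) / relative)))
    return plan


def upload_all(plan: List[Tuple[str, str]],
               upload: Callable[[str, str], None]) -> int:
    """Run a caller-supplied upload function for each (local, remote) pair."""
    for local_path, remote_path in plan:
        upload(local_path, remote_path)
    return len(plan)
```

Keeping planning separate from uploading makes it easy to dry-run the script by passing a function that only prints each pair as `upload`, before wiring in a real client.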

Data associated with Azure HDInsight clusters

Most HDInsight cluster types (Hadoop, HBase, Storm) support Data Lake Storage Gen1 as a data storage repository. HDInsight clusters can also access data in Azure Storage Blobs (WASB). For better performance, you can copy the data from WASB into a Data Lake Storage Gen1 account associated with the cluster. You can use the following tools to copy the data.
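One option commonly used on an HDInsight cluster for this kind of copy is Hadoop DistCp. The sketch below only builds the command line. It assumes the data should land at the same path in the Data Lake Storage Gen1 account and that the cluster is already configured with access to both stores. The `wasb[s]://<container>@<account>.blob.core.windows.net/<path>` and `adl://<account>.azuredatalakestore.net/<path>` URI forms are the standard ones; the function names and the account names in the usage line are hypothetical.

```python
from typing import List
from urllib.parse import urlparse


def wasb_to_adl(wasb_uri: str, adl_account: str) -> str:
    """Map a wasb:// or wasbs:// URI to the same path in a Gen1 account."""
    parsed = urlparse(wasb_uri)
    if parsed.scheme not in ("wasb", "wasbs"):
        raise ValueError(f"not a WASB URI: {wasb_uri}")
    path = parsed.path or "/"
    return f"adl://{adl_account}.azuredatalakestore.net{path}"


def distcp_command(wasb_uri: str, adl_account: str) -> List[str]:
    """Build the argument list for a DistCp copy from WASB into Gen1."""
    return ["hadoop", "distcp", wasb_uri, wasb_to_adl(wasb_uri, adl_account)]
```

For example, `distcp_command("wasbs://data@mystorage.blob.core.windows.net/logs/2023", "mydatalake")` produces `hadoop distcp` followed by the source URI and `adl://mydatalake.azuredatalakestore.net/logs/2023`. Returning an argument list rather than a single string makes it straightforward to pass to `subprocess.run` without shell quoting issues.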

Data stored in on-premises or IaaS Hadoop clusters

Large amounts of data may be stored in existing Hadoop clusters, locally on machines that use HDFS. The Hadoop clusters may be in an on-premises deployment or in an IaaS cluster on Azure. You might need to copy such data to Azure Data Lake Storage Gen1 once or on a recurring basis. The following table lists the options for doing this and their trade-offs.

| Approach | Details | Advantages | Considerations |
|---|---|---|---|
| Use Azure Data Factory (ADF) to copy data directly from Hadoop clusters to Azure Data Lake Storage Gen1 | ADF supports HDFS as a data source. | ADF provides out-of-the-box support for HDFS and first-class end-to-end management and monitoring. | Requires Data Management Gateway to be deployed on-premises or in the IaaS cluster. |
| Export data from Hadoop as files, then copy the files to Azure Data Lake Storage Gen1 by using an appropriate mechanism. | You can copy files to Azure Data Lake Storage Gen1 using: | Quick to get started. Can do customized uploads. | Multi-step process that involves multiple technologies. Management and monitoring become challenging over time because of the customized nature of the tools. |
| Use DistCp to copy data from Hadoop to Azure Storage, then copy data from Azure Storage to Data Lake Storage Gen1 by using an appropriate mechanism. | You can copy data from Azure Storage to Data Lake Storage Gen1 using: | You can use open-source tools. | Multi-step process that involves multiple technologies. |
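For the recurring case, a custom copy job usually needs to avoid re-copying files it has already transferred. One simple technique, sketched here under the assumption that you can list source files with their modification times, is a high-water mark: copy only files modified after the last successful run, then advance the mark. `FileEntry`, `files_to_copy`, and `next_watermark` are hypothetical names, not part of any Hadoop or Azure API.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class FileEntry:
    path: str
    modified: float  # modification time, in seconds since the epoch


def files_to_copy(source: Iterable[FileEntry], last_sync: float) -> List[FileEntry]:
    """Select files created or changed since the previous run, oldest first."""
    changed = [entry for entry in source if entry.modified > last_sync]
    return sorted(changed, key=lambda entry: entry.modified)


def next_watermark(copied: List[FileEntry], last_sync: float) -> float:
    """Advance the mark to the newest modification time that was copied."""
    return max((entry.modified for entry in copied), default=last_sync)
```

Because the comparison is strict, a file modified at exactly the watermark time is skipped; a real job might subtract a small safety margin or compare against a listing of the destination instead.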

Really large datasets

For datasets that are several terabytes in size, the methods described above can sometimes be slow and costly. In such cases, you can use the following options.

Using Azure ExpressRoute. Azure ExpressRoute lets you create private connections between Azure datacenters and infrastructure on your premises. This provides a reliable option for transferring large amounts of data. For more information, see Azure ExpressRoute documentation.
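A rough estimate shows how strongly link bandwidth drives transfer time at this scale. The helper below converts a data size and link speed into hours. It uses decimal units and an assumed `efficiency` factor for protocol overhead and contention, so treat the result as an order-of-magnitude guide only; `transfer_hours` is a hypothetical name.

```python
def transfer_hours(size_tb: float, bandwidth_gbps: float,
                   efficiency: float = 1.0) -> float:
    """Estimate hours needed to move size_tb terabytes over a bandwidth_gbps link."""
    if bandwidth_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("bandwidth must be positive and efficiency in (0, 1]")
    bits = size_tb * 10**12 * 8  # decimal units: 1 TB = 10**12 bytes
    bits_per_second = bandwidth_gbps * 10**9 * efficiency  # 1 Gbps = 10**9 bit/s
    return bits / bits_per_second / 3600


if __name__ == "__main__":
    for gbps in (0.1, 1, 10):
        print(f"10 TB at {gbps} Gbps: {transfer_hours(10, gbps):.1f} hours")
```

For example, moving 10 TB over a fully used 1 Gbps link takes about 22.2 hours, and at 100 Mbps it takes about 222 hours, which is more than nine days. Real throughput is usually lower, which is why the `efficiency` parameter exists.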

"Offline" upload of data. If Azure ExpressRoute is not feasible, you can use the Azure Import/Export service to ship hard disk drives with your data to an Azure datacenter. Your data is first uploaded to Azure Storage Blobs. You can then use Azure Data Factory or the AdlCopy tool to copy the data from Azure Storage Blobs to Data Lake Storage Gen1.

Note

When you use the Import/Export service, the files on the disks that you ship to an Azure datacenter should not be larger than 195 GB.
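Before shipping disks, you can check for files that exceed the 195 GB limit and work out how many pieces an oversized file would need to be split into. This sketch assumes that GB means 10**9 bytes (pass a different `limit` if your interpretation differs) and leaves the splitting itself to you; `oversized_files` and `parts_needed` are hypothetical helpers.

```python
from typing import Dict, List

# Assumption: the 195 GB limit is interpreted here as 195 * 10**9 bytes.
MAX_FILE_BYTES = 195 * 10**9


def oversized_files(sizes: Dict[str, int], limit: int = MAX_FILE_BYTES) -> List[str]:
    """Return the names of files whose size in bytes exceeds the limit."""
    return sorted(name for name, size in sizes.items() if size > limit)


def parts_needed(size: int, limit: int = MAX_FILE_BYTES) -> int:
    """Smallest number of pieces, each no larger than limit, that hold size bytes."""
    return max(1, -(-size // limit))  # integer ceiling division
```

For example, a 500 GB file needs three pieces: two pieces hold at most 390 GB, and three hold up to 585 GB.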

Process data stored in Data Lake Storage Gen1

After the data is available in Data Lake Storage Gen1, you can analyze it by using the supported big data applications. Currently, you can use Azure HDInsight and Azure Data Lake Analytics to run data analysis jobs on the data stored in Data Lake Storage Gen1.

See the following examples:

- Create an HDInsight cluster with Data Lake Storage Gen1 as storage

- Use Azure Data Lake Analytics with Data Lake Storage Gen1

Download data from Data Lake Storage Gen1

You might also want to download or move data from Azure Data Lake Storage Gen1 for scenarios such as:

- Move data to other repositories to interface with your existing data processing pipelines. For example, you might want to move data from Data Lake Storage Gen1 to Azure SQL Database or SQL Server.

- Download data to your local computer for processing in an IDE while building application prototypes.

In such cases, you can use any of the following options:

You can also use the following methods to write your own script or application to download data from Data Lake Storage Gen1.
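Downloads of large files over the network can fail transiently, so a custom download script typically retries. The following sketch wraps any caller-supplied download function in retries with exponential backoff. Treating `OSError` (which includes `ConnectionError` and `TimeoutError`) as retryable is an assumption; the exceptions your SDK raises may differ, and `with_retries` is a hypothetical helper.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(action: Callable[[], T], attempts: int = 4,
                 base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call action, retrying on OSError with exponentially growing delays."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return action()
        except OSError:
            if attempt == attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** attempt)  # 1 s, 2 s, 4 s, ... by default
    raise AssertionError("unreachable")
```

A call might look like `with_retries(lambda: download(remote_path, local_path))`, where `download` is whatever function your script uses to fetch one file. Injecting `sleep` keeps the helper easy to test without real delays.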

Visualize data in Data Lake Storage Gen1

You can use a mix of services to create visual representations of data stored in Data Lake Storage Gen1.

- You can start by using Azure Data Factory to move data from Data Lake Storage Gen1 to Azure Synapse Analytics.

- After that, you can integrate Power BI with Azure Synapse Analytics to create visual representations of the data.