Hi all, I am working with an Azure Synapse Spark pool with the following configuration:

- Spark version: 3.3
- Python version: 3.8
- Scala version: 2.12.15
- Java version: 1.8.0_282

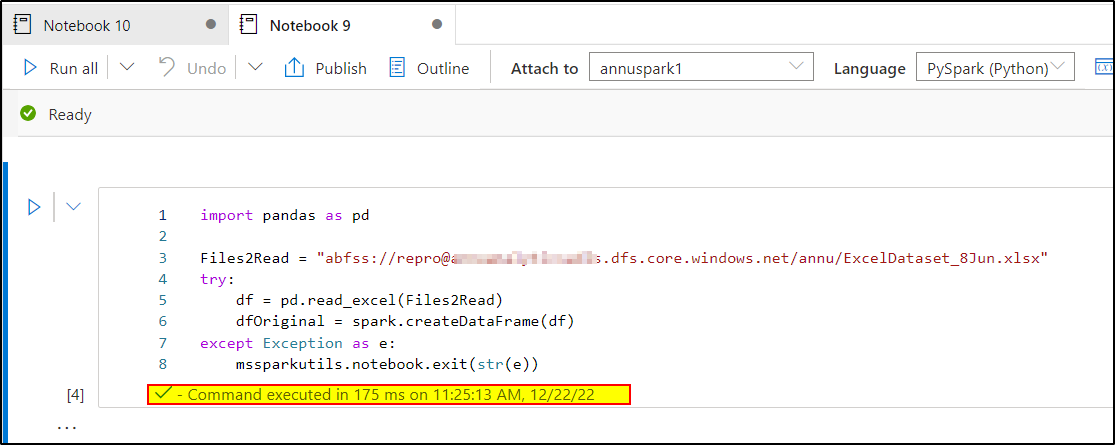
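`'JavaPackage' object is not callable` is the error py4j produces when a name such as `jvm.PythonSQLUtils` does not resolve to a JVM class, so a mismatch between the Python side and the JVM side of the session is one thing worth ruling out. A minimal sketch, assuming only the standard `pyspark.__version__` and `spark.version` attributes; the helpers `major_minor` and `versions_agree` are hypothetical names for illustration. The idea is to run the check in both the calling and the called notebook and compare the output:

```python
def major_minor(version: str) -> tuple:
    """Return (major, minor) from a version string such as '3.3.1.5.2-92314920'."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def versions_agree(pyspark_version: str, jvm_spark_version: str) -> bool:
    """True if the Python-side PySpark and the JVM-side Spark share major.minor."""
    return major_minor(pyspark_version) == major_minor(jvm_spark_version)


# Inside a Synapse notebook only (needs a live `spark` session):
#   import sys, pyspark
#   print(sys.version)
#   print(pyspark.__version__, spark.version,
#         versions_agree(pyspark.__version__, spark.version))
```

Comparing only major.minor is a deliberate choice, since vendor builds often append extra suffixes to the patch version.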

I am trying to read an Excel file in an Azure Data Lake Storage folder using the pandas and spark.pandas libraries. The code I run is:

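```python
import pandas as pd

Files2Read = "abfss://*****@ADLACCOUNT.dfs.core.windows.net/FOLDER/SUBFOLDER/FILENAME.xlsx"

try:
    # Read the Excel file with pandas, then convert it to a Spark DataFrame
    df = pd.read_excel(Files2Read)
    dfOriginal = spark.createDataFrame(df)
except Exception as e:
    # Return the error message to the calling notebook
    mssparkutils.notebook.exit(str(e))
```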

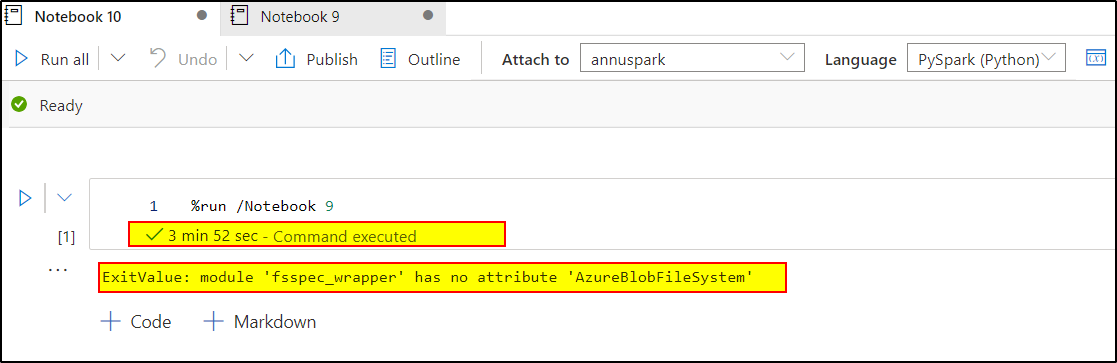

The code works when I run the notebook directly, but when I call it from another notebook with mssparkutils, it fails with the error "Notebook Exit: 'JavaPackage' object is not callable". The extended error is:

```
ERROR notebookUtils: Uncaught throwable from user code:
---------------------------------------------------------------------------
TypeError                                 Traceback (most recent call last)
/tmp/ipykernel_27337/1874386971.py in <module>
     49 df = pd.read_excel(Files2Read)
---> 50 dfOriginal = spark.createDataFrame(df)
     51 #######Clean Dataframe

/opt/spark/python/lib/pyspark.zip/pyspark/sql/session.py in createDataFrame(self, data, schema, samplingRatio, verifySchema)
    890 # Create a DataFrame from pandas DataFrame.
--> 891 return super(SparkSession, self).createDataFrame( # type: ignore[call-overload]
    892 data, schema, samplingRatio, verifySchema

/opt/spark/python/lib/pyspark.zip/pyspark/sql/pandas/conversion.py in createDataFrame(self, data, schema, samplingRatio, verifySchema)
    435 raise
--> 436 converted_data = self._convert_from_pandas(data, schema, timezone)
    437 return self._create_dataframe(converted_data, schema, samplingRatio, verifySchema)

/opt/spark/python/lib/pyspark.zip/pyspark/sql/pandas/conversion.py in _convert_from_pandas(self, pdf, schema, timezone)
    472 else:
--> 473 should_localize = not is_timestamp_ntz_preferred()
    474 for column, series in pdf.iteritems():

/opt/spark/python/lib/pyspark.zip/pyspark/sql/utils.py in is_timestamp_ntz_preferred()
    295 jvm = SparkContext._jvm
--> 296 return jvm is not None and jvm.PythonSQLUtils.isTimestampNTZPreferred()

TypeError: 'JavaPackage' object is not callable

During handling of the above exception, another exception occurred:

NotebookExit                              Traceback (most recent call last)
/tmp/ipykernel_27337/1874386971.py in <module>
     57 except Exception as e:
     58 print("Error in temp_"+DeltaTableName)
---> 59 mssparkutils.notebook.exit(str(e))

~/cluster-env/clonedenv/lib/python3.8/site-packages/notebookutils/mssparkutils/notebook.py in exit(value)
     19
     20 def exit(value):
---> 21 nb.exit(value)

~/cluster-env/clonedenv/lib/python3.8/site-packages/notebookutils/mssparkutils/handlers/notebookHandler.py in exit(self, value)
     56 def exit(self, value):
     57 self.exitVal = str(value)
---> 58 raise NotebookExit(value)

NotebookExit: 'JavaPackage' object is not callable
```
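Because the `except` block passes only `str(e)` to `mssparkutils.notebook.exit`, the calling notebook receives just the message text, without the exception type or the frames showing where it failed. A minimal sketch, using only the standard-library `traceback` module (the helper name `describe_exception` is hypothetical), that builds an exit value containing the full traceback instead:

```python
import traceback


def describe_exception(exc: BaseException) -> str:
    """Return the exception type, message and full traceback as one string."""
    # The three-argument form works on Python 3.8 and later versions.
    return "".join(traceback.format_exception(type(exc), exc, exc.__traceback__))


# Hypothetical use in the called notebook (mssparkutils exists only in Synapse):
# try:
#     df = pd.read_excel(Files2Read)
#     dfOriginal = spark.createDataFrame(df)
# except Exception as e:
#     mssparkutils.notebook.exit(describe_exception(e))
```

The exit value is still a plain string, so the calling notebook can print or log it the same way as before.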

Has anyone seen this behavior before?