Hi there -

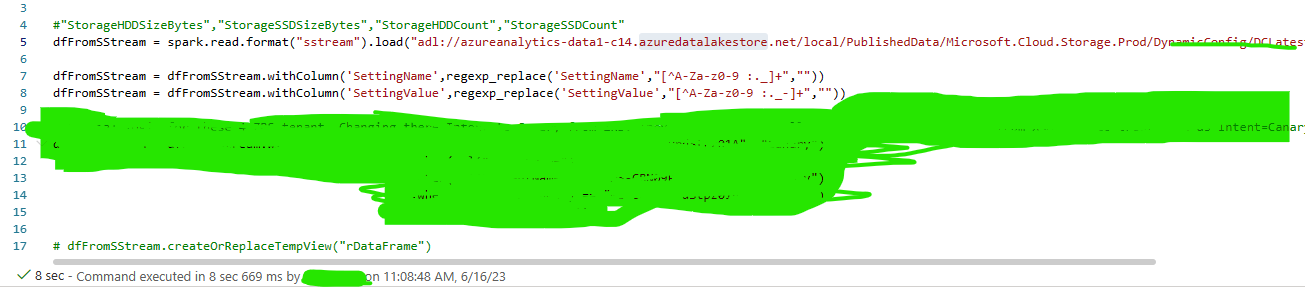

I'm trying to read SStream (SS) data from Azure Data Lake (ADL). The command runs without any problem when I execute the cell manually in the notebook, as shown in the screenshot above.

However, when the same cell runs as part of `ConfigDrift_WithNationalCloud_ProdVer`, it fails with the error below:

Operation on target ConfigDrift_WithNationalCloud_ProdVer failed: ---------------------------------------------------------------------------

Py4JJavaError Traceback (most recent call last)

/tmp/ipykernel_20459/3824683993.py in <module>

3

4 #"StorageHDDSizeBytes","StorageSSDSizeBytes","StorageHDDCount","StorageSSDCount"

----> 5 dfFromSStream = spark.read.format("sstream").load("adl://azureanalytics-data1-c14.azuredatalakestore.net/local/PublishedData/Microsoft.Cloud.Storage.Prod/[Removed manually]

6

7 dfFromSStream = dfFromSStream.withColumn('SettingName',regexp_replace('SettingName',"[^A-Za-z0-9 :._]+",""))

/opt/spark/python/lib/pyspark.zip/pyspark/sql/readwriter.py in load(self, path, format, schema, **options)

202 self.options(**options)

203 if isinstance(path, str):

--> 204 return self._df(self._jreader.load(path))

205 elif path is not None:

206 if type(path) != list:

~/cluster-env/clonedenv/lib/python3.8/site-packages/py4j/java_gateway.py in __call__(self, *args)

1302

1303 answer = self.gateway_client.send_command(command)

-> 1304 return_value = get_return_value(

1305 answer, self.gateway_client, self.target_id, self.name)

1306

/opt/spark/python/lib/pyspark.zip/pyspark/sql/utils.py in deco(*a, **kw)

109 def deco(*a, **kw):

110 try:

--> 111 return f(*a, **kw)

112 except py4j.protocol.Py4JJavaError as e:

113 converted = convert_exception(e.java_exception)

~/cluster-env/clonedenv/lib/python3.8/site-packages/py4j/protocol.py in get_return_value(answer, gateway_client, target_id, name)

324 value = OUTPUT_CONVERTER[type](answer[2:], gateway_client)

325 if answer[1] == REFERENCE_TYPE:

--> 326 raise Py4JJavaError(

327 "An error occurred while calling {0}{1}{2}.\n".

328 format(target_id, ".", name), value)

Py4JJavaError: An error occurred while calling o3673.load.

: org.apache.hadoop.security.AccessControlException: GETFILESTATUS failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [Removed] failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.). [Removed][2023-06-15T22:25:51.6334033-07:00] [ServerRequestId:154c4a46-b7c6-4714-9f0e-7440ff736e4d]

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method)

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62)

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)

at java.lang.reflect.Constructor.newInstance(Constructor.java:423)

at com.microsoft.azure.datalake.store.ADLStoreClient.getRemoteException(ADLStoreClient.java:1299)

at com.microsoft.azure.datalake.store.ADLStoreClient.getExceptionFromResponse(ADLStoreClient.java:1264)

at com.microsoft.azure.datalake.store.ADLStoreClient.getDirectoryEntry(ADLStoreClient.java:815)

at org.apache.hadoop.fs.adl.AdlFileSystem.getFileStatus(AdlFileSystem.java:504)

at org.apache.hadoop.fs.Globber.getFileStatus(Globber.java:65)

at org.apache.hadoop.fs.Globber.doGlob(Globber.java:281)

at org.apache.hadoop.fs.Globber.glob(Globber.java:149)

at org.apache.hadoop.fs.FileSystem.globStatus(FileSystem.java:2029)

at com.microsoft.sstream.SStreamMetadataProvider.$anonfun$getInputFileListFromPath$2(SStreamMetadataProvider.scala:46)

at com.microsoft.sstream.SStreamMetadataProvider.$anonfun$getInputFileListFromPath$2$adapted(SStreamMetadataProvider.scala:36)

at scala.collection.IndexedSeqOptimized.foreach(IndexedSeqOptimized.scala:36)

at scala.collection.IndexedSeqOptimized.foreach$(IndexedSeqOptimized.scala:33)

at scala.collection.mutable.ArrayOps$ofRef.foreach(ArrayOps.scala:198)

at com.microsoft.sstream.SStreamMetadataProvider.getInputFileListFromPath(SStreamMetadataProvider.scala:36)

at com.microsoft.sstream.SStreamMetadataProvider.<init>(SStreamMetadataProvider.scala:74)

at com.microsoft.spark.sql.execution.datasources.sstream.DefaultSource.inferSchema(DefaultSource.scala:29)

at org.apache.spark.sql.execution.datasources.v2.DataSourceV2Utils$.getTableFromProvider(DataSourceV2Utils.scala:81)

at org.apache.spark.sql.DataFrameReader.$anonfun$load$1(DataFrameReader.scala:303)

at scala.Option.map(Option.scala:230)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:273)

at org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:241)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at py4j.reflection.MethodInvoker.invoke(MethodInvoker.java:244)

at py4j.reflection.ReflectionEngine.invoke(ReflectionEngine.java:357)

at py4j.Gateway.invoke(Gateway.java:282)

at py4j.commands.AbstractCommand.invokeMethod(AbstractCommand.java:132)

at py4j.commands.CallCommand.execute(CallCommand.java:79)

at py4j.GatewayConnection.run(GatewayConnection.java:238)

at java.lang.Thread.run(Thread.java:750)
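One way I could narrow this down (a sketch under my own assumptions, not a confirmed fix): the trace shows the failure in `AdlFileSystem.getFileStatus`, called from `Globber` while the sstream reader lists its input files. ADL ACLs require execute permission on every ancestor folder plus read permission on the target, so a missing permission at any level along the path would surface as this `GETFILESTATUS ... ACL verification failed` error. The helper below lists every prefix of the path and calls the Hadoop `getFileStatus` on each one with whatever identity the current Spark session uses, stopping at the first failure. `ancestor_paths` and `probe` are hypothetical helper names; it also assumes the interactive session and the failing run may authenticate as different identities, which the trace itself does not confirm.

```python
from typing import List
from urllib.parse import urlsplit


def ancestor_paths(url: str) -> List[str]:
    """Return every folder prefix of an adl:// URL, from the root down to the URL itself."""
    parts = urlsplit(url)
    root = f"{parts.scheme}://{parts.netloc}"
    segments = [s for s in parts.path.split("/") if s]
    paths = [root + "/"]
    for i in range(1, len(segments) + 1):
        paths.append(root + "/" + "/".join(segments[:i]))
    return paths


def probe(spark, url: str) -> None:
    """Hypothetical helper: call getFileStatus on each prefix with the session's identity.

    Prints OK for every level that can be stat'ed and stops at the first level
    that raises, printing the underlying Java exception (e.g. AccessControlException).
    """
    jvm = spark._jvm
    conf = spark._jsc.hadoopConfiguration()
    for p in ancestor_paths(url):
        path = jvm.org.apache.hadoop.fs.Path(p)
        fs = path.getFileSystem(conf)
        try:
            fs.getFileStatus(path)
            print("OK    ", p)
        except Exception as e:  # Py4JJavaError wraps the Java exception
            print("FAILED", p)
            print(getattr(e, "java_exception", e))
            break
```

Usage would be `probe(spark, path)` with `path` set to the same string passed to `.load(...)`, run once manually and once through the failing run; if the two stop at different levels, that points at a permission difference between the identities rather than at the sstream reader.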

Can anyone explain this discrepancy? Am I missing something here?

Thanks!