Hi! I got this error while running a job that creates a pipeline with the Azure ML Python SDK (v1):

```
Traceback (most recent call last):

  Cell In[14], line 3
    new_pipeline = Pipeline(workspace=ws, steps=steps)

  File ~\anaconda3\Lib\site-packages\azureml\core\_experiment_method.py:104 in wrapper
    return init_func(self, *args, **kwargs)

  File ~\anaconda3\Lib\site-packages\azureml\pipeline\core\pipeline.py:95 in __init__
    self._graph_context = _GraphContext("placeholder", workspace=workspace,

  File ~\anaconda3\Lib\site-packages\azureml\pipeline\core\_graph_context.py:44 in __init__
    self._workflow_provider = _AevaWorkflowProvider.create_provider(workspace=workspace,

  File ~\anaconda3\Lib\site-packages\azureml\pipeline\core\_aeva_provider.py:104 in create_provider
    service_caller = _AevaWorkflowProvider.create_service_caller(workspace=workspace,

  File ~\anaconda3\Lib\site-packages\azureml\pipeline\core\_aeva_provider.py:121 in create_service_caller
    service_endpoint = _AevaWorkflowProvider.get_endpoint_url(workspace, experiment_name)

  File ~\anaconda3\Lib\site-packages\azureml\pipeline\core\_aeva_provider.py:138 in get_endpoint_url
    service_context = ServiceContext(

TypeError: ServiceContext.__init__() missing 1 required positional argument: 'workspace_discovery_url'
```

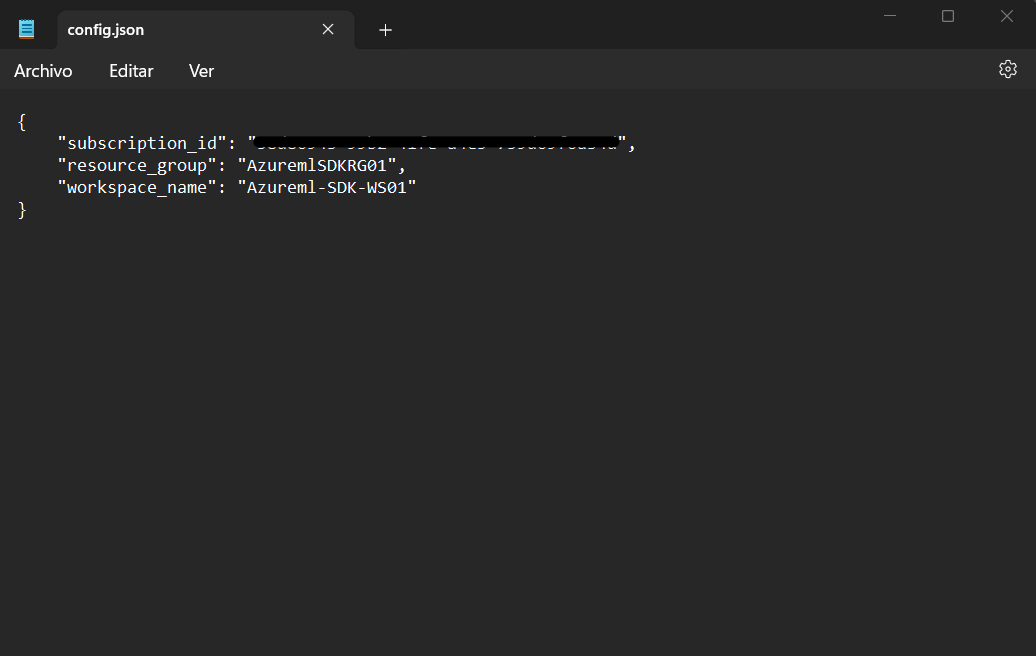
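The last frame fails inside the SDK itself: `azureml.pipeline.core` calls `ServiceContext(...)`, an internal class of the `azureml-core` package, without an argument that the installed `__init__` requires. That pattern often points to mismatched versions of `azureml-core` and `azureml-pipeline-core` rather than to the workspace config, so comparing the installed versions is a cheap first check. A minimal standard-library sketch follows; the package list is an assumption, so add any other `azureml-*` packages you use.

```python
from importlib.metadata import PackageNotFoundError, version

# Assumed list of SDK v1 packages that are normally released with matching versions.
PACKAGES = ["azureml-core", "azureml-pipeline-core", "azureml-pipeline-steps"]


def installed_versions(packages):
    """Return {package name: installed version string, or None if not installed}."""
    result = {}
    for name in packages:
        try:
            result[name] = version(name)
        except PackageNotFoundError:
            result[name] = None
    return result


if __name__ == "__main__":
    versions = installed_versions(PACKAGES)
    for name, ver in versions.items():
        print(f"{name:25} {ver or 'not installed'}")
    # More than one distinct version among the installed packages is a red flag.
    distinct = {v for v in versions.values() if v is not None}
    if len(distinct) > 1:
        print("Versions differ; consider installing one matching version of all of them.")
```

If the versions differ, one thing to try is `pip install --upgrade azureml-core azureml-pipeline-core azureml-pipeline-steps` (or pinning all three to the same version), then restarting the Jupyter kernel so the notebook picks up the new packages.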

The error seems to be related to my workspace, but I really don't see the problem. Here is my code for creating the pipeline and my config file for loading the workspace.

```python
#------------------------------------------------------------
# Job script: build a pipeline and run it in an experiment
#------------------------------------------------------------

from azureml.core import Workspace

ws = Workspace.from_config("./config")

#------------------------------------------------------------
# Create custom environment

from azureml.core import Environment
from azureml.core.conda_dependencies import CondaDependencies

myenv = Environment(name="MyEnvironment")

# Create the dependencies object
myenv_dep = CondaDependencies.create(conda_packages=["scikit-learn", "pandas", "numpy", "scipy"])
myenv.python.conda_dependencies = myenv_dep

# Register the environment
myenv.register(ws)

#------------------------------------------------------------
# Create compute cluster
# Provisioning configuration using AmlCompute

from azureml.core.compute import AmlCompute, ComputeTarget

cluster_name = "pipeline-cluster"
compute_config = AmlCompute.provisioning_configuration(
    vm_size="STANDARD_E2d_v4",
    max_nodes=2)

compute_cluster = ComputeTarget.create(ws, cluster_name, compute_config)
compute_cluster.wait_for_completion()

#------------------------------------------------------------
# Configuration for the steps

from azureml.core.runconfig import RunConfiguration

run_config = RunConfiguration()
run_config.target = compute_cluster
run_config.environment = myenv

#------------------------------------------------------------
# Define the pipeline steps

from azureml.pipeline.steps import PythonScriptStep
from azureml.pipeline.core import PipelineData
from azureml.data.data_reference import DataReference

# input_ds = ws.datasets.get("Defaults")

# Create the input as a DataReference for use in step 1
input_data = DataReference(datastore=ws.get_default_datastore(),
                           data_reference_name="Defaults",
                           path_on_datastore="defaults.csv")

# Intermediate folder written by step 1 and read by step 2
dataFolder = PipelineData("dataFolder", datastore=ws.get_default_datastore())

# Step 1 - DATA PREPARATION
dataPrep_step = PythonScriptStep(name="01 Data Preparation",
                                 source_directory=".",
                                 # assumes the file in source_directory is 13-auto-dataprep.py
                                 script_name="13-auto-dataprep.py",
                                 inputs=[input_data],
                                 outputs=[dataFolder],
                                 runconfig=run_config,
                                 arguments=["--dataFolder", dataFolder])

# Step 2 - TRAIN THE MODEL
train_step = PythonScriptStep(name="02 Train the Model",
                              source_directory=".",
                              # assumes the file in source_directory is 14-auto-training.py
                              script_name="14-auto-training.py",
                              inputs=[dataFolder],
                              runconfig=run_config,
                              arguments=["--dataFolder", dataFolder])

#------------------------------------------------------------
# Configure and build the pipeline

steps = [dataPrep_step, train_step]

from azureml.pipeline.core import Pipeline

new_pipeline = Pipeline(workspace=ws, steps=steps)

# Create the experiment and run the pipeline
from azureml.core import Experiment

new_experiment = Experiment(workspace=ws, name="PipelineExp01")
new_pipeline_run = new_experiment.submit(new_pipeline)
new_pipeline_run.wait_for_completion(show_output=True)
```
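Since the workspace config is the suspect, here is a minimal standard-library sketch for ruling it out. It assumes `./config` is either the JSON file itself or a directory holding `config.json` (directly or in a `.azureml` subfolder), which is a simplified version of the lookup `Workspace.from_config` performs, and it checks for the three keys an Azure ML `config.json` carries: `subscription_id`, `resource_group` and `workspace_name`. The helper name `check_workspace_config` is hypothetical.

```python
import json
from pathlib import Path

# Keys that an Azure ML workspace config.json is expected to contain.
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def check_workspace_config(path):
    """Hypothetical helper: locate config.json for `path` and list problems.

    Simplified lookup: `path` may be the JSON file itself or a directory that
    holds config.json directly or in a .azureml subfolder.
    Returns a list of problem descriptions (empty if none were found).
    """
    p = Path(path)
    if p.is_dir():
        candidates = [p / "config.json", p / ".azureml" / "config.json"]
        p = next((c for c in candidates if c.is_file()), None)
        if p is None:
            return [f"no config.json found under {path}"]
    elif not p.is_file():
        return [f"{path} does not exist"]

    try:
        # utf-8-sig tolerates a byte-order mark, which some Windows editors add.
        data = json.loads(p.read_text(encoding="utf-8-sig"))
    except json.JSONDecodeError as exc:
        return [f"{p} is not valid JSON: {exc}"]

    if not isinstance(data, dict):
        return [f"{p} should contain a JSON object"]
    return [f"missing or empty key: {key}" for key in REQUIRED_KEYS if not data.get(key)]


if __name__ == "__main__":
    problems = check_workspace_config("./config")
    print("\n".join(problems) if problems else "config looks complete")
```

An empty result only means the file is well-formed; it does not prove the values point at a real workspace. Also, because `Workspace.from_config` already succeeded before `Pipeline(...)` was called, a config problem seems unlikely to explain the `TypeError` on its own.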

Hope someone can help me!