Hello,

we operate a two-node failover cluster with a file share witness as a small high-availability solution in our production network.

Each node has 3 NICs: 2 of them have static IPs and are used for cluster-only communication, and 1 NIC is used for cluster+client communication, which is also needed to reach the file share witness and the domain controller. Let's call this NIC NIC3.

Nodes are running Windows Server 2019 Datacenter.

Our expectation is:

If NIC3 is disconnected on one of the nodes (let's call it Node1), the VMs running on Node1 automatically fail over and are migrated to Node2.

What actually happens:

When NIC3 is disconnected, an automatic live migration of the VMs is scheduled but fails with Event ID 21502. The details of this event contain further information:

0x80090311 (No authority could be contacted for authentication)

After reading some similar questions here, I guess this is caused by Node1 losing its connection to our domain controller when NIC3 fails?

But the interesting part is that after the automatic failover has failed, and while NIC3 of Node1 stays disconnected, I can happily start a live migration of the VMs from Node1 to Node2 (via RDP on Node2). Why is authentication not a problem in the manual case?

Apart from this scenario, live migration works fine in our setup, but we consider this case important.

So I wonder what the real problem is here and how we can fix it.

OK, never mind, I helped myself with a role transfer via PowerShell triggered on event 1255. It works well enough as a quick (not live) migration, even though it certainly isn't the perfect solution.

Hi,

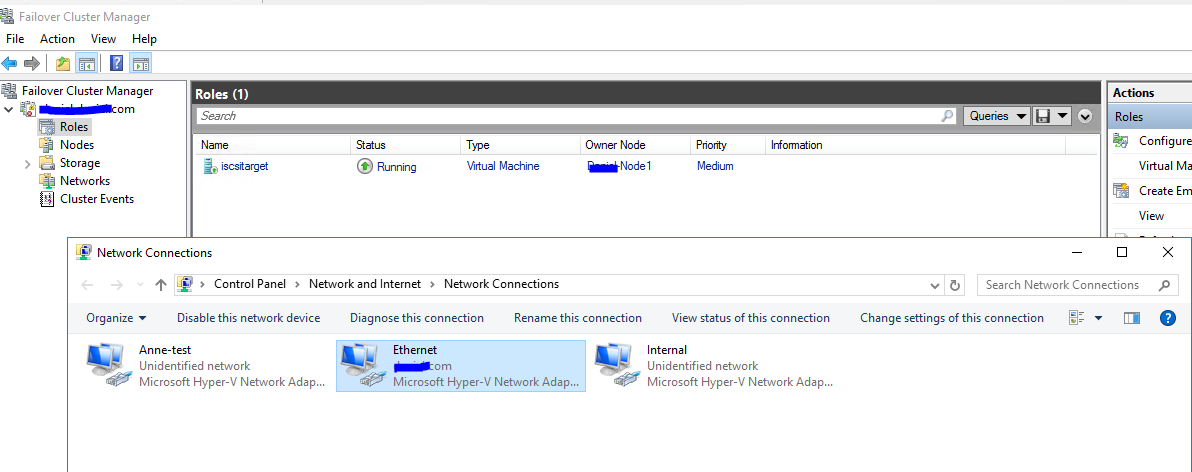

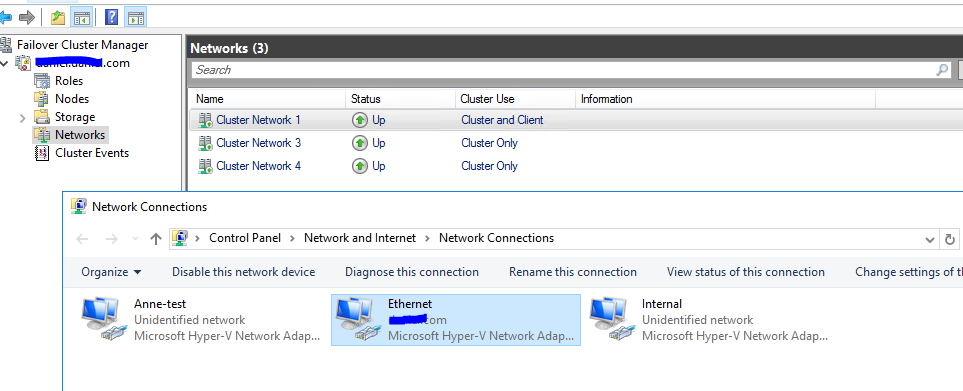

I tested your deployment in my lab:

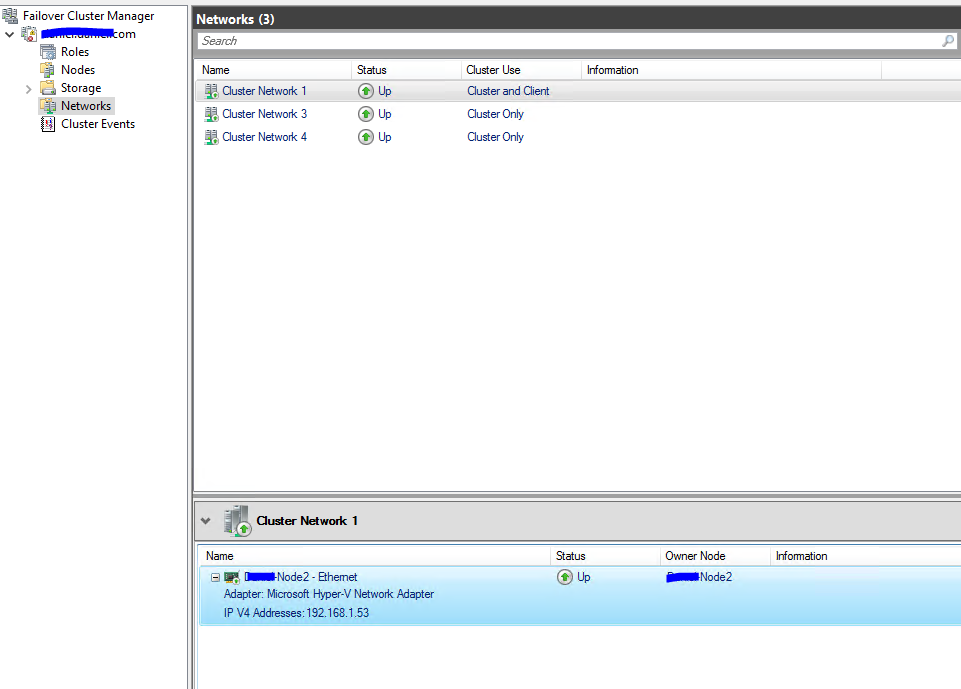

The VM was previously running on Node 1, and the network was set up as you described:

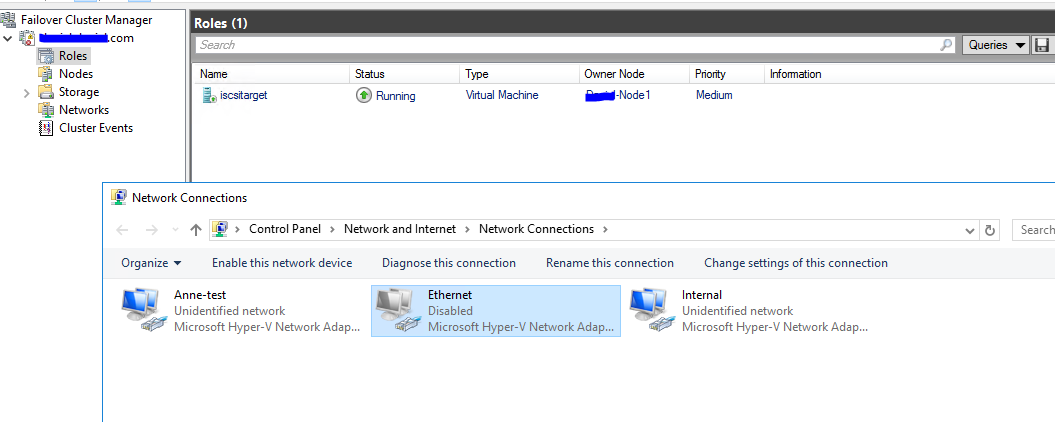

Then I disabled the NIC connecting to the DC and the file share:

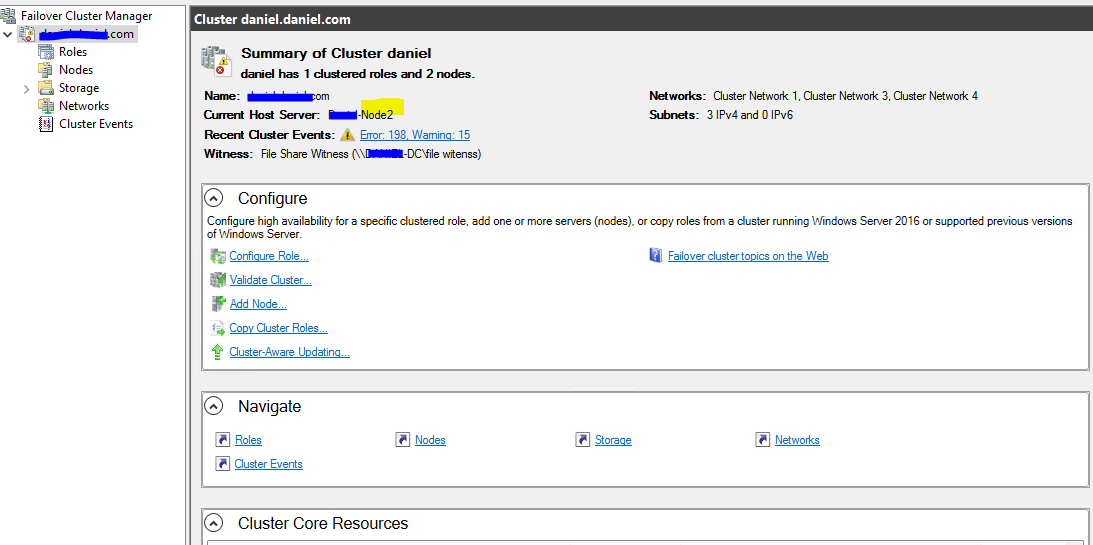

After that, the VM did not fail over; it is still running fine on Node 1:

In the network view, we can see that the NIC on Node 1 disappears and only the NIC on Node 2 remains. I also found that the current host server of the cluster changed to Node 2:

The behavior above is expected. A VM will not fail over unless Node 1 is unable to keep it online, for example because Node 1 goes offline. If we only disable NIC 3 on Node 1, the other two NICs can still exchange heartbeat packets with Node 2, so Node 1 should remain online in the cluster, and the VMs will not fail over while Node 1 is online.
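
To make that reasoning concrete, here is a deliberately simplified Python model, not the actual Failover Clustering logic: a node counts as up while it shares at least one working network with its peer, and a VM role only fails over when its owner node is no longer up. The `Node` class, the `node_is_up` and `should_fail_over` helpers, and the network labels `Cluster1`, `Cluster2` and `NIC3` are hypothetical names chosen for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical cluster node, described only by the networks it can still use."""
    name: str
    healthy_networks: set[str] = field(default_factory=set)


def node_is_up(node: Node, peer: Node) -> bool:
    # Simplified rule: the node stays a cluster member as long as heartbeats
    # can reach its peer over at least one shared, working network.
    return bool(node.healthy_networks & peer.healthy_networks)


def should_fail_over(owner: Node, peer: Node) -> bool:
    # A VM role only fails over when its owner node is no longer considered up.
    return not node_is_up(owner, peer)


if __name__ == "__main__":
    node2 = Node("Node2", {"Cluster1", "Cluster2", "NIC3"})
    # NIC3 disabled on Node1: both cluster-only networks still work.
    node1 = Node("Node1", {"Cluster1", "Cluster2"})
    print(should_fail_over(node1, node2))  # False
    # Node1 fully isolated: no shared network left.
    print(should_fail_over(Node("Node1"), node2))  # True
```

With NIC3 disabled, Node1 still shares both cluster-only networks with Node2, so the model reports no failover; only a fully isolated Node1 triggers one. The sketch covers node liveness only and says nothing about other reasons a role might be moved.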

In your scenario the VM failed over to Node 2 automatically, so please check Node 1's status after disabling NIC 3 on it and confirm whether the node is still online.

Thanks for your time!

Best Regards,

Anne

-----------------------------

If the Answer is helpful, please click "Accept Answer" and upvote it.

Note: Please follow the steps in our documentation to enable e-mail notifications if you want to receive the related email notification for this thread.

Hi Anne,

thanks a lot for your support.

I'm currently working from home, so I can't retest before tomorrow. I'll send screenshots once I'm back.

Until then:

Have you made sure in your setup that the file share witness can only be reached via NIC3?

I

Maybe my expectation is completely wrong?

Some sources say that only when the connection between the nodes fails do the nodes check the witness log and transfer roles to the remaining nodes.

If that is the case, it would certainly explain why your nodes do not fail over.

My (probably totally wrong) thought was that the cluster nodes also check the file share witness periodically. So at some point Node1 notices that it has lost its connection to the file share witness (or runs some check along the lines of "my only NIC flagged for client connections is dead"), and as a result a new quorum is formed, with the roles transferred to Node2, even though the connection between the nodes is still working.

Regardless of the outcome, thanks again for your support.

Hi,

> Have you made sure in your setup, that the File Share for witness can only be reached via NIC3?

Yes, the file share witness could only be accessed via NIC3. I tested with VMs, and the cluster-only NICs are not connected to the same virtual switch as NIC3, so the other NICs cannot reach the DC at all.

> only in case of connection between nodes failing, the nodes check the witness log and transfer roles on the remaining nodes.

The witness is used for quorum. When Node 1 cannot access the witness, Node 2 still can, so the cluster stays online.
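
The vote arithmetic behind this can be sketched with a simple majority rule, assuming a static count of one vote per node plus one for the witness. This is a simplified model: it ignores the dynamic quorum and dynamic witness adjustments that current Windows Server versions apply, and `has_quorum` is a hypothetical helper, not a cluster API.

```python
from __future__ import annotations


def has_quorum(partition_nodes: set[str], witness_reachable: bool, total_votes: int) -> bool:
    """Return True if a partition holds a strict majority of the configured votes.

    Simplified static model: each node in the partition contributes one vote,
    and the witness contributes one more if any node in the partition can reach it.
    """
    votes = len(partition_nodes) + (1 if witness_reachable else 0)
    return votes > total_votes // 2


if __name__ == "__main__":
    TOTAL = 3  # two nodes + file share witness
    # Only NIC3 on Node1 is down: both nodes still talk over the cluster
    # networks and Node2 reaches the witness, so all 3 votes stay together.
    print(has_quorum({"Node1", "Node2"}, True, TOTAL))  # True
    # Full split between the nodes: Node1 alone cannot reach the witness...
    print(has_quorum({"Node1"}, False, TOTAL))  # False
    # ...while Node2 plus the witness still holds 2 of 3 votes.
    print(has_quorum({"Node2"}, True, TOTAL))  # True
```

In the NIC3 scenario the nodes never split, so the witness only matters as one of the votes held by the single, intact partition.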

> My (probably totally wrong) thought was, there is also some kind of periodic check.....

There is no such check; the file share witness is only used for quorum. Cluster nodes check each other via heartbeat packets (UDP 3343) to verify that the other nodes are active.
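
The heartbeat side can be sketched the same way: a peer is only declared down after several consecutive heartbeat intervals pass with nothing received over any network. The threshold is just a parameter here; Failover Clustering exposes comparable tuning knobs as cluster properties such as `SameSubnetDelay` and `SameSubnetThreshold`, but `declared_down` is a hypothetical model, not how the cluster service is implemented.

```python
from __future__ import annotations


def declared_down(heartbeats: list[bool], threshold: int) -> bool:
    """Return True once `threshold` consecutive heartbeat intervals are missed.

    Each entry in `heartbeats` stands for one heartbeat interval and is True
    if a heartbeat from the peer arrived over any cluster network.
    """
    missed = 0
    for received in heartbeats:
        # Any received heartbeat resets the counter of consecutive misses.
        missed = 0 if received else missed + 1
        if missed >= threshold:
            return True
    return False


if __name__ == "__main__":
    # Cluster-only NICs keep delivering heartbeats after NIC3 is unplugged.
    print(declared_down([True] * 10, threshold=5))  # False
    # Occasional losses below the threshold do not remove the node.
    print(declared_down([True, False, False, True, False], threshold=5))  # False
    # Nothing arrives on any network for long enough.
    print(declared_down([False] * 6, threshold=5))  # True
```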

So in your test, the normal behavior should look like my test above: the VMs will not fail over while Node 1 is still online, and Node 1 should stay online as long as the other two networks are fine.

Thanks for your time!

Best Regards,

Anne

Hello,

OK, I've replayed this test scenario.

Both nodes were online, with only one VM as a role, running on Node1.

I unplugged the client/file share witness/DC cable from Node1.

Live migration is attempted again and fails as described, but I think I now understand why it is triggered.

Event 1255 is logged on Node1, stating that a network important to the virtual machine is in a critical state.

The virtual switch for the VM is connected to NIC3, so I guess this is the reason.

Node1 also logged event 1228: "Unable to obtain a logon token", error code 1311. I guess this is also related to the missing connection to the DC/DNS.

So this is probably the reason for the migration, but why does the migration fail in the automatic case yet work when I trigger it afterwards?