Hello @Sydney-4726, thanks for reaching out to us!

Broadly, you can go with either a cost-effective strategy or a time-sensitive one (optimizing for time to production).

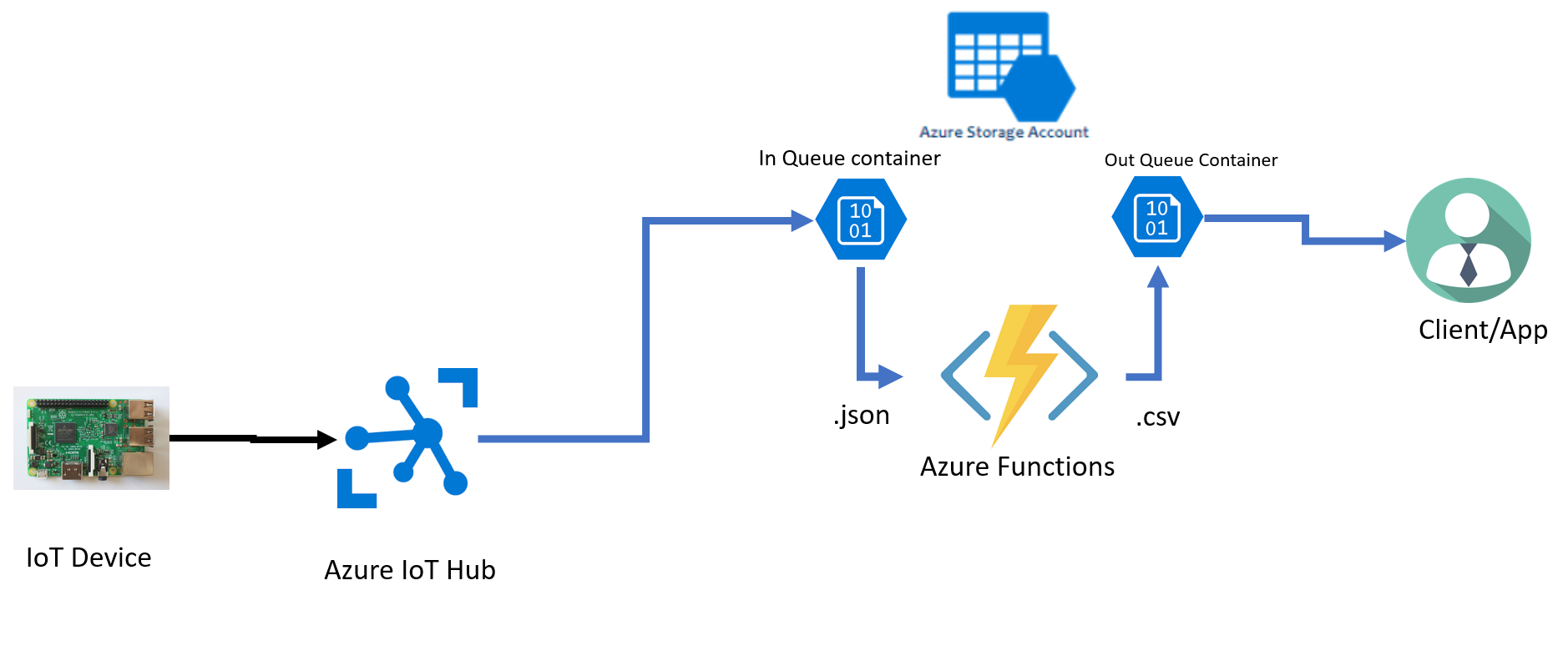

We can chart the data flow as in the samples below. Please note that there are many ways (and micro-services) to implement this flow.

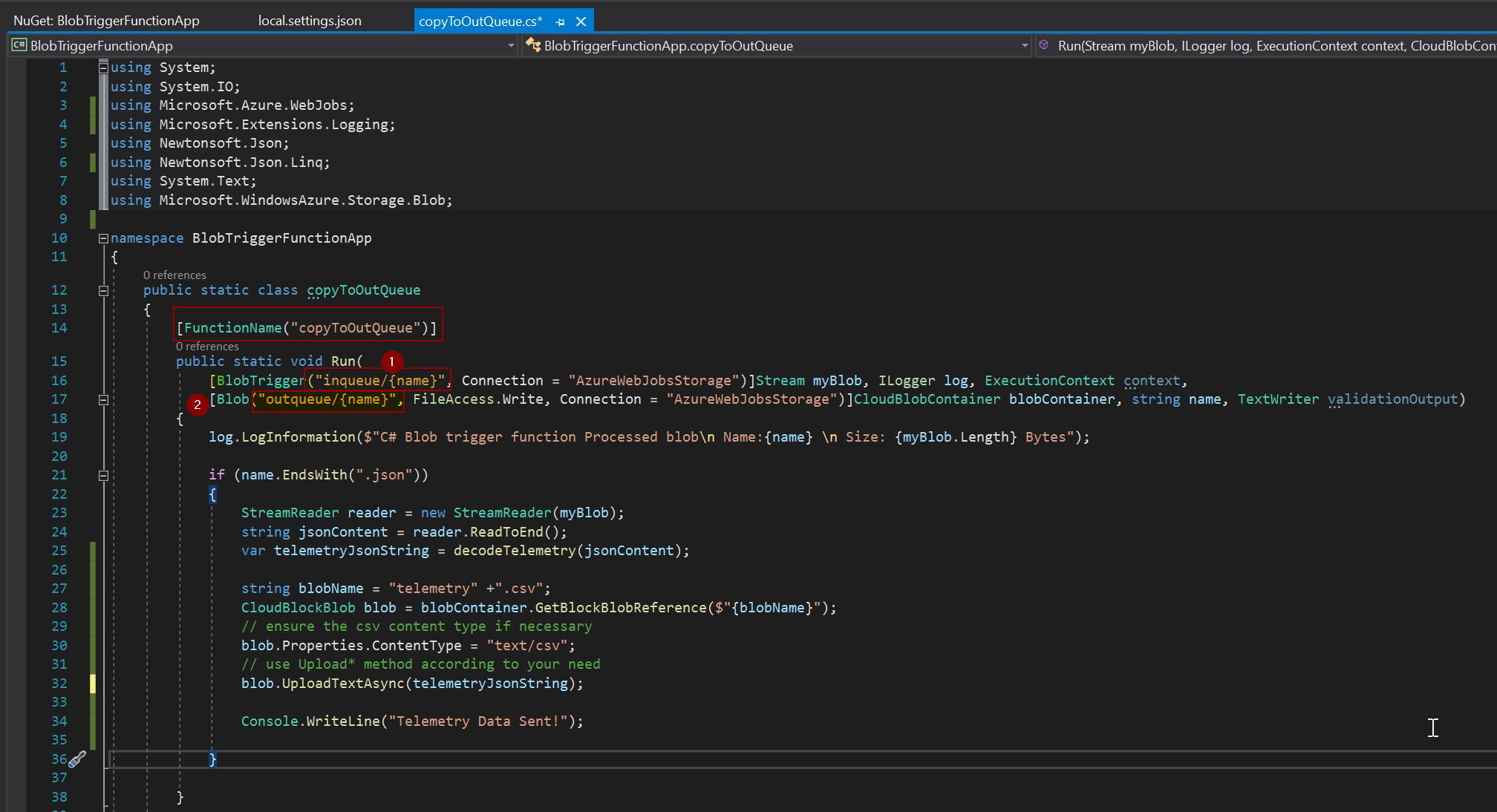

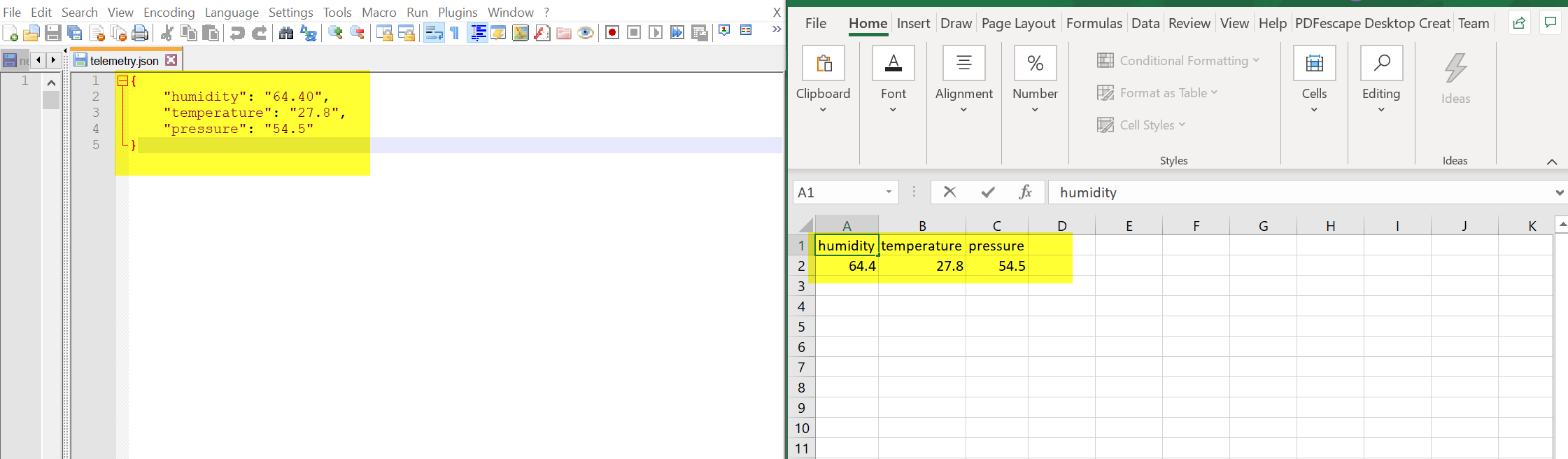

I. Azure Functions. Please see the pricing page as well. Below is an example snippet of an Azure Function App that reads blobs from a container called 'InQueue', decodes and processes the telemetry .json, and finally writes the result to 'OutQueue' as a .csv file. You can apply append rules as well.

C# Function App snippet: a blob-triggered function that then creates a block blob.
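The "decode the telemetry .json and write it out as .csv" step can be sketched on its own, independent of the trigger and output bindings. The helper below is a minimal sketch, not the original snippet: the names `TelemetryCsv` and `ToCsv` are hypothetical, it assumes the input is either a single JSON object or an array of objects, and it assumes nested objects and arrays should be mapped to dotted column names (for example `loc.lat` or `vals.0`). It only uses `System.Text.Json`, which ships with .NET Core 3.0 and later.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using System.Text.Json;

// Hypothetical helper: converts telemetry JSON (one object, or an array of
// objects) into CSV text. Nested objects and arrays are flattened into
// dotted column names such as "loc.lat" or "vals.0".
public static class TelemetryCsv
{
    public static string ToCsv(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;

        // A root array is treated as a list of records; anything else is one record.
        IEnumerable<JsonElement> records = root.ValueKind == JsonValueKind.Array
            ? (IEnumerable<JsonElement>)root.EnumerateArray()
            : new[] { root };

        var columns = new List<string>();   // CSV header, in first-seen order
        var seen = new HashSet<string>();
        var rows = new List<Dictionary<string, string>>();

        foreach (JsonElement record in records)
        {
            var row = new Dictionary<string, string>();
            Flatten(record, "", (key, value) =>
            {
                row[key] = value;                     // a repeated key keeps the last value
                if (seen.Add(key)) columns.Add(key);
            });
            rows.Add(row);
        }

        var csv = new StringBuilder();
        csv.Append(string.Join(",", columns.Select(Escape))).Append('\n');
        foreach (Dictionary<string, string> row in rows)
        {
            // Columns missing from this record are written as empty fields.
            IEnumerable<string> fields = columns.Select(c => row.TryGetValue(c, out string v) ? v : "");
            csv.Append(string.Join(",", fields.Select(Escape))).Append('\n');
        }
        return csv.ToString();
    }

    // Walks the element recursively and emits (column name, text value) for each leaf.
    private static void Flatten(JsonElement e, string prefix, Action<string, string> emit)
    {
        switch (e.ValueKind)
        {
            case JsonValueKind.Object:
                foreach (JsonProperty p in e.EnumerateObject())
                    Flatten(p.Value, prefix.Length == 0 ? p.Name : prefix + "." + p.Name, emit);
                break;
            case JsonValueKind.Array:
                int i = 0;
                foreach (JsonElement item in e.EnumerateArray())
                {
                    Flatten(item, prefix.Length == 0 ? i.ToString() : prefix + "." + i, emit);
                    i++;
                }
                break;
            case JsonValueKind.String:
                emit(prefix, e.GetString() ?? "");
                break;
            case JsonValueKind.Null:
                emit(prefix, "");
                break;
            default:
                // Numbers and booleans keep their original JSON text (e.g. 21.5, true).
                emit(prefix, e.GetRawText());
                break;
        }
    }

    // RFC 4180-style quoting: wrap fields containing commas, quotes or line
    // breaks in double quotes, doubling any embedded quotes.
    private static string Escape(string field) =>
        field.IndexOfAny(new[] { ',', '"', '\r', '\n' }) >= 0
            ? "\"" + field.Replace("\"", "\"\"") + "\""
            : field;
}
```

Inside a blob-triggered function you would read the input blob stream into a string, pass it to `ToCsv`, and write the returned text to the output blob. Keep in mind that Azure blob container names must be lowercase, so in practice the containers would be named something like `inqueue` and `outqueue`.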

Appending to the file: first get a reference to the blob by using its SAS URI, then append the new stream to it. Be advised that a block blob cannot be accessed as an append blob, so the initial file needs to be created as an append blob for this to work. Also see the Blob service REST API. Always check the input .json format, since we may have to implement a mapping if the .json is nested.

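```csharp
using System;
using Microsoft.Azure.Storage.Blob; // legacy SDK package: Microsoft.Azure.Storage.Blob

CloudAppendBlob appendBlob = new CloudAppendBlob(new Uri("https://{storage_account}.blob.core.windows.net/{your_container}/append-blob.log?st=2017-09-25T02%3A10%3A00Z&se=2017-09-27T02%3A10%3A00Z&sp=rwl&sv=2015-04-05&sr=b&sig=gsterdft34yugjtugnhdtw"));
if (!appendBlob.Exists()) appendBlob.CreateOrReplace(); // the blob must exist as an append blob first
appendBlob.AppendFromFile(@"{filepath}\source.txt");      // @ keeps the backslash literal (\s is not a valid escape)
```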

Also refer to Create a service SAS for a container or blob with .NET.
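The SAS URI in the snippet carries its settings as query parameters: `st` and `se` are the start and expiry times, `sp` the permissions (`rwl` here), `sv` the service version, `sr=b` marks a blob-level SAS, and `sig` is the signature. Note that the sample token's `se` is 2017-09-27, so it has long expired. A minimal, stdlib-only sketch that decodes these parameters and sanity-checks the token before attempting an append might look like this; `SasInspector` and its methods are hypothetical, and treating `a` (add) or `w` (write) as sufficient for appending is an assumption to verify against the service SAS documentation.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper (not part of any Azure SDK): reads the query parameters
// of a blob SAS URI so the token can be sanity-checked before an append.
public static class SasInspector
{
    // Splits "?k1=v1&k2=v2" into a dictionary, percent-decoding keys and values.
    public static Dictionary<string, string> Parse(Uri sasUri)
    {
        var result = new Dictionary<string, string>(StringComparer.Ordinal);
        string query = sasUri.Query.TrimStart('?');
        foreach (string pair in query.Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries))
        {
            int eq = pair.IndexOf('=');
            string key = eq < 0 ? pair : pair.Substring(0, eq);
            string value = eq < 0 ? "" : pair.Substring(eq + 1);
            result[Uri.UnescapeDataString(key)] = Uri.UnescapeDataString(value);
        }
        return result;
    }

    // True when 'now' lies inside the st..se window (st is optional) and the
    // permissions (sp) include 'a' (add) or 'w' (write), which are ASSUMED
    // here to be the permissions that allow appending blocks.
    public static bool IsUsableForAppend(Uri sasUri, DateTimeOffset now)
    {
        Dictionary<string, string> p = Parse(sasUri);
        if (!p.TryGetValue("se", out string expiry) || !p.TryGetValue("sp", out string permissions))
            return false;
        if (p.TryGetValue("st", out string start) && now < ParseUtc(start))
            return false;
        bool canAppend = permissions.IndexOf('a') >= 0 || permissions.IndexOf('w') >= 0;
        return now < ParseUtc(expiry) && canAppend;
    }

    // SAS times are UTC ISO 8601 strings such as "2017-09-27T02:10:00Z".
    private static DateTimeOffset ParseUtc(string value) =>
        DateTimeOffset.Parse(value, CultureInfo.InvariantCulture, DateTimeStyles.AssumeUniversal);
}
```

Checking the token locally like this only catches obvious problems (expired window, missing permission); the storage service still validates the signature itself.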

Azure Functions is one of the cost-effective routes. It involves writing code, and it is a popular choice for serverless computing. Its pricing is attractive as well: for example, the Consumption plan includes a monthly free grant of 1 million requests.
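To get a feel for the free grant, here is a small arithmetic sketch. It only models the per-execution charge: the Consumption plan also bills resource consumption (execution time and memory, in GB-s), which is ignored here. The per-million price is deliberately a parameter rather than a quoted figure, so take the current value from the pricing page; `ConsumptionEstimate` is a hypothetical name.

```csharp
using System;

// Hypothetical estimator for the per-execution part of a Consumption plan bill.
// Resource-consumption (GB-s) charges are intentionally not modeled.
public static class ConsumptionEstimate
{
    // Monthly free grant of executions for the Consumption plan.
    public const long FreeExecutionsPerMonth = 1_000_000;

    // Executions left over once the free grant has been used up.
    public static long BillableExecutions(long executionsPerMonth) =>
        Math.Max(0L, executionsPerMonth - FreeExecutionsPerMonth);

    // pricePerMillion is an input: read the current figure from the pricing page.
    public static decimal ExecutionCharge(long executionsPerMonth, decimal pricePerMillion) =>
        BillableExecutions(executionsPerMonth) / 1_000_000m * pricePerMillion;
}
```

For example, 3 million executions in a month leave 2 million billable executions after the grant, while 500,000 executions stay entirely within it.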

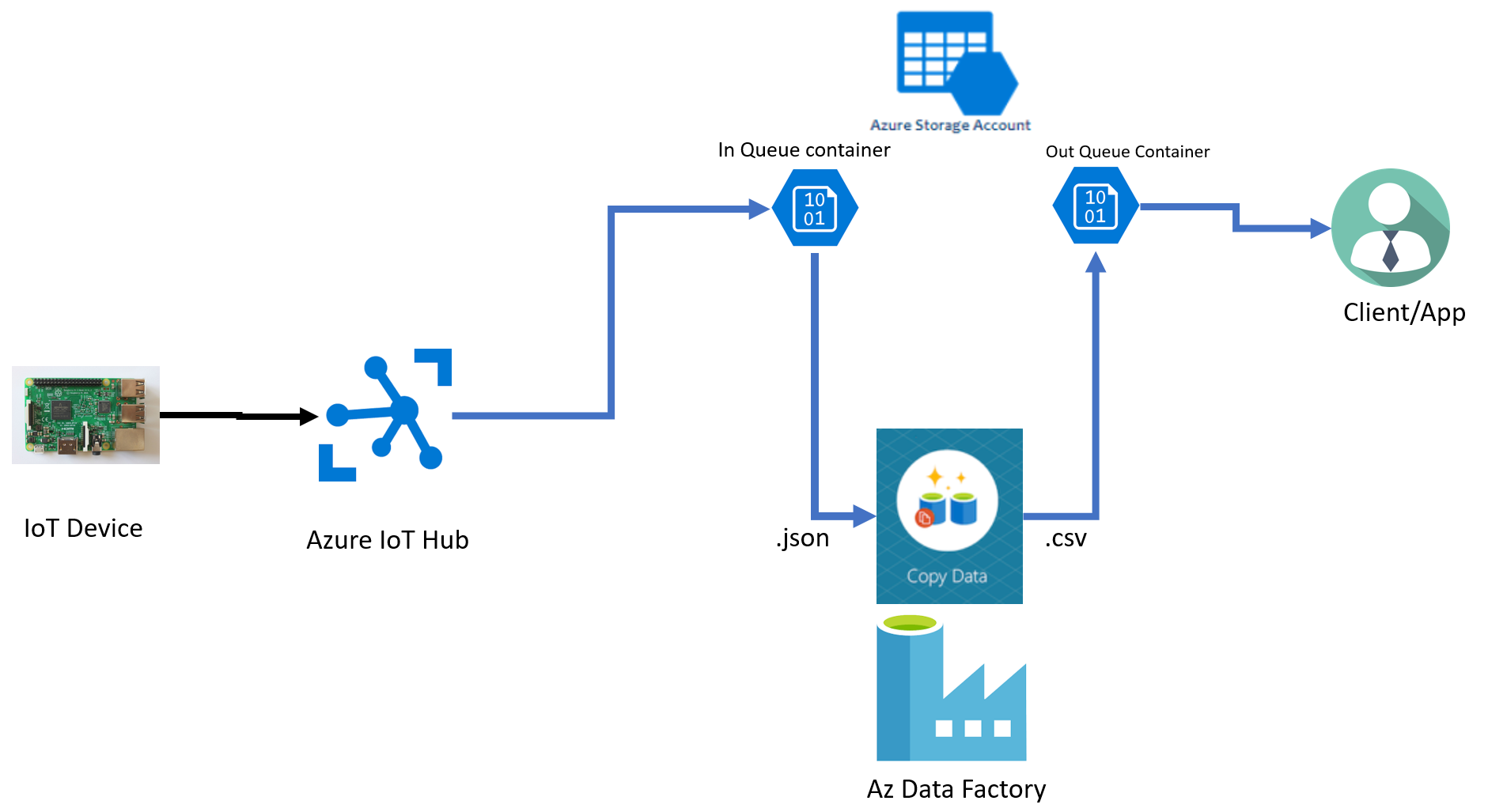

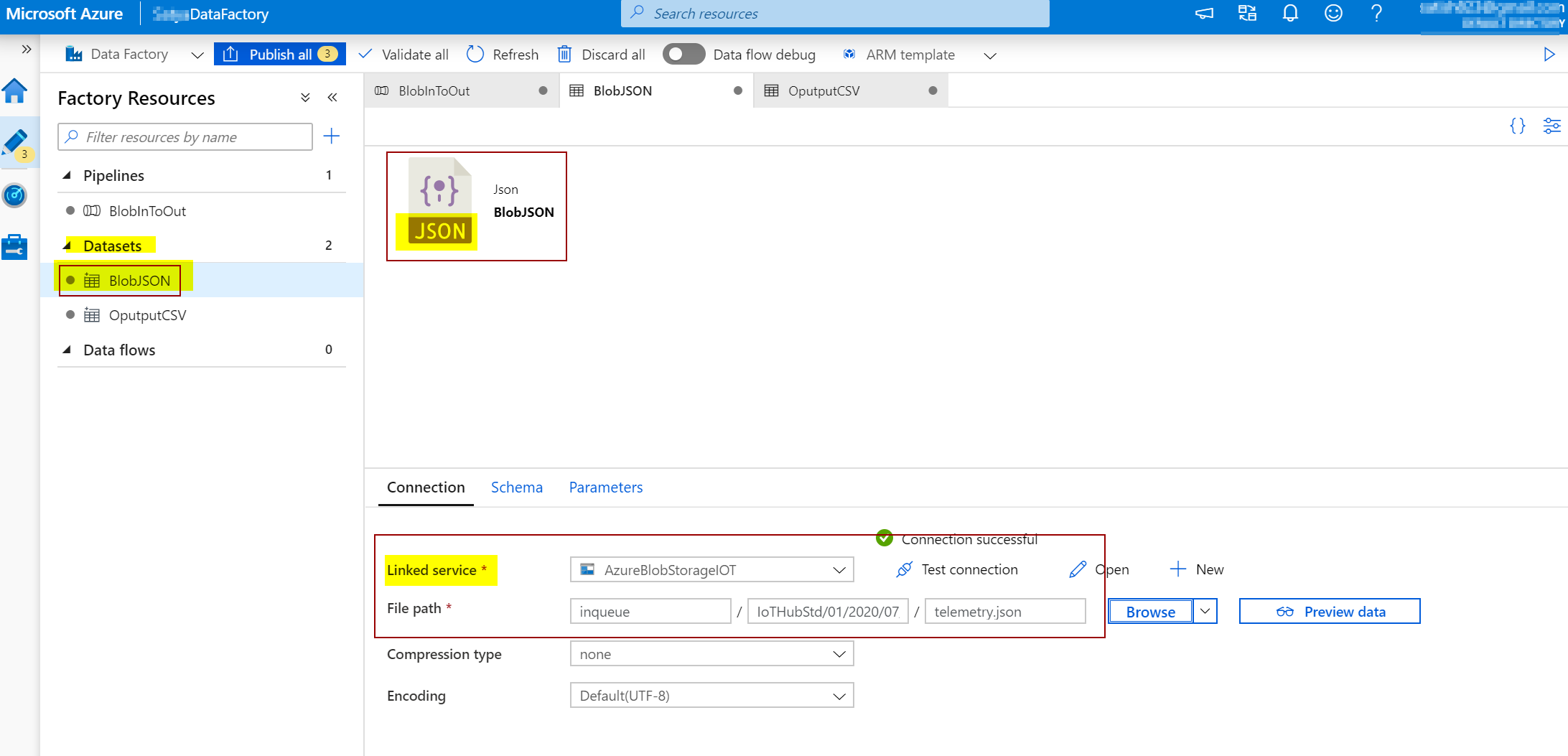

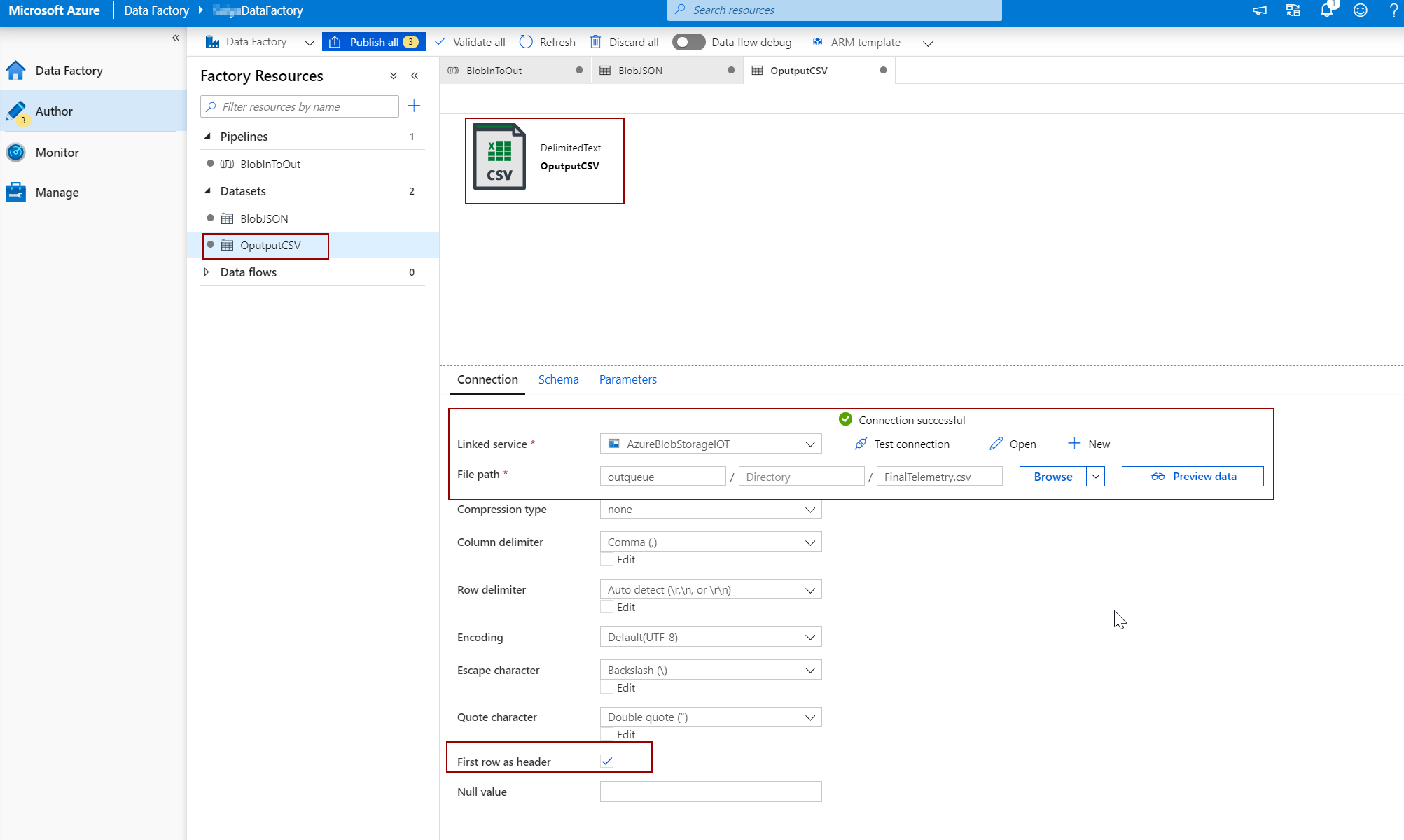

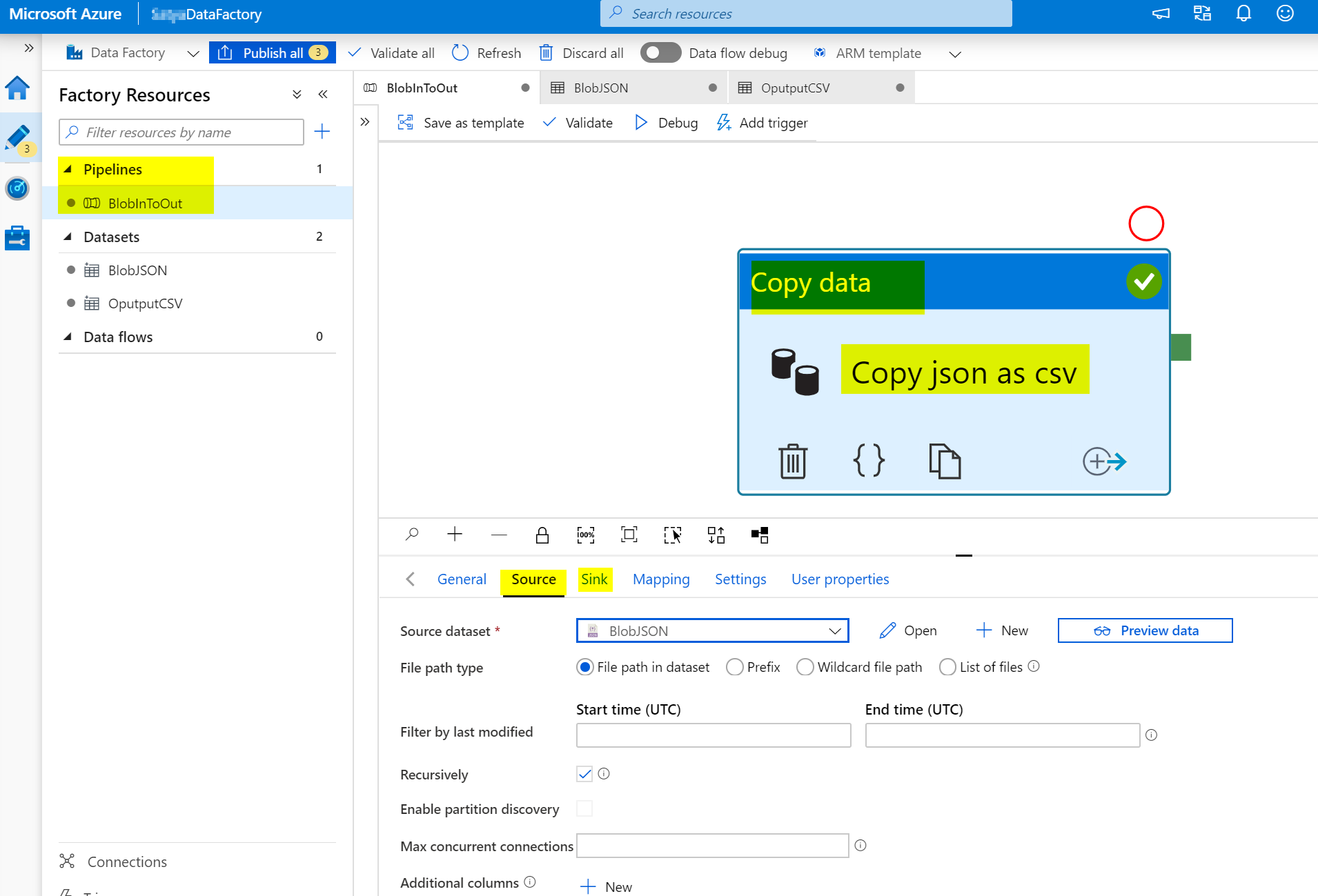

II. Azure Data Factory. Please have a look at Quickstart: Create a data factory by using the Azure Data Factory UI, along with its pricing page. This approach is very quick to implement and lets you see the end-to-end (E2E) flow without spending much time on coding.

Quick Steps:

1. Create the In and Out datasets (the source and sink datasets).
2. Create a pipeline to copy data, using the source and sink datasets.
3. Run/debug the pipeline and check the In and Out storage blob containers.

Please let us know if you need further help in this matter.