Azure Function Apps: Performance Considerations

While working with Azure Function Apps, we often come across performance issues where the rate of message processing, or the rate at which API calls are served, is nowhere near what we expect.

Before we start digging into these issues, I would like to mention that no service or product is perfect. As a product grows, it needs more input to keep improving. Even after following the best practices, an application may still face issues, but Microsoft is always open to feedback, and that's how we grow and keep improving.

This blog assumes basic knowledge of Azure Function Apps.

Let's discuss the commonly faced performance issues one by one.

When we talk about performance, it's commonly about, but not limited to:

- High CPU Consumption

- High Memory Consumption

- Port/Outbound Socket Consumption

- Number of Threads Being Spawned

- Number of Pending Requests in the HTTP Pipeline

When creating a Function App, keep in mind that you cannot move it from the Consumption plan to an App Service plan, or vice versa, once it has been created. If you need the other hosting plan, you have to delete the Function App and recreate it on that plan.

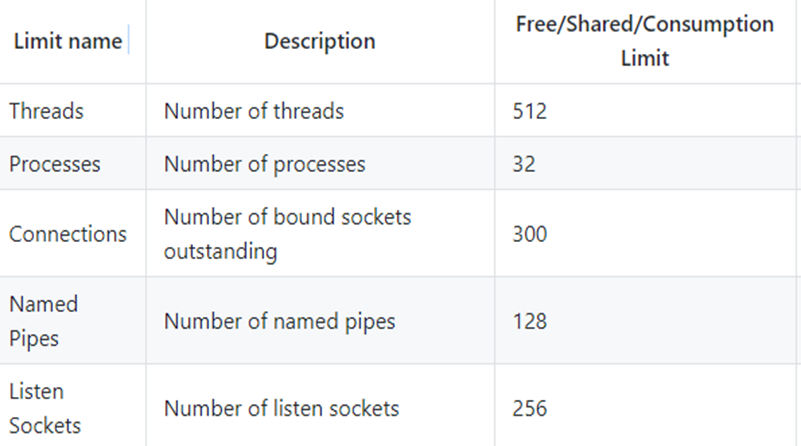

The Consumption plan takes care of scaling out whenever there is a need. In simple terms, an instance keeps up as long as its processing rate (output) is at least the incoming rate (input); scale-out happens when this no longer holds, that is, when input exceeds output. We usually face these performance issues on the Consumption plan, where we have no control over scaling up or scaling out. In addition, the VM specs are fairly limited on the Consumption plan: one instance running the Function App gets up to 1.5 GB of RAM and 1 CPU core, which is not too great, I would say. The other limits are:

We should design and develop our Function App to avoid intensive tasks that consume a lot of CPU or memory, spawn a large number of threads, or open a large number of outbound socket connections.

Once the application's usage exceeds these limits, the message processing rate drops; the scale controller then adds a new instance, and the load is distributed across instances to keep up the processing rate.

However, it takes at least 10 seconds to add a new instance before events start being load-balanced onto it.

HTTP triggers in particular take a bit longer to add instances. So when running load tests, we may see requests take longer to complete at first, because they all land on a single instance and the HTTP pipeline grows. Once new instances are added, processing speeds up, and within 20-30 minutes a high load (10K requests) settles.
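The scale-out lag described above can be illustrated with a toy model. It assumes (hypothetically) a constant incoming rate, a fixed throughput per instance, and one new instance added after a 10-second wait whenever input exceeds output. The real scale controller uses its own heuristics, so `simulate_backlog` and its numbers are illustrative only.

```python
def simulate_backlog(arrivals_per_sec, per_instance_rate, seconds,
                     scale_delay_sec=10, max_instances=10):
    """Toy model of scale-out lag (hypothetical; not the real scale controller).

    Returns a list of (second, instance_count, backlog) tuples.
    """
    instances = 1
    backlog = 0
    waiting_since = None   # second at which input first exceeded output
    history = []
    for t in range(seconds):
        capacity = instances * per_instance_rate
        # Work that cannot be processed this second piles up in the backlog.
        backlog = max(0, backlog + arrivals_per_sec - capacity)
        if arrivals_per_sec > capacity and instances < max_instances:
            # Input exceeds output: scale out, but only after the delay.
            if waiting_since is None:
                waiting_since = t
            elif t - waiting_since >= scale_delay_sec:
                instances += 1
                waiting_since = None
        else:
            waiting_since = None
        history.append((t, instances, backlog))
    return history


history = simulate_backlog(arrivals_per_sec=100, per_instance_rate=30, seconds=120)
peak = max(backlog for _, _, backlog in history)
print("peak backlog:", peak, "final state:", history[-1])
# peak backlog: 1320 final state: (119, 4, 0)
```

The backlog keeps growing while each new instance is still pending, and only starts to drain once total capacity exceeds the incoming rate, which matches the "slow at first, then settles" pattern seen in load tests.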

This was about the Consumption plan.

With an App Service plan, we choose the VM specs our application runs on. With features like autoscaling, we control when scaling is triggered and which VM size to use. For Standard tier plans and above, there is also the option of using Traffic Manager to distribute the load.
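As a rough illustration of the control autoscaling gives you, the sketch below evaluates a simplified rule of the same general shape as an App Service autoscale rule: a metric (here, average CPU), thresholds, and minimum/maximum instance counts. `ScaleRule`, `next_instance_count` and the threshold values are hypothetical and not Azure defaults or an Azure API; real rules also involve time windows and cooldowns.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class ScaleRule:
    # Illustrative values only, not Azure defaults.
    scale_out_above_cpu: float = 70.0   # average CPU % that triggers scale-out
    scale_in_below_cpu: float = 30.0    # average CPU % that triggers scale-in
    min_instances: int = 1
    max_instances: int = 5


def next_instance_count(rule: ScaleRule, cpu_samples: list[float], current: int) -> int:
    """Return the instance count after evaluating one window of CPU samples."""
    avg = mean(cpu_samples)
    if avg > rule.scale_out_above_cpu:
        return min(current + 1, rule.max_instances)   # add one, up to the cap
    if avg < rule.scale_in_below_cpu:
        return max(current - 1, rule.min_instances)   # remove one, down to the floor
    return current


rule = ScaleRule()
print(next_instance_count(rule, [82.0, 91.0, 77.0], current=2))  # 3
```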

This was about the VM and how to manage or effectively utilize the VM resources.

While building the application, also consider the connections the Function App makes during processing to other services, inside or outside Azure. On the Consumption plan, the limit on the number of outbound socket connections alive at any point in time is 300. That seems low; however, scale-out happens (indirectly) when this limit is reached.

Let's see how this works.

Suppose a Function App (Consumption plan) running on one instance makes 400 connections to another service, such as SQL or Cosmos DB, for a single user request. In this case, the automatic scale-out on the Consumption plan will not help, because all of those outgoing connections come from the same worker process on the same instance. After scale-out, each instance has its own worker process, each worker process makes 400 calls to the external service, and every worker process on every instance starts failing with the same issue.

Consumption plan scaling does work in a scenario where the Function App itself receives 400 requests and every function call makes one connection to the external service. If we start with one instance receiving all 400 calls, it cannot process more than 300 of them at once (it makes 300 connections to the external service and consumes all 300 ports), so the Consumption plan scales out and adds a new instance to serve the rest of the calls and balance the further load.
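The arithmetic behind these two scenarios can be sketched as follows. It assumes each connection stays open for the whole request, requests are spread evenly across instances, and it uses the 300-connection figure quoted above. `instances_needed` is a hypothetical helper, not part of any Azure SDK.

```python
import math
from typing import Optional

# Per-instance outbound connection limit quoted in this post for the Consumption plan.
OUTBOUND_LIMIT = 300


def instances_needed(concurrent_requests: int, connections_per_request: int,
                     limit: int = OUTBOUND_LIMIT) -> Optional[int]:
    """Minimum instances so that no instance exceeds `limit` open connections.

    Returns None when a single request already needs more than `limit`
    connections, because scale-out cannot help in that case.
    """
    if connections_per_request < 1:
        raise ValueError("connections_per_request must be at least 1")
    if connections_per_request > limit:
        return None
    requests_per_instance = limit // connections_per_request
    return math.ceil(concurrent_requests / requests_per_instance)


print(instances_needed(1, 400))   # None: one request, 400 connections
print(instances_needed(400, 1))   # 2: 400 requests, one connection each
```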

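Along with these considerations, we can enable concurrent/parallel processing of messages through the respective trigger properties in the Function App's host.json file. The settings below use the Functions 1.x host.json layout; in Functions 2.x and later, trigger settings move under an "extensions" section and some names and formats change. These are: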

```json
{
  "eventHub": {
    "maxBatchSize": 64,
    "prefetchCount": 256,
    "batchCheckpointFrequency": 1
  },
  "http": {
    "routePrefix": "api",
    "maxOutstandingRequests": 20,
    "maxConcurrentRequests": 10,
    "dynamicThrottlesEnabled": false
  },
  "queues": {
    "maxPollingInterval": 2000,
    "visibilityTimeout": "00:00:30",
    "batchSize": 16,
    "maxDequeueCount": 5,
    "newBatchThreshold": 8
  },
  "serviceBus": {
    "maxConcurrentCalls": 16,
    "prefetchCount": 100,
    "autoRenewTimeout": "00:05:00"
  }
}
```
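To see what two of these settings mean in practice, the sketch below reads the values from the host.json above. For queue triggers, the documented maximum number of messages processed concurrently per function on one instance is batchSize plus newBatchThreshold. For HTTP, maxConcurrentRequests caps parallel executions and maxOutstandingRequests caps executing plus waiting requests, with extra requests rejected with a 429. `http_admission` is a simplified, hypothetical model that assumes requests fill execution slots before they queue.

```python
import json

# The relevant 1.x host.json values from above (other settings omitted).
HOST_JSON = """
{
  "http": {"maxOutstandingRequests": 20, "maxConcurrentRequests": 10},
  "queues": {"batchSize": 16, "newBatchThreshold": 8}
}
"""


def max_concurrent_queue_messages(host: dict) -> int:
    # batchSize messages are fetched; a new batch is fetched once the number
    # in progress drops to newBatchThreshold, so the peak is their sum.
    q = host["queues"]
    return q["batchSize"] + q["newBatchThreshold"]


def http_admission(host: dict, outstanding: int) -> str:
    """Simplified model: fate of the next HTTP request, given how many are
    already outstanding (executing or waiting) on the instance."""
    h = host["http"]
    if outstanding >= h["maxOutstandingRequests"]:
        return "rejected (429)"   # over the outstanding limit
    if outstanding >= h["maxConcurrentRequests"]:
        return "queued"           # waits for an execution slot
    return "executing"


host = json.loads(HOST_JSON)
print(max_concurrent_queue_messages(host))  # 24
for n in (0, 10, 20):
    print(n, http_admission(host, n))
```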

When connecting to external services, it's generally advisable to use connection pooling, although whether it fits depends on the scenario you are working on.

Combined, the considerations discussed above, the concurrent processing properties, and connection pooling can significantly improve Function App performance.
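A minimal, generic connection-pool sketch, assuming a module-level object lives for as long as the worker process stays warm and is shared by every invocation on that instance. Reused connections count once against the outbound limit instead of once per invocation. `ConnectionPool`, `open_connection` and `handle_request` are hypothetical names; real database drivers and HTTP clients usually ship their own pooling, which is normally preferable to writing your own.

```python
import queue
import threading
from contextlib import contextmanager


class ConnectionPool:
    """Minimal pool sketch: hands out idle connections before opening new ones."""

    def __init__(self, factory, max_size):
        self._factory = factory          # callable that opens a new connection
        self._max_size = max_size        # never open more than this many
        self._idle = queue.LifoQueue()   # released connections, ready for reuse
        self._created = 0
        self._lock = threading.Lock()

    @property
    def created(self):
        return self._created

    def _acquire(self):
        try:
            return self._idle.get_nowait()   # reuse an idle connection if one exists
        except queue.Empty:
            pass
        with self._lock:
            if self._created < self._max_size:
                conn = self._factory()       # open a new one while under the cap
                self._created += 1
                return conn
        return self._idle.get()              # at the cap: wait for a release

    @contextmanager
    def connection(self):
        conn = self._acquire()
        try:
            yield conn
        finally:
            self._idle.put(conn)             # return it to the pool instead of closing


# Hypothetical stand-in for opening a real database or HTTP connection.
opened = []


def open_connection():
    opened.append(object())
    return opened[-1]


# Module level: created once per worker process, shared by all invocations.
pool = ConnectionPool(open_connection, max_size=10)


def handle_request(payload):  # hypothetical function body
    with pool.connection() as conn:
        return conn, payload


for i in range(100):
    handle_request(i)
print(pool.created)  # 1
```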

Before ending the blog, I want to share a couple of important points worth keeping in mind when comparing the performance of a Function App on the Consumption plan vs. an App Service plan vs. the same code in a web app on a VM:

- The compute power of these three kinds of VMs differs.

- If we run the same code in a Function App, on an App Service plan, and in an application on a VM with a similar configuration (RAM and CPU), we will observe a difference in computing power, because the underlying VMs can be of different series. Web apps use A-series VMs, which are meant for moderate loads. When we choose the VM ourselves, we can pick any series we want, and we usually end up choosing a D-series VM with high computing power. Here is an article to go through all the series of VMs available on Azure.

I hope this gives you all a little more insight into dealing with Azure Function App performance.

Comments

- Anonymous

April 15, 2018

It's worth noting that the scale characteristics of HTTP triggered functions on the consumption plan have been improved significantly since the original implementation. See this post for a recent discussion of this: https://www.azurefromthetrenches.com/azure-functions-significant-improvements-in-http-trigger-scaling/