Why is using “Instant File Initialization” on my SQL Server a potential security issue?

Starting in SQL Server 2005 and onward, data files can be “instantly” initialized. This helps to drastically reduce the amount of time required for creating large databases, adding files to an existing database, increasing the size of an existing data file (i.e. an auto-grow operation), or restoring a database or filegroup. I have seen auto-grow operations take more than 90 seconds to complete and log warnings into the SQL Errorlog due to the length of time it took for the file growth. During this time, the data file is locked and unavailable to users. Normally when a file is created or extended, it is first initialized by filling it with zeroes for security reasons. By enabling “Instant File Initialization”, that time is saved because disk space previously used by deleted files is reclaimed without writing zeros to them. However, for customers under strict security standards or regulatory compliance, this can be a security issue. The problem with this is that it allows someone with access to the system to “discover” previously deleted data since it was not zero-initialized when it was reclaimed. The operating system simply removes the references in the Master File Table (MFT) to the clusters containing the data when a file is deleted, it does not fill that space with zeroes at that time.

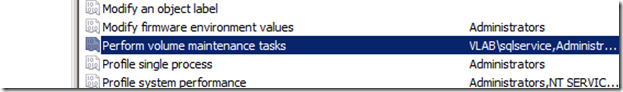

This new feature is provided by a new security right from the operating system. It was first available in Windows 2003. The new security privilege can be found through the Local Security Policy on your Windows 2003/2008 server, and it is called “Perform Volume Maintenance Tasks” or SeManageVolumePrivilege. To enable this feature in SQL Server, just grant the service account for SQL Server this right through the Local Security Policy snap-in. More information on this feature can be found here:

Database File Initialization

https://msdn.microsoft.com/en-us/library/ms175935(SQL.90).aspx

Many articles have been posted discussing the benefits of this feature and how it also imposes a security problem, but exactly how is this a security problem? How can a person read that data if the file has been deleted? In this post, we’re going to find out…

First, we need to verify we have the correct privilege assigned to our service account VLAB\sqlservice:

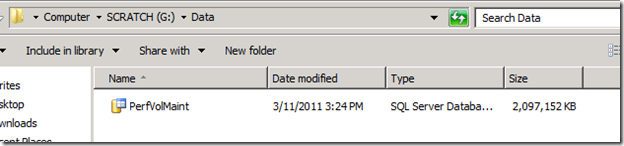

So the service account has the necessary rights to use instant file initialization in SQL Server. To make things simple for the demo, let’s start with a blank, freshly formatted 4GB VHD hard drive attached to my SQL 2008 R2 virtual machine so we can have nice contiguous files on the file system. We then create a simple, empty 2GB database named “PerfVolMaint_Test” with the data file on our new drive (drive G:) in a “data” directory and the log file in the default SQL installation data folder (note: log files do not support instant file initialization):

CREATE DATABASE [PerfVolMaint_Test]

ON

( NAME = PerfVolMaint,

FILENAME = 'G:\Data\PerfVolMaint.mdf',

SIZE = 2048,

MAXSIZE = 4096,

FILEGROWTH = 100 )

LOG ON

( NAME = PerfVolMaint_Log,

FILENAME = 'C:\Program Files\Microsoft SQL Server\MSSQL10_50.MSSQLSERVER\MSSQL\DATA\PerfVolMaint_Log.ldf',

SIZE = 250,

MAXSIZE = 1024,

FILEGROWTH = 50 )

GO

To read this old deleted data directly off the disk, we are going to be using the DBCC PAGE command. Data files are made of database pages (of different types) that are all 8KB (8192 bytes) in SQL Server. The pages are always in physical order in the file by the Page ID. So, you might be wondering about indexes? Indexes are B-tree structures that are constructed through links on each page that point to the previous and next page in the index structure. Thus when the physical order of pages in the file does not mimic the logical order of pages described by an index, we have fragmentation. For our demo here, we need to simply know the last page in the file – even though that page is not yet allocated to an object. Since they are numbered, the last page will be the highest numbered page. We can determine this by taking the size of the file in bytes on the disk and dividing it by the size of a page:

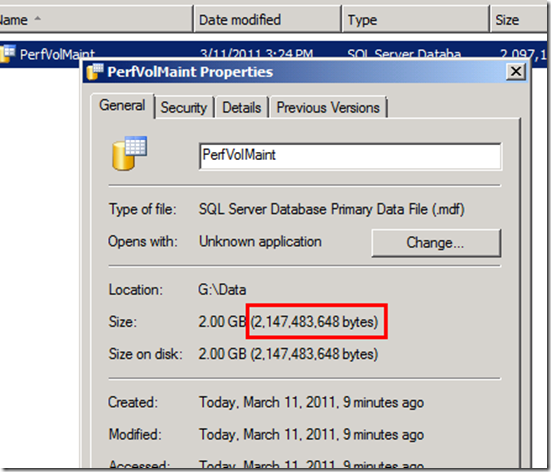

so 2,147,483,648 bytes total / 8192 bytes per page = 262,144 pages. Using the DBCC PAGE command:

DBCC TRACEON(3604)

GODBCC PAGE (5, 1, 262144, 2)

GO

Gives us the following error since no page is found:

Msg 8968, Level 16, State 1, Line 1

Table error: DBCC PAGE page (1:262144) (object ID 0, index ID 0, partition ID 0, alloc unit ID 0 (type Unknown)) is out of the range of this database.

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

This number would actually represent the next page since the first page would actually begin at an offset of 0 bytes. So if we back it up one:

DBCC PAGE (5, 1, 262143, 2)

GO

We get a nice empty page from DBCC PAGE:

Page @0x0000000009970000

m_pageId = (0:0) m_headerVersion = 0 m_type = 0

m_typeFlagBits = 0x0 m_level = 0 m_flagBits = 0x0

m_objId (AllocUnitId.idObj) = 0 m_indexId (AllocUnitId.idInd) = 0 Metadata: AllocUnitId = 0

Metadata: PartitionId = 0 Metadata: IndexId = -1 Metadata: ObjectId = 0

m_prevPage = (0:0) m_nextPage = (0:0) pminlen = 0

m_slotCnt = 0 m_freeCnt = 0 m_freeData = 0

m_reservedCnt = 0 m_lsn = (0:0:0) m_xactReserved = 0

m_xdesId = (0:0) m_ghostRecCnt = 0 m_tornBits = 0Allocation Status

GAM (1:2) = NOT ALLOCATED SGAM (1:3) = NOT ALLOCATED PFS (1:258816) = 0x0 0_PCT_FULL

DIFF (1:6) = NOT CHANGED ML (1:7) = NOT MIN_LOGGEDDATA:

Memory Dump @0x000000004E43A000

000000004E43A000: 00000000 00000000 00000000 00000000 †................

000000004E43A010: 00000000 00000000 00000000 00000000 †................

000000004E43A020: 00000000 00000000 00000000 00000000 †................

000000004E43A030: 00000000 00000000 00000000 00000000 †................

000000004E43A040: 00000000 00000000 00000000 00000000 †................

000000004E43A050: 00000000 00000000 00000000 00000000 †................

Notice how the highlighted region is all zeros – a blank page read from our newly formatted, zero filled drive. Also, our database has 262,143 pages in it – all empty except for meta data pages. Since 262,144 will be the next page when the file grows, that will be the page we want to check to read our deleted data once it is there.

First we must put some data on the drive and delete it in order to be able to read it with the DBCC PAGE command. We could create a simple file in hopes it would be placed adjacently on the disk with the SQL Server data file. Then we could delete that file, hoping that Windows would use these clusters with deleted data over new zeroed out clusters when SQL Server requests more disk space to expand the data file. However, a couple of tests with this method showed that Windows does not seem to allocate the new space for the SQL data file (when growing or extending the data file) directly from the clusters adjacent to the end of the SQL data file if those clusters previously contained data (the old deleted data was not found when doing this with a small file) . To ensure we re-use disk space (clusters) that was previously used by another file – we’ll simply fill up the drive with one large file that has a unique string written to it over and over that we can identify – and then delete that file.

So now we need to figure out how to exactly fill the drive with our string. So we can start with a basic text file in notepad. Right now, we need to generate a file close to 2GB – that’s a lot of typing! We’ll need to automate the file generation. For our string, we will use the word “private!” – so we can easily identify we are reading our old, deleted data. Using the following PowerShell script, we can generate the file with ease:

#query to get reference to our drive

$drive = get-wmiobject -query "Select * from win32_logicaldisk

where DeviceID = 'G:'"#get amount of freespace

$drSize = $drive.FreeSpace#set our unique string

$secretString = "private!"#get size of our secret string

$ssSize = $secretString.Length;#find the scale of the difference in size between freespace and string size

$scale = $drSize/$ssSize;#create string builder to build up our write block

$builder = new-object System.Text.StringBuilder#build the secretString up to 64KB writes for quicker file growth

for ($i = 0; $i -lt (65536/$ssSize); $i++)

{

$builder.Append($secretString)

}#set $secretString to the new string

$secretString = $builder.ToString();

$secretString.Length;#open the file

$file = new-object System.IO.StreamWriter "g:\data\bigfile.txt", True;#write out in 64KB blocks until we’ve filled the drive and attempt to close file – file close may fail if the drive is FULL.

try

{

for ($i=0;$i -le ($drSize/65536);$i++)

{

$file.WriteLine($secretString);

$i * $strSize;

}

$file.close();

}

catch [Exception ex]

{

$file.close();

}

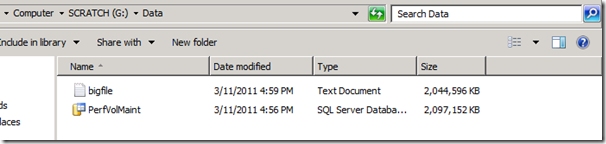

The script writes out in 64KB blocks to fill the file quickly and hopefully within 64KB of the drive’s capacity. SQL Server will always grow a file on an extent boundary, which is also a 64KB block. We now have a large 2GB file with “private!” written in it over and over:

Now, we need to just delete the file. After the file has been deleted, we need SQL Server to grow the main data file for the database and claim some of this previously used disk space. To do so, we just grow it manually:

ALTER DATABASE [PerfVolMaint_Test]

MODIFY FILE

(NAME = PerfVolMaint,

SIZE = 2148MB);

GO

This adds roughly 100MB to our file which we claim from disk space previously used by the big text file we deleted. Now we just need to read that very next page which previously returned an error:

DBCC PAGE (5, 1, 262144, 2)

GO

And we get the following:

Page @0x0000000009C96000

m_pageId = (29296:560297057) m_headerVersion = 97 m_type = 116

m_typeFlagBits = 0x65 m_level = 33 m_flagBits = 0x7270

m_objId (AllocUnitId.idObj) = 560297057 m_indexId (AllocUnitId.idInd) = 30313

Metadata: AllocUnitId = 8532387688658042880 Metadata: PartitionId = 0

Metadata: IndexId = -1 Metadata: ObjectId = 0 m_prevPage = (29296:560297057)

m_nextPage = (29296:560297057) pminlen = 30313 m_slotCnt = 30313

m_freeCnt = 29296 m_freeData = 30313 m_reservedCnt = 30313

m_lsn = (560297057:1986622064:29793) m_xactReserved = 8549 m_xdesId = (29793:1986622064)

m_ghostRecCnt = 8549 m_tornBits = 1986622064Allocation Status

GAM (1:2) = NOT ALLOCATED SGAM (1:3) = NOT ALLOCATED PFS (1:258816) = 0x0 0_PCT_FULL

DIFF (1:6) = NOT CHANGED ML (1:7) = NOT MIN_LOGGEDDATA:

Memory Dump @0x000000004E43A000

000000004E43A000: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A010: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A020: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A030: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A040: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A050: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A060: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A070: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A080: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A090: 61746521 70726976 61746521 70726976 †ate!private!priv

000000004E43A0A0: 61746521 70726976 61746521 70726976 †ate!private!priv

In the dump of the page, we can see our string (private!) that was written to the text file. We’ve recovered or discovered this data which we ordinarily wouldn’t have access to – and perhaps shouldn’t have access to. Furthermore, since this is from a deleted file whose disk space (NTFS clusters) have been given to or claimed by SQL Server, we are able to freely read through this data without being stopped by NTFS security. This could easily be another database file or any other type of file where the data is not encrypted.

Therefore, if you have sensitive data stored on your system, you may want to consider letting the system continue to initialize SQL data pages with zeros – and always encrypt any sensitive data while it is at rest on the disk!

-Jay