Script Component Recompile in SQL Server 2012 Integration Services (SSIS) to refresh metadata

In SQL Server releases 2005-2008 R2 there is a programmatic approach to be able to dynamically change dataflow columns and their data types in a dataflow task from the SSIS programmatic APIs, and have a Script Component still work magically (most of the time).

In Microsoft Customer Service and Support we saw this from time to time, at this was normally fine, but in the case where the metadata changes significantly, such as by changing a data type or changing the order or number of columns, the Script Component would start to misbehave since it may touch the wrong column or wrong data type and leave the data flow buffers in an unexpected state.

Pseudo code example of the problem

Time 1: Original SSIS package design with a Dataflow task having 3 columns: ColA ColB ColC

Script Component in that Dataflow: Col(ColA).value=1; Col(ColB).value=2, Col(ColC).value=3

Then later someone changes the SSIS package design programmatically to introduce new columns.

Time 2: change inputs metadata to ColA, ColC, ColD

Then the Dataflow Script Component could incorrectly do things like use the ordinal position of columns and change the values incorrectly.

Col(ColA).value=1; Col(ColC).value=2, Col(ColD).value=3

This, above could cause data corruption too since you might be writing wrong types. If you put extra numbers of columns, or less numbers of columns, there are some unintended side effects.

So let’s prevent corruption and unexpected values by giving a true error in SSIS 2012

Therefore, the red flag went off in my mind after seeing this a couple of times, and I requested the product team to investigate a way to be sure that SSIS Dataflow buffers match what the Script Component expected when it was designed with the VSTA code editor. The solution is to have a verification mechanism that remembers the original metadata and if the column metadata changes since the task, and raise an error MetadataCheckumMisMatch when the metadata is known to be changed/mismatched.

Error: "The component metedata is out of sync with the compiled script. Recompile the script using the Script Component Editor."

Error: "Script Source" failed validation and returned validation status "VS_ISBROKEN".

Note the error is misspelled in the product – yes I know.

Therefore, in SQL 2012, if you programmatically edit the dataflow task design and change the input or output metadata which is hooked up to a Script Component (as a source, as a transformation, or as a destination) then you may see this error and you will wonder how to fix the problem.

To recompile the Script Component programmatically in SSIS 2008 – R2 we have these posts to help us

It was not well supported to do Script Component programmatically in SQL 2008, since you could not compile the script dynamically- the only supported way was is to open BIDS and click the Script Editor button.

There was a way to show the VSTA IDE and save the contents, which could be done programmatically. We blogged the workaround in SQL 2008 days

- https://dougbert.com/blog/post/Recompile-VSTA-scripts-programmatically-in-SSIS.aspx

- https://dougbert.com/blog/post/adding-a-vsta-script-task-programmatically.aspx

The gist is showing the IDE window, and closing it again automatically saved and recompiled the script code into binary code that gets saves into the SSIS package tags.

comp.ShowIDE();

comp.CloseIDE();

How to Recompile a Script Component programmatically in SSIS 2012

In SQL Server 2012 SSIS the script design was reworked to use a newer approach using the VSTA 3.0 editor, so there are a few differences now in how to open the Script and effectively recompile it with the current metadata values. This code sample shows how to take an existing package (.dtsx in this approach) and loop over all tasks to find the dataflow tasks, and then find all the script components within, to then open and save the code fresh, so that the script code gets the current dataflow pipeline metadata (to avoid the metadata is out of sync error). The highlighted lines are the meaty bits of this code. I don’t claim to be a developer, so please watch out for any bugs I may introduce with this code sample.

This sample is a C# for Visual Studio 2010 .Net 4.0

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using Microsoft.SqlServer.Dts.Design;

using Microsoft.SqlServer.Dts.Runtime;

using Microsoft.SqlServer.Dts.Pipeline.Wrapper;

using Microsoft.SqlServer.Dts.Pipeline;

using Microsoft.SqlServer.VSTAHosting;

using Microsoft.SqlServer.IntegrationServices.VSTA;

/* References

* // General SSIS references

* C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.Dts.Design\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.Dts.Design.dll

* C:\Program Files (x86)\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.SqlServer.DTSPipelineWrap.dll

* C:\Program Files (x86)\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.SQLServer.DTSRuntimeWrap.dll

* C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.PipelineHost\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SQLServer.PipelineHost.dll

* C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.ScriptTask\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.ScriptTask.dll

* C:\Program Files (x86)\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.SQLServer.ManagedDTS.dll

* // Script related references

* C:\Program Files (x86)\Microsoft SQL Server\110\DTS\PipelineComponents\Microsoft.SqlServer.TxScript.dll

* or C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.TxScript\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.TxScript.dll

* C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.VSTAScriptingLib\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.VSTAScriptingLib.dll

* C:\Windows\Microsoft.NET\assembly\GAC_MSIL\Microsoft.SqlServer.IntegrationServices.VSTA\v4.0_11.0.0.0__89845dcd8080cc91\Microsoft.SqlServer.IntegrationServices.VSTA.dll

*/

namespace RunFromClientAppCS

{

class Program

{

static void Main(string[] args)

{

string pkgLocation;

Package pkg;

Application app;

DTSExecResult pkgResults;

pkgLocation =

@"c:\folder\Package.dtsx";

app = new Application();

pkg = app.LoadPackage(pkgLocation, null);

try

{

TaskHost th = (TaskHost)pkg.Executables["Data Flow Task"];

Executables pExecs = pkg.Executables;

foreach (Executable pExec in pExecs)

{

TaskHost taskHost = (TaskHost)pExec;

Console.WriteLine("Executable name = " + taskHost.Name);

//Test if the task is a data flow

if (taskHost.InnerObject is Microsoft.SqlServer.Dts.Pipeline.Wrapper.MainPipe)

{

//Cast the Executable as a data flow

MainPipe pipe = (MainPipe)taskHost.InnerObject;

// Loop over each object in the dataflow

foreach (IDTSComponentMetaData100 comp in pipe.ComponentMetaDataCollection)

{

Console.WriteLine("Found Component Name = " + comp.Name);

// Find the Script Components

if (comp.Name=="Script Component")

{

//Recompile the the Script Compoentn

CManagedComponentWrapper compWrap = comp.Instantiate();

ScriptComponentHost scriptComp = (compWrap as IDTSManagedComponent100).InnerObject as ScriptComponentHost;

if (!scriptComp.LoadScriptFromComponent())

{

throw new Exception("Failed to load script information from the component");

}

if (scriptComp.CurrentScriptingEngine.VstaHelper == null)

{

throw new Exception("Vsta 3.0 is not installed properly");

}

if (!scriptComp.CurrentScriptingEngine.LoadProjectFromStorage())

{

throw new Exception("Failed to load project files from storage object");

}

if (!scriptComp.SaveScriptProject())

{

throw new Exception("Failed to save project");

}

Console.WriteLine("Recompiled Success: " + comp.Name);

scriptComp.CurrentScriptingEngine.DisposeVstaHelper();

}

Console.WriteLine(" Component Name = " + comp.Name);

}

}

}

}

catch (Exception e)

{

Console.WriteLine(e.Message.ToString());

}

// Run the Package to make sure it works.

pkgResults = pkg.Execute();

Console.WriteLine("Package Execution Result = " + pkgResults.ToString());

Console.ReadKey();

}

}

}

Note: a security Gotcha for the %temp% folder

There is a gotcha which isn’t immediately apparent.

If you try to run this C# code from within a service when the Windows user profile is not loaded, it may fail. The code uses the temp folder for the users profile. Therefore, if you run this code from an SSIS parent package to recompile scripts in a child package, let’s say from a SQL Agent job or from stored procedures, then it may very well fail. The call SaveScriptProject() doesn’t throw an error, it just doesn’t recompile the code.

You can add the proxy account, or the service account to have permissions to the TEMP folder which is failing.

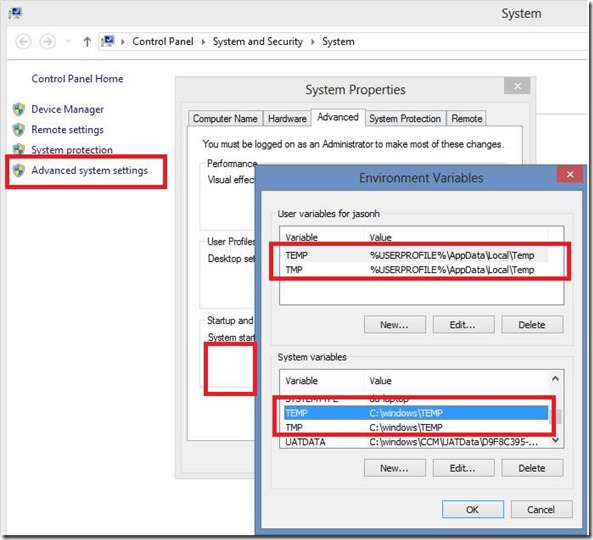

There are generally two environment variables %TEMP% and %TMP%, and two sets – one for the user, and one for the system. If a users profile is not loaded, as is the case when an SSIS job runs as a proxy account user, then the system TEMP may be used.

When the service account for SQL Server launches something, the child process may perceive some system default TEMP folder. If a full user profile is loaded, it would presumably pick the user’s own TEMP folder under C:\Users\<AccountName>\Appdata\Local\Temp. In theory, you could also change the TEMP pointer to point to a different folder if your system admin pushes back. That is the System variables in this case, but that is indeed global and affects all services on the box.

Comments

Anonymous

January 12, 2016

Hi, I get an error of Object reference not set to instance of an object for scriptComp.Anonymous

January 05, 2017

The assembly C:\Program Files (x86)\Microsoft SQL Server\110\SDK\Assemblies\Microsoft.SqlServer.DTSPipelineWrap.dll does not appear to exist on my system causing Microsoft.SqlServer.Dts.Pipeline.Wrapper; not to load. Ultimately I'm trying to accomplish the recompile of all Script Tasks at the root level of a DTSX package (not specifically within Data Flow Tasks), and so perhaps the DTS Wrapper is not needed, but I'm not quite certain how to apply this to our situation...I've attempted to comment out several pieces of the script, pointing it at a test.dtsx package with a single Script Task, but been unsuccessful in iterating through the executable objects in a way that lets me then further process them. I also don't fully understand why we have to cast the executable as a Data Flow ... Considering I don't have that assembly, do I need to be casting the Script Tasks as Script Tasks in order to process them? What about Containers? If I have a container within a container holding a Script Task, must I create catches for those container executable types in order to properly iterated the executables nested one or more levels down?Thanks for posting this, none the less! I feel like it helps put me in the right direction!Anonymous

January 12, 2017

Very helpful. Thank you for sharing. :)Anonymous

January 19, 2018

The comment has been removed