Building an Optimized, Graphics-Intensive Silverlight Application

Seema Ramchandani works on performance as a Program Manager on the Silverlight team. She gave a fast-paced talk at MIX09 covering graphics and media, with helpful tips for performance profiling, debugging and optimization.

Silverlight Rendering Architecture

When building a graphics-rich application, it’s important first to be aware of the underlying architecture of the rendering and media pipelines in Silverlight.

To begin, there is one main execution thread that you need to be careful not to clog when you build your application. The UI thread executes your code directly, along with operating the animation and layout systems. (On older browsers, there’s one UI thread for all tabs in the browser; on newer browsers, we’re starting to see one process per tab.) We spin up separate (non-UI) threads for all other work, such as frame rasterization, media decoding and GPU marshalling.

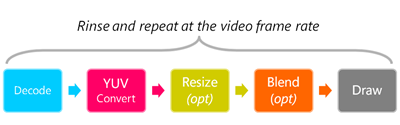

Media is delivered to the end-user through a pipeline: decoding the source, performing YUV-to-ARGB conversion, resizing and blending the output (as appropriate) and finally drawing the resultant pixels on-screen. Silverlight 3 enables the latter steps to be performed by the GPU if you enable hardware acceleration. For maximum throughput, make sure you encode the video at the minimum framerate that you need (typically 18-20fps). To make the most of the software renderer, ensure you are also encoding at the desired size so there is no rescaling required, and minimize the amount of media blending.
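To get a feel for why frame rate and frame size matter in this pipeline, here is a rough sketch of the per-pixel conversion step and the number of pixels it has to process each second. The function names are hypothetical, and the BT.601 video-range coefficients are an assumption for illustration; nothing here says which conversion matrix Silverlight actually uses.

```python
def yuv_to_argb(y, u, v):
    """Convert one 8-bit YUV sample to packed ARGB (assumes BT.601, video range)."""
    def clamp(x):
        return max(0, min(255, int(round(x))))

    c, d, e = y - 16, u - 128, v - 128
    r = clamp(1.164 * c + 1.596 * e)
    g = clamp(1.164 * c - 0.392 * d - 0.813 * e)
    b = clamp(1.164 * c + 2.017 * d)
    # Fully opaque alpha in the top byte, then R, G, B.
    return (0xFF << 24) | (r << 16) | (g << 8) | b


def pixels_per_second(width, height, fps):
    """Pixels that must be converted (and possibly scaled or blended) each second."""
    return width * height * fps


full_rate = pixels_per_second(640, 480, 30)
reduced = pixels_per_second(640, 480, 20)
print(f"{1 - reduced / full_rate:.0%} fewer pixels per second at 20 fps")
```

The work scales linearly with width, height and frame rate, so every frame or pixel you avoid encoding is conversion work the software renderer never has to do.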

One word of caution: most developers have high-spec machines that aren’t representative of their target customers’ devices. When measuring performance, set the MaxFrameRate property on the Silverlight control to an arbitrarily high number (e.g. 10,000 fps) so that the measured speed isn’t capped, and then use the EnableFrameRateCounter (IE-only) and EnableRedrawRegions properties to show, respectively, the rendering speed and the areas being redrawn on each frame.

For maximum performance, there’s no substitute for minimizing the amount of work being asked of the runtime: reduce the size of the objects being rendered; simplify the visual tree; minimize the number of operations required per draw. Lastly, use windowless mode judiciously: it’s expensive because it requires per-frame blending with the underlying background.
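As an illustration of why per-frame blending is costly, here is a minimal sketch of a standard "source over" alpha blend for a single pixel. It is not Silverlight's implementation; the function name and the tuple layout are assumptions made for the example.

```python
def blend_pixel(src_argb, dst_rgb):
    """Blend one (a, r, g, b) source pixel over an opaque (r, g, b) background."""
    alpha = src_argb[0]
    inverse = 255 - alpha
    # One multiply-add per colour channel, with rounding, for every pixel.
    return tuple(
        (s * alpha + d * inverse + 127) // 255
        for s, d in zip(src_argb[1:], dst_rgb)
    )


# A half-transparent white pixel over black comes out mid-grey.
print(blend_pixel((128, 255, 255, 255), (0, 0, 0)))
```

In windowless mode this kind of arithmetic has to run across the whole plug-in area on each frame, which is why it is worth enabling only when the page design really needs it.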

Also, be clear from the start of development about your target goals: how many objects, elements and animations you’re expecting in the final project. Watch for bloat in the XAML: for instance, it’s easy for tools to insert tens of keyframes that don’t make any visual difference.
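One way to spot keyframes that make no visual difference is to check whether a keyframe already lies on the straight line between its neighbours. The sketch below assumes linearly interpolated keyframes given as (time, value) pairs with strictly increasing times; the function names and the tolerance are hypothetical, and it does not read or write XAML.

```python
def interpolate(start, end, t):
    """Value at time t on the straight line between two (time, value) keyframes."""
    (t0, v0), (t1, v1) = start, end
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def prune_keyframes(frames, tolerance=1e-6):
    """Drop interior keyframes that linear interpolation would reproduce anyway."""
    if len(frames) <= 2:
        return list(frames)
    kept = [frames[0]]
    pending = []  # keyframes skipped since the last one we kept
    for current, following in zip(frames[1:-1], frames[2:]):
        candidates = pending + [current]
        redundant = all(
            abs(v - interpolate(kept[-1], following, t)) <= tolerance
            for t, v in candidates
        )
        if redundant:
            pending = candidates
        else:
            # The skipped frames were checked against the line ending here.
            kept.append(current)
            pending = []
    kept.append(frames[-1])
    return kept


frames = [(0, 0), (1, 10), (2, 20), (3, 30), (4, 0)]
print(prune_keyframes(frames))
```

In this example the keyframes at times 1 and 2 sit exactly on the ramp from 0 to 30, so only the start, the peak at time 3 and the end survive.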

Silverlight Profiling with XPerf

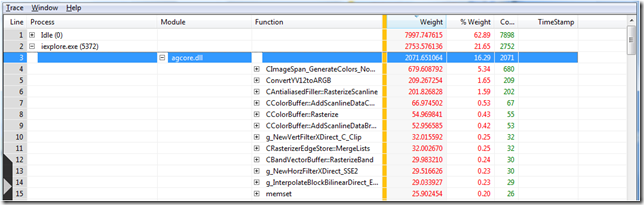

For profiling, one of the best tools is XPerf, a long-standing internal Windows performance analysis tool that has recently been released externally as part of the Windows SDK. It uses Event Tracing for Windows (ETW) to analyze the call stack, taking advantage of the fact that both Silverlight and the CoreCLR have embedded ETW events. XPerf can give you a good idea of the expensive operations in your code: for example, drawing versus browser interactions versus JIT compilation of .NET code.

To use XPerf, enable the profiler while you run the problematic area of your application to generate a capture. (Seema’s blog has more detailed information on the process.) It shows CPU usage across the period of time captured; if you connect to the Microsoft public symbol server you can then break this down against the various internal methods within Silverlight. It’s relatively easy to tell what’s going on, as the screenshot demonstrates.

As we’ve had customer applications in the Redmond labs, we’ve noticed that it’s quite common to see applications spending a lot of CPU time in the text stack. In Silverlight 3, you can use an inherited property to give the text rendering engine a hint that turns off pixel-snapping while you’re animating text.

RenderOptions.TextRenderingMode = RenderForAnimation

One last note: it’s worth downloading the PowerPoint slides for this session for more information. There are lots of hidden “context” slides included that add depth to the notes above.

Comments

Anonymous

March 24, 2009

Thank you for submitting this cool story - Trackback from DotNetShoutout

Anonymous

March 25, 2009

Cool article! THANKS! Hope this article I like also help you to better understand graphic-intensive applications. Here is direct link http://techzone.enterra-inc.com/architecture/algorythm-of-defining-plain-polygon-signature-point/ Good luck!

Anonymous

April 13, 2009

Hi Tim, You say: "For maximum throughput, make sure you encode the video at the minimum framerate that you need (typically 18-20fps)." I understand what you're effectively trying to say ("Less frames to decode and render leads to lower CPU usage and smoother playback"), but it doesn't really work that way with video. You can't just arbitrarily decimate video frame rates to the point of "good enough." 18-20 fps is not an acceptable frame rate for video. There are really only 2 real-world cases where frame rate decimation is acceptable in video encoding:

- Because film is shot at 24 fps and often converted to 60 Hz (30i or 60p) in the NTSC/ATSC world through the process of telecine, it is highly recommended that film-originated video content always be restored back to its original 24p cadence before being encoded for the Web.

- Video content is actually typically shot at 60 Hz (NTSC/ATSC) or 50 Hz (PAL), but for Web video it is an acceptable practice to decimate half the frames and encode video content at 30 and 25 frames per second, respectively, in order to avoid playback performance issues.