Note

Access to this page requires authorization. You can try signing in or changing directories.

Access to this page requires authorization. You can try changing directories.

Important

Items marked (preview) in this article are currently in public preview. This preview is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities. For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

The prompt flow Content Safety tool enables you to use Azure AI Content Safety in Azure AI Foundry portal.

Azure AI Content Safety is a content moderation service that helps detect harmful content from different modalities and languages. For more information, see Azure AI Content Safety.

Prerequisites

Note

You must use a hub based project for this feature. A Foundry project isn't supported. See How do I know which type of project I have? and Create a hub based project.

- An Azure subscription. If you don't have an Azure subscription, create a free account.

- If you don't have one, create a hub based project.

To create an Azure Content Safety connection:

- Sign in to Azure AI Foundry.

- Go to Project settings > Connections.

- Select + New connection.

- Complete all steps in the Create a new connection dialog. You can use an Azure AI Foundry hub or Azure AI Content Safety resource. We recommend that you use a hub that supports multiple Azure AI services.

Build with the Content Safety tool

Create or open a flow in Azure AI Foundry. For more information, see Create a flow.

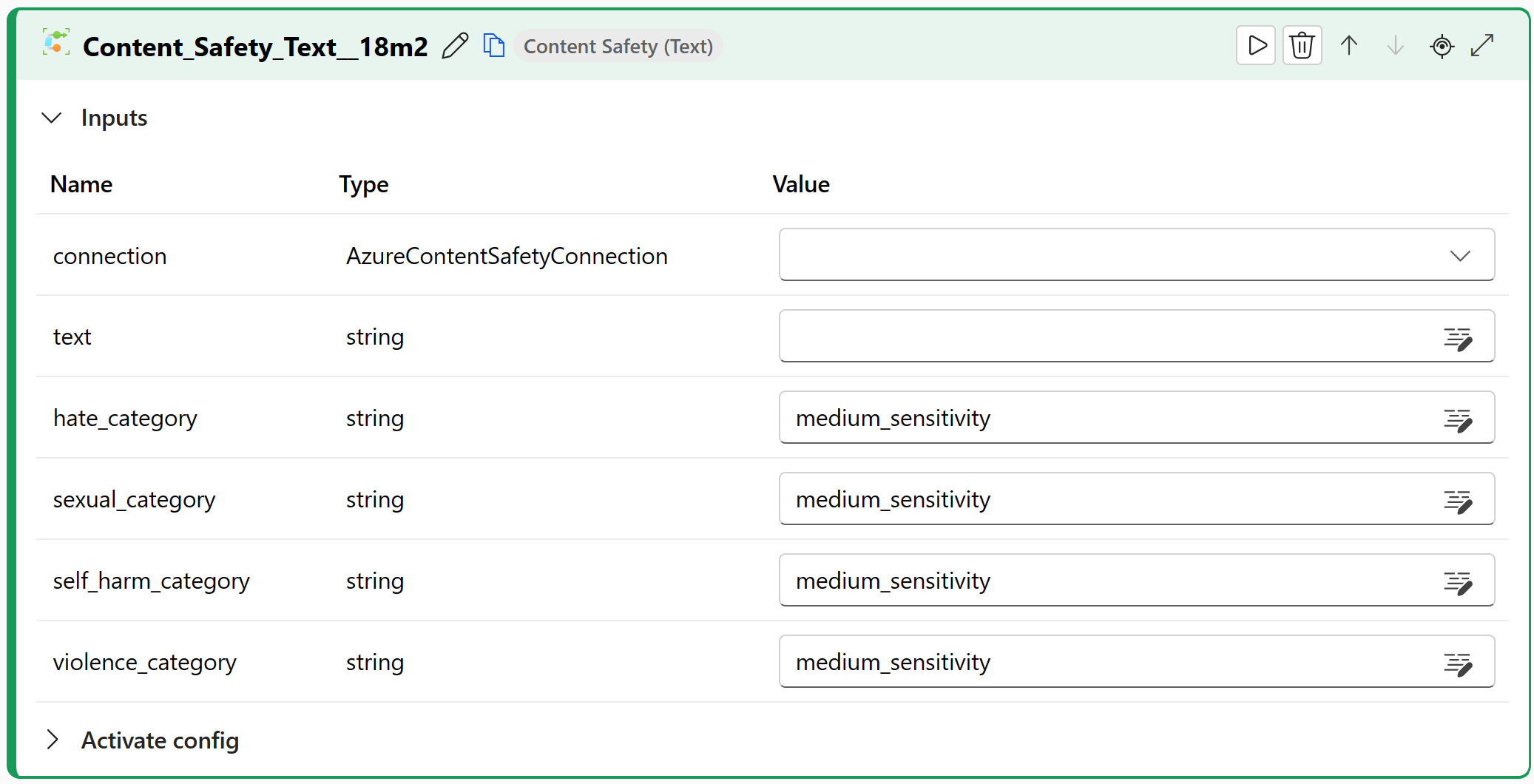

Select + More tools > Content Safety (Text) to add the Content Safety tool to your flow.

Select the connection to one of your provisioned resources. For example, select AzureAIContentSafetyConnection if you created a connection with that name. For more information, see Prerequisites.

Enter values for the Content Safety tool input parameters described in the Inputs table.

Add more tools to your flow, as needed. Or select Run to run the flow.

The outputs are described in the Outputs table.

Inputs

The following input parameters are available.

| Name | Type | Description | Required |

|---|---|---|---|

| text | string | The text that needs to be moderated. | Yes |

| hate_category | string | The moderation sensitivity for the Hate category. You can choose from four options: disable, low_sensitivity, medium_sensitivity, or high_sensitivity. The disable option means no moderation for the Hate category. The other three options mean different degrees of strictness in filtering out hate content. The default option is medium_sensitivity. |

Yes |

| sexual_category | string | The moderation sensitivity for the Sexual category. You can choose from four options: disable, low_sensitivity, medium_sensitivity, or high_sensitivity. The disable option means no moderation for the Sexual category. The other three options mean different degrees of strictness in filtering out sexual content. The default option is medium_sensitivity. |

Yes |

| self_harm_category | string | The moderation sensitivity for the Self-harm category. You can choose from four options: disable, low_sensitivity, medium_sensitivity, or high_sensitivity. The disable option means no moderation for the Self-harm category. The other three options mean different degrees of strictness in filtering out self-harm content. The default option is medium_sensitivity. |

Yes |

| violence_category | string | The moderation sensitivity for the Violence category. You can choose from four options: disable, low_sensitivity, medium_sensitivity, or high_sensitivity. The disable option means no moderation for the Violence category. The other three options mean different degrees of strictness in filtering out violence content. The default option is medium_sensitivity. |

Yes |

Outputs

The following JSON format response is an example returned by the tool:

{

"action_by_category": {

"Hate": "Accept",

"SelfHarm": "Accept",

"Sexual": "Accept",

"Violence": "Accept"

},

"suggested_action": "Accept"

}

You can use the following parameters as inputs for this tool.

| Name | Type | Description |

|---|---|---|

| action_by_category | string | A binary value for each category: Accept or Reject. This value shows if the text meets the sensitivity level that you set in the request parameters for that category. |

| suggested_action | string | An overall recommendation based on the four categories. If any category has a Reject value, suggested_action is also Reject. |