Model packages for deployment (preview)

After you train a machine learning model, you need to deploy it so others can consume its predictions. However, deploying a model requires more than just the weights or the model's artifacts. Model packages are a capability in Azure Machine Learning that allows you to collect all the dependencies required to deploy a machine learning model to a serving platform. You can move packages across workspaces and even outside Azure Machine Learning.

Important

This feature is currently in public preview. This preview version is provided without a service-level agreement, and we don't recommend it for production workloads. Certain features might not be supported or might have constrained capabilities.

For more information, see Supplemental Terms of Use for Microsoft Azure Previews.

What is a model package?

As a best practice, before you deploy a model, collect and resolve all the dependencies the model needs to run successfully. Doing so lets you deploy the model in a reproducible and robust way.

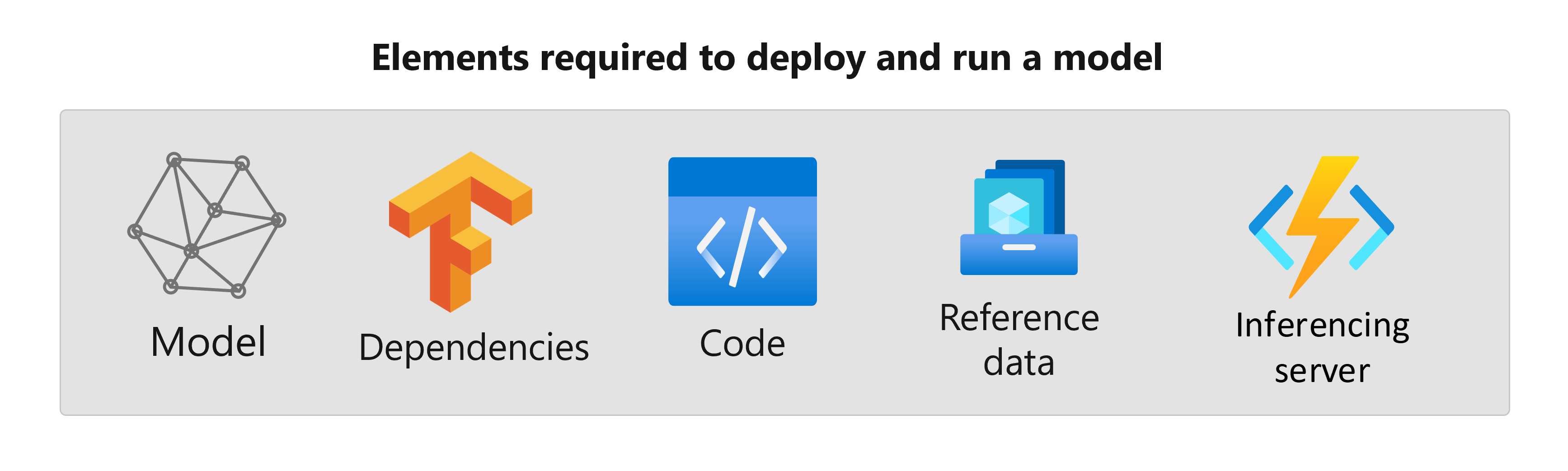

Typically, a model's dependencies include:

- Base image or environment in which your model gets executed.

- List of Python packages and dependencies that the model depends on to function properly.

- Extra assets that your model might need to generate inferences. These assets can include label maps and preprocessing parameters.

- Software required for the inference server to serve requests; for example, a Flask server or TensorFlow Serving.

- Inference routine (if required).

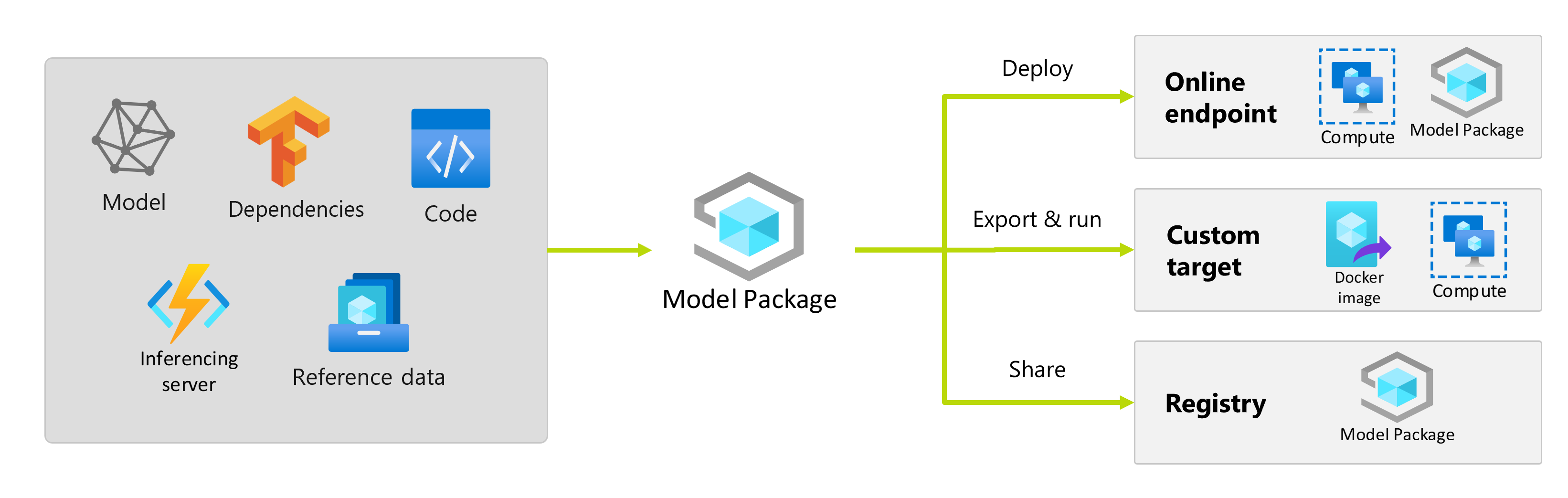

All these elements need to be collected to then be deployed in the serving infrastructure. The resulting asset generated after you've collected all the dependencies is called a model package.

Benefits of packaging models

Packaging models before deployment has the following advantages:

- Reproducibility: All dependencies are collected at packaging time, rather than deployment time. Once dependencies are resolved, you can deploy the package as many times as needed while guaranteeing that dependencies have already been resolved.

- Faster conflict resolution: Azure Machine Learning detects misconfigurations related to the dependencies, like a missing Python package, while packaging the model. You don't need to deploy the model to discover such issues.

- Easier integration with the inference server: The inference server you're using might need specific software configurations (for instance, the TorchServe package), and such software can conflict with your model's dependencies. Model packages in Azure Machine Learning inject the dependencies required by the inference server to help you detect conflicts before deploying a model.

- Portability: You can move Azure Machine Learning model packages from one workspace to another, using registries. You can also generate packages that can be deployed outside Azure Machine Learning.

- MLflow support with private networks: For MLflow models, Azure Machine Learning requires an internet connection to dynamically install the Python packages that the models need to run. When you package an MLflow model, these Python packages are resolved during the packaging operation, so the MLflow model package doesn't require an internet connection to be deployed.

Tip

Packaging an MLflow model before deployment is highly recommended and even required for endpoints that don't have outbound networking connectivity. An MLflow model indicates its dependencies in the model itself, thereby requiring dynamic installation of packages. When an MLflow model is packaged, this dynamic installation is performed at packaging time rather than deployment time.
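To make the conflict-detection benefit concrete, the following is a minimal sketch of the kind of check that becomes possible once the model's dependencies and the inference server's dependencies are collected in one place. It is purely illustrative and is not how Azure Machine Learning resolves dependencies internally. The function names (`parse_pins`, `find_conflicts`) and the requirement lists are hypothetical, and the sketch only compares exact `==` pins.

```python
# Illustrative only: NOT Azure Machine Learning's actual resolution logic.
# It compares exact "name==version" pins from two requirement lists.

def parse_pins(requirements):
    """Map package name -> pinned version (None when the line has no '==')."""
    pins = {}
    for line in requirements:
        name, sep, version = line.partition("==")
        pins[name.strip().lower()] = version.strip() if sep else None
    return pins


def find_conflicts(model_reqs, server_reqs):
    """Return packages that the model and the server pin to different versions."""
    model_pins = parse_pins(model_reqs)
    server_pins = parse_pins(server_reqs)
    conflicts = {}
    for name, model_version in model_pins.items():
        server_version = server_pins.get(name)
        if model_version and server_version and model_version != server_version:
            conflicts[name] = (model_version, server_version)
    return conflicts


# Hypothetical requirement lists, made up for this example.
model_reqs = ["scikit-learn==1.3.2", "numpy==1.26.4"]
server_reqs = ["flask==2.3.3", "numpy==1.24.0"]

conflicts = find_conflicts(model_reqs, server_reqs)
print(conflicts)
```

Running a check like this at packaging time surfaces the mismatch before any deployment is attempted, which is the point of the benefit described above.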

Deployment of model packages

You can provide model packages as inputs to online endpoints. Using model packages helps streamline your MLOps workflows by reducing the chance of errors at deployment time, because all dependencies are collected during the packaging operation. You can also configure the model package to generate Docker images that you can deploy anywhere outside Azure Machine Learning, either on-premises or in the cloud.

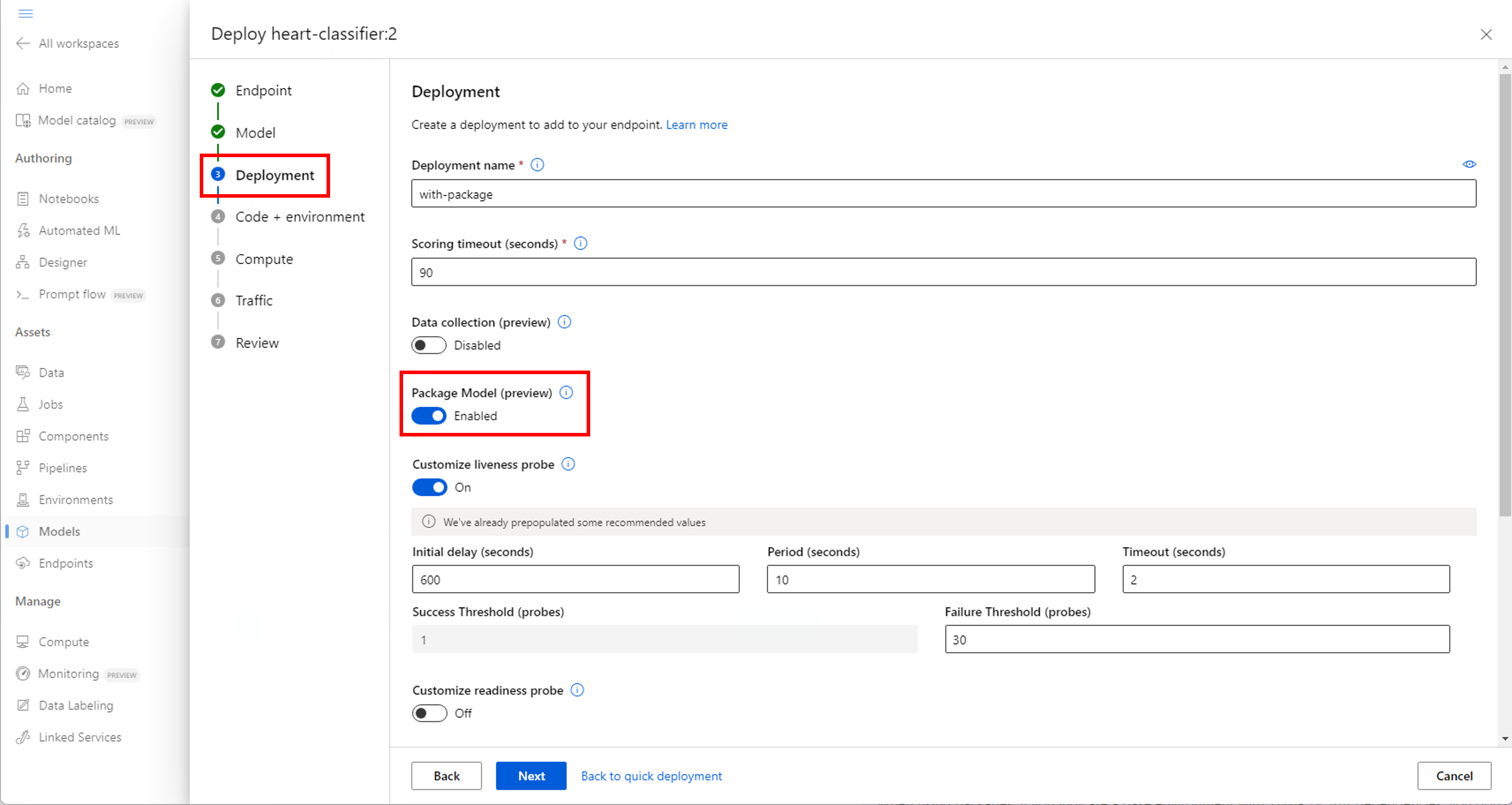

Package before deployment

The simplest way to deploy with a model package is to tell Azure Machine Learning to package the model before it executes the deployment. You can request packaging when you create a deployment in an online endpoint with the Azure CLI, the Azure Machine Learning SDK, or Azure Machine Learning studio.

With the Azure CLI, use the --with-package flag when creating a deployment:

az ml online-deployment create --with-package -f model-deployment.yml -e $ENDPOINT_NAME

Azure Machine Learning packages the model first and then executes the deployment.
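If you drive deployments from a script, one option is to assemble the same CLI call programmatically. The following is a minimal sketch, assuming the Azure CLI with the `ml` extension is installed and that you're already signed in. The helper name `build_deploy_command` and the endpoint name `my-endpoint` are hypothetical. Only the command, the `--with-package` flag, and the `-f` and `-e` options come from the example above.

```python
import shlex


def build_deploy_command(endpoint_name, deployment_file, with_package=True):
    """Build the argument list for `az ml online-deployment create`."""
    cmd = ["az", "ml", "online-deployment", "create"]
    if with_package:
        # Ask Azure Machine Learning to package the model before deploying.
        cmd.append("--with-package")
    cmd += ["-f", deployment_file, "-e", endpoint_name]
    return cmd


# "my-endpoint" is a placeholder; substitute your own endpoint name.
command = build_deploy_command("my-endpoint", "model-deployment.yml")

# Print the command in shell form so it can be reviewed before running.
print(shlex.join(command))
```

To execute the command, pass the list to `subprocess.run(command, check=True)`. Building the arguments as a list rather than a single string avoids shell-quoting issues with file and endpoint names.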

Note

When using packages, if you indicate a base environment with conda or pip dependencies, you don't need to include the dependencies of the inference server (azureml-inference-server-http). Rather, these dependencies are automatically added for you.

Deploy a packaged model

You can deploy a model that has already been packaged directly to an online endpoint. This is a best practice because it ensures reproducible results. See Package and deploy models to Online Endpoints.

If you want to deploy the package outside of Azure Machine Learning, see Package and deploy models outside Azure Machine Learning.
