Configure Snowflake in a copy activity

This article outlines how to use the copy activity in data pipeline to copy data from and to Snowflake.

Supported configuration

For the configuration of each tab under copy activity, go to the following sections respectively.

General

Refer to the General settings guidance to configure the General settings tab.

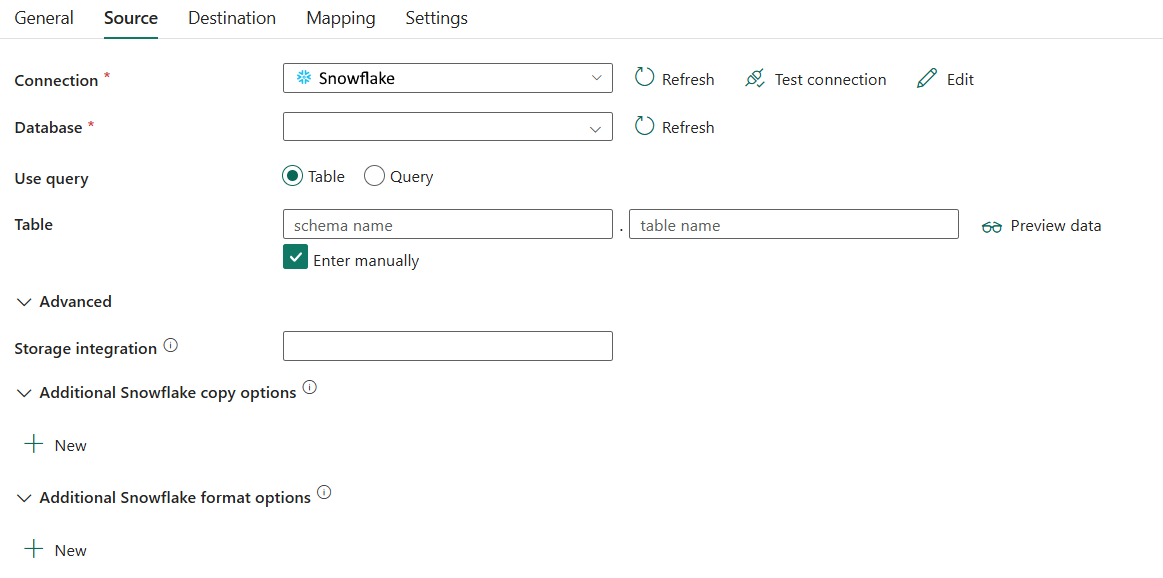

Source

The following properties are supported for Snowflake under the Source tab of a copy activity.

The following properties are required:

- Data store type: Select External.

- Connection: Select a Snowflake connection from the connection list. If the connection doesn't exist, then create a new Snowflake connection by selecting New.

- Database: The default database to use once connected. It should be an existing database for which the specified role has privileges.

- Use query: You can choose either Table or Query as your use query. The following list describes the configuration of each setting.

- Table: Select the table in your database from the drop-down list. Or check Edit to enter your table name manually.

- Query: Specify the SQL query to read data from Snowflake. If the names of the schema, table and columns contain lower case, quote the object identifier in query e.g.

select * from "schema"."myTable".

Under Advanced, you can specify the following fields:

Storage integration: Specify the name of your storage integration that you created in the Snowflake. For the prerequisite steps of using the storage integration, see Configuring a Snowflake storage integration.

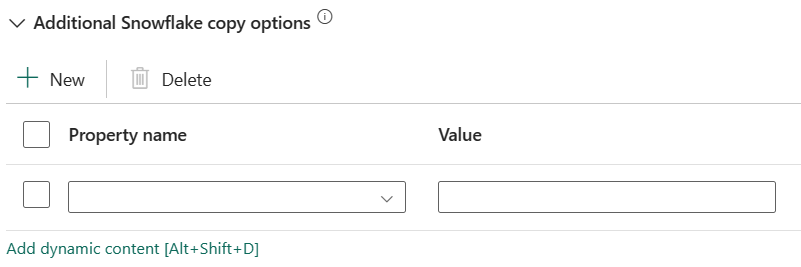

Additional Snowflake copy options: Specify additional Snowflake copy options which will be used in Snowflake COPY statement to load data. Additional copy options are provided as a dictionary of key-value pairs. Examples: MAX_FILE_SIZE, OVERWRITE. For more information, see Snowflake Copy Options.

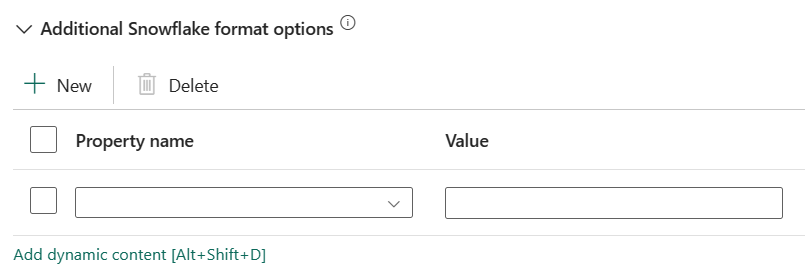

Additional Snowflake format options: Specify additional Snowflake format options, which will be used in Snowflake COPY statement to load data. Additional file format options provided to the COPY command are provided as a dictionary of key-value pairs. Examples: DATE_FORMAT, TIME_FORMAT, TIMESTAMP_FORMAT. For more information, see Snowflake Format Type Options.

Direct copy from Snowflake

If your destination data store and format meet the criteria described in this section, you can use the Copy activity to directly copy from Snowflake to destination. The service checks the settings and fails the Copy activity run if the following criteria is not met:

When you specify Storage integration in the source: The destination data store is the Azure Blob Storage that you referred in the external stage in Snowflake. You need to complete the following steps before copying data:

Create an Azure Blob Storage connection for the destination Azure Blob Storage with any supported authentication types.

Grant at least Storage Blob Data Contributor role to the Snowflake service principal in the destination Azure Blob Storage Access Control (IAM).

When you don't specify Storage integration in the source:

The destination connection is Azure Blob storage with shared access signature authentication. If you want to directly copy data to Azure Data Lake Storage Gen2 in the following supported format, you can create an Azure Blob Storage connection with SAS authentication against your Azure Data Lake Storage Gen2 account, to avoid using staged copy from Snowflake.

The destination data format is of Parquet, DelimitedText, or JSON with the following configurations:

- For Parquet format, the compression codec is None, Snappy, or Lzo.

- For DelimitedText format:

- Row delimiter is \r\n, or any single character.

- Compression type can be None, gzip, bzip2, or deflate.

- Encoding is left as default or set to UTF-8.

- Quote character is Double quote, Single quote, or No quote character.

- For JSON format, direct copy only supports the case that source Snowflake table or query result only has single column and the data type of this column is VARIANT, OBJECT, or ARRAY.

- Compression type can be None, gzip, bzip2, or deflate.

- Encoding is left as default or set to UTF-8.

- File pattern in copy activity destination is left as default or set to Set of objects.

In copy activity source, Additional columns is not specified.

Column mapping is not specified.

Staged copy from Snowflake

When your destination data store or format isn't natively compatible with the Snowflake COPY command, as mentioned in the last section, enable the built-in staged copy using an interim Azure Blob storage instance. The staged copy feature also provides you with better throughput. The service exports data from Snowflake into staging storage, then copies the data to destination, and finally cleans up your temporary data from the staging storage.

To use this feature, create an Azure Blob storage connection that refers to the Azure storage account as the interim staging. Then go to Settings tab to configure your staging settings. You need to select External to configure the Azure Blob Storage staging connection.

When you specify Storage integration in the source, the interim staging Azure Blob Storage should be the one that you referred in the external stage in Snowflake. Ensure that you create an Azure Blob Storage connection for it with any supported authentication, and grant at least Storage Blob Data Contributor role to the Snowflake service principal in the staging Azure Blob Storage Access Control (IAM). The Storage path under Staging settings in Settings tab is required.

When you don't specify Storage integration in the source, the staging Azure Blob Storage connection must use shared access signature authentication, as required by the Snowflake COPY command. Make sure you grant proper access permission to Snowflake in the staging Azure Blob Storage. To learn more about this, see this article.

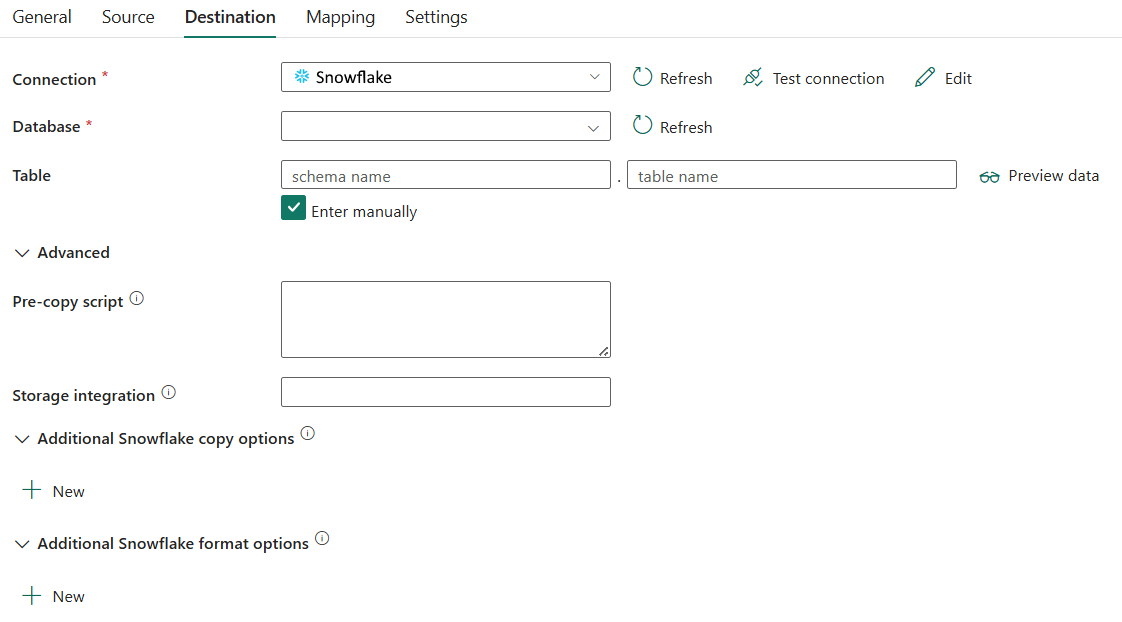

Destination

The following properties are supported for Snowflake under the Destination tab of a copy activity.

The following properties are required:

- Data store type: Select External.

- Connection: Select a Snowflake connection from the connection list. If the connection doesn't exist, then create a new Snowflake connection by selecting New.

- Database: The default database to use once connected. It should be an existing database for which the specified role has privileges.

- Table: Select the table in your database from the drop-down list. Or check Edit to enter your table name manually.

Under Advanced, you can specify the following fields:

Pre-copy script: Specify a script for Copy Activity to execute before writing data into destination table in each run. You can use this property to clean up the pre-loaded data.

Storage integration: Specify the name of your storage integration that you created in the Snowflake. For the prerequisite steps of using the storage integration, see Configuring a Snowflake storage integration.

Additional Snowflake copy options: Specify additional Snowflake copy options, which will be used in Snowflake COPY statement to load data. Additional copy options are provided as a dictionary of key-value pairs. Examples: ON_ERROR, FORCE, LOAD_UNCERTAIN_FILES. For more information, see Snowflake Copy Options.

Additional Snowflake format options: Specify additional Snowflake format options, which will be used in Snowflake COPY statement to load data. Additional file format options provided to the COPY command are provided as a dictionary of key-value pairs. Examples: DATE_FORMAT, TIME_FORMAT, TIMESTAMP_FORMAT. For more information, see Snowflake Format Type Options.

Direct copy to Snowflake

If your source data store and format meet the criteria described in this section, you can use the Copy activity to directly copy from source to Snowflake. The service checks the settings and fails the Copy activity run if the following criteria is not met:

When you specify Storage integration in the destination:

The source data store is the Azure Blob Storage that you referred in the external stage in Snowflake. You need to complete the following steps before copying data:

Create an Azure Blob Storage connection for the source Azure Blob Storage with any supported authentication types.

Grant at least Storage Blob Data Reader role to the Snowflake service principal in the source Azure Blob Storage Access Control (IAM).

When you don't specify Storage integration in the destination:

The source connection is Azure Blob storage with shared access signature authentication. If you want to directly copy data from Azure Data Lake Storage Gen2 in the following supported format, you can create an Azure Blob Storage connection with SAS authentication against your Azure Data Lake Storage Gen2 account, to avoid using staged copy to Snowflake.

The source data format is Parquet, DelimitedText, or JSON with the following configurations:

For Parquet format, the compression codec is None, or Snappy.

For DelimitedText format:

- Row delimiter is \r\n, or any single character. If row delimiter is not “\r\n”, First row as header is unselected, and Skip line count is not specified.

- Compression type can be None, gzip, bzip2, or deflate.

- Encoding is left as default or set to "UTF-8", "UTF-16", "UTF-16BE", "UTF-32", "UTF-32BE", "BIG5", "EUC-JP", "EUC-KR", "GB18030", "ISO-2022-JP", "ISO-2022-KR", "ISO-8859-1", "ISO-8859-2", "ISO-8859-5", "ISO-8859-6", "ISO-8859-7", "ISO-8859-8", "ISO-8859-9", "WINDOWS-1250", "WINDOWS-1251", "WINDOWS-1252", "WINDOWS-1253", "WINDOWS-1254", "WINDOWS-1255".

- Quote character is Double quote, Single quote, or No quote character.

For JSON format, direct copy only supports the case that destination Snowflake table only has single column and the data type of this column is VARIANT, OBJECT, or ARRAY.

- Compression type can be None, gzip, bzip2, or deflate.

- Encoding is left as default or set to UTF-8.

- Column mapping is not specified.

In the Copy activity source:

- Additional columns is not specified.

- If your source is a folder, Recursively is selected.

- Prefix, Start time (UTC) and End time (UTC) in Filter by last modified and Enable partition discovery are not specified.

Staged copy to Snowflake

When your source data store or format isn't natively compatible with the Snowflake COPY command, as mentioned in the last section, enable the built-in staged copy using an interim Azure Blob storage instance. The staged copy feature also provides you with better throughput. The service automatically converts the data to meet the data format requirements of Snowflake. It then invokes the COPY command to load data into Snowflake. Finally, it cleans up your temporary data from the blob storage.

To use this feature, create an Azure Blob storage connection that refers to the Azure storage account as the interim staging. Then go to Settings tab to configure your staging settings. You need to select External to configure the Azure Blob Storage staging connection.

When you specify Storage integration in the destination, the interim staging Azure Blob Storage should be the one that you referred in the external stage in Snowflake. Ensure that you create an Azure Blob Storage connection for it with any supported authentication, and grant at least Storage Blob Data Reader role to the Snowflake service principal in the staging Azure Blob Storage Access Control (IAM). The Storage path under Staging settings in Settings tab is required.

When you don't specify Storage integration in the destination, the staging Azure Blob Storage connection need to use shared access signature authentication as required by the Snowflake COPY command.

Mapping

For Mapping tab configuration, go to Configure your mappings under mapping tab.

Settings

For Settings tab configuration, go to Configure your other settings under settings tab.

Table summary

The following tables contain more information about the copy activity in Snowflake.

Source

| Name | Description | Value | Required | JSON script property |

|---|---|---|---|---|

| Data store type | Your data store type. | External | Yes | / |

| Connection | Your connection to the source data store. | < your connection > | Yes | connection |

| Database | Your database that you use as source. | < your database > | Yes | database |

| Use query | The way to read data from Snowflake. | • Table • Query |

No | • table • query |

| Table | The name of the table to read data. | < name of your source table> | Yes | schema table |

| Query | The SQL query to read data from Snowflake. | < name of your source query> | Yes | query |

| Storage integration | Specify the name of your storage integration that you created in the Snowflake. For the prerequisite steps of using the storage integration, see Configuring a Snowflake storage integration. | < your storage integration > | No | storageIntegration |

| Additional Snowflake copy options | Additional copy options, provided as a dictionary of key-value pairs. Examples: MAX_FILE_SIZE, OVERWRITE. For more information, see Snowflake Copy Options. | • Name • Value |

No | additionalCopyOptions |

| Additional Snowflake format options | Additional file format options that are provided to COPY command as a dictionary of key-value pairs. Examples: DATE_FORMAT, TIME_FORMAT, TIMESTAMP_FORMAT. For more information, see Snowflake Format Type Options. | • Name • Value |

No | additionalFormatOptions |

Destination

Note

While non-Azure Snowflake instances are supported for source, only Azure Snowflake instances are currently supported for Snowflake destinations (also referred to as sinks in Azure Data Factory).

| Name | Description | Value | Required | JSON script property |

|---|---|---|---|---|

| Data store type | Your data store type. | External | Yes | / |

| Connection | Your connection to the destination data store. | < your connection > | Yes | connection |

| Database | Your database that you use as destination. | < your database> | Yes | / |

| Table | Your destination data table. | < name of your destination table> | Yes | • schema • table |

| Pre-copy script | A SQL query for the Copy activity to run before writing data into Snowflake in each run. Use this property to clean up the preloaded data. | < your pre-copy script> | NO | preCopyScript |

| Storage integration | Specify the name of your storage integration that you created in the Snowflake. For the prerequisite steps of using the storage integration, see Configuring a Snowflake storage integration. | < your storage integration > | No | storageIntegration |

| Additional Snowflake copy options | Additional copy options, provided as a dictionary of key-value pairs. Examples: ON_ERROR, FORCE, LOAD_UNCERTAIN_FILES. For more information, see Snowflake Copy Options. | • Name • Value |

No | additionalCopyOptions |

| Additional Snowflake format options | Additional file format options provided to the COPY command, provided as a dictionary of key-value pairs. Examples: DATE_FORMAT, TIME_FORMAT, TIMESTAMP_FORMAT. For more information, see Snowflake Format Type Options. | • Name • Value |

No | additionalFormatOptions |

Related content

Feedback

Coming soon: Throughout 2024 we will be phasing out GitHub Issues as the feedback mechanism for content and replacing it with a new feedback system. For more information see: https://aka.ms/ContentUserFeedback.

Submit and view feedback for