Get started with AI Toolkit for Visual Studio Code

The AI Toolkit for VS Code (AI Toolkit) is a VS Code extension that enables you to download, test, fine-tune, and deploy AI models with your apps or in the cloud. For more information, see the AI Toolkit overview.

Note

Additional documentation and tutorials for the AI Toolkit for VS Code are available in the GitHub repository: microsoft/vscode-ai-toolkit. You'll find guidance on Playground, working with AI models, fine-tuning local and cloud-based models, and more.

In this article, you'll learn how to:

- Install the AI Toolkit for VS Code

- Download a model from the catalog

- Run the model locally using the playground

- Integrate an AI model into your application using REST or the ONNX Runtime

Prerequisites

- VS Code must be installed. For more information, see Download VS Code and Getting started with VS Code.

When using AI features, we recommend that you review Developing Responsible Generative AI Applications and Features on Windows.

Install

The AI Toolkit is available in the Visual Studio Marketplace and can be installed like any other VS Code extension. If you're unfamiliar with installing VS Code extensions, follow these steps:

- In the Activity Bar in VS Code, select Extensions.

- In the Extensions search bar, type "AI Toolkit".

- Select "AI Toolkit for Visual Studio Code".

- Select Install.

Once the extension has been installed, the AI Toolkit icon appears in your Activity Bar.

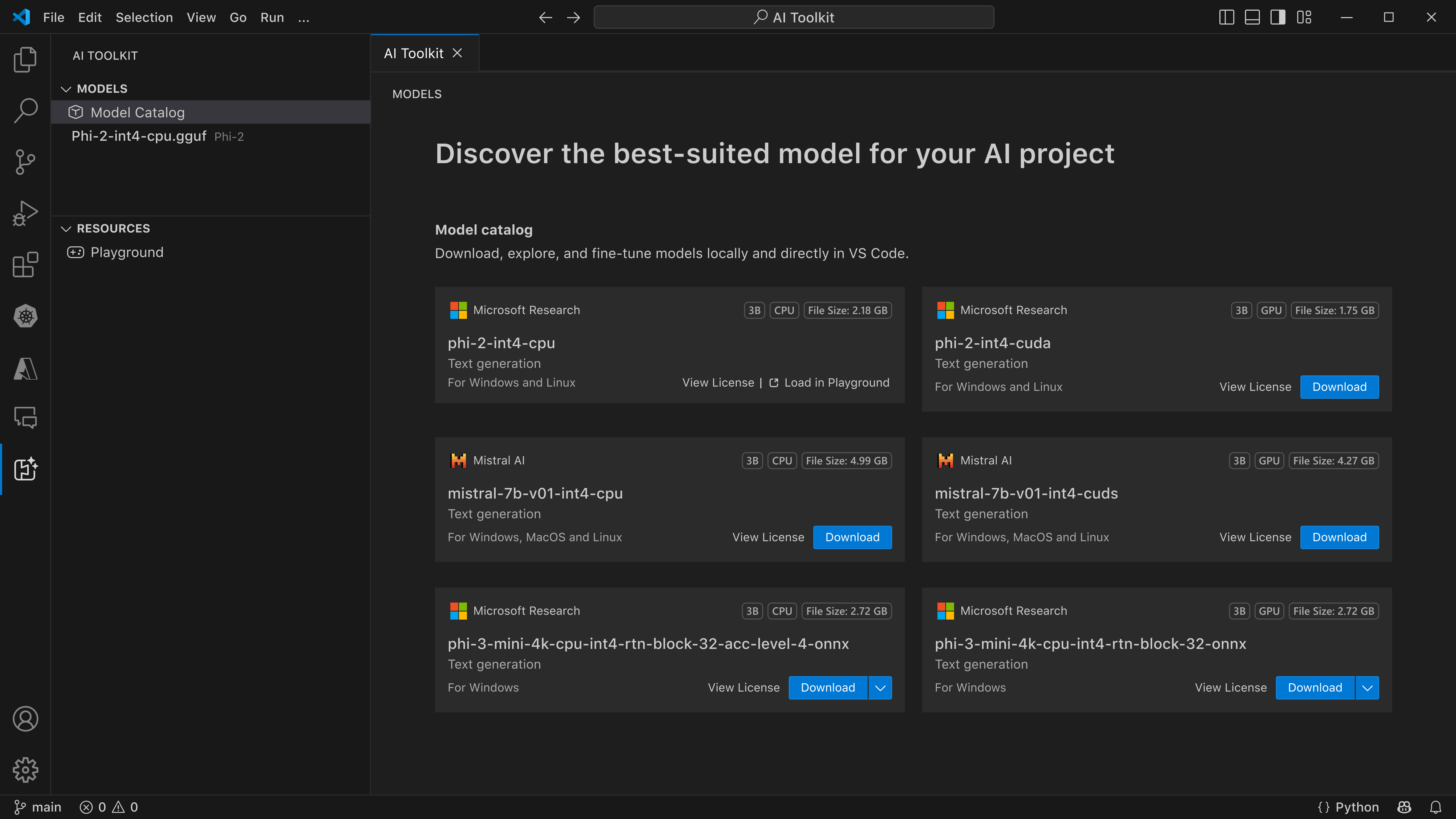

Download a model from the catalog

The primary sidebar of the AI Toolkit is organized into My Models, Catalog, Tools, and Help and Feedback. The Playground, Bulk Run, Evaluation, and Fine tuning features are available in the Tools section. To get started, select Models from the Catalog section to open the Model Catalog window.

You can use the filters at the top of the catalog to filter by Hosted by, Publisher, Tasks, and Model type. There's also a Fine-Tuning Support switch that you can toggle on to show only models that can be fine-tuned.

Tip

The Model type filter lets you show only models that run locally on the CPU, GPU, or NPU, or only models that support Remote access. For optimized performance on devices that have at least one GPU, select the Local run w/ GPU model type. This helps you find a model optimized for the DirectML accelerator.

To check whether you have a GPU on your Windows device, open Task Manager and then select the Performance tab. If you have GPU(s), they will be listed under names like "GPU 0" or "GPU 1".

Note

For Copilot+ PCs with a Neural Processing Unit (NPU), you can select models that are optimized for the NPU accelerator. The DeepSeek R1 Distilled model is optimized for the NPU and available to download on Snapdragon-powered Copilot+ PCs running Windows 11. For more information, see Running Distilled DeepSeek R1 models locally on Copilot+ PCs, powered by Windows Copilot Runtime.

The following models are currently available for Windows devices with one or more GPUs:

- Mistral 7B (DirectML - Small, Fast)

- Phi 3 Mini 4K (DirectML - Small, Fast)

- Phi 3 Mini 128K (DirectML - Small, Fast)

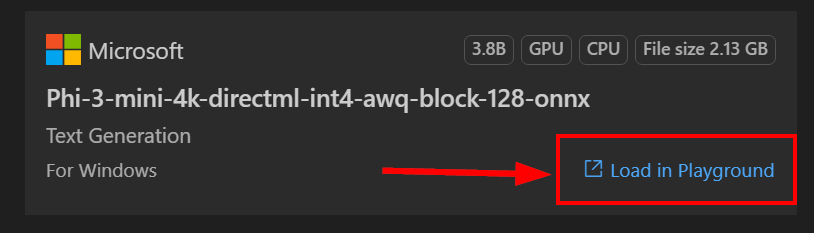

Select the Phi 3 Mini 4K model and click Download:

Note

The Phi 3 Mini 4K model is approximately 2 GB to 3 GB in size. Depending on your network speed, it could take a few minutes to download.

Run the model in the playground

Once your model has downloaded, it will appear in the My Models section under Local models. Right-click the model and select Load in Playground from the context menu:

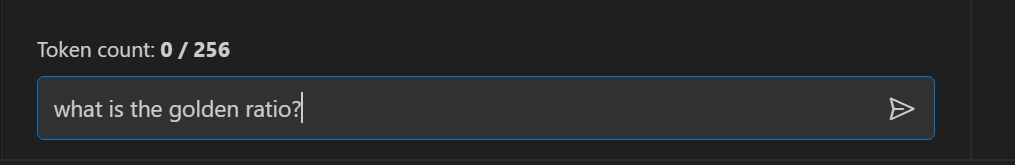

In the chat interface of the playground enter the following message followed by the Enter key:

You should see the model response streamed back to you:

Warning

If you do not have a GPU available on your device but you selected the Phi-3-mini-4k-directml-int4-awq-block-128-onnx model, the model response will be very slow. You should instead download the CPU-optimized version: Phi-3-mini-4k-cpu-int4-rtn-block-32-acc-level-4-onnx.

It is also possible to change:

- Context Instructions: Help the model understand the bigger picture of your request. This could be background information, examples/demonstrations of what you want or explaining the purpose of your task.

- Inference parameters:

  - Maximum response length: The maximum number of tokens the model will return.

  - Temperature: Controls how random the model's output is. A higher temperature means the model takes more risks, giving you a more diverse mix of words. A lower temperature makes the model play it safe, sticking to more focused and predictable responses.

  - Top P: Also known as nucleus sampling, this setting controls how many possible words or phrases the language model considers when predicting the next word.

  - Frequency penalty: Influences how often the model repeats words or phrases in its output. A higher value (closer to 1.0) encourages the model to avoid repeating words or phrases.

  - Presence penalty: Encourages diversity and specificity in the generated text. A higher value (closer to 1.0) encourages the model to include more novel and diverse tokens. A lower value makes the model more likely to generate common or cliche phrases.
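To make the inference parameters more concrete, here is a minimal Python sketch of how such settings are commonly applied to a model's next-token scores (logits). It is an illustration under stated assumptions, not the AI Toolkit's implementation: the function names, the toy logits, and the penalty formula (subtracting a per-occurrence frequency penalty and a one-time presence penalty) are hypothetical choices made for this example.

```python
import math
from collections import Counter


def apply_penalties(logits, generated, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of every token that already appears in `generated`.

    Assumed formula: subtract frequency_penalty once per earlier occurrence,
    plus presence_penalty once if the token has appeared at all.
    """
    counts = Counter(generated)
    return {
        tok: logit
        - counts[tok] * frequency_penalty
        - (presence_penalty if counts[tok] > 0 else 0.0)
        for tok, logit in logits.items()
    }


def softmax_with_temperature(logits, temperature=1.0):
    """Turn logits into probabilities. Assumes temperature > 0.

    A lower temperature sharpens the distribution (more predictable output);
    a higher temperature flattens it (more diverse output).
    """
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, top_p=1.0):
    """Keep the smallest set of most likely tokens whose cumulative
    probability reaches top_p, then renormalize what is left."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}


if __name__ == "__main__":
    # Invented scores for four candidate next tokens.
    toy_logits = {"the": 2.0, "a": 1.0, "golden": 0.5, "ratio": 0.1}
    penalized = apply_penalties(toy_logits, ["the", "the"], frequency_penalty=0.5)
    probs = softmax_with_temperature(penalized, temperature=0.7)
    print(top_p_filter(probs, top_p=0.9))
```

Running the script with different `temperature` and `top_p` values shows how the set of candidate tokens, and the weight given to each, changes before a token is sampled.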

Integrate an AI model into your application

There are two options to integrate the model into your application:

- The AI Toolkit comes with a local REST API web server that uses the OpenAI chat completions format. This enables you to test your application locally, using the endpoint http://127.0.0.1:5272/v1/chat/completions, without having to rely on a cloud AI model service. Use this option if you intend to switch to a cloud endpoint in production. You can use OpenAI client libraries to connect to the web server.

- Using the ONNX Runtime. Use this option if you intend to ship the model with your application with inferencing on device.

Local REST API web server

The local REST API web server allows you to build and test your application locally without having to rely on a cloud AI model service. You can interact with the web server using REST, or with an OpenAI client library.

Here is an example body for your REST request:

{
  "model": "Phi-3-mini-4k-directml-int4-awq-block-128-onnx",
  "messages": [
    {
      "role": "user",
      "content": "what is the golden ratio?"
    }
  ],
  "temperature": 0.7,
  "top_p": 1,
  "top_k": 10,
  "max_tokens": 100,
  "stream": true
}

Note

You may need to update the model field to the name of the model you downloaded.

You can test the REST endpoint using an API tool like Postman or the curl utility. The following command assumes the request body is saved in a file named body.json:

curl -vX POST http://127.0.0.1:5272/v1/chat/completions -H 'Content-Type: application/json' -d @body.json
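The same request can be sent from application code. The following is a minimal sketch using only the Python standard library. It assumes that the local server accepts `"stream": false` and that its responses follow the OpenAI chat completions shapes (`choices[0].message.content` for a full response, `data: {...}` lines ending with `data: [DONE]` for a streamed one). The helper names (`build_chat_request`, `extract_reply`, `iter_stream_text`, `ask`) are hypothetical and exist only for this example.

```python
import json
import urllib.request

# Endpoint taken from this article; adjust it if your server uses another port.
ENDPOINT = "http://127.0.0.1:5272/v1/chat/completions"


def build_chat_request(model, prompt, max_tokens=100, temperature=0.7, stream=False):
    """Build a POST request whose JSON body mirrors the example body above."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
        "stream": stream,
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Return the assistant text from a non-streaming response.

    Assumes the OpenAI response shape: choices[0].message.content.
    """
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def iter_stream_text(lines):
    """Yield text fragments from a streamed response.

    Assumes OpenAI-style server-sent events: 'data: {...}' lines,
    terminated by 'data: [DONE]'.
    """
    for raw in lines:
        line = raw.decode("utf-8") if isinstance(raw, bytes) else raw
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank keep-alive lines and anything unexpected
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        choices = json.loads(data).get("choices") or []
        if not choices:
            continue
        content = choices[0].get("delta", {}).get("content")
        if content:
            yield content


def ask(model, prompt):
    """Send one non-streaming request; the local server must be running."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=120) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With the AI Toolkit server running and a model downloaded, a call such as `ask("Phi-3-mini-4k-directml-int4-awq-block-128-onnx", "what is the golden ratio?")` should return the model's reply as a string. For a streamed response, pass the open HTTP response object to `iter_stream_text` and print the fragments as they arrive.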

ONNX Runtime

The ONNX Runtime Generate API provides the generative AI loop for ONNX models, including inference with ONNX Runtime, logits processing, search and sampling, and KV cache management. You can call a high level generate() method, or run each iteration of the model in a loop, generating one token at a time, and optionally updating generation parameters inside the loop.
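The token-by-token loop described above can be pictured with a toy example. This sketch does not use the ONNX Runtime or its Generate API: the "model" is an invented lookup table, and `next_token_logits` and `generate_greedy` are hypothetical names. It only shows the shape of a greedy generation loop, where each iteration scores the candidates, picks the highest-scoring token, and stops at an end-of-sequence token or a length limit.

```python
# Toy stand-in for a model: maps the last token to scores for the next token.
# These values are invented for illustration and come from no real model.
TOY_LOGITS = {
    "<s>": {"the": 2.0, "golden": 0.5, "</s>": 0.1},
    "the": {"golden": 1.5, "ratio": 0.7, "</s>": 0.2},
    "golden": {"ratio": 2.2, "the": 0.1, "</s>": 0.3},
    "ratio": {"</s>": 1.9, "the": 0.4, "golden": 0.2},
}


def next_token_logits(tokens):
    """Return scores for the next token given the tokens generated so far."""
    return TOY_LOGITS[tokens[-1]]


def generate_greedy(prompt_tokens, max_length=10, eos="</s>"):
    """Generate one token per iteration, always taking the top-scoring one."""
    tokens = list(prompt_tokens)
    while len(tokens) < max_length:
        logits = next_token_logits(tokens)
        token = max(logits, key=logits.get)  # greedy search: pick the arg-max
        if token == eos:
            break  # the model signalled the end of the sequence
        tokens.append(token)
        # A real loop could adjust generation parameters here between steps.
    return tokens


if __name__ == "__main__":
    print(generate_greedy(["<s>"]))
```

Sampling strategies such as Top P or Top K would replace the `max(...)` line with a random draw from a filtered distribution; the rest of the loop stays the same.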

The Generate API supports greedy and beam search, as well as Top P and Top K sampling, to generate token sequences, and it includes built-in logits processing such as repetition penalties.

For an example of how you can leverage the ONNX Runtime in your applications, refer to the example shown in Local REST API web server. The AI Toolkit REST web server is built using the ONNX Runtime.