Hello @Christoph Kiefer,

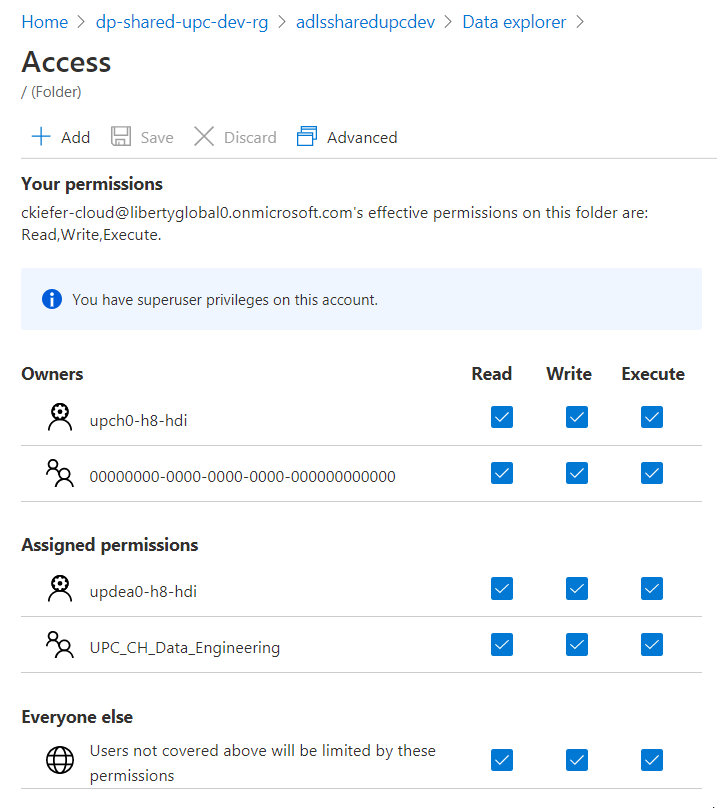

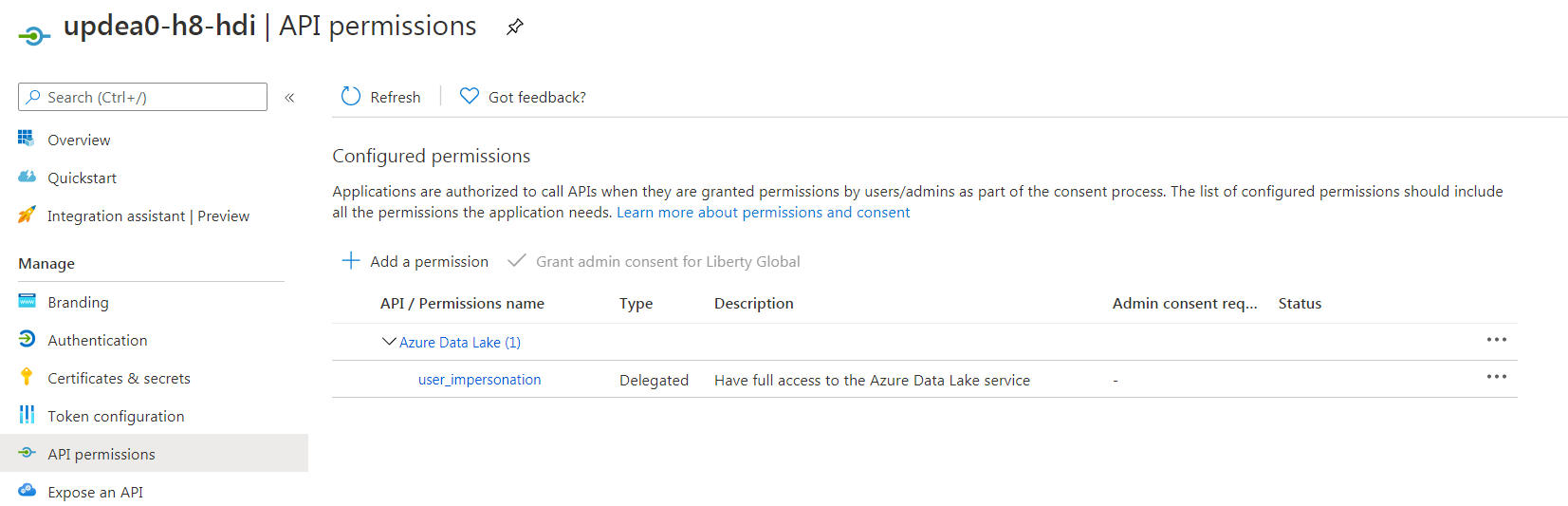

You receive the error message "Exception occurred, Reason: Set Owner failed with failed with error 0x83090aa2 (Forbidden. ACL verification failed. Either the resource does not exist or the user is not authorized to perform the requested operation.)". This error typically occurs when a user has revoked the service principal's (SP) permissions on the files/folders.

Steps to resolve this issue:

Step 1: Check that the SP has execute ('x') permission on every folder along the path, so that it can traverse the path. For more information, see Permissions. A sample dfs command to check access to files/folders in the Data Lake storage account:

hdfs dfs -ls /<path to check access>
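Because a missing 'x' permission on any single folder blocks the whole path, it can help to run the check on each folder in turn. Below is a minimal sketch, not an official procedure: `ancestors` and `check_traverse` are hypothetical helper names, and it assumes the `hdfs` client is on the PATH and that the commands run under the same identity (the SP) that the cluster uses.

```shell
# Print "/" and then every folder from the root down to the given path.
ancestors() {
  rest="${1#/}"
  current=""
  printf '/\n'
  while [ -n "$rest" ]; do
    part="${rest%%/*}"
    case "$rest" in
      */*) rest="${rest#*/}" ;;
      *)   rest="" ;;
    esac
    [ -n "$part" ] || continue   # skip empty segments such as "//"
    current="$current/$part"
    printf '%s\n' "$current"
  done
}

# Run "hdfs dfs -ls -d" on each level and report where access fails.
# A failure usually points at missing 'x' on the parent folder,
# or at a folder that does not exist.
check_traverse() {
  ancestors "$1" | while IFS= read -r dir; do
    if hdfs dfs -ls -d "$dir" >/dev/null 2>&1 </dev/null; then
      echo "ok      $dir"
    else
      echo "failed  $dir"
    fi
  done
}
```

For example, `check_traverse /<path to check access>` prints one line per folder level; the first line marked `failed` is the place to inspect the ACL, for instance with `hdfs dfs -getfacl` on that folder.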

Step 2: Grant the SP the permissions required to access the path, based on the read/write operation being performed. See here for the permissions required for various file system operations.
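As an illustration of Step 2, the sketch below only prints the `hdfs dfs -setfacl` commands that would grant read/list access on a folder: 'x' on each parent folder and 'r-x' on the target. It is a dry run under stated assumptions: `print_read_grants` is a hypothetical helper, the SP is assumed to be identified in ACL entries by its object ID, and the printed commands would have to be run by the owner of the path or a superuser (otherwise set the ACLs through the Azure portal instead). Write operations need different permissions; check the permissions table before adapting it.

```shell
# Dry run: print (do not execute) the setfacl commands for read/list access.
# $1 = object ID of the service principal (placeholder, supply your own)
# $2 = absolute path of the target folder
print_read_grants() {
  sp_id="$1"
  target="${2%/}"          # drop a trailing slash
  rest="${target#/}"
  current=""
  echo "hdfs dfs -setfacl -m user:$sp_id:--x /"
  while [ -n "$rest" ]; do
    part="${rest%%/*}"
    case "$rest" in
      */*) rest="${rest#*/}"; perm="--x" ;;   # parent folder: traverse only
      *)   rest="";           perm="r-x" ;;   # target folder: read and list
    esac
    [ -n "$part" ] || continue
    current="$current/$part"
    echo "hdfs dfs -setfacl -m user:$sp_id:$perm $current"
  done
}
```

Review the printed commands before running any of them, and confirm afterwards with `hdfs dfs -ls` that the path is accessible again.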

For more details, refer to "Azure HDInsight – Using Azure Data Lake Storage Gen1" and "Troubleshoot Data Lake Store files".

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Please click "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.