Hi @Lucas Silva ,

Welcome to Microsoft Q&A platform and thanks for using this forum.

As per my understanding you are trying to read incremental data from your source (SQL) and append the data (only the incremental data) to existing destination file (Historicos.csv in Azure Data lake store). But instead of appending the incremental data to existing file, the file is overwritten with only incremental data and old data is lost. Please correct me if my understanding is incorrect.

If that is the case could you please confirm what service are you using to copy data from source to sink? Is it ADF? or some other service?

In case if you are using copy activity of ADF, then copy activity doesn't support append file functionality. Please feel free to up-vote and/or comment on an already existing feature request thread: https://feedback.azure.com/forums/270578-data-factory/suggestions/36163726-append-replace-upsert-the-outgoing-data-from-copy

Below are the supported copy behaviors when files are from file-based data store:

copyBehavior -(Defines the copy behavior when the source is files from a file-based data store)

Allowed values are:

- PreserveHierarchy (default): Preserves the file hierarchy in the target folder. The relative path of source file to source folder is identical to the relative path of target file to target folder.

- FlattenHierarchy: All files from the source folder are in the first level of the target folder. The target files have auto-generated names.

- MergeFiles: Merges all files from the source folder to one file. If the file name is specified, the merged file name is the specified name. Otherwise, it's an autogenerated file name.

Workaround:

Possible workaround to achieve your requirement is to utilize CopyBehavior feature of Copy activity.

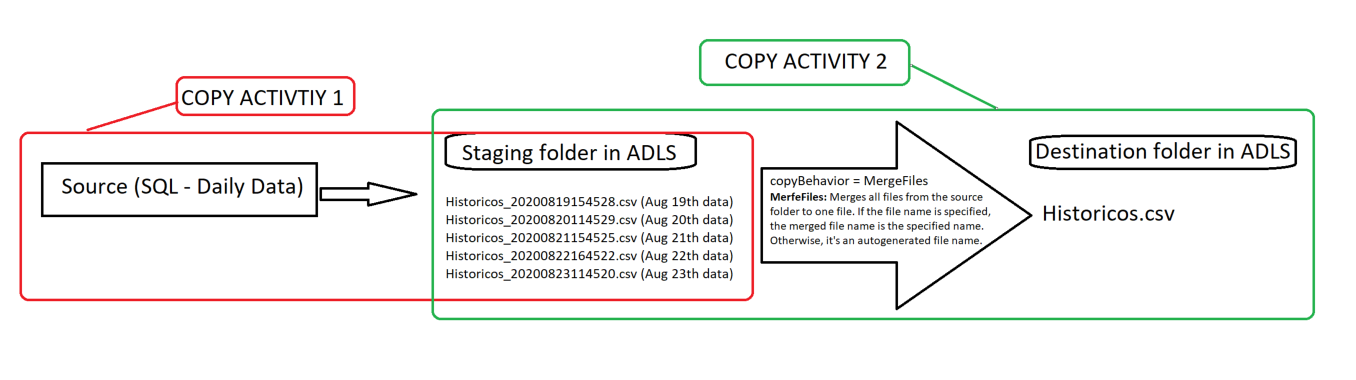

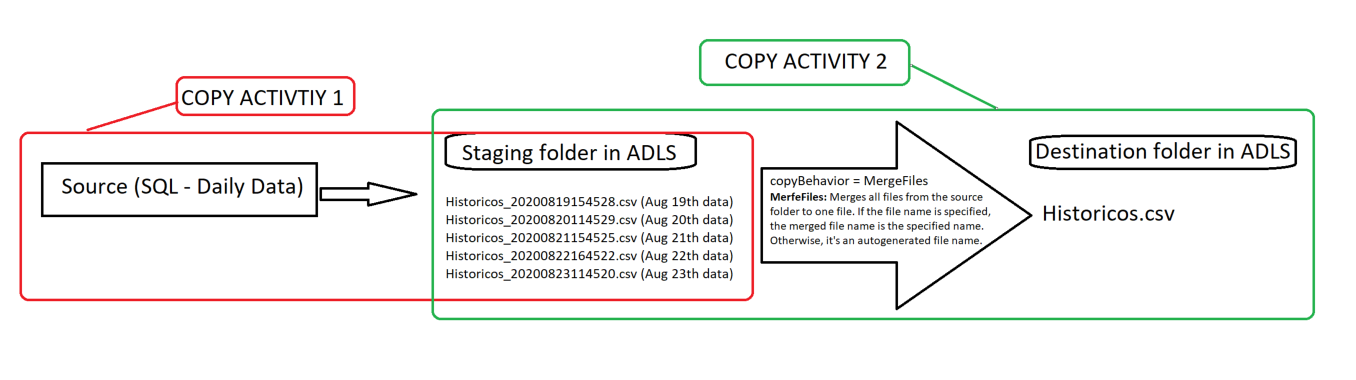

- First have a copy activtiy_1 to Copy daily data from your source (SQL) to a staging folder in your ADLS as filename_datetime.csv (then you will have daily files sitting in this folder as filename_20200819103433.csv, filename_20200820043432.csv, filename_20200821114423.csv,..)

- Then have a subsequent copy activity_2 in the same pipeline to copy those daily files and copy to destination folder using

MergeFiles CopyBehavior of copy activity which will merge all files into a single file. This file will be overwritten daily when the pipeline runs and you have all the historical data including latest incremental data.

If you would like to avoid repeating of copying the same/historical files everyday from staging to destination, another possible workaround is to use custom activity of ADF and write your own logic to copy the data and append to existing file in destination folder.

In case if you are using a different service other than ADF, please let me know, will be happy to assist further.

Hope this info helps.

----------

Thank you

Please do consider to click on "Accept Answer" and "Upvote" on the post that helps you, as it can be beneficial to other community members.